Part one of "OpenStack Nova VM creation process" stopped at self.conductor_compute_rpcapi.schedule_and_build_instances(). That call lands in the following function in /nova/conductor/rpcapi.py:

```python
def schedule_and_build_instances(self, context, build_requests,
                                 request_specs,
                                 image, admin_password, injected_files,
                                 requested_networks,
                                 block_device_mapping,
                                 tags=None):
    version = '1.17'
    kw = {'build_requests': build_requests,
          'request_specs': request_specs,
          'image': jsonutils.to_primitive(image),
          'admin_password': admin_password,
          'injected_files': injected_files,
          'requested_networks': requested_networks,
          'block_device_mapping': block_device_mapping,
          'tags': tags}

    if not self.client.can_send_version(version):
        version = '1.16'
        del kw['tags']

    cctxt = self.client.prepare(version=version)
    # Send an asynchronous RPC message
    cctxt.cast(context, 'schedule_and_build_instances', **kw)
```

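It publishes the message to the message bus with cast() and returns without waiting; nova-conductor then picks the message up and processes it.

Note: an RPC call() puts a message on the queue and waits for the reply, so it blocks. An RPC cast() puts a message on the queue and carries on without waiting for a reply, so it does not block.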
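To make the call/cast distinction concrete, here is a minimal in-process sketch. It is not oslo.messaging: `ToyBus`, its single consumer thread and `demo_handler()` are hypothetical stand-ins for the broker and the subscribed service. `cast()` only enqueues the request and returns `None`, while `call()` enqueues the request together with a private reply queue and blocks until the consumer answers or the timeout expires. That is roughly why the conductor can wait for nova-scheduler's host list, while the hop into the conductor is fire-and-forget.

```python
import queue
import threading


class ToyBus:
    """Hypothetical in-process stand-in for an RPC message bus."""

    def __init__(self, handler):
        self._requests = queue.Queue()
        self._handler = handler
        # One consumer thread plays the role of the subscribed service.
        threading.Thread(target=self._serve, daemon=True).start()

    def _serve(self):
        while True:
            method, kwargs, reply_q = self._requests.get()
            result = self._handler(method, **kwargs)
            if reply_q is not None:  # only call() expects an answer
                reply_q.put(result)

    def call(self, method, timeout=5.0, **kwargs):
        """Blocking: enqueue the request, then wait for the reply."""
        reply_q = queue.Queue(maxsize=1)
        self._requests.put((method, kwargs, reply_q))
        return reply_q.get(timeout=timeout)  # raises queue.Empty on timeout

    def cast(self, method, **kwargs):
        """Non-blocking: enqueue the request and return immediately."""
        self._requests.put((method, kwargs, None))


def demo_handler(method, **kwargs):
    # Hypothetical consumer: only select_destinations produces a result.
    if method == 'select_destinations':
        return ['compute-1', 'compute-2']
    return None


bus = ToyBus(demo_handler)
bus.cast('schedule_and_build_instances', build_requests=[])  # returns at once
hosts = bus.call('select_destinations', request_spec={})     # waits for reply
```

Because a single consumer drains the queue in order, a cast sent before a call has already been handled by the time the call returns.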

nova-conductor consumes the schedule_and_build_instances message and calls the function of the same name in /nova/conductor/manager.py:

```python
def schedule_and_build_instances(self, context, build_requests,
                                 request_specs, image,
                                 admin_password, injected_files,
                                 requested_networks, block_device_mapping,
                                 tags=None):
    # Add all the UUIDs for the instances
    instance_uuids = [spec.instance_uuid for spec in request_specs]
    try:
        # Returns the list of hosts suitable for building the instances.
        # Call chain:
        # /nova/conductor/manager.py:_schedule_instances()
        #   --> /nova/scheduler/client/__init__.py:select_destinations()
        #   --> /nova/scheduler/client/query.py:select_destinations()
        #   --> /nova/scheduler/rpcapi.py:select_destinations(), which sends a
        #       select_destinations request to the message queue with call()
        #       and blocks until nova-scheduler returns the suitable hosts
        host_lists = self._schedule_instances(context, request_specs[0],
                instance_uuids, return_alternates=True)
    except Exception as exc:
        LOG.exception('Failed to schedule instances')
        self._bury_in_cell0(context, request_specs[0], exc,
                            build_requests=build_requests)
        return

    host_mapping_cache = {}
    cell_mapping_cache = {}
    instances = []

    for (build_request, request_spec, host_list) in six.moves.zip(
            build_requests, request_specs, host_lists):
        instance = build_request.get_new_instance(context)
        # host_list is a list of one or more Selection objects, the first
        # of which has been selected and its resources claimed.
        host = host_list[0]
        # Convert host from the scheduler into a cell record
        if host.service_host not in host_mapping_cache:
            try:
                host_mapping = objects.HostMapping.get_by_host(
                    context, host.service_host)
                host_mapping_cache[host.service_host] = host_mapping
            except exception.HostMappingNotFound as exc:
                LOG.error('No host-to-cell mapping found for selected '
                          'host %(host)s. Setup is incomplete.',
                          {'host': host.service_host})
                self._bury_in_cell0(context, request_spec, exc,
                                    build_requests=[build_request],
                                    instances=[instance])
                # This is a placeholder in case the quota recheck fails.
                instances.append(None)
                continue
        else:
            host_mapping = host_mapping_cache[host.service_host]

        cell = host_mapping.cell_mapping

        # Before we create the instance, let's make one final check that
        # the build request is still around and wasn't deleted by the user
        # already.
        try:
            objects.BuildRequest.get_by_instance_uuid(
                context, instance.uuid)
        except exception.BuildRequestNotFound:
            # the build request is gone so we're done for this instance
            LOG.debug('While scheduling instance, the build request '
                      'was already deleted.', instance=instance)
            # This is a placeholder in case the quota recheck fails.
            instances.append(None)
            rc = self.scheduler_client.reportclient
            rc.delete_allocation_for_instance(context, instance.uuid)
            continue
        else:
            instance.availability_zone = (
                availability_zones.get_host_availability_zone(
                    context, host.service_host))
            with obj_target_cell(instance, cell):
                instance.create()
                instances.append(instance)
                cell_mapping_cache[instance.uuid] = cell

    # NOTE(melwitt): We recheck the quota after creating the
    # objects to prevent users from allocating more resources
    # than their allowed quota in the event of a race. This is
    # configurable because it can be expensive if strict quota
    # limits are not required in a deployment.
    if CONF.quota.recheck_quota:
        try:
            compute_utils.check_num_instances_quota(
                context, instance.flavor, 0, 0,
                orig_num_req=len(build_requests))
        except exception.TooManyInstances as exc:
            with excutils.save_and_reraise_exception():
                self._cleanup_build_artifacts(context, exc, instances,
                                              build_requests,
                                              request_specs,
                                              cell_mapping_cache)

    zipped = six.moves.zip(build_requests, request_specs, host_lists,
                          instances)
    for (build_request, request_spec, host_list, instance) in zipped:
        if instance is None:
            # Skip placeholders that were buried in cell0 or had their
            # build requests deleted by the user before instance create.
            continue
        cell = cell_mapping_cache[instance.uuid]
        # host_list is a list of one or more Selection objects, the first
        # of which has been selected and its resources claimed.
        host = host_list.pop(0)
        alts = [(alt.service_host, alt.nodename) for alt in host_list]
        LOG.debug("Selected host: %s; Selected node: %s; Alternates: %s",
                  host.service_host, host.nodename, alts, instance=instance)
        filter_props = request_spec.to_legacy_filter_properties_dict()
        scheduler_utils.populate_retry(filter_props, instance.uuid)
        scheduler_utils.populate_filter_properties(filter_props,
                                                   host)

        # TODO(melwitt): Maybe we should set_target_cell on the contexts
        # once we map to a cell, and remove these separate with statements.
        with obj_target_cell(instance, cell) as cctxt:
            # send a state update notification for the initial create to
            # show it going from non-existent to BUILDING
            # This can lazy-load attributes on instance.
            notifications.send_update_with_states(cctxt, instance, None,
                    vm_states.BUILDING, None, None, service="conductor")
            objects.InstanceAction.action_start(
                cctxt, instance.uuid, instance_actions.CREATE,
                want_result=False)
            instance_bdms = self._create_block_device_mapping(
                cell, instance.flavor, instance.uuid, block_device_mapping)
            instance_tags = self._create_tags(cctxt, instance.uuid, tags)

        # TODO(Kevin Zheng): clean this up once instance.create() handles
        # tags; we do this so the instance.create notification in
        # build_and_run_instance in nova-compute doesn't lazy-load tags
        instance.tags = instance_tags if instance_tags \
            else objects.TagList()

        # Update mapping for instance. Normally this check is guarded by
        # a try/except but if we're here we know that a newer nova-api
        # handled the build process and would have created the mapping
        inst_mapping = objects.InstanceMapping.get_by_instance_uuid(
            context, instance.uuid)
        inst_mapping.cell_mapping = cell
        inst_mapping.save()

        if not self._delete_build_request(
                context, build_request, instance, cell, instance_bdms,
                instance_tags):
            # The build request was deleted before/during scheduling so
            # the instance is gone and we don't have anything to build for
            # this one.
            continue

        # NOTE(danms): Compute RPC expects security group names or ids
        # not objects, so convert this to a list of names until we can
        # pass the objects.
        legacy_secgroups = [s.identifier
                            for s in request_spec.security_groups]
        with obj_target_cell(instance, cell) as cctxt:
            self.compute_rpcapi.build_and_run_instance(
                cctxt, instance=instance, image=image,
                request_spec=request_spec,
                filter_properties=filter_props,
                admin_password=admin_password,
                injected_files=injected_files,
                requested_networks=requested_networks,
                security_groups=legacy_secgroups,
                block_device_mapping=instance_bdms,
                host=host.service_host, node=host.nodename,
                limits=host.limits, host_list=host_list)
```
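The two loops above depend on one detail worth isolating. The first loop appends exactly one entry to `instances` per build request, using `None` as a placeholder when an instance is buried in cell0 or its build request has vanished, so the later `zip()` over `build_requests`, `request_specs`, `host_lists` and `instances` stays aligned. The sketch below reproduces only that bookkeeping with plain strings. `plan_cells()`, `dispatch()` and the input shapes are hypothetical; the real code works with Nova objects and cell contexts and also rechecks quota.

```python
from typing import Dict, List, Optional, Set, Tuple


def plan_cells(requests: List[str], hosts: List[str],
               host_to_cell: Dict[str, str],
               deleted: Set[str]) -> List[Optional[str]]:
    """First pass: exactly one cell (or a None placeholder) per request."""
    host_cache: Dict[str, str] = {}
    cells: List[Optional[str]] = []
    for request, host in zip(requests, hosts):
        if host not in host_cache:
            if host not in host_to_cell:
                cells.append(None)  # no host mapping: "bury in cell0"
                continue
            host_cache[host] = host_to_cell[host]
        if request in deleted:
            cells.append(None)  # build request already deleted by the user
            continue
        cells.append(host_cache[host])
    return cells


def dispatch(requests: List[str], hosts: List[str],
             cells: List[Optional[str]]) -> List[Tuple[str, str, str]]:
    """Second pass: zip stays aligned because every request has a slot."""
    casts = []
    for request, host, cell in zip(requests, hosts, cells):
        if cell is None:
            continue  # skip placeholders
        casts.append((request, host, cell))
    return casts
```

Dropping a failed request from the first list instead of appending `None` would shift every later entry and pair instances with the wrong hosts in the second loop.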

Next, the call enters /nova/compute/rpcapi.py, which sends a non-blocking build_and_run_instance() request to the message queue:

```python
def build_and_run_instance(self, ctxt, instance, host, image, request_spec,
        filter_properties, admin_password=None, injected_files=None,
        requested_networks=None, security_groups=None,
        block_device_mapping=None, node=None, limits=None,
        host_list=None):
    # NOTE(edleafe): compute nodes can only use the dict form of limits.
    if isinstance(limits, objects.SchedulerLimits):
        limits = limits.to_dict()
    kwargs = {"instance": instance,
              "image": image,
              "request_spec": request_spec,
              "filter_properties": filter_properties,
              "admin_password": admin_password,
              "injected_files": injected_files,
              "requested_networks": requested_networks,
              "security_groups": security_groups,
              "block_device_mapping": block_device_mapping,
              "node": node,
              "limits": limits,
              "host_list": host_list,
              }
    client = self.router.client(ctxt)
    version = '4.19'
    if not client.can_send_version(version):
        version = '4.0'
        kwargs.pop("host_list")
    cctxt = client.prepare(server=host, version=version)
    cctxt.cast(ctxt, 'build_and_run_instance', **kwargs)
```
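Both RPC API methods shown so far follow the same compatibility pattern. They try the newest message version, and if the receiving side cannot accept it, they fall back to an older version and drop the arguments that version does not know (`tags` for 1.16, `host_list` for 4.0). A minimal sketch of that logic follows. The `can_send_version()` function here is a simplified, hypothetical stand-in that accepts a version when it has the same major number and a minor number no higher than a cap. In a real deployment the cap comes from the RPC client's configuration (for example Nova's `[upgrade_levels]` options), not from a function argument. The real `prepare(server=host)` also targets the selected compute host, which this sketch does not model.

```python
from typing import Any, Dict, Tuple


def _parse(version: str) -> Tuple[int, int]:
    major, minor = version.split('.')
    return int(major), int(minor)


def can_send_version(wanted: str, cap: str) -> bool:
    """Hypothetical simplification: same major, minor not above the cap."""
    w_major, w_minor = _parse(wanted)
    c_major, c_minor = _parse(cap)
    return w_major == c_major and w_minor <= c_minor


def prepare_build_kwargs(kwargs: Dict[str, Any],
                         cap: str) -> Tuple[str, Dict[str, Any]]:
    version = '4.19'
    kwargs = dict(kwargs)  # copy so the caller's dict is left untouched
    if not can_send_version(version, cap):
        version = '4.0'
        kwargs.pop('host_list')  # 4.0 receivers do not know this argument
    return version, kwargs
```

During a rolling upgrade this lets new conductors keep talking to old compute services, at the cost of the old side missing the newer argument (here, the alternate hosts).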

nova-compute consumes the build_and_run_instance() message and runs that function in /nova/compute/manager.py:

```python
def build_and_run_instance(self, context, instance, image, request_spec,
                 filter_properties, admin_password=None,
                 injected_files=None, requested_networks=None,
                 security_groups=None, block_device_mapping=None,
                 node=None, limits=None, host_list=None):

    @utils.synchronized(instance.uuid)
    def _locked_do_build_and_run_instance(*args, **kwargs):
        # NOTE(danms): We grab the semaphore with the instance uuid
        # locked because we could wait in line to build this instance
        # for a while and we want to make sure that nothing else tries
        # to do anything with this instance while we wait.
        with self._build_semaphore:
            try:
                result = self._do_build_and_run_instance(*args, **kwargs)
            except Exception:
                # NOTE(mriedem): This should really only happen if
                # _decode_files in _do_build_and_run_instance fails, and
                # that's before a guest is spawned so it's OK to remove
                # allocations for the instance for this node from Placement
                # below as there is no guest consuming resources anyway.
                # The _decode_files case could be handled more specifically
                # but that's left for another day.
                result = build_results.FAILED
                raise
            finally:
                if result == build_results.FAILED:
                    # Remove the allocation records from Placement for the
                    # instance if the build failed. The instance.host is
                    # likely set to None in _do_build_and_run_instance
                    # which means if the user deletes the instance, it
                    # will be deleted in the API, not the compute service.
                    # Setting the instance.host to None in
                    # _do_build_and_run_instance means that the
                    # ResourceTracker will no longer consider this instance
                    # to be claiming resources against it, so we want to
                    # reflect that same thing in Placement. No need to
                    # call this for a reschedule, as the allocations will
                    # have already been removed in
                    # self._do_build_and_run_instance().
                    self._delete_allocation_for_instance(context,
                                                         instance.uuid)

                if result in (build_results.FAILED,
                              build_results.RESCHEDULED):
                    self._build_failed()
                else:
                    self._failed_builds = 0

    # NOTE(danms): We spawn here to return the RPC worker thread back to
    # the pool. Since what follows could take a really long time, we don't
    # want to tie up RPC workers.
    utils.spawn_n(_locked_do_build_and_run_instance,
                  context, instance, image, request_spec,
                  filter_properties, admin_password, injected_files,
                  requested_networks, security_groups,
                  block_device_mapping, node, limits, host_list)
```

That function calls _do_build_and_run_instance(), also in /nova/compute/manager.py:

```python
def _do_build_and_run_instance(self, context, instance, image,
        request_spec, filter_properties, admin_password, injected_files,
        requested_networks, security_groups, block_device_mapping,
        node=None, limits=None, host_list=None):

    try:
        LOG.debug('Starting instance...', instance=instance)
        instance.vm_state = vm_states.BUILDING
        instance.task_state = None
        instance.save(expected_task_state=
                (task_states.SCHEDULING, None))
    except exception.InstanceNotFound:
        msg = 'Instance disappeared before build.'
        LOG.debug(msg, instance=instance)
        return build_results.FAILED
    except exception.UnexpectedTaskStateError as e:
        LOG.debug(e.format_message(), instance=instance)
        return build_results.FAILED

    # b64 decode the files to inject:
    decoded_files = self._decode_files(injected_files)

    if limits is None:
        limits = {}

    if node is None:
        node = self._get_nodename(instance, refresh=True)

    try:
        with timeutils.StopWatch() as timer:
            self._build_and_run_instance(context, instance, image,
                    decoded_files, admin_password, requested_networks,
                    security_groups, block_device_mapping, node, limits,
                    filter_properties, request_spec)
        LOG.info('Took %0.2f seconds to build instance.',
                 timer.elapsed(), instance=instance)
        return build_results.ACTIVE
    except exception.RescheduledException as e:
        retry = filter_properties.get('retry')
        if not retry:
            # no retry information, do not reschedule.
            LOG.debug("Retry info not present, will not reschedule",
                instance=instance)
            self._cleanup_allocated_networks(context, instance,
                requested_networks)
            self._cleanup_volumes(context, instance.uuid,
                block_device_mapping, raise_exc=False)
            compute_utils.add_instance_fault_from_exc(context,
                    instance, e, sys.exc_info(),
                    fault_message=e.kwargs['reason'])
            self._nil_out_instance_obj_host_and_node(instance)
            self._set_instance_obj_error_state(context, instance,
                                               clean_task_state=True)
            return build_results.FAILED
        LOG.debug(e.format_message(), instance=instance)
        # This will be used for logging the exception
        retry['exc'] = traceback.format_exception(*sys.exc_info())
        # This will be used for setting the instance fault message
        retry['exc_reason'] = e.kwargs['reason']
        # NOTE(comstud): Deallocate networks if the driver wants
        # us to do so.
        # NOTE(vladikr): SR-IOV ports should be deallocated to
        # allow new sriov pci devices to be allocated on a new host.
        # Otherwise, if devices with pci addresses are already allocated
        # on the destination host, the instance will fail to spawn.
        # info_cache.network_info should be present at this stage.
        if (self.driver.deallocate_networks_on_reschedule(instance) or
                self.deallocate_sriov_ports_on_reschedule(instance)):
            self._cleanup_allocated_networks(context, instance,
                    requested_networks)
        else:
            # NOTE(alex_xu): Network already allocated and we don't
            # want to deallocate them before rescheduling. But we need
            # to cleanup those network resources setup on this host before
            # rescheduling.
            self.network_api.cleanup_instance_network_on_host(
                context, instance, self.host)

        self._nil_out_instance_obj_host_and_node(instance)
        instance.task_state = task_states.SCHEDULING
        instance.save()
        # The instance will have already claimed resources from this host
        # before this build was attempted. Now that it has failed, we need
        # to unclaim those resources before casting to the conductor, so
        # that if there are alternate hosts available for a retry, it can
        # claim resources on that new host for the instance.
        self._delete_allocation_for_instance(context, instance.uuid)

        self.compute_task_api.build_instances(context, [instance],
                image, filter_properties, admin_password,
                injected_files, requested_networks, security_groups,
                block_device_mapping, request_spec=request_spec,
                host_lists=[host_list])
        return build_results.RESCHEDULED
    except (exception.InstanceNotFound,
            exception.UnexpectedDeletingTaskStateError):
        msg = 'Instance disappeared during build.'
        LOG.debug(msg, instance=instance)
        self._cleanup_allocated_networks(context, instance,
                requested_networks)
        return build_results.FAILED
    except exception.BuildAbortException as e:
        LOG.exception(e.format_message(), instance=instance)
        self._cleanup_allocated_networks(context, instance,
                requested_networks)
        self._cleanup_volumes(context, instance.uuid,
                block_device_mapping, raise_exc=False)
        compute_utils.add_instance_fault_from_exc(context, instance,
                e, sys.exc_info())
        self._nil_out_instance_obj_host_and_node(instance)
        self._set_instance_obj_error_state(context, instance,
                                           clean_task_state=True)
        return build_results.FAILED
    except Exception as e:
        # Should not reach here.
        LOG.exception('Unexpected build failure, not rescheduling build.',
                      instance=instance)
        self._cleanup_allocated_networks(context, instance,
                requested_networks)
        self._cleanup_volumes(context, instance.uuid,
                block_device_mapping, raise_exc=False)
        compute_utils.add_instance_fault_from_exc(context, instance,
                e, sys.exc_info())
        self._nil_out_instance_obj_host_and_node(instance)
        self._set_instance_obj_error_state(context, instance,
                                           clean_task_state=True)
        return build_results.FAILED
```
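The return values of `_do_build_and_run_instance()` boil down to three outcomes. The sketch below keeps only the decision logic. A successful build yields ACTIVE. A `RescheduledException` yields RESCHEDULED only when `filter_properties` carries retry information, and FAILED otherwise. Every other failure yields FAILED. The exception classes and the `BuildResult` enum are hypothetical stand-ins for Nova's `exception` module and `build_results` constants, and all cleanup (networks, volumes, allocations, instance fault, ERROR state) is omitted.

```python
from enum import Enum
from typing import Any, Callable, Dict


class BuildResult(Enum):
    ACTIVE = 'active'
    FAILED = 'failed'
    RESCHEDULED = 'rescheduled'


class RescheduledError(Exception):
    """Hypothetical stand-in for exception.RescheduledException."""


class BuildAbortError(Exception):
    """Hypothetical stand-in for exception.BuildAbortException."""


def do_build(build: Callable[[], None],
             filter_properties: Dict[str, Any]) -> BuildResult:
    try:
        build()
        return BuildResult.ACTIVE
    except RescheduledError as e:
        retry = filter_properties.get('retry')
        if not retry:
            # No retry info: give up on this instance.
            return BuildResult.FAILED
        # Keep the reason so the next attempt can report it.
        retry['exc_reason'] = str(e)
        # Real code cleans up on this host, then asks the conductor to retry.
        return BuildResult.RESCHEDULED
    except BuildAbortError:
        # Known non-retryable failure.
        return BuildResult.FAILED
    except Exception:
        # Unexpected failure: also not rescheduled.
        return BuildResult.FAILED
```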

It then calls _build_and_run_instance() in the same file, which starts spawning the VM on the hypervisor:

```python
def _build_and_run_instance(self, context, instance, image, injected_files,
        admin_password, requested_networks, security_groups,
        block_device_mapping, node, limits, filter_properties,
        request_spec=None):

    image_name = image.get('name')
    self._notify_about_instance_usage(context, instance, 'create.start',
            extra_usage_info={'image_name': image_name})
    compute_utils.notify_about_instance_create(
        context, instance, self.host,
        phase=fields.NotificationPhase.START,
        bdms=block_device_mapping)

    # NOTE(mikal): cache the keystone roles associated with the instance
    # at boot time for later reference
    instance.system_metadata.update(
        {'boot_roles': ','.join(context.roles)})

    self._check_device_tagging(requested_networks, block_device_mapping)

    try:
        scheduler_hints = self._get_scheduler_hints(filter_properties,
                                                    request_spec)
        rt = self._get_resource_tracker()
        with rt.instance_claim(context, instance, node, limits):
            # NOTE(russellb) It's important that this validation be done
            # *after* the resource tracker instance claim, as that is where
            # the host is set on the instance.
            self._validate_instance_group_policy(context, instance,
                    scheduler_hints)
            image_meta = objects.ImageMeta.from_dict(image)
            with self._build_resources(context, instance,
                    requested_networks, security_groups, image_meta,
                    block_device_mapping) as resources:
                instance.vm_state = vm_states.BUILDING
                instance.task_state = task_states.SPAWNING
                # NOTE(JoshNang) This also saves the changes to the
                # instance from _allocate_network_async, as they aren't
                # saved in that function to prevent races.
                instance.save(expected_task_state=
                        task_states.BLOCK_DEVICE_MAPPING)
                block_device_info = resources['block_device_info']
                network_info = resources['network_info']
                allocs = resources['allocations']
                LOG.debug('Start spawning the instance on the hypervisor.',
                          instance=instance)
                with timeutils.StopWatch() as timer:
                    # Start spawning the VM on the hypervisor
                    self.driver.spawn(context, instance, image_meta,
                                      injected_files, admin_password,
                                      allocs, network_info=network_info,
                                      block_device_info=block_device_info)
                LOG.info('Took %0.2f seconds to spawn the instance on '
                         'the hypervisor.', timer.elapsed(),
                         instance=instance)
    except (exception.InstanceNotFound,
            exception.UnexpectedDeletingTaskStateError) as e:
        with excutils.save_and_reraise_exception():
            self._notify_about_instance_usage(context, instance,
                'create.error', fault=e)
            compute_utils.notify_about_instance_create(
                context, instance, self.host,
                phase=fields.NotificationPhase.ERROR, exception=e,
                bdms=block_device_mapping)
    except exception.ComputeResourcesUnavailable as e:
        LOG.debug(e.format_message(), instance=instance)
        self._notify_about_instance_usage(context, instance,
                'create.error', fault=e)
        compute_utils.notify_about_instance_create(
                context, instance, self.host,
                phase=fields.NotificationPhase.ERROR, exception=e,
                bdms=block_device_mapping)
        raise exception.RescheduledException(
                instance_uuid=instance.uuid, reason=e.format_message())
    except exception.BuildAbortException as e:
        with excutils.save_and_reraise_exception():
            LOG.debug(e.format_message(), instance=instance)
            self._notify_about_instance_usage(context, instance,
                'create.error', fault=e)
            compute_utils.notify_about_instance_create(
                context, instance, self.host,
                phase=fields.NotificationPhase.ERROR, exception=e,
                bdms=block_device_mapping)
    except (exception.FixedIpLimitExceeded,
            exception.NoMoreNetworks, exception.NoMoreFixedIps) as e:
        LOG.warning('No more network or fixed IP to be allocated',
                    instance=instance)
        self._notify_about_instance_usage(context, instance,
                'create.error', fault=e)
        compute_utils.notify_about_instance_create(
                context, instance, self.host,
                phase=fields.NotificationPhase.ERROR, exception=e,
                bdms=block_device_mapping)
        msg = _('Failed to allocate the network(s) with error %s, '
                'not rescheduling.') % e.format_message()
        raise exception.BuildAbortException(instance_uuid=instance.uuid,
                reason=msg)
    except (exception.VirtualInterfaceCreateException,
            exception.VirtualInterfaceMacAddressException,
            exception.FixedIpInvalidOnHost,
            exception.UnableToAutoAllocateNetwork) as e:
        LOG.exception('Failed to allocate network(s)',
                      instance=instance)
        self._notify_about_instance_usage(context, instance,
                'create.error', fault=e)
        compute_utils.notify_about_instance_create(
                context, instance, self.host,
                phase=fields.NotificationPhase.ERROR, exception=e,
                bdms=block_device_mapping)
        msg = _('Failed to allocate the network(s), not rescheduling.')
        raise exception.BuildAbortException(instance_uuid=instance.uuid,
                reason=msg)
    except (exception.FlavorDiskTooSmall,
            exception.FlavorMemoryTooSmall,
            exception.ImageNotActive,
            exception.ImageUnacceptable,
            exception.InvalidDiskInfo,
            exception.InvalidDiskFormat,
            cursive_exception.SignatureVerificationError,
            exception.VolumeEncryptionNotSupported,
            exception.InvalidInput) as e:
        self._notify_about_instance_usage(context, instance,
                'create.error', fault=e)
        compute_utils.notify_about_instance_create(
                context, instance, self.host,
                phase=fields.NotificationPhase.ERROR, exception=e,
                bdms=block_device_mapping)
        raise exception.BuildAbortException(instance_uuid=instance.uuid,
                reason=e.format_message())
    except Exception as e:
        self._notify_about_instance_usage(context, instance,
                'create.error', fault=e)
        compute_utils.notify_about_instance_create(
                context, instance, self.host,
                phase=fields.NotificationPhase.ERROR, exception=e,
                bdms=block_device_mapping)
        raise exception.RescheduledException(
                instance_uuid=instance.uuid, reason=six.text_type(e))

    # NOTE(alaski): This is only useful during reschedules, remove it now.
    instance.system_metadata.pop('network_allocated', None)

    # If CONF.default_access_ip_network_name is set, grab the
    # corresponding network and set the access ip values accordingly.
    network_name = CONF.default_access_ip_network_name
    if (network_name and not instance.access_ip_v4 and
            not instance.access_ip_v6):
        # Note that when there are multiple ips to choose from, an
        # arbitrary one will be chosen.
        for vif in network_info:
            if vif['network']['label'] == network_name:
                for ip in vif.fixed_ips():
                    if not instance.access_ip_v4 and ip['version'] == 4:
                        instance.access_ip_v4 = ip['address']
                    if not instance.access_ip_v6 and ip['version'] == 6:
                        instance.access_ip_v6 = ip['address']
                break

    self._update_instance_after_spawn(context, instance)

    try:
        instance.save(expected_task_state=task_states.SPAWNING)
    except (exception.InstanceNotFound,
            exception.UnexpectedDeletingTaskStateError) as e:
        with excutils.save_and_reraise_exception():
            self._notify_about_instance_usage(context, instance,
                'create.error', fault=e)
            compute_utils.notify_about_instance_create(
                context, instance, self.host,
                phase=fields.NotificationPhase.ERROR, exception=e,
                bdms=block_device_mapping)

    self._update_scheduler_instance_info(context, instance)
    self._notify_about_instance_usage(context, instance, 'create.end',
            extra_usage_info={'message': _('Success')},
            network_info=network_info)
    compute_utils.notify_about_instance_create(context, instance,
            self.host, phase=fields.NotificationPhase.END,
            bdms=block_device_mapping)
```
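The `default_access_ip_network_name` block near the end of `_build_and_run_instance()` is easy to study in isolation. The sketch below re-implements it over plain dictionaries. The simplified data shape (`vif['fixed_ips']` as a list of dicts instead of the `vif.fixed_ips()` method on Nova's network model) and the name `pick_access_ips()` are assumptions for illustration. As in the original, nothing is picked if either access IP is already set, and only the first VIF on the named network is examined.

```python
from typing import Any, Dict, List, Optional, Tuple


def pick_access_ips(network_info: List[Dict[str, Any]], network_name: str,
                    v4: Optional[str] = None,
                    v6: Optional[str] = None
                    ) -> Tuple[Optional[str], Optional[str]]:
    # Same guard as the original: only act when a network name is
    # configured and neither access IP has been set yet.
    if not network_name or v4 or v6:
        return v4, v6
    for vif in network_info:
        if vif['network']['label'] == network_name:
            for ip in vif['fixed_ips']:
                if not v4 and ip['version'] == 4:
                    v4 = ip['address']
                if not v6 and ip['version'] == 6:
                    v6 = ip['address']
            break  # only the first matching VIF is considered
    return v4, v6
```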

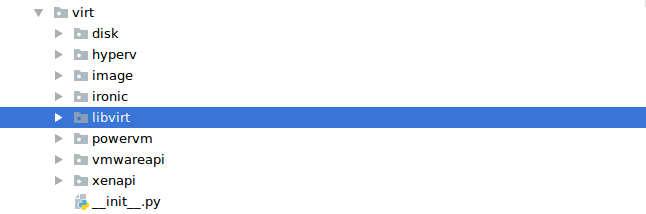

Spawning the VM on the hypervisor goes through the hypervisor's Python driver, and several such drivers live under /nova/virt/.
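Whatever driver is configured, the compute manager only calls `self.driver.spawn()`. The class behind `self.driver` is chosen at service startup from the `compute_driver` option (for example `libvirt.LibvirtDriver`). The sketch below shows that dispatch idea with a plain registry. The `Fake*` classes, the `DRIVERS` registry and `load_driver()` are hypothetical; Nova instead imports the driver class by name from the `nova.virt` package.

```python
from typing import Dict, Type


class ComputeDriver:
    """Hypothetical base class: the manager relies only on this interface."""

    def spawn(self, instance: str) -> str:
        raise NotImplementedError


class FakeLibvirtDriver(ComputeDriver):
    def spawn(self, instance: str) -> str:
        return f"libvirt: defined and started {instance}"


class FakeVMwareDriver(ComputeDriver):
    def spawn(self, instance: str) -> str:
        return f"vmwareapi: created {instance}"


# Keys mimic compute_driver values; the classes are fakes.
DRIVERS: Dict[str, Type[ComputeDriver]] = {
    'libvirt.LibvirtDriver': FakeLibvirtDriver,
    'vmwareapi.VMwareVCDriver': FakeVMwareDriver,
}


def load_driver(compute_driver: str) -> ComputeDriver:
    try:
        return DRIVERS[compute_driver]()
    except KeyError:
        raise ValueError(f"unknown compute_driver {compute_driver!r}") from None


# Manager side: the same call regardless of the hypervisor behind it.
driver = load_driver('libvirt.LibvirtDriver')
print(driver.spawn('vm-1'))
```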

The drivers under /nova/virt/ include libvirt, powervm, vmwareapi and xenapi. We use libvirt, so the call reaches spawn() in libvirt/driver.py:

```python
def spawn(self, context, instance, image_meta, injected_files,
          admin_password, allocations, network_info=None,
          block_device_info=None):
    # Gather the disk configuration
    disk_info = blockinfo.get_disk_info(CONF.libvirt.virt_type,
                                        instance,
                                        image_meta,
                                        block_device_info)
    injection_info = InjectionInfo(network_info=network_info,
                                   files=injected_files,
                                   admin_pass=admin_password)
    gen_confdrive = functools.partial(self._create_configdrive,
                                      context, instance,
                                      injection_info)
    # Prepare the image(s)
    self._create_image(context, instance, disk_info['mapping'],
                       injection_info=injection_info,
                       block_device_info=block_device_info)

    # Required by Quobyte CI
    self._ensure_console_log_for_instance(instance)

    # Does the guest need to be assigned some vGPU mediated devices ?
    mdevs = self._allocate_mdevs(allocations)

    # Turn the current parameters into the libvirt domain XML for the VM
    xml = self._get_guest_xml(context, instance, network_info,
                              disk_info, image_meta,
                              block_device_info=block_device_info,
                              mdevs=mdevs)
    # Set up networking and create the instance
    self._create_domain_and_network(
        context, xml, instance, network_info,
        block_device_info=block_device_info,
        post_xml_callback=gen_confdrive,
        destroy_disks_on_failure=True)
    LOG.debug("Instance is running", instance=instance)

    def _wait_for_boot():
        """Called at an interval until the VM is running."""
        state = self.get_info(instance).state

        if state == power_state.RUNNING:
            LOG.info("Instance spawned successfully.", instance=instance)
            raise loopingcall.LoopingCallDone()

    timer = loopingcall.FixedIntervalLoopingCall(_wait_for_boot)
    timer.start(interval=0.5).wait()
```
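`_wait_for_boot()` together with `FixedIntervalLoopingCall` is a fixed-interval poll: run the function every 0.5 s until it raises `LoopingCallDone`. A minimal synchronous sketch of that pattern follows. The `run_fixed_interval()` helper and its `timeout` argument are hypothetical additions for illustration (the loop used in `spawn()` has no such timeout), and `LoopingCallDone` here is a local class that only mirrors the oslo.service name.

```python
import time
from typing import Callable


class LoopingCallDone(Exception):
    """Local stand-in mirroring oslo.service's LoopingCallDone."""


def run_fixed_interval(func: Callable[[], None], interval: float,
                       timeout: float) -> bool:
    """Call func every `interval` seconds until it raises LoopingCallDone.

    Returns True when func signalled completion, False once `timeout`
    seconds have passed without that happening.
    """
    deadline = time.monotonic() + timeout
    while True:
        started = time.monotonic()
        try:
            func()
        except LoopingCallDone:
            return True
        if time.monotonic() >= deadline:
            return False
        # Sleep for the rest of the interval, as a fixed-interval loop does.
        time.sleep(max(0.0, interval - (time.monotonic() - started)))
```

A polled function in the style of `_wait_for_boot()` would check the power state on each call and raise `LoopingCallDone()` once it reads RUNNING.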

The _create_domain_and_network() that spawn() calls, in the same file:

```python
def _create_domain_and_network(self, context, xml, instance, network_info,
                               block_device_info=None, power_on=True,
                               vifs_already_plugged=False,
                               post_xml_callback=None,
                               destroy_disks_on_failure=False):

    """Do required network setup and create domain."""
    timeout = CONF.vif_plugging_timeout
    if (self._conn_supports_start_paused and
        utils.is_neutron() and not
        vifs_already_plugged and power_on and timeout):
        events = self._get_neutron_events(network_info)
    else:
        events = []

    pause = bool(events)
    guest = None
    try:
        with self.virtapi.wait_for_instance_event(
                instance, events, deadline=timeout,
                error_callback=self._neutron_failed_callback):
            self.plug_vifs(instance, network_info)
            self.firewall_driver.setup_basic_filtering(instance,
                                                       network_info)
            self.firewall_driver.prepare_instance_filter(instance,
                                                         network_info)
            with self._lxc_disk_handler(context, instance,
                                        instance.image_meta,
                                        block_device_info):
                guest = self._create_domain(
                    xml, pause=pause, power_on=power_on,
                    post_xml_callback=post_xml_callback)

            self.firewall_driver.apply_instance_filter(instance,
                                                       network_info)
    except exception.VirtualInterfaceCreateException:
        # Neutron reported failure and we didn't swallow it, so
        # bail here
        with excutils.save_and_reraise_exception():
            self._cleanup_failed_start(context, instance, network_info,
                                       block_device_info, guest,
                                       destroy_disks_on_failure)
    except eventlet.timeout.Timeout:
        # We never heard from Neutron
        LOG.warning('Timeout waiting for %(events)s for '
                    'instance with vm_state %(vm_state)s and '
                    'task_state %(task_state)s.',
                    {'events': events,
                     'vm_state': instance.vm_state,
                     'task_state': instance.task_state},
                    instance=instance)
        if CONF.vif_plugging_is_fatal:
            self._cleanup_failed_start(context, instance, network_info,
                                       block_device_info, guest,
                                       destroy_disks_on_failure)
            raise exception.VirtualInterfaceCreateException()
    except Exception:
        # Any other error, be sure to clean up
        LOG.error('Failed to start libvirt guest', instance=instance)
        with excutils.save_and_reraise_exception():
            self._cleanup_failed_start(context, instance, network_info,
                                       block_device_info, guest,
                                       destroy_disks_on_failure)

    # Resume only if domain has been paused
    if pause:
        guest.resume()
    return guest
```
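The point of `events` and `pause` in `_create_domain_and_network()` is ordering. When Neutron is expected to send `network-vif-plugged` events, the guest is created paused, Nova waits for every event until `vif_plugging_timeout`, and only then resumes the guest. On timeout it either fails (`vif_plugging_is_fatal`) or carries on. The sketch below models that flow with `threading.Event`. The function name, the `plug_vifs(notify)` callback shape, the step labels and `VifPlugTimeout` are hypothetical; the real code uses `virtapi.wait_for_instance_event()`, sets up firewall filtering and calls `_cleanup_failed_start()`.

```python
import threading
import time
from typing import Callable, List


class VifPlugTimeout(Exception):
    """Hypothetical stand-in for VirtualInterfaceCreateException."""


def create_domain_and_network(vif_ids: List[str],
                              plug_vifs: Callable[[Callable[[str], None]], None],
                              timeout: float, fatal: bool = True) -> List[str]:
    # One event per port; only start paused if there is something to wait for.
    events = {vif_id: threading.Event() for vif_id in vif_ids}
    pause = bool(events)
    steps = []

    # plug_vifs receives a notify(vif_id) callback, standing in for Neutron
    # reporting network-vif-plugged for that port.
    plug_vifs(lambda vif_id: events[vif_id].set())
    steps.append('plugged')
    steps.append('created paused' if pause else 'created running')

    deadline = time.monotonic() + timeout
    for vif_id, event in events.items():
        if not event.wait(max(0.0, deadline - time.monotonic())):
            if fatal:
                # Real code cleans up the half-created guest first.
                raise VifPlugTimeout(vif_id)
            steps.append('timed out, continuing')
            break

    if pause:
        steps.append('resumed')
    return steps
```

Starting the guest paused means it never boots with a network that Neutron has not finished wiring up.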

And _create_domain(), also in this file:

```python
def _create_domain(self, xml=None, domain=None,
                   power_on=True, pause=False, post_xml_callback=None):
    """Create a domain.

    Either domain or xml must be passed in. If both are passed, then
    the domain definition is overwritten from the xml.

    :returns guest.Guest: Guest just created
    """
    if xml:
        # Define the VM through libvirt
        guest = libvirt_guest.Guest.create(xml, self._host)
        if post_xml_callback is not None:
            post_xml_callback()
    else:
        guest = libvirt_guest.Guest(domain)

    if power_on or pause:
        # Start the VM through libvirt
        guest.launch(pause=pause)

    if not utils.is_neutron():
        guest.enable_hairpin()

    return guest
```
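In libvirt terms, `Guest.create()` defines a persistent domain from the XML, and `guest.launch(pause=...)` starts it, optionally with its vCPUs paused. The sketch below expresses that with the libvirt-python method names `defineXML()` on the connection and `createWithFlags()` on the domain. It accepts any object offering those methods, so it can be tried against a fake connection. It simplifies Nova's `Guest` and `Host` wrappers, and it defines the constant locally with the value of `libvirt.VIR_DOMAIN_START_PAUSED` so the block runs without libvirt installed.

```python
# Same value as libvirt.VIR_DOMAIN_START_PAUSED; defined locally so this
# sketch does not require the libvirt bindings.
VIR_DOMAIN_START_PAUSED = 1


def create_domain(conn, xml, power_on=True, pause=False):
    """Define a persistent domain from XML, then optionally start it."""
    domain = conn.defineXML(xml)  # persistent definition, not yet running
    if power_on or pause:
        flags = VIR_DOMAIN_START_PAUSED if pause else 0
        domain.createWithFlags(flags)  # boot, possibly with vCPUs paused
    return domain
```

With real libvirt one would pass `libvirt.open('qemu:///system')` as `conn` and later call `domain.resume()` on a domain that was started paused.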

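Summary: at this point we have traced the basic code path OpenStack follows to create a VM. The OpenStack CLI sends the RESTful create-server request --->>> nova-api receives it and routes it to the controller method create() in /nova/api/openstack/compute/servers.py, which starts the creation flow and eventually reaches self.conductor_compute_rpcapi.schedule_and_build_instances() in /nova/conductor/rpcapi.py; that function sends a "run schedule_and_build_instances" request to the RabbitMQ message queue --->>> nova-conductor receives the RPC request and runs schedule_and_build_instances() in /nova/conductor/manager.py. Along the way it sends an RPC request to nova-scheduler to obtain host_lists (the hosts suitable for the new VM), and after further processing it sends a "run build_and_run_instance" request to the RabbitMQ message queue --->>> nova-compute receives the RPC request and runs build_and_run_instance() in /nova/compute/manager.py, which eventually calls self.driver.spawn() to hand the work to the hypervisor driver, which finally defines and starts the VM.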