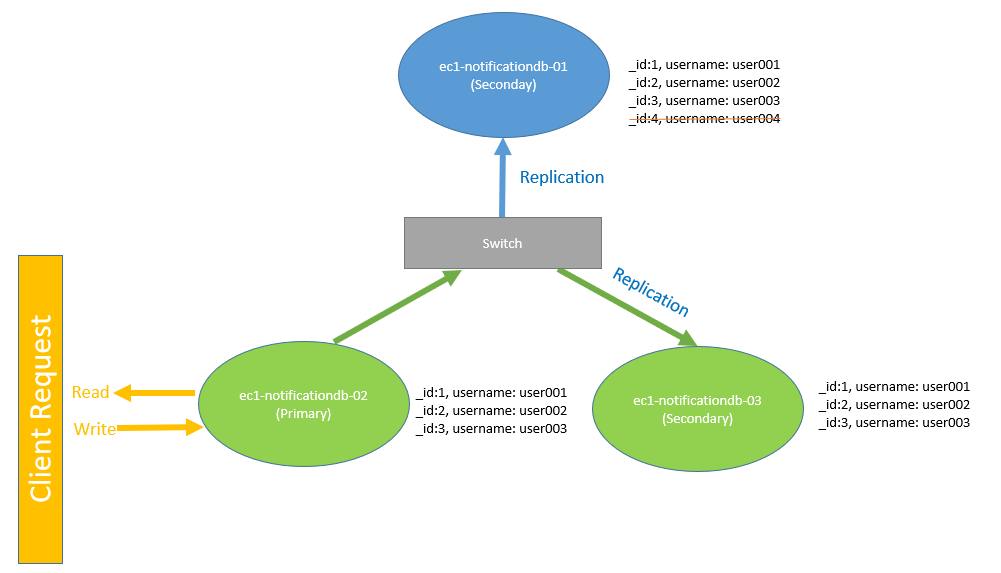

副本集架构(Architecture)

一个经典的多节点组成的副本集架构如下.

选举(Elections)

1. 当副本集的从节点ec1-notificationdb-02发现自己无法与主节点ec1-notificationdb-01取得联系并且该从节点有成为主节点的条件时,从节点ec1-notificationdb-02会向所有能到达的其他从节点ec1-notificationdb-03/04/05发送选举通知,请求选举自己为主节点.

2. 当从节点ec1-notificationdb-0/04/053收到该请求后,会判断该从节点ec1-notificationdb-02可能并不是一个合适的候选者,例如数据副本落后于自己,并且ec1-notificationdb-03/04/05能够和主节点取得联系,只是从节点ec1-notificationdb-02自己无法到达而已,这种情况下,其他从节点会拒绝该申请节点ec1-notificationdb-02的选举请求.

3. 如果其他从节点ec1-notificationdb-03/04/05并没有反对的理由,那么这些从节点将会对ec1-notificationdb-02的选举请求进行投票,如果ec1-notificationdb-03/04/05中超过半数同意ec1-notificationdb-02的选举请求,那么ec1-notificationdb-02选举成功并且将自己转化为主节点.如果没有得到超过半数的同意,那么在一段时间以后,从节点ec1-notificationdb-02会重新发起选举请求.

4. 主节点ec1-notificationdb-01将会一直处于Promary角色,如果它无法和副本集中大部分从节点通信.该主节点会自动降级成为从节点.

在一个健康的网络环境中并且副本集大多数节点正常工作情况下,选举过程几乎很快就会完成.这是因为副本集节点每隔2S发送一次心跳包,也意味着如果主节点宕机,从节点在接下来的一个心跳包中就可以监测到主节点异常,立刻开始选举过程,理想情况下几秒钟的时间就可以选举出新的主节点.但是实际情况中,也有可能是因为网络抖动或者服务器过载导致心跳包回复过慢,综合考虑各种情况下,选举过程可能会持续更长时间.

回滚(Rollbacks)

在选举一节中我们提到选举,如果一个主节点在写入一条数据后并没有来得及将该数据同步到从节点,此刻主节点突然宕机,这时从节点发起选举请求,选举出新的主节点,那么新的主节点是没有老的主节点那一条数据的.

如上图所示,在数据"_id:4,username:user004"还没有来得及写入从节点时,主节点因网络故障无法与所有从节点通信,此时从节点将会发起选举请求,选举出主节点,而原有的主节点网络恢复后会被降级成从节点,最终新的主节点的架构图如下.

这里需要特别注意,原来的主节点以从节点的身份加入副本集后,用自己最新的数据"_id:4, username:user004"和新的主节点对比,发现新的主节点并没有该条数据,于是再用自己较老的数据"_id:3, username:user003"和新的主节点数据对比,发现新的主节点数据和自己匹配,那么该从节点将会回滚数据至"_id:3, username:user003",该版本以后的数据会被存放至数据目录的rollback里.然后开始同步新的主节点的数据.

回滚现象模拟

首先我们在主节点查看data目录,确保MongoDB并没有rollback数据目录

$ ls -lh total 192K drwx------ 2 mongodb dba 294 Jan 28 09:31 admin drwx------ 2 mongodb dba 211 Jan 28 09:29 config drwx------ 2 mongodb dba 71 Jan 29 14:14 diagnostic.data drwx------ 2 mongodb dba 110 Jan 28 09:19 journal drwx------ 2 mongodb dba 4.0K Jan 28 09:24 local -rw------- 1 mongodb dba 36K Jan 28 09:32 _mdb_catalog.wt -rw------- 1 mongodb dba 6 Jan 28 09:19 mongod.lock -rw------- 1 mongodb dba 36K Jan 29 14:14 sizeStorer.wt -rw------- 1 mongodb dba 114 Jan 28 09:19 storage.bson -rw------- 1 mongodb dba 46 Jan 28 09:19 WiredTiger -rw------- 1 mongodb dba 4.0K Jan 28 09:19 WiredTigerLAS.wt -rw------- 1 mongodb dba 21 Jan 28 09:19 WiredTiger.lock -rw------- 1 mongodb dba 1.3K Jan 29 14:14 WiredTiger.turtle -rw------- 1 mongodb dba 92K Jan 29 14:14 WiredTiger.wt

在主节点插入测试数据

dbadmin@mydb:ec1-notificationdb-01 repl:PRIMARY> db.users.insertMany([ ... {_id : 1, "username" : "user001"}, ... {_id : 2, "username" : "user002"}, ... {_id : 3, "username" : "user003"} ... ]) { "acknowledged" : true, "insertedIds" : [ 1, 2, 3 ] }

在从节点确认数据已经同步

dbadmin@mydb:ec1-notificationdb-02 repl:SECONDARY> db.users.find() { "_id" : 1, "username" : "user001" } { "_id" : 3, "username" : "user003" } { "_id" : 2, "username" : "user002" }

dbadmin@mydb:ec1-notificationdb-03 repl:SECONDARY> db.users.find() { "_id" : 1, "username" : "user001" } { "_id" : 3, "username" : "user003" } { "_id" : 2, "username" : "user002" }

同时停止两个从节点的MongoDB服务,并在主节点再次插入一条数据,这里需要注意的是,如果主节点在20S内没有和它的从节点取得联系,那么主节点会将自己降级为从节点,所以插入测试的数据一定要快.

dbadmin@mydb:ec1-notificationdb-01 repl:PRIMARY> db.users.insert({_id : 4, "username" : "user004"})

WriteResult({ "nInserted" : 1 })

dbadmin@mydb:ec1-notificationdb-01 repl:PRIMARY> db.users.find()

Error: error: {

"operationTime" : Timestamp(1611930689, 1),

"ok" : 0,

"errmsg" : "not master and slaveOk=false",

"code" : 13435,

"codeName" : "NotPrimaryNoSecondaryOk",

"$clusterTime" : {

"clusterTime" : Timestamp(1611930689, 1),

"signature" : {

"hash" : BinData(0,"kDtFyX7oSm2zvUHeEVGm7XznXfk="),

"keyId" : NumberLong("6922739574590406659")

}

}

}

dbadmin@mydb:ec1-notificationdb-01 repl:SECONDARY> rs.secondaryOk()

dbadmin@mydb:ec1-notificationdb-01 repl:SECONDARY> db.users.find()

{ "_id" : 1, "username" : "user001" }

{ "_id" : 2, "username" : "user002" }

{ "_id" : 3, "username" : "user003" }

{ "_id" : 4, "username" : "user004" }

从上可以看到,我们在主节点插入的时还是可以正常插入,查询的时候主节点已经降级成为从节点了,并且确定数据已经插入成功了,此时我们将主节点停止MongoDB服务

分别按顺序启动另外两个节点,可以发现ec1-notificationdb-02已经赢得选举成为主节点,而它的从节点为ec1-notificationdb-03,同时主节点并未同步过来我们刚才插入的一条测试数据.

dbadmin@mydb:ec1-notificationdb-02 repl:PRIMARY> db.users.find() { "_id" : 1, "username" : "user001" } { "_id" : 3, "username" : "user003" } { "_id" : 2, "username" : "user002" } dbadmin@mydb:ec1-notificationdb-02 repl:PRIMARY> rs.printSecondaryReplicationInfo() source: ec1-notificationdb-01.mypna.com:27017 syncedTo: Thu Jan 01 1970 00:00:00 GMT+0000 (GMT) 1611931739 secs (447758.82 hrs) behind the primary source: ec1-notificationdb-03.mypna.com:27017 syncedTo: Fri Jan 29 2021 14:48:59 GMT+0000 (GMT) 0 secs (0 hrs) behind the primary

这时我们启动停掉的主节点,同时观察log日志

2021-01-29T14:52:09.100+0000 I REPL [rsBackgroundSync] Changed sync source from empty to ec1-notificationdb-03.mypna.com:27017

2021-01-29T14:52:09.102+0000 I REPL [rsBackgroundSync] Starting rollback due to OplogStartMissing: Our last optime fetched: { ts: Timestamp(1611930689, 1), t: 1 }. source's GTE: { ts: Timestamp(1611931469, 2), t: 2 }

2021-01-29T14:52:09.102+0000 I REPL [rsBackgroundSync] Replication commit point: { ts: Timestamp(0, 0), t: -1 }

2021-01-29T14:52:09.102+0000 I REPL [rsBackgroundSync] Rollback using 'recoverToStableTimestamp' method.

2021-01-29T14:52:09.102+0000 I REPL [rsBackgroundSync] Scheduling rollback (sync source: ec1-notificationdb-03.mypna.com:27017)

2021-01-29T14:52:09.102+0000 I ROLLBACK [rsBackgroundSync] transition to ROLLBACK

2021-01-29T14:52:09.102+0000 I REPL [rsBackgroundSync] State transition ops metrics: { lastStateTransition: "rollback", userOpsKilled: 0, userOpsRunning: 4 }

2021-01-29T14:52:09.102+0000 I REPL [rsBackgroundSync] transition to ROLLBACK from SECONDARY

2021-01-29T14:52:09.102+0000 I NETWORK [rsBackgroundSync] Skip closing connection for connection # 7

2021-01-29T14:52:09.102+0000 I NETWORK [rsBackgroundSync] Skip closing connection for connection # 6

2021-01-29T14:52:09.102+0000 I NETWORK [rsBackgroundSync] Skip closing connection for connection # 2

2021-01-29T14:52:09.102+0000 I ROLLBACK [rsBackgroundSync] Waiting for all background operations to complete before starting rollback

2021-01-29T14:52:09.102+0000 I ROLLBACK [rsBackgroundSync] Finished waiting for background operations to complete before rollback

2021-01-29T14:52:09.102+0000 I ROLLBACK [rsBackgroundSync] finding common point

2021-01-29T14:52:09.226+0000 I ROLLBACK [rsBackgroundSync] Rollback common point is { ts: Timestamp(1611930684, 1), t: 1 }

2021-01-29T14:52:09.226+0000 I SHARDING [rsBackgroundSync] Marking collection local.system.rollback.id as collection version: <unsharded>

2021-01-29T14:52:09.227+0000 I REPL [rsBackgroundSync] Incremented the rollback ID to 2

2021-01-29T14:52:09.227+0000 I ROLLBACK [rsBackgroundSync] finding record store counts

2021-01-29T14:52:09.227+0000 I ROLLBACK [rsBackgroundSync] Preparing to write deleted documents to a rollback file for collection mydb.users with uuid d0b9b5f8-41ac-47f9-bd9f-6bdc0e3b2140 to /usr/local/mongodb/data/rollback/mydb.users/removed.2021-01-29T14-52-09.0.bson

2021-01-29T14:52:09.227+0000 I STORAGE [rsBackgroundSync] closeCatalog: closing all databases

2021-01-29T14:52:09.227+0000 I STORAGE [rsBackgroundSync] closeCatalog: closing storage engine catalog

2021-01-29T14:52:09.227+0000 I STORAGE [rsBackgroundSync] Deregistering all the collections

2021-01-29T14:52:09.227+0000 I STORAGE [WTOplogJournalThread] Oplog journal thread loop shutting down

2021-01-29T14:52:09.254+0000 I ROLLBACK [rsBackgroundSync] Rolling back to the stable timestamp. StableTimestamp: Timestamp(1611930684, 1) Initial Data Timestamp: Timestamp(1611930684, 1)

2021-01-29T14:52:09.254+0000 I STORAGE [rsBackgroundSync] openCatalog: loading storage engine catalog

2021-01-29T14:52:09.273+0000 I STORAGE [rsBackgroundSync] OplogTruncaterThread local.oplog.rs already started

2021-01-29T14:52:09.273+0000 I STORAGE [rsBackgroundSync] The size storer reports that the oplog contains 10494 records totaling to 1158449 bytes

2021-01-29T14:52:09.273+0000 I STORAGE [rsBackgroundSync] Scanning the oplog to determine where to place markers for truncation

2021-01-29T14:52:09.274+0000 I STORAGE [rsBackgroundSync] WiredTiger record store oplog processing took 1ms

2021-01-29T14:52:09.277+0000 I STORAGE [rsBackgroundSync] openCatalog: reconciling catalog and idents

2021-01-29T14:52:09.278+0000 I STORAGE [rsBackgroundSync] openCatalog: reopening all databases

2021-01-29T14:52:09.282+0000 I STORAGE [rsBackgroundSync] openCatalog: updating cached oplog pointer

2021-01-29T14:52:09.282+0000 I STORAGE [rsBackgroundSync] openCatalog: finished reloading collection catalog

2021-01-29T14:52:09.282+0000 I STORAGE [rsBackgroundSync] recoverToStableTimestamp successful. Stable Timestamp: Timestamp(1611930684, 1)

2021-01-29T14:52:09.282+0000 I ROLLBACK [rsBackgroundSync] Rollback reverted 1 insert operations, 0 update operations and 0 delete operations.

2021-01-29T14:52:09.282+0000 I ROLLBACK [rsBackgroundSync] Marking to truncate all oplog entries with timestamps greater than or equal to { ts: Timestamp(1611930689, 1), t: 1 }

2021-01-29T14:52:09.282+0000 I SHARDING [rsBackgroundSync] Marking collection local.replset.oplogTruncateAfterPoint as collection version: <unsharded>

2021-01-29T14:52:09.282+0000 I REPL [rsBackgroundSync] Removing unapplied entries starting at: { : Timestamp(1611930689, 1) }

2021-01-29T14:52:09.282+0000 I REPL [rsBackgroundSync] Replication recovery oplog truncation finished in: 0ms

2021-01-29T14:52:09.284+0000 I REPL [rsBackgroundSync] Recovering from stable timestamp: Timestamp(1611930684, 1) (top of oplog: { ts: Timestamp(1611930684, 1), t: 1 }, appliedThrough: { ts: Timestamp(0, 0), t: -1 }, TruncateAfter: Timestamp(0, 0))

2021-01-29T14:52:09.284+0000 I REPL [rsBackgroundSync] Starting recovery oplog application at the stable timestamp: Timestamp(1611930684, 1)

2021-01-29T14:52:09.284+0000 I REPL [rsBackgroundSync] No oplog entries to apply for recovery. Start point is at the top of the oplog.

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] Triggering the rollback op observer

2021-01-29T14:52:09.285+0000 I REPL [rsBackgroundSync] Resetting key manager cache

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] Rollback complete

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] Rollback summary:

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] start time: 2021-01-29T14:52:09.102+0000

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] end time: 2021-01-29T14:52:09.285+0000

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] sync source: ec1-notificationdb-03.mypna.com:27017

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] rollback data file directory: /usr/local/mongodb/data/rollback/mydb.users

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] rollback id: 2

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] last optime on branch of history rolled back: { ts: Timestamp(1611930689, 1), t: 1 }

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] common point optime: { ts: Timestamp(1611930684, 1), t: 1 }

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] last wall clock time on the branch of history rolled back: 2021-01-29T14:31:29.898+0000

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] wall clock time of the first operation after the common point: 2021-01-29T14:31:29.898+0000

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] difference in wall clock times: 0 second(s)

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] truncate timestamp: Timestamp(1611930689, 1)

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] stable timestamp: Timestamp(1611930684, 1)

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] shard identity document rolled back: false

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] config server config version document rolled back: false

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] affected sessions: none

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] affected namespaces:

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] mydb.users

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] counts of interesting commands rolled back:

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] update: 0

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] delete: 0

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] insert: 1

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] total number of entries rolled back (including no-ops): 1

2021-01-29T14:52:09.285+0000 I ROLLBACK [rsBackgroundSync] transition to SECONDARY

2021-01-29T14:52:09.285+0000 I REPL [rsBackgroundSync] transition to SECONDARY from ROLLBACK

2021-01-29T14:52:09.285+0000 I REPL [rsBackgroundSync] Resetting sync source to empty, which was ec1-notificationdb-03.mypna.com:27017

2021-01-29T14:52:09.285+0000 I REPL [rsBackgroundSync] Rollback successful.

可以很明显看到原来的主节点发生了rollback的现象,而且rollback的数据被保存到数据目录的rollback目录,我们到数据目录查看

$ ls -lh rollback/mydb.users/removed.2021-01-29T14-52-09.0.bson -rw------- 1 mongodb dba 40 Jan 29 14:52 rollback/mydb.users/removed.2021-01-29T14-52-09.0.bson

我们将rollback数据导入到主节点的临时表中查看

$ mongorestore --host=ec1-notificationdb-02 --username=dbadmin --password=88888888 --authenticationDatabase admin --db=mydb --collection=tb1 rollback/mydb.users/removed.2021-01-29T14-52-09.0.bson 2021-01-29T15:07:43.263+0000 checking for collection data in rollback/mydb.users/removed.2021-01-29T14-52-09.0.bson 2021-01-29T15:07:43.280+0000 restoring mydb.tb1 from rollback/mydb.users/removed.2021-01-29T14-52-09.0.bson 2021-01-29T15:07:43.341+0000 no indexes to restore 2021-01-29T15:07:43.342+0000 finished restoring mydb.tb1 (1 document, 0 failures) 2021-01-29T15:07:43.342+0000 1 document(s) restored successfully. 0 document(s) failed to restore.

$ mongo --host=ec1-notificationdb-02 --username=dbadmin --password=88888888 --authenticationDatabase admin mydb dbadmin@mydb:ec1-notificationdb-02 repl:PRIMARY> db.tb1.find() { "_id" : 4, "username" : "user004" }

此时我们可以根据需要决定是否将rollback的数据重新导入的副本集中.