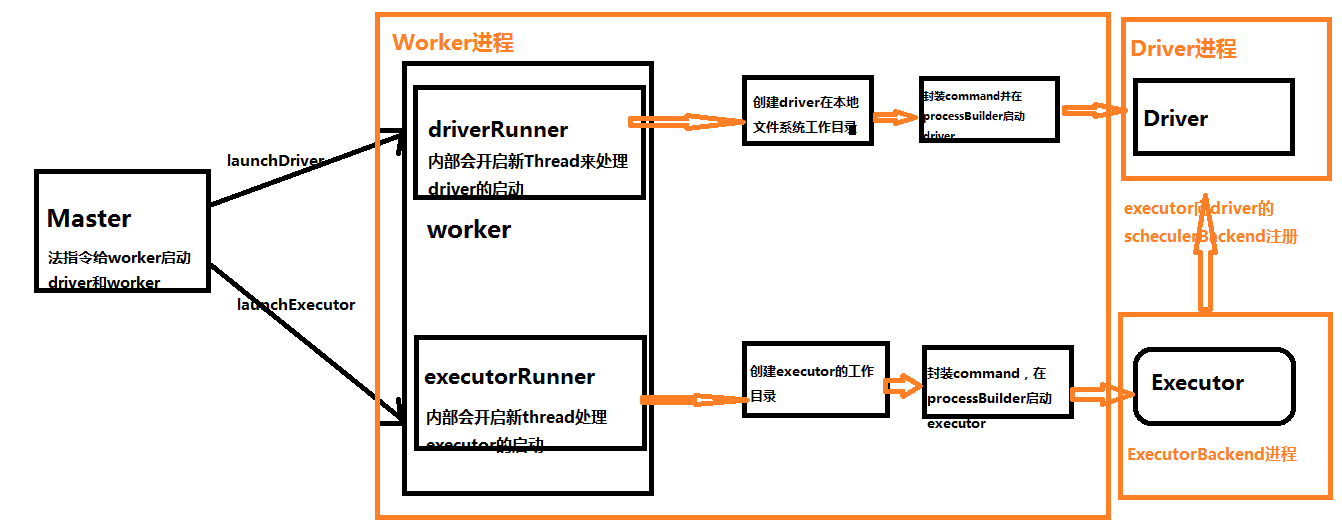

II: How the Spark Worker Launches the Driver (Source Code Walkthrough)

case LaunchDriver(driverId, driverDesc) => {

logInfo(s"Asked to launch driver $driverId")

val driver = new DriverRunner( // DriverRunner acts as a proxy that launches the Driver

conf,

driverId,

workDir,

sparkHome,

driverDesc.copy(command = Worker.maybeUpdateSSLSettings(driverDesc.command, conf)),

self,

workerUri,

securityMgr)

drivers(driverId) = driver // Register the new DriverRunner in drivers, a HashMap (not an array) keyed by driverId, so the Worker can look up this runner later

driver.start()

// Once the driver has been started, record the cores and memory it uses.

coresUsed += driverDesc.cores

memoryUsed += driverDesc.mem

}

Note: in cluster mode, if the driver fails to start or crashes while supervise is set to true in its DriverDescription, it is restarted automatically. The Worker is responsible for that restart.
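The supervise behaviour can be pictured as a retry loop around the driver process. The following is a minimal sketch under stated assumptions, not Spark's actual code: `SuperviseSketch`, `runWithSupervise`, `runOnce` and `maxAttempts` are hypothetical names introduced for illustration, and the cap on attempts exists only so that the sketch always terminates.

```scala
object SuperviseSketch {
  /** Calls `runOnce` (which returns a process exit code) and retries while
    * `supervise` is true and the exit code is non-zero.
    * `maxAttempts` is an assumption of this sketch, not a Spark setting.
    * Returns the last exit code and the number of attempts made. */
  def runWithSupervise(supervise: Boolean, maxAttempts: Int)(runOnce: () => Int): (Int, Int) = {
    var attempts = 0
    var exitCode = -1
    var keepTrying = true
    while (keepTrying) {
      attempts += 1
      exitCode = runOnce()
      // Retry only when supervision is on and the run did not exit cleanly.
      keepTrying = supervise && exitCode != 0 && attempts < maxAttempts
    }
    (exitCode, attempts)
  }
}
```

With supervise set to false the body runs exactly once, whatever the exit code, which matches the default unsupervised case.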

The DriverRunner class

private[deploy] class DriverRunner(

conf: SparkConf,

val driverId: String,

val workDir: File,

val sparkHome: File,

val driverDesc: DriverDescription,

val worker: RpcEndpointRef,

val workerUrl: String,

val securityManager: SecurityManager)

extends Logging {

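The DriverRunner constructor takes the configuration needed to launch the driver. The class also wraps a start method, which spawns a new thread that launches the driver.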

/** Starts a thread to run and manage the driver. */

private[worker] def start() = {

new Thread("DriverRunner for " + driverId) { // A plain Java thread is used to launch the driver

override def run() {

try {

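val driverDir = createWorkingDirectory() // Create the driver's working directory

val localJarFilename = downloadUserJar(driverDir) // Download the user's jar into driverDir (the jar submitted to the cluster must be reachable from the Worker, e.g. stored on HDFS)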

def substituteVariables(argument: String): String = argument match {

case "{{WORKER_URL}}" => workerUrl

case "{{USER_JAR}}" => localJarFilename

case other => other

}

// TODO: If we add ability to submit multiple jars they should also be added here

val builder = CommandUtils.buildProcessBuilder(driverDesc.command, securityManager, // Build the ProcessBuilder that launchDriver uses to start the driver

driverDesc.mem, sparkHome.getAbsolutePath, substituteVariables)

launchDriver(builder, driverDir, driverDesc.supervise)

}

catch {

case e: Exception => finalException = Some(e)

}

val state =

if (killed) {

DriverState.KILLED

} else if (finalException.isDefined) {

DriverState.ERROR

} else {

finalExitCode match {

case Some(0) => DriverState.FINISHED

case _ => DriverState.FAILED

}

}

finalState = Some(state)

worker.send(DriverStateChanged(driverId, state, finalException)) // Report the final state to the Worker, including the exception if the launch failed

}

}.start()

}

Inside the run method, the driver's working directory is created first:

/**

* Creates the working directory for this driver.

* Will throw an exception if there are errors preparing the directory.

*/

private def createWorkingDirectory(): File = {

val driverDir = new File(workDir, driverId)

if (!driverDir.exists() && !driverDir.mkdirs()) {

throw new IOException("Failed to create directory " + driverDir)

}

driverDir

}

Next, a ProcessBuilder is created through CommandUtils.buildProcessBuilder and passed to launchDriver:

def buildProcessBuilder(

command: Command,

securityMgr: SecurityManager,

memory: Int,

sparkHome: String,

substituteArguments: String => String,

classPaths: Seq[String] = Seq[String](),

env: Map[String, String] = sys.env): ProcessBuilder = {

val localCommand = buildLocalCommand(

command, securityMgr, substituteArguments, classPaths, env)

val commandSeq = buildCommandSeq(localCommand, memory, sparkHome)

val builder = new ProcessBuilder(commandSeq: _*)

val environment = builder.environment()

for ((key, value) <- localCommand.environment) {

environment.put(key, value)

}

builder

}
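To make the two steps concrete (placeholder substitution, then building the process), here is a small self-contained sketch. It is an illustration under stated assumptions rather than Spark's implementation: `ProcessBuilderSketch`, `substitute` and `build` are hypothetical names, and only `java.lang.ProcessBuilder` from the JDK is used.

```scala
import java.io.File

object ProcessBuilderSketch {
  /** Replaces the two placeholders seen in DriverRunner's substituteVariables;
    * every other argument is passed through unchanged. */
  def substitute(workerUrl: String, localJar: String)(arg: String): String = arg match {
    case "{{WORKER_URL}}" => workerUrl
    case "{{USER_JAR}}"   => localJar
    case other            => other
  }

  /** Builds (but does not start) a ProcessBuilder for the given command line,
    * adding the extra environment variables on top of the inherited ones. */
  def build(args: Seq[String], env: Map[String, String], dir: File): ProcessBuilder = {
    val builder = new ProcessBuilder(args: _*)
    builder.directory(dir)
    val environment = builder.environment()
    for ((key, value) <- env) {
      environment.put(key, value)
    }
    builder
  }
}
```

A caller would map `substitute(workerUrl, jarPath)` over the raw argument list and hand the result to `build`. Nothing runs until `start()` is called on the returned builder.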

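What remains is exception handling, which is ordinary JVM try/catch. One point worth noting: when the launch fails, the DriverRunner sends a DriverStateChanged message to the Worker, which then forwards it to the Master, so both learn that the driver's state has changed.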

case class DriverStateChanged(

driverId: String,

state: DriverState,

exception: Option[Exception])

extends DeployMessage
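The way the final state is derived in run can be isolated into a pure function. This is a minimal sketch that mirrors the if/else chain shown earlier. `DriverStateSketch` and its `State` values are stand-ins defined here for illustration, not Spark's DriverState enumeration.

```scala
object DriverStateSketch {
  sealed trait State
  case object Killed extends State
  case object Error extends State
  case object Finished extends State
  case object Failed extends State

  /** Order matters: an explicit kill wins over an exception,
    * and an exception wins over whatever exit code was recorded. */
  def finalState(killed: Boolean, exception: Option[Exception], exitCode: Option[Int]): State =
    if (killed) Killed
    else if (exception.isDefined) Error
    else exitCode match {
      case Some(0) => Finished // clean exit
      case _       => Failed   // non-zero exit code, or no exit code at all
    }
}
```

Keeping the decision in one place makes the precedence between the three inputs easy to check.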

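III: How the Worker Launches an Executor (Source Code Walkthrough)

The Worker launches an Executor in much the same way as it launches the Driver. In essence, a Driver in cluster mode is just another process that the Worker starts and manages, much like an Executor. The source is attached here without further commentary.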

case LaunchExecutor(masterUrl, appId, execId, appDesc, cores_, memory_) =>

if (masterUrl != activeMasterUrl) {

logWarning("Invalid Master (" + masterUrl + ") attempted to launch executor.")

} else {

try {

logInfo("Asked to launch executor %s/%d for %s".format(appId, execId, appDesc.name))

// Create the executor's working directory

val executorDir = new File(workDir, appId + "/" + execId)

if (!executorDir.mkdirs()) {

throw new IOException("Failed to create directory " + executorDir)

}

// Create local dirs for the executor. These are passed to the executor via the

// SPARK_EXECUTOR_DIRS environment variable, and deleted by the Worker when the

// application finishes.

val appLocalDirs = appDirectories.get(appId).getOrElse {

Utils.getOrCreateLocalRootDirs(conf).map { dir =>

val appDir = Utils.createDirectory(dir, namePrefix = "executor")

Utils.chmod700(appDir)

appDir.getAbsolutePath()

}.toSeq

}

appDirectories(appId) = appLocalDirs

val manager = new ExecutorRunner(

appId,

execId,

appDesc.copy(command = Worker.maybeUpdateSSLSettings(appDesc.command, conf)),

cores_,

memory_,

self,

workerId,

host,

webUi.boundPort,

publicAddress,

sparkHome,

executorDir,

workerUri,

conf,

appLocalDirs, ExecutorState.RUNNING)

executors(appId + "/" + execId) = manager

manager.start()

coresUsed += cores_

memoryUsed += memory_

sendToMaster(ExecutorStateChanged(appId, execId, manager.state, None, None))

} catch {

case e: Exception => {

logError(s"Failed to launch executor $appId/$execId for ${appDesc.name}.", e)

if (executors.contains(appId + "/" + execId)) {

executors(appId + "/" + execId).kill()

executors -= appId + "/" + execId

}

sendToMaster(ExecutorStateChanged(appId, execId, ExecutorState.FAILED,

Some(e.toString), None))

}

}

}
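The bookkeeping pattern in this handler (register the runner under the key appId/execId, start it, count its resources, and remove the entry again if anything throws) can be sketched on its own. This is a simplified illustration under stated assumptions: `ExecutorBookkeepingSketch`, `launch` and `isTracked` are hypothetical names, and the `start` callback stands in for ExecutorRunner.start().

```scala
import scala.collection.mutable

class ExecutorBookkeepingSketch {
  // fullId ("appId/execId") -> (cores, memory) reserved for that executor
  private val executors = mutable.HashMap[String, (Int, Int)]()
  var coresUsed = 0
  var memoryUsed = 0

  /** Registers the executor, runs `start`, then records its resources.
    * If `start` throws, the entry is removed and no resources are counted.
    * Returns true when the launch succeeded. */
  def launch(appId: String, execId: Int, cores: Int, memory: Int)(start: () => Unit): Boolean = {
    val fullId = appId + "/" + execId
    try {
      executors(fullId) = (cores, memory)
      start()
      coresUsed += cores
      memoryUsed += memory
      true
    } catch {
      case _: Exception =>
        executors -= fullId
        false
    }
  }

  def isTracked(appId: String, execId: Int): Boolean =
    executors.contains(appId + "/" + execId)
}
```

Because the counters are updated only after `start` returns, a failed launch leaves coresUsed and memoryUsed untouched, which is the same ordering the handler above relies on.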