1 Deploy kube-controller-manager

1.1 Highly available kube-controller-manager overview

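This lab deploys a kube-controller-manager cluster of three instances. After startup, the instances compete in a leader election that produces one leader, while the others stay blocked on standby. If the leader becomes unavailable, the standby instances hold a new election to produce a new leader, which keeps the service available.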

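To secure communication, this document first generates an x509 certificate and private key. kube-controller-manager uses this certificate in the following two cases:

- communicating with the secure port of kube-apiserver;

- serving Prometheus-format metrics on its secure port (HTTPS, 10257, as set by --secure-port in the unit file).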

1.2 Create the kube-controller-manager certificate and private key

Create the certificate signing request (CSR) file for kube-controller-manager:

```bash
[root@master01 ~]# cd /opt/k8s/work
[root@master01 work]# source /root/environment.sh
[root@master01 work]# cat > kube-controller-manager-csr.json <<EOF
{
  "CN": "system:kube-controller-manager",
  "hosts": [
    "127.0.0.1",
    "172.24.8.71",
    "172.24.8.72",
    "172.24.8.73",
    "172.24.8.100"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Shanghai",
      "L": "Shanghai",
      "O": "system:kube-controller-manager",
      "OU": "System"
    }
  ]
}
EOF
```

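Explanation:

- The hosts list contains the IPs of all kube-controller-manager nodes.

- Both CN and O are system:kube-controller-manager. The CN becomes the user name the apiserver sees, and the built-in Kubernetes ClusterRoleBinding system:kube-controller-manager grants that user the permissions kube-controller-manager needs to work.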
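Rather than hard-coding the addresses, the CSR can be generated from the same MASTER_IPS array that the distribution loops use, so the hosts list cannot drift from the actual masters. The sketch below is a hypothetical helper (render_kcm_csr is not part of any tool); it assumes MASTER_IPS from /root/environment.sh holds the three master IPs and that you still want 127.0.0.1 and the extra address 172.24.8.100 from the original list.

```bash
# render_kcm_csr: hypothetical helper that prints the kube-controller-manager
# CSR with one "hosts" entry per argument. Subject fields copy the file above.
render_kcm_csr() {
  local i
  printf '{\n  "CN": "system:kube-controller-manager",\n  "hosts": [\n'
  for ((i = 1; i <= $#; i++)); do
    # ${!i} is the i-th argument; every entry except the last needs a comma.
    if ((i < $#)); then
      printf '    "%s",\n' "${!i}"
    else
      printf '    "%s"\n' "${!i}"
    fi
  done
  printf '  ],\n'
  cat <<'EOF'
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Shanghai",
      "L": "Shanghai",
      "O": "system:kube-controller-manager",
      "OU": "System"
    }
  ]
}
EOF
}

# Usage on master01 (assumes environment.sh defines MASTER_IPS):
#   render_kcm_csr 127.0.0.1 "${MASTER_IPS[@]}" 172.24.8.100 > kube-controller-manager-csr.json
```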

Generate the key and certificate:

```bash
[root@master01 ~]# cd /opt/k8s/work
[root@master01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
```

Note: this step only needs to be performed on master01.
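Before distributing the files, it can help to confirm that the certificate carries the expected subject (CN/O) and SAN list. A minimal sketch, assuming openssl is installed on master01; show_cert_identity is a hypothetical helper name.

```bash
# show_cert_identity: hypothetical helper that prints the subject of a PEM
# certificate and, if present, its Subject Alternative Name entries.
show_cert_identity() {
  local cert="$1"
  openssl x509 -in "$cert" -noout -subject || return 1
  # The SAN values are printed on the line after the extension header.
  openssl x509 -in "$cert" -noout -text | grep -A1 'Subject Alternative Name' \
    || echo "(no Subject Alternative Name extension)"
}

# Usage on master01:
#   show_cert_identity /opt/k8s/work/kube-controller-manager.pem
```

For the certificate generated above, the subject should show CN and O as system:kube-controller-manager, and the SAN line should list the IPs from the hosts array.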

1.3 Distribute the certificate and private key

```bash
[root@master01 ~]# cd /opt/k8s/work
[root@master01 work]# source /root/environment.sh
[root@master01 work]# for master_ip in "${MASTER_IPS[@]}"
do
  echo ">>> ${master_ip}"
  scp kube-controller-manager*.pem root@${master_ip}:/etc/kubernetes/cert/
done
```

Note: this step only needs to be performed on master01.
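A quick check that every master actually received the files the systemd unit later references. This is a sketch assuming password-less root SSH (which the scp loop already requires) and that ca.pem and ca-key.pem were distributed to /etc/kubernetes/cert/ in an earlier part of this series, since the unit file points at them; check_remote_certs and KCM_CERT_FILES are hypothetical names.

```bash
# Files the kube-controller-manager unit file references under /etc/kubernetes/cert.
KCM_CERT_FILES=(
  /etc/kubernetes/cert/ca.pem
  /etc/kubernetes/cert/ca-key.pem
  /etc/kubernetes/cert/kube-controller-manager.pem
  /etc/kubernetes/cert/kube-controller-manager-key.pem
)

# check_remote_certs: hypothetical helper; prints "<ip>: ok" or
# "<ip>: missing or empty file" per IP and returns non-zero if any master is incomplete.
check_remote_certs() {
  local ip f cmd="" rc=0
  # Build one remote command: `test -s` fails if a file is absent or empty.
  for f in "${KCM_CERT_FILES[@]}"; do
    cmd+="test -s ${f} && "
  done
  cmd+="true"
  for ip in "$@"; do
    if ssh "root@${ip}" "$cmd"; then
      echo "${ip}: ok"
    else
      echo "${ip}: missing or empty file"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on master01:
#   source /root/environment.sh && check_remote_certs "${MASTER_IPS[@]}"
```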

1.4 Create and distribute the kubeconfig

kube-controller-manager uses a kubeconfig file to access the apiserver. The file provides the apiserver address, the embedded CA certificate, and the kube-controller-manager certificate:

```bash
[root@master01 ~]# cd /opt/k8s/work
[root@master01 work]# source /root/environment.sh
[root@master01 work]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@master01 work]# kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=kube-controller-manager.pem \
  --client-key=kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@master01 work]# kubectl config set-context system:kube-controller-manager \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@master01 work]# kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig
```

Distribute the kubeconfig to all master nodes:

```bash
[root@master01 ~]# cd /opt/k8s/work
[root@master01 work]# source /root/environment.sh
[root@master01 work]# for master_ip in "${MASTER_IPS[@]}"
do
  echo ">>> ${master_ip}"
  scp kube-controller-manager.kubeconfig root@${master_ip}:/etc/kubernetes/
done
```

Note: this step only needs to be performed on master01.
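Because --embed-certs=true copies the CA and client certificates into the file, the kubeconfig is self-contained and can be copied to the other masters as-is. The sketch below checks that the embedded data and the current context are present before distribution. check_kubeconfig is a hypothetical helper that greps the YAML written by kubectl config, so it assumes kubectl's default layout; for a manual look, `kubectl config view --kubeconfig=kube-controller-manager.kubeconfig --raw` prints the full file.

```bash
# check_kubeconfig: hypothetical helper; reports problems and returns non-zero
# if the kubeconfig lacks embedded credentials or the expected current context.
check_kubeconfig() {
  local kc="$1" key rc=0
  for key in certificate-authority-data client-certificate-data client-key-data; do
    if ! grep -q "^ *${key}:" "$kc"; then
      echo "missing ${key} (was --embed-certs=true used?)"
      rc=1
    fi
  done
  # current-context is a top-level key in the kubeconfig YAML.
  if [ "$(awk '/^current-context:/ {print $2}' "$kc")" != "system:kube-controller-manager" ]; then
    echo "current-context is not system:kube-controller-manager"
    rc=1
  fi
  return "$rc"
}

# Usage on master01, before the scp loop:
#   check_kubeconfig /opt/k8s/work/kube-controller-manager.kubeconfig && echo "kubeconfig looks complete"
```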

1.5 Create the kube-controller-manager systemd unit

```bash
[root@master01 ~]# cd /opt/k8s/work
[root@master01 work]# source /root/environment.sh
[root@master01 work]# cat > kube-controller-manager.service.template <<EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
WorkingDirectory=${K8S_DIR}/kube-controller-manager
ExecStart=/opt/k8s/bin/kube-controller-manager \
  --secure-port=10257 \
  --bind-address=127.0.0.1 \
  --profiling \
  --cluster-name=kubernetes \
  --controllers=*,bootstrapsigner,tokencleaner \
  --kube-api-qps=1000 \
  --kube-api-burst=2000 \
  --leader-elect \
  --use-service-account-credentials \
  --concurrent-service-syncs=2 \
  --tls-cert-file=/etc/kubernetes/cert/kube-controller-manager.pem \
  --tls-private-key-file=/etc/kubernetes/cert/kube-controller-manager-key.pem \
  --authentication-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \
  --client-ca-file=/etc/kubernetes/cert/ca.pem \
  --requestheader-allowed-names="system:metrics-server" \
  --requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \
  --requestheader-extra-headers-prefix="X-Remote-Extra-" \
  --requestheader-group-headers=X-Remote-Group \
  --requestheader-username-headers=X-Remote-User \
  --cluster-signing-cert-file=/etc/kubernetes/cert/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/cert/ca-key.pem \
  --experimental-cluster-signing-duration=87600h \
  --horizontal-pod-autoscaler-sync-period=10s \
  --concurrent-deployment-syncs=10 \
  --concurrent-gc-syncs=30 \
  --node-cidr-mask-size=24 \
  --service-cluster-ip-range=${SERVICE_CIDR} \
  --cluster-cidr=${CLUSTER_CIDR} \
  --pod-eviction-timeout=6m \
  --terminated-pod-gc-threshold=10000 \
  --root-ca-file=/etc/kubernetes/cert/ca.pem \
  --service-account-private-key-file=/etc/kubernetes/cert/ca-key.pem \
  --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \
  --logtostderr=true \
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

Note: this step only needs to be performed on master01.
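Because the heredoc delimiter EOF is unquoted, ${K8S_DIR}, ${SERVICE_CIDR} and ${CLUSTER_CIDR} are substituted when the template is written; if /root/environment.sh was not sourced in the same shell, they silently become empty. The hypothetical helper find_empty_flags below looks for flags left with an empty value and works whether the rendered flags end up on one line or several. It only inspects --flag=value pairs, so the WorkingDirectory= line is still worth checking by eye.

```bash
# find_empty_flags: hypothetical helper; prints every --flag= whose value is
# empty in the given unit file and returns non-zero if it finds any.
find_empty_flags() {
  local found
  [ -r "$1" ] || return 2
  # Match "--name=" followed by whitespace or end of line, then trim the whitespace.
  found="$(grep -oE -- '--[a-z0-9-]+=([[:space:]]|$)' "$1" | sed 's/[[:space:]]*$//')"
  if [ -n "$found" ]; then
    printf '%s\n' "$found"
    return 1
  fi
}

# Usage on master01:
#   find_empty_flags kube-controller-manager.service.template || echo "re-run after: source /root/environment.sh"
```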

1.6 Distribute the systemd unit

```bash
[root@master01 ~]# cd /opt/k8s/work
[root@master01 work]# source /root/environment.sh
[root@master01 work]# for master_ip in "${MASTER_IPS[@]}"
do
  echo ">>> ${master_ip}"
  scp kube-controller-manager.service.template root@${master_ip}:/etc/systemd/system/kube-controller-manager.service
done  # distribute the systemd unit to every master
```

Note: this step only needs to be performed on master01.

2 Start and verify

2.1 Start the kube-controller-manager service

```bash
[root@master01 ~]# cd /opt/k8s/work
[root@master01 work]# source /root/environment.sh
[root@master01 work]# for master_ip in "${MASTER_IPS[@]}"
do
  echo ">>> ${master_ip}"
  ssh root@${master_ip} "mkdir -p ${K8S_DIR}/kube-controller-manager"
  ssh root@${master_ip} "systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl restart kube-controller-manager"
done
```

Note: this step only needs to be performed on master01.

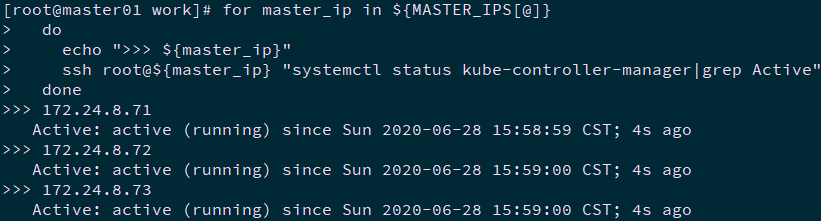

2.2 Check the kube-controller-manager service

```bash
[root@master01 ~]# cd /opt/k8s/work
[root@master01 work]# source /root/environment.sh
[root@master01 work]# for master_ip in "${MASTER_IPS[@]}"
do
  echo ">>> ${master_ip}"
  ssh root@${master_ip} "systemctl status kube-controller-manager | grep Active"
done
```

Note: this step only needs to be performed on master01.
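`systemctl is-active` prints just the unit state, which is easier to script than grepping the status output. A sketch assuming the same password-less root SSH; kcm_status is a hypothetical name. If a node reports anything other than active, `journalctl -u kube-controller-manager` on that node usually shows why.

```bash
# kcm_status: hypothetical helper; prints "<ip>: <state>" for each master and
# returns non-zero if any instance is not active.
kcm_status() {
  local ip state rc=0
  for ip in "$@"; do
    # is-active prints the state (active, failed, activating, ...) and exits
    # non-zero unless active; the output is kept and the exit code ignored.
    state="$(ssh "root@${ip}" "systemctl is-active kube-controller-manager")" || true
    echo "${ip}: ${state:-unknown}"
    [ "$state" = "active" ] || rc=1
  done
  return "$rc"
}

# Usage on master01:
#   source /root/environment.sh && kcm_status "${MASTER_IPS[@]}"
```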

2.3 View the exported metrics

```bash
[root@master01 work]# curl -s --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem https://127.0.0.1:10257/metrics | head
```

Note: this step only needs to be performed on master01.
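Since --bind-address=127.0.0.1 exposes the endpoint only locally, the metrics curl has to be run on each master in turn. Assuming your version exports the client-go leader-election gauge leader_election_master_status (worth confirming in the raw output first), its value tells which instance is the leader: 1 on the leader, 0 on the standbys. leader_metric is a hypothetical filter, and on the other masters the admin certificate paths may need adjusting.

```bash
# leader_metric: hypothetical filter; reads Prometheus text on stdin and prints the
# value of leader_election_master_status for kube-controller-manager.
# Returns non-zero if the series is not present.
leader_metric() {
  awk 'index($1, "leader_election_master_status{") == 1 &&
       index($1, "name=\"kube-controller-manager\"") > 0 { print $2; found = 1 }
       END { if (!found) exit 1 }'
}

# Usage, run locally on each master:
#   curl -s --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem \
#     --key /opt/k8s/work/admin-key.pem https://127.0.0.1:10257/metrics | leader_metric
```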

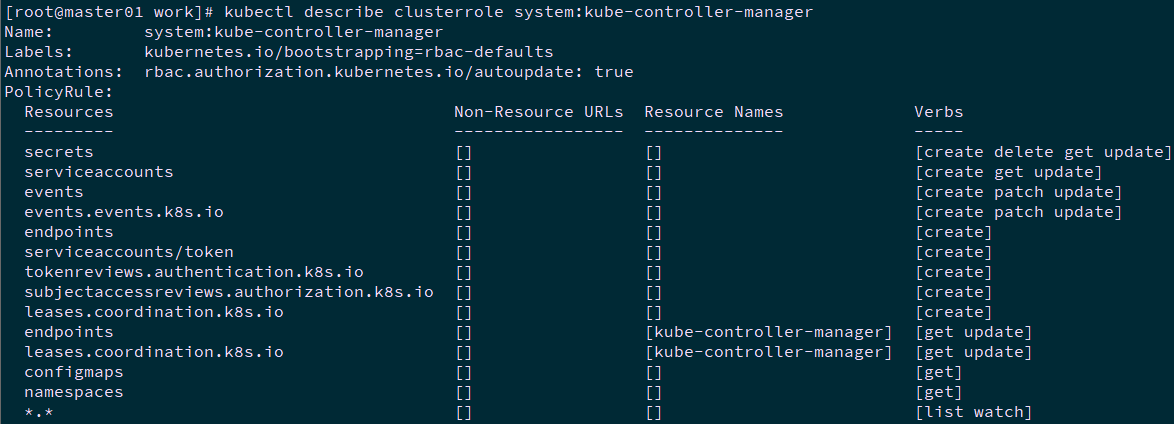

2.4 View permissions

```bash
[root@master01 ~]# kubectl describe clusterrole system:kube-controller-manager
```

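The ClusterRole system:kube-controller-manager has minimal permissions: it can only create resource objects such as secrets and serviceaccounts. The permissions of the individual controllers are split out into the ClusterRoles system:controller:XXX.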

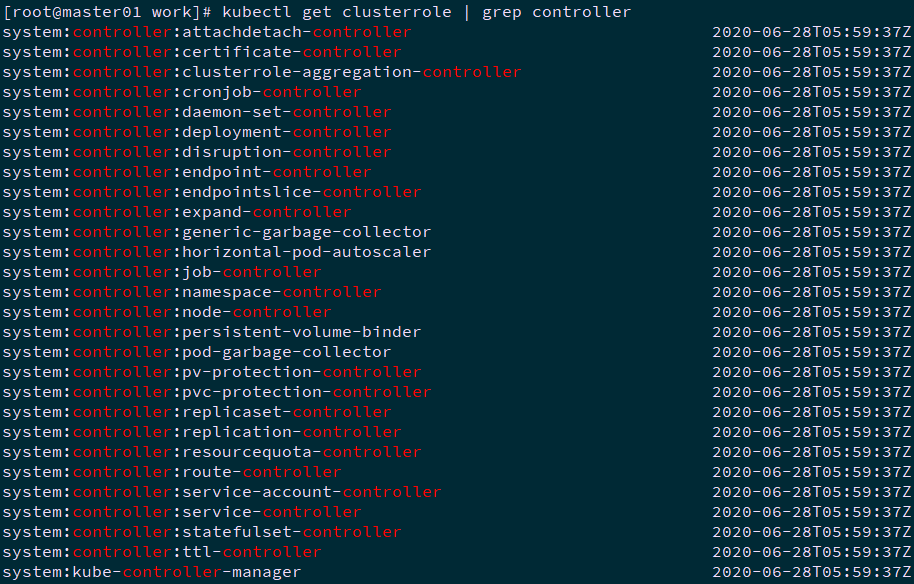

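When --use-service-account-credentials=true is added to kube-controller-manager's startup flags (the unit file above passes --use-service-account-credentials, which has the same effect), the main controller creates a ServiceAccount named XXX-controller for each controller, and each controller then talks to the apiserver with its own ServiceAccount's credentials. The built-in ClusterRoleBinding system:controller:XXX grants each XXX-controller ServiceAccount the permissions of the corresponding ClusterRole system:controller:XXX.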
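To see the per-controller ServiceAccounts this creates, list the `*-controller` accounts in kube-system. A sketch, assuming kubectl on master01 is configured with admin credentials as in the other kubectl steps; list_controller_sas is a hypothetical name.

```bash
# list_controller_sas: hypothetical helper; prints the names of ServiceAccounts
# in kube-system that end in "-controller", one per line.
list_controller_sas() {
  # `-o name` prints entries as "serviceaccount/<name>"; sed keeps only matching names.
  kubectl -n kube-system get serviceaccounts -o name \
    | sed -n 's#^serviceaccount/\(.*-controller\)$#\1#p'
}

# Usage on master01:
#   list_controller_sas
```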

```bash
[root@master01 ~]# kubectl get clusterrole | grep controller
```

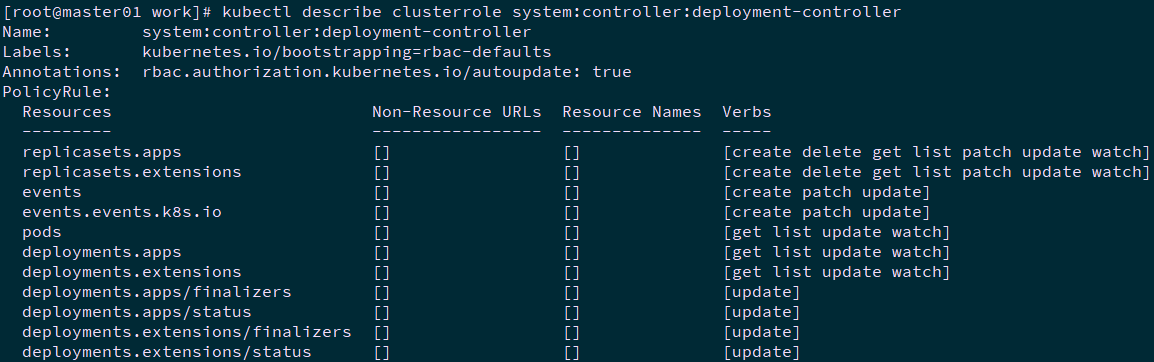

For example, the deployment controller:

```bash
[root@master01 ~]# kubectl describe clusterrole system:controller:deployment-controller
```

Note: this step only needs to be performed on master01.

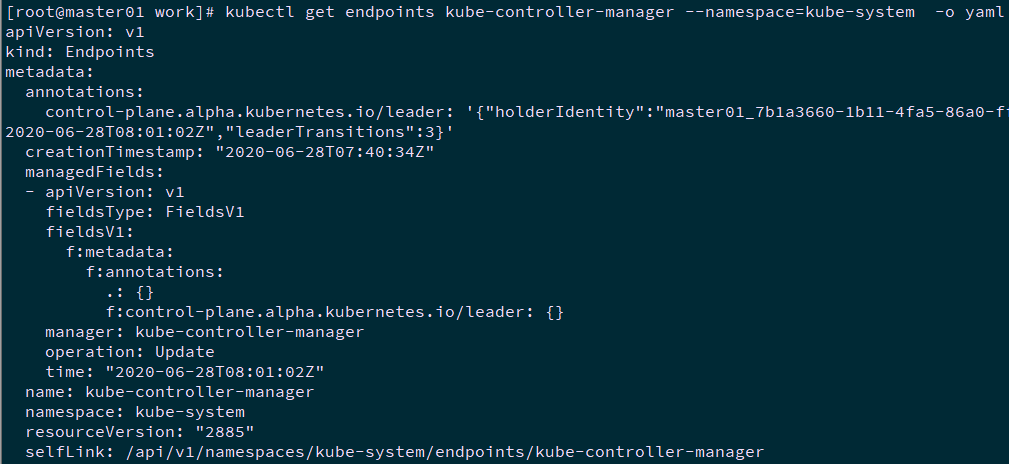
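`kubectl auth can-i` with `--as` impersonates a ServiceAccount, which is a direct way to confirm what the system:controller:deployment-controller binding grants. A sketch assuming the admin credentials used elsewhere in this series are allowed to impersonate; check_sa_perms is a hypothetical helper, and the verb/resource pairs in the usage line are only examples.

```bash
# check_sa_perms: hypothetical helper; for each "verb:resource" argument, asks the
# apiserver whether kube-system/<sa> may perform it and prints the answer.
check_sa_perms() {
  local sa="$1" pair verb resource answer
  shift
  for pair in "$@"; do
    verb="${pair%%:*}"
    resource="${pair#*:}"
    # can-i prints "yes" or "no"; the exit status is ignored here.
    answer="$(kubectl auth can-i "$verb" "$resource" \
      --as="system:serviceaccount:kube-system:${sa}" 2>/dev/null)" || true
    echo "${verb} ${resource}: ${answer:-error}"
  done
}

# Usage on master01:
#   check_sa_perms deployment-controller create:replicasets.apps delete:nodes
```

With the default RBAC policy, the deployment controller should be allowed to create ReplicaSets but not to delete nodes.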

2.5 View the current leader

```bash
[root@master01 ~]# kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml
```
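With an Endpoints-based lock, the leader is recorded in the control-plane.alpha.kubernetes.io/leader annotation of that object as a small JSON document whose holderIdentity field names the current leader. The filter below (leader_holder, a hypothetical name) extracts that field, assuming the annotation is compact JSON as stored by the apiserver. On releases whose leader election uses a Lease lock instead, the same information is available from `kubectl -n kube-system get lease kube-controller-manager -o jsonpath='{.spec.holderIdentity}'`.

```bash
# leader_holder: hypothetical filter; reads the leader annotation JSON on stdin
# and prints its holderIdentity value.
leader_holder() {
  sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p'
}

# Usage on master01 (Endpoints-based lock):
#   kubectl -n kube-system get endpoints kube-controller-manager \
#     -o jsonpath='{.metadata.annotations.control-plane\.alpha\.kubernetes\.io/leader}' | leader_holder
```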

Kubelet authentication and authorization: https://kubernetes.io/docs/admin/kubelet-authentication-authorization/#kubelet-authorization