1. Make sure Hadoop is running

    [root@neusoft-master ~]# jps
    23763 Jps
    3220 SecondaryNameNode
    3374 ResourceManager
    2935 NameNode
    3471 NodeManager
    3030 DataNode

2. Under /usr/local/filecontent, create a file named hellodemo and write the following content into it, with the words separated by a tab:

    [root@neusoft-master filecontent]# vi hellodemo
    hello	you
    hello	me

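3. Run the following steps on Linux (hadoop dfs still works but is deprecated; as the warnings below say, the hdfs command, i.e. hdfs dfs, replaces it):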

3.1 Create the /data directory in HDFS

    [root@neusoft-master filecontent]# hadoop dfs -mkdir /data

3.2 Upload /usr/local/filecontent/hellodemo to the /data directory in HDFS

    [root@neusoft-master filecontent]# hadoop dfs -put hellodemo /data

3.3 List the contents of /data

    [root@neusoft-master filecontent]# hadoop dfs -ls /data
    DEPRECATED: Use of this script to execute hdfs command is deprecated.
    Instead use the hdfs command for it.
    17/02/01 00:39:44 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    Found 1 items
    -rw-r--r-- 3 root supergroup 19 2017-02-01 00:39 /data/hellodemo

3.4 View the contents of hellodemo

    [root@neusoft-master filecontent]# hadoop dfs -text /data/hellodemo

4. Write WordCountTest.java and package it as a jar file

    package Mapreduce;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

    /**
     * Suppose we have this directory structure:
     * /dir1
     * /dir1/hello.txt
     * /dir1/dir2/hello.txt
     *
     * Question: count the words in all files under /dir1.
     */
    public class WordCountTest {
        public static void main(String[] args) throws Exception {
            // 2. Assemble the custom MyMapper and MyReducer into one job
            Configuration conf = new Configuration();
            String jobName = WordCountTest.class.getSimpleName();
            // 1. Write the job first; it needs conf and jobName, so create those beforehand
            Job job = Job.getInstance(conf, jobName);

            // *13. Required when the program is packaged and run as a jar
            job.setJarByClass(WordCountTest.class);

            // 3. Read the input from HDFS (FileInputFormat comes from org.apache.hadoop.mapreduce.lib.input)
            FileInputFormat.setInputPaths(job, new Path("hdfs://neusoft-master:9000/data/hellodemo"));
            // 4. Specify the class that parses the input into <k1,v1> pairs
            job.setInputFormatClass(TextInputFormat.class);
            // 5. Specify the custom mapper class
            job.setMapperClass(MyMapper.class);
            // 6. Specify the map output types <k2,v2>
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(LongWritable.class);
            // 7. Partitioning (1 partition by default), sorting, grouping and combining use the defaults

            // Reduce steps follow
            // 8. Specify the custom reducer class
            job.setReducerClass(MyReducer.class);
            // 9. Specify the output types <k3,v3>
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(LongWritable.class);
            // 10. Specify the class that writes <k3,v3>
            job.setOutputFormatClass(TextOutputFormat.class);
            // 11. Specify the output path
            FileOutputFormat.setOutputPath(job, new Path("hdfs://neusoft-master:9000/out1"));

            // 12. Submit the MapReduce job to the ResourceManager and wait for it to finish
            job.waitForCompletion(true);
        }

        private static class MyMapper extends Mapper<LongWritable, Text, Text, LongWritable> {
            Text k2 = new Text();
            LongWritable v2 = new LongWritable();

            @Override
            protected void map(LongWritable key, Text value, // three parameters
                    Mapper<LongWritable, Text, Text, LongWritable>.Context context)
                    throws IOException, InterruptedException {
                // split() is a String method, so convert the Text to a String first
                String line = value.toString();
                // hellodemo separates words with a tab (step 2), so split on "\t"
                String[] splited = line.split("\t");
                for (String word : splited) {
                    // word is one word of the current line; set k2 and v2 and emit them
                    k2.set(word);
                    v2.set(1L);
                    context.write(k2, v2);
                }
            }
        }

        private static class MyReducer extends Reducer<Text, LongWritable, Text, LongWritable> {
            LongWritable v3 = new LongWritable();

            @Override // k2 is a word; v2s holds the counts emitted for that word, so iterate over it
            protected void reduce(Text k2, Iterable<LongWritable> v2s, // three parameters
                    Reducer<Text, LongWritable, Text, LongWritable>.Context context)
                    throws IOException, InterruptedException {
                long sum = 0;
                for (LongWritable v2 : v2s) {
                    // LongWritable is a Hadoop type and sum is a Java long:
                    // get() converts the LongWritable to a long
                    sum += v2.get();
                }
                v3.set(sum);
                // write out k2 and v3
                context.write(k2, v3);
            }
        }
    }

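    // 2. Assemble the custom MyMapper and MyReducer into one job
    Configuration conf = new Configuration();
    String jobName = WordCountTest.class.getSimpleName();

    // 1. Write the job first; it needs conf and jobName, so create those beforehand
    Job job = Job.getInstance(conf, jobName);

    // *13. Required when the program is packaged and run as a jar.
    // It must be called before the job is submitted; job settings can no longer be changed afterwards.
    job.setJarByClass(WordCountTest.class);

    // 3. Read the input from HDFS
    FileInputFormat.setInputPaths(job, new Path("hdfs://neusoft-master:9000/data/hellodemo"));

    // 4. Specify the class that parses the input into <k1,v1> pairs
    job.setInputFormatClass(TextInputFormat.class);

    // 5. Specify the custom mapper class
    job.setMapperClass(MyMapper.class);

    // 6. Specify the map output types <k2,v2>
    job.setMapOutputKeyClass(Text.class);
    job.setMapOutputValueClass(LongWritable.class);

    // 7. Partitioning (1 partition by default), sorting, grouping and combining use the defaults

    // Reduce steps follow
    // 8. Specify the custom reducer class
    job.setReducerClass(MyReducer.class);

    // 9. Specify the output types <k3,v3>
    job.setOutputKeyClass(Text.class);
    job.setOutputValueClass(LongWritable.class);

    // 10. Specify the class that writes <k3,v3>
    job.setOutputFormatClass(TextOutputFormat.class);

    // 11. Specify the output path
    FileOutputFormat.setOutputPath(job, new Path("hdfs://neusoft-master:9000/out1"));

    // 12. Submit the MapReduce job to the ResourceManager and wait for it to finish
    job.waitForCompletion(true);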

Mapper task

    private static class MyMapper extends Mapper<LongWritable, Text, Text, LongWritable> {
        Text k2 = new Text();
        LongWritable v2 = new LongWritable();

        @Override
        protected void map(LongWritable key, Text value, // three parameters
                Mapper<LongWritable, Text, Text, LongWritable>.Context context)
                throws IOException, InterruptedException {
            // split() is a String method, so convert the Text to a String first
            String line = value.toString();
            // hellodemo separates words with a tab (step 2), so split on "\t"
            String[] splited = line.split("\t");
            for (String word : splited) {
                // word is one word of the current line
                // set k2 and v2, then emit the pair
                k2.set(word);
                v2.set(1L);
                context.write(k2, v2);
            }
        }
    }
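MyMapper's behavior can be tried without a cluster. The following is a plain-Java sketch, not Hadoop code: MapStepDemo and its map method are hypothetical names, and Map.Entry pairs stand in for Text/LongWritable. It applies the same logic to the two lines of hellodemo, assuming they are tab-separated as described in step 2.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

public class MapStepDemo {
    // Mimics MyMapper.map(): one input line in, one (word, 1) pair out per token.
    // Assumption: words are tab-separated, as in the hellodemo file.
    static List<Map.Entry<String, Long>> map(String line) {
        List<Map.Entry<String, Long>> out = new ArrayList<>();
        for (String word : line.split("\t")) {
            out.add(new SimpleEntry<>(word, 1L));
        }
        return out;
    }

    public static void main(String[] args) {
        String[] lines = {"hello\tyou", "hello\tme"}; // the two records of hellodemo
        List<Map.Entry<String, Long>> all = new ArrayList<>();
        for (String line : lines) {
            all.addAll(map(line));
        }
        System.out.println(all); // [hello=1, you=1, hello=1, me=1]
    }
}
```

Two input lines yield four (word, 1) pairs, which agrees with Map input records=2 and Map output records=4 in the job counters. Splitting a tab-separated line on " " instead would return the whole line as a single token, so the delimiter has to match the file.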

Reducer task

    private static class MyReducer extends Reducer<Text, LongWritable, Text, LongWritable> {
        LongWritable v3 = new LongWritable();

        @Override // k2 is a word; v2s holds the counts emitted for that word, so iterate over it
        protected void reduce(Text k2, Iterable<LongWritable> v2s, // three parameters
                Reducer<Text, LongWritable, Text, LongWritable>.Context context)
                throws IOException, InterruptedException {
            long sum = 0;
            for (LongWritable v2 : v2s) {
                // LongWritable is a Hadoop type and sum is a Java long:
                // get() converts the LongWritable to a long
                sum += v2.get();
            }
            v3.set(sum);
            // write out k2 and v3
            context.write(k2, v3);
        }
    }

    } // end of class WordCountTest

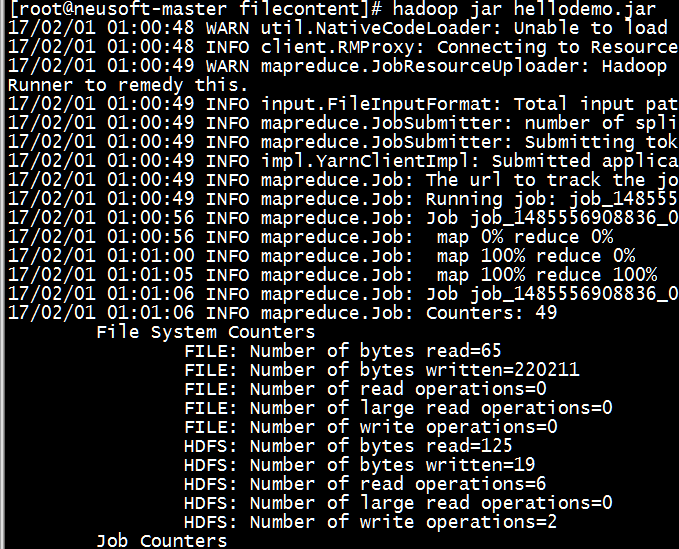
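Between map and reduce, the framework sorts the map output by key and groups the values of each key; MyReducer then sums each group. Below is a dependency-free sketch of those two stages. ShuffleReduceDemo, shuffle and reduce are hypothetical names, and a TreeMap stands in for the framework's sort-and-group step (Text keys compare bytewise; for these ASCII words that matches String order).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class ShuffleReduceDemo {
    // Mimics the shuffle: each word carries the value 1 emitted by the mapper,
    // and all values for the same word end up in one sorted group.
    static Map<String, List<Long>> shuffle(List<String> words) {
        Map<String, List<Long>> groups = new TreeMap<>();
        for (String w : words) {
            groups.computeIfAbsent(w, k -> new ArrayList<>()).add(1L);
        }
        return groups;
    }

    // Mimics MyReducer.reduce(): sum the values of one key.
    static long reduce(Iterable<Long> v2s) {
        long sum = 0;
        for (long v2 : v2s) {
            sum += v2;
        }
        return sum;
    }

    public static void main(String[] args) {
        // Map output for hellodemo: 4 records
        List<String> mapped = List.of("hello", "you", "hello", "me");
        Map<String, List<Long>> groups = shuffle(mapped);
        for (Map.Entry<String, List<Long>> e : groups.entrySet()) {
            // TextOutputFormat separates key and value with a tab
            System.out.println(e.getKey() + "\t" + reduce(e.getValue()));
        }
    }
}
```

It prints hello 2, me 1 and you 1 (tab-separated), the same three lines that end up in /out1/part-r-00000, and its three groups correspond to Reduce input groups=3 in the counters.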

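5. Package the program as a jar with the main class specified (so hadoop jar can find it without a class name on the command line), then run it on Linux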

    [root@neusoft-master filecontent]# hadoop jar hellodemo.jar
    17/02/01 01:00:48 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    17/02/01 01:00:48 INFO client.RMProxy: Connecting to ResourceManager at neusoft-master/192.168.191.130:8080
    17/02/01 01:00:49 WARN mapreduce.JobResourceUploader: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.
    17/02/01 01:00:49 INFO input.FileInputFormat: Total input paths to process : 1
    17/02/01 01:00:49 INFO mapreduce.JobSubmitter: number of splits:1
    17/02/01 01:00:49 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1485556908836_0003
    17/02/01 01:00:49 INFO impl.YarnClientImpl: Submitted application application_1485556908836_0003
    17/02/01 01:00:49 INFO mapreduce.Job: The url to track the job: http://neusoft-master:8088/proxy/application_1485556908836_0003/
    17/02/01 01:00:49 INFO mapreduce.Job: Running job: job_1485556908836_0003
    17/02/01 01:00:56 INFO mapreduce.Job: Job job_1485556908836_0003 running in uber mode : false
    17/02/01 01:00:56 INFO mapreduce.Job: map 0% reduce 0%
    17/02/01 01:01:00 INFO mapreduce.Job: map 100% reduce 0%
    17/02/01 01:01:05 INFO mapreduce.Job: map 100% reduce 100%
    17/02/01 01:01:06 INFO mapreduce.Job: Job job_1485556908836_0003 completed successfully
    17/02/01 01:01:06 INFO mapreduce.Job: Counters: 49
        File System Counters
            FILE: Number of bytes read=65
            FILE: Number of bytes written=220211
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=125
            HDFS: Number of bytes written=19
            HDFS: Number of read operations=6
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=2
        Job Counters
            Launched map tasks=1
            Launched reduce tasks=1
            Data-local map tasks=1
            Total time spent by all maps in occupied slots (ms)=2753
            Total time spent by all reduces in occupied slots (ms)=3020
            Total time spent by all map tasks (ms)=2753
            Total time spent by all reduce tasks (ms)=3020
            Total vcore-seconds taken by all map tasks=2753
            Total vcore-seconds taken by all reduce tasks=3020
            Total megabyte-seconds taken by all map tasks=2819072
            Total megabyte-seconds taken by all reduce tasks=3092480
        Map-Reduce Framework
            Map input records=2
            Map output records=4
            Map output bytes=51
            Map output materialized bytes=65
            Input split bytes=106
            Combine input records=0
            Combine output records=0
            Reduce input groups=3
            Reduce shuffle bytes=65
            Reduce input records=4
            Reduce output records=3
            Spilled Records=8
            Shuffled Maps =1
            Failed Shuffles=0
            Merged Map outputs=1
            GC time elapsed (ms)=40
            CPU time spent (ms)=1550
            Physical memory (bytes) snapshot=448503808
            Virtual memory (bytes) snapshot=3118854144
            Total committed heap usage (bytes)=319291392
        Shuffle Errors
            BAD_ID=0
            CONNECTION=0
            IO_ERROR=0
            WRONG_LENGTH=0
            WRONG_MAP=0
            WRONG_REDUCE=0
        File Input Format Counters
            Bytes Read=19
        File Output Format Counters
            Bytes Written=19
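Several of these counters can be checked by hand. The sketch below assumes Hadoop's standard serialization: a Text is written as a variable-length length prefix (one byte for lengths under 128) followed by its UTF-8 bytes, a LongWritable as 8 bytes, and TextOutputFormat writes key, a tab, value and a newline per record. Under those assumptions the four map output records add up to Map output bytes=51, the two input lines to Bytes Read=19, and the three result lines to Bytes Written=19. CounterCheck and its methods are hypothetical names.

```java
import java.nio.charset.StandardCharsets;

public class CounterCheck {
    // Serialized size of one map output record: Text key + LongWritable value.
    // Assumes the key is shorter than 128 bytes, so its length prefix takes 1 byte.
    static int mapRecordBytes(String key) {
        int keyBytes = key.getBytes(StandardCharsets.UTF_8).length;
        return 1 + keyBytes + 8;
    }

    // Size of one output line written by TextOutputFormat: key \t value \n
    static int outputLineBytes(String key, long value) {
        return (key + "\t" + value + "\n").getBytes(StandardCharsets.UTF_8).length;
    }

    public static void main(String[] args) {
        int mapOutputBytes = 0;
        for (String word : new String[] {"hello", "you", "hello", "me"}) {
            mapOutputBytes += mapRecordBytes(word);
        }
        int inputBytes = ("hello\tyou\n" + "hello\tme\n").getBytes(StandardCharsets.UTF_8).length;
        int bytesWritten = outputLineBytes("hello", 2) + outputLineBytes("me", 1) + outputLineBytes("you", 1);
        System.out.println("Map output bytes=" + mapOutputBytes); // 51
        System.out.println("Bytes Read=" + inputBytes);           // 19
        System.out.println("Bytes Written=" + bytesWritten);      // 19
    }
}
```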

*********************

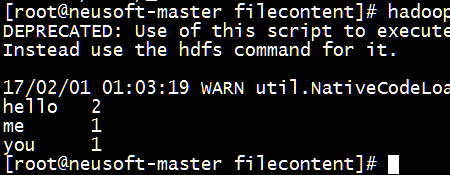

6. View the output files

    [root@neusoft-master filecontent]# hadoop dfs -ls /out1
    DEPRECATED: Use of this script to execute hdfs command is deprecated.
    Instead use the hdfs command for it.
    17/02/01 01:01:45 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    Found 2 items
    -rw-r--r-- 3 root supergroup 0 2017-02-01 01:01 /out1/_SUCCESS
    -rw-r--r-- 3 root supergroup 19 2017-02-01 01:01 /out1/part-r-00000

    [root@neusoft-master filecontent]# hadoop dfs -text /out1/part-r-00000
    DEPRECATED: Use of this script to execute hdfs command is deprecated.
    Instead use the hdfs command for it.
    17/02/01 01:03:19 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    hello 2
    me 1
    you 1

7. Analysis of the results

Comparing the file uploaded to HDFS with the output, the result is correct: "hello" appears twice, and "me" and "you" once each.

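Note: some of the steps in main are optional, and this deserves particular attention.

Steps 4, 6 and 10 can be omitted (the reasons are given in the comments on those steps below). Step 8 cannot: without it, the default identity Reducer would write every (word, 1) pair without summing them.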

    public static void main(String[] args) throws Exception {
        // Must be set: the custom mapper and reducer classes, the input and output paths,
        // and the output types <k3,v3>

        // 2. Assemble the custom MyMapper and MyReducer into one job
        Configuration conf = new Configuration();
        String jobName = WordCountTest.class.getSimpleName();

        // 1. Write the job first; it needs conf and jobName, so create those beforehand
        Job job = Job.getInstance(conf, jobName);

        // *13. Required when the program is packaged and run as a jar
        job.setJarByClass(WordCountTest.class);

        // 3. Read the input from HDFS (FileInputFormat comes from org.apache.hadoop.mapreduce.lib.input)
        FileInputFormat.setInputPaths(job, new Path("hdfs://neusoft-master:9000/data/hellodemo"));

        // 4. Specify the class that parses the input into <k1,v1> pairs
        // *Optional: the default input format is already TextInputFormat
        job.setInputFormatClass(TextInputFormat.class);

        // 5. Specify the custom mapper class
        job.setMapperClass(MyMapper.class);

        // 6. Specify the map output types <k2,v2>
        // *Optional: when <k2,v2> has the same types as <k3,v3>, the <k2,v2> types need not be set
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(LongWritable.class);

        // 7. Partitioning (1 partition by default), sorting, grouping and combining use the defaults

        // Reduce steps follow
        // 8. Specify the custom reducer class
        job.setReducerClass(MyReducer.class);

        // 9. Specify the output types <k3,v3>
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);

        // 10. Specify the class that writes <k3,v3>
        // *Optional: the default output format is already TextOutputFormat
        job.setOutputFormatClass(TextOutputFormat.class);

        // 11. Specify the output path
        FileOutputFormat.setOutputPath(job, new Path("hdfs://neusoft-master:9000/out1"));

        // 12. Submit the MapReduce job to the ResourceManager and wait for it to finish
        job.waitForCompletion(true);
    }
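The fallback rules behind the optional steps, and the reason step 9 has to stay, can be modeled in a few lines. This is a hypothetical sketch, not Hadoop code: JobSettings and its effective*() methods are made-up names, and class names are plain strings so it runs without Hadoop. The defaults it encodes are assumed to match Hadoop's: TextInputFormat and TextOutputFormat, map output types falling back to the job output types, and job output types defaulting to LongWritable/Text.

```java
public class JobSettings {
    // Hypothetical model, not Hadoop's API: null means "not set by the user".
    String inputFormat, outputFormat, outputKey, outputValue, mapOutputKey, mapOutputValue;

    // Assumed defaults for the input/output formats and the job output types
    String effectiveInputFormat()  { return inputFormat != null ? inputFormat : "TextInputFormat"; }
    String effectiveOutputFormat() { return outputFormat != null ? outputFormat : "TextOutputFormat"; }
    String effectiveOutputKey()    { return outputKey != null ? outputKey : "LongWritable"; }
    String effectiveOutputValue()  { return outputValue != null ? outputValue : "Text"; }

    // Map output types fall back to the final output types <k3,v3>
    String effectiveMapOutputKey()   { return mapOutputKey != null ? mapOutputKey : effectiveOutputKey(); }
    String effectiveMapOutputValue() { return mapOutputValue != null ? mapOutputValue : effectiveOutputValue(); }

    public static void main(String[] args) {
        JobSettings s = new JobSettings();
        // Only step 9 is applied; steps 4, 6 and 10 are skipped
        s.outputKey = "Text";
        s.outputValue = "LongWritable";
        System.out.println(s.effectiveInputFormat() + " "
                + s.effectiveMapOutputKey() + "/" + s.effectiveMapOutputValue() + " "
                + s.effectiveOutputFormat());
        // prints: TextInputFormat Text/LongWritable TextOutputFormat
    }
}
```

With step 9 set and steps 4, 6 and 10 left out, every resolved value equals what the full main sets explicitly. Dropping step 9 instead would leave both the output and map output types at LongWritable/Text, which would not match the Text/LongWritable pairs that MyMapper and MyReducer emit.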

END