第一部分.Hadoop计数器简述

hadoop计数器:

可以让开发人员以全局的视角来审查程序的运行情况以及各项指标,及时做出错误诊断并进行相应处理。 内置计数器(MapReduce相关、文件系统相关和作业调度相关),

也可以通过http://master:50030/jobdetails.jsp查看

MapReduce的输出:

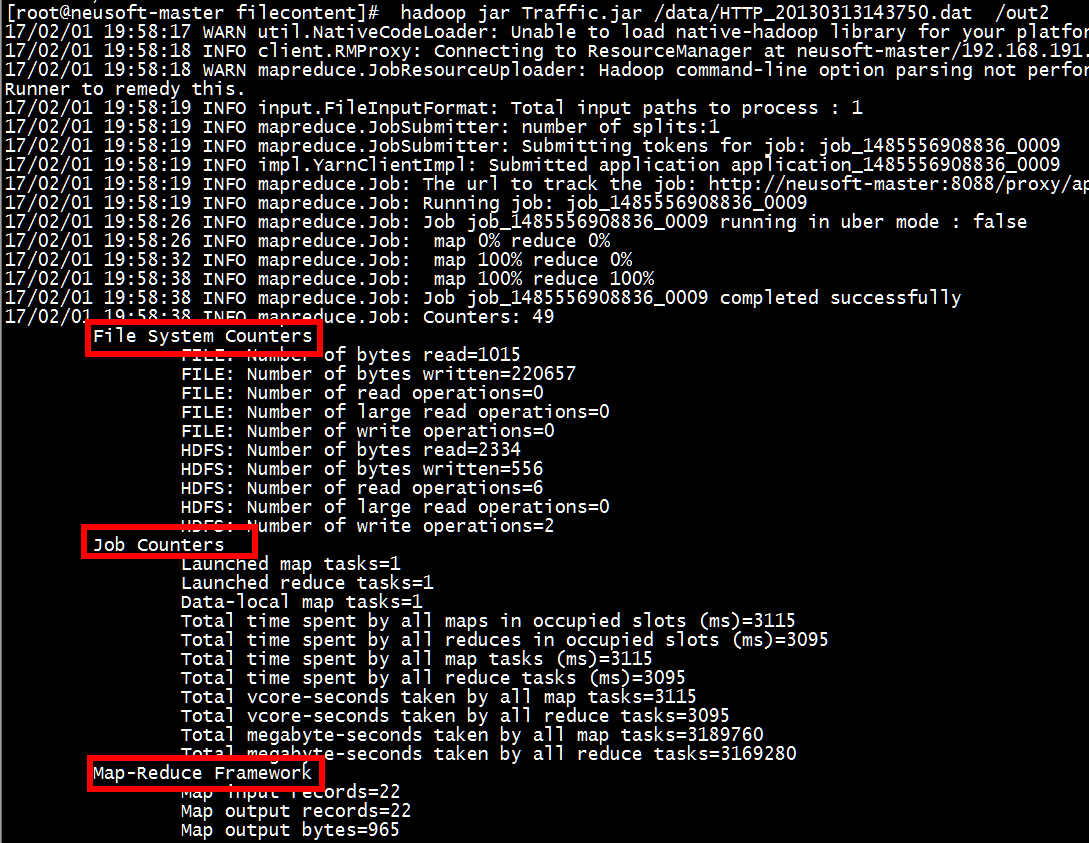

运行jar包的详细步骤:

[root@neusoft-master filecontent]# hadoop jar Traffic.jar /data/HTTP_20130313143750.dat /out2

17/02/01 19:58:17 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/02/01 19:58:18 INFO client.RMProxy: Connecting to ResourceManager at neusoft-master/192.168.191.130:8080

17/02/01 19:58:18 WARN mapreduce.JobResourceUploader: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

17/02/01 19:58:19 INFO input.FileInputFormat: Total input paths to process : 1

17/02/01 19:58:19 INFO mapreduce.JobSubmitter: number of splits:1

17/02/01 19:58:19 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1485556908836_0009

17/02/01 19:58:19 INFO impl.YarnClientImpl: Submitted application application_1485556908836_0009

17/02/01 19:58:19 INFO mapreduce.Job: The url to track the job: http://neusoft-master:8088/proxy/application_1485556908836_0009/

17/02/01 19:58:19 INFO mapreduce.Job: Running job: job_1485556908836_0009

17/02/01 19:58:26 INFO mapreduce.Job: Job job_1485556908836_0009 running in uber mode : false

17/02/01 19:58:26 INFO mapreduce.Job: map 0% reduce 0%

17/02/01 19:58:32 INFO mapreduce.Job: map 100% reduce 0%

17/02/01 19:58:38 INFO mapreduce.Job: map 100% reduce 100%

17/02/01 19:58:38 INFO mapreduce.Job: Job job_1485556908836_0009 completed successfully

17/02/01 19:58:38 INFO mapreduce.Job: Counters: 49

File System Counters 1.文件系统计数器,由两类组成,FILE类是文件系统与Linux(磁盘)交互的类,HDFS是文件系统与HDFS交互的类(本质上都是与磁盘数据打交道)

FILE: Number of bytes read=1015

FILE: Number of bytes written=220657

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=2334

HDFS: Number of bytes written=556

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters 2.作业计数器 3.框架本身的计数器

Launched map tasks=1 加载map任务

Launched reduce tasks=1 加载reduce任务

Data-local map tasks=1 数据本地化

Total time spent by all maps in occupied slots (ms)=3115 所有map任务在被占用的slots中所用的时间------在yarn中,程序打成jar包提交给resourcemanager,nodemanager向resourcemanager申请资源,然后在nodemanager上运行, 而划分资源(cpu,io,网络,磁盘)的单位叫容器container,每个节点上资源不是无限的,因此应该将任务划分为不同的容器,job在运行的时候可以申请job的数量,之后由nodemanager确定哪些任务可以执行map,那些可以执行reduce等,从而由slot表示,表示槽的概念。任务过来就占用一个槽。

Total time spent by all reduces in occupied slots (ms)=3095 所有reduce任务在被占用的slots中所用的时间

Total time spent by all map tasks (ms)=3115 所有map执行时间

Total time spent by all reduce tasks (ms)=3095 所有reduce执行的时间

Total vcore-seconds taken by all map tasks=3115

Total vcore-seconds taken by all reduce tasks=3095

Total megabyte-seconds taken by all map tasks=3189760

Total megabyte-seconds taken by all reduce tasks=3169280

Map-Reduce Framework

Map input records=22 //输入的行数 或键值对数目

Map output records=22 // 输出的键值对

Map output bytes=965

Map output materialized bytes=1015

Input split bytes=120

Combine input records=0 规约 第五步

Combine output records=0

Reduce input groups=21 输入的是21个组

Reduce shuffle bytes=1015

Reduce input records=22 输入的行数或键值对数目

Reduce output records=21

Spilled Records=44

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=73

CPU time spent (ms)=1800

Physical memory (bytes) snapshot=457379840

Virtual memory (bytes) snapshot=3120148480

Total committed heap usage (bytes)=322437120

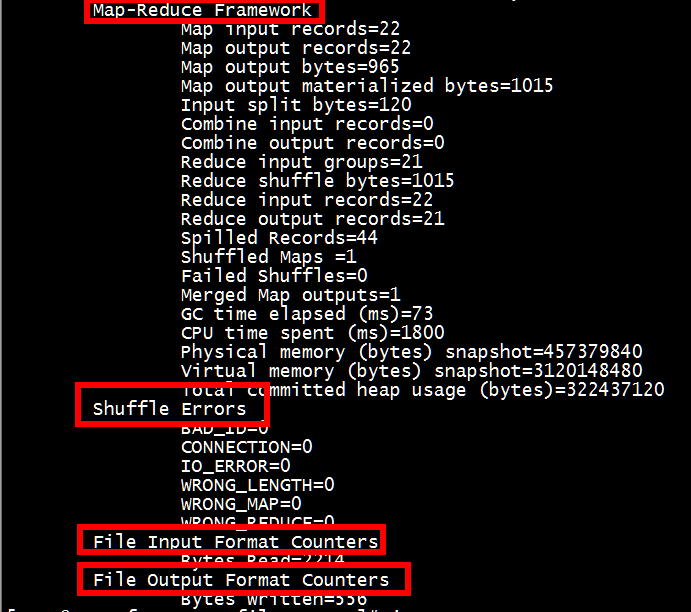

Shuffle Errors 4.shuffle错误

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters 5.输入计数器

Bytes Read=2214

File Output Format Counters 6.输出的计数器

Bytes Written=556

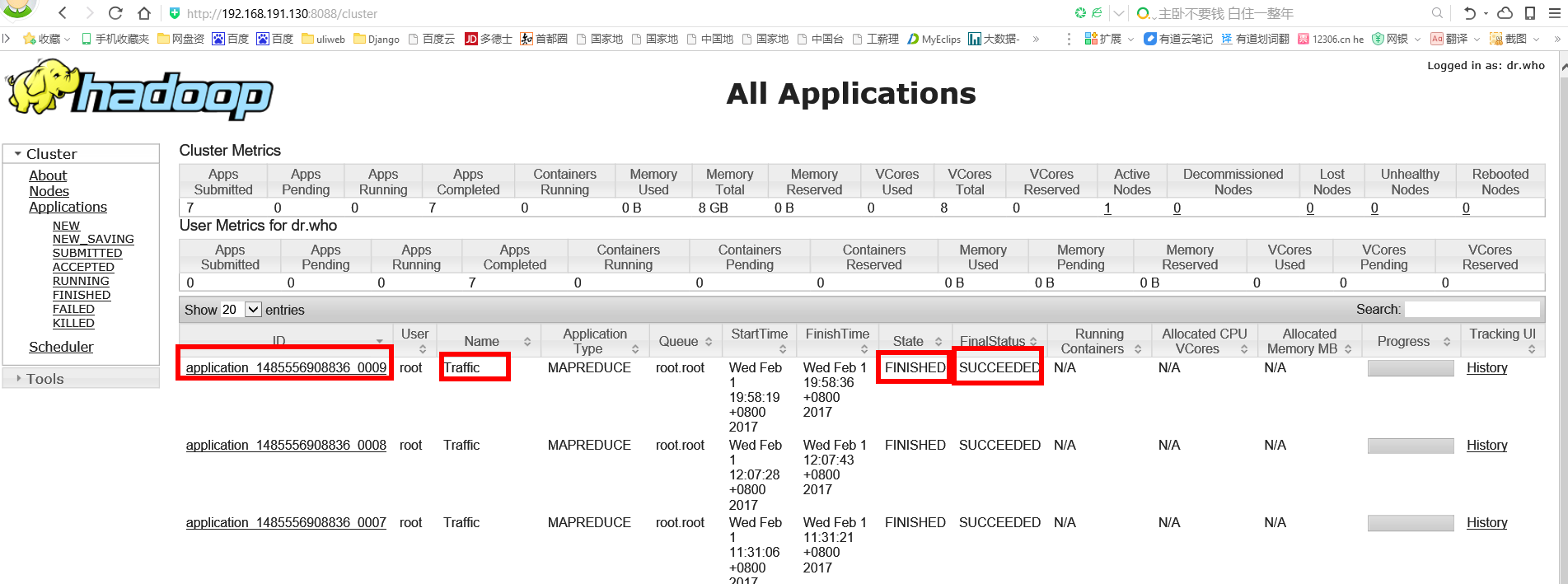

运行结果截图:

通过查看http://neusoft-master:8088/可得到详细的job信息

上述页面是resourcemanager的集群,上面显示了所有的application应用用户层面看是job作业,resourcemanager层面看是applicaton应用

第二部分 自定义计数器

核心代码:

//计数器使用~解决:判断下输入文件中有多少hello Counter counterHello = context.getCounter("Sensitive words","hello"); //假设hello为敏感词 if(line != null && line.contains("hello")){ counterHello.increment(1L); } //计数器代码结束

示例代码:

1 package Mapreduce; 2 3 import java.io.IOException; 4 5 import org.apache.hadoop.conf.Configuration; 6 import org.apache.hadoop.fs.Path; 7 import org.apache.hadoop.io.LongWritable; 8 import org.apache.hadoop.io.Text; 9 import org.apache.hadoop.mapreduce.Counter; 10 import org.apache.hadoop.mapreduce.Job; 11 import org.apache.hadoop.mapreduce.Mapper; 12 import org.apache.hadoop.mapreduce.Reducer; 13 import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; 14 import org.apache.hadoop.mapreduce.lib.input.TextInputFormat; 15 import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; 16 import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat; 17 18 /** 19 * 20 * 计数器的使用及测试 21 */ 22 public class MyCounterTest { 23 public static void main(String[] args) throws Exception { 24 //必须要传递的是自定的mapper和reducer的类,输入输出的路径必须指定,输出的类型<k3,v3>必须指定 25 //2将自定义的MyMapper和MyReducer组装在一起 26 Configuration conf=new Configuration(); 27 String jobName=MyCounterTest.class.getSimpleName(); 28 //1首先寫job,知道需要conf和jobname在去創建即可 29 Job job = Job.getInstance(conf, jobName); 30 31 //*13最后,如果要打包运行改程序,则需要调用如下行 32 job.setJarByClass(MyCounterTest.class); 33 34 //3读取HDFS內容:FileInputFormat在mapreduce.lib包下 35 FileInputFormat.setInputPaths(job, new Path("hdfs://neusoft-master:9000/data/hellodemo")); 36 //4指定解析<k1,v1>的类(谁来解析键值对) 37 //*指定解析的类可以省略不写,因为设置解析类默认的就是TextInputFormat.class 38 job.setInputFormatClass(TextInputFormat.class); 39 //5指定自定义mapper类 40 job.setMapperClass(MyMapper.class); 41 //6指定map输出的key2的类型和value2的类型 <k2,v2> 42 //*下面两步可以省略,当<k3,v3>和<k2,v2>类型一致的时候,<k2,v2>类型可以不指定 43 job.setMapOutputKeyClass(Text.class); 44 job.setMapOutputValueClass(LongWritable.class); 45 //7分区(默认1个),排序,分组,规约 采用 默认 46 47 //接下来采用reduce步骤 48 //8指定自定义的reduce类 49 job.setReducerClass(MyReducer.class); 50 //9指定输出的<k3,v3>类型 51 job.setOutputKeyClass(Text.class); 52 job.setOutputValueClass(LongWritable.class); 53 //10指定输出<K3,V3>的类 54 //*下面这一步可以省 55 job.setOutputFormatClass(TextOutputFormat.class); 56 //11指定输出路径 57 FileOutputFormat.setOutputPath(job, new Path("hdfs://neusoft-master:9000/out3")); 58 59 //12写的mapreduce程序要交给resource manager运行 60 job.waitForCompletion(true); 61 } 62 private static class MyMapper extends Mapper<LongWritable, Text, Text,LongWritable>{ 63 Text k2 = new Text(); 64 LongWritable v2 = new LongWritable(); 65 @Override 66 protected void map(LongWritable key, Text value,//三个参数 67 Mapper<LongWritable, Text, Text, LongWritable>.Context context) 68 throws IOException, InterruptedException { 69 String line = value.toString(); 70 //计数器使用~解决:判断下输入文件中有多少hello 这里仅仅是举例,如果有很多的hello可能显示的还是如此结果 71 Counter counterHello = context.getCounter("Sensitive words","hello");//假设hello为敏感词 72 if(line != null && line.contains("hello")){ 73 counterHello.increment(1L); 74 } 75 //计数器代码结束 76 String[] splited = line.split(" ");//因为split方法属于string字符的方法,首先应该转化为string类型在使用 77 for (String word : splited) { 78 //word表示每一行中每个单词 79 //对K2和V2赋值 80 k2.set(word); 81 v2.set(1L); 82 context.write(k2, v2); 83 } 84 } 85 } 86 private static class MyReducer extends Reducer<Text, LongWritable, Text, LongWritable> { 87 LongWritable v3 = new LongWritable(); 88 @Override //k2表示单词,v2s表示不同单词出现的次数,需要对v2s进行迭代 89 protected void reduce(Text k2, Iterable<LongWritable> v2s, //三个参数 90 Reducer<Text, LongWritable, Text, LongWritable>.Context context) 91 throws IOException, InterruptedException { 92 long sum =0; 93 for (LongWritable v2 : v2s) { 94 //LongWritable本身是hadoop类型,sum是java类型 95 //首先将LongWritable转化为字符串,利用get方法 96 sum+=v2.get(); 97 } 98 v3.set(sum); 99 //将k2,v3写出去 100 context.write(k2, v3); 101 } 102 } 103 }

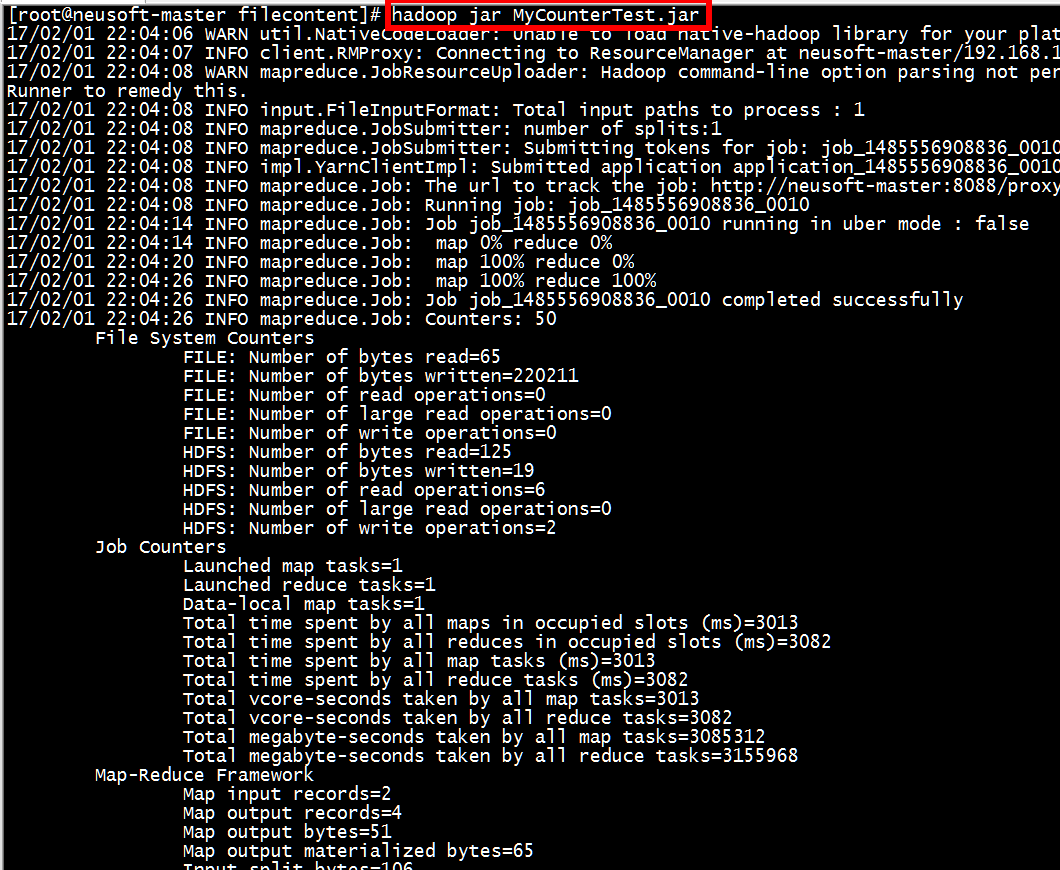

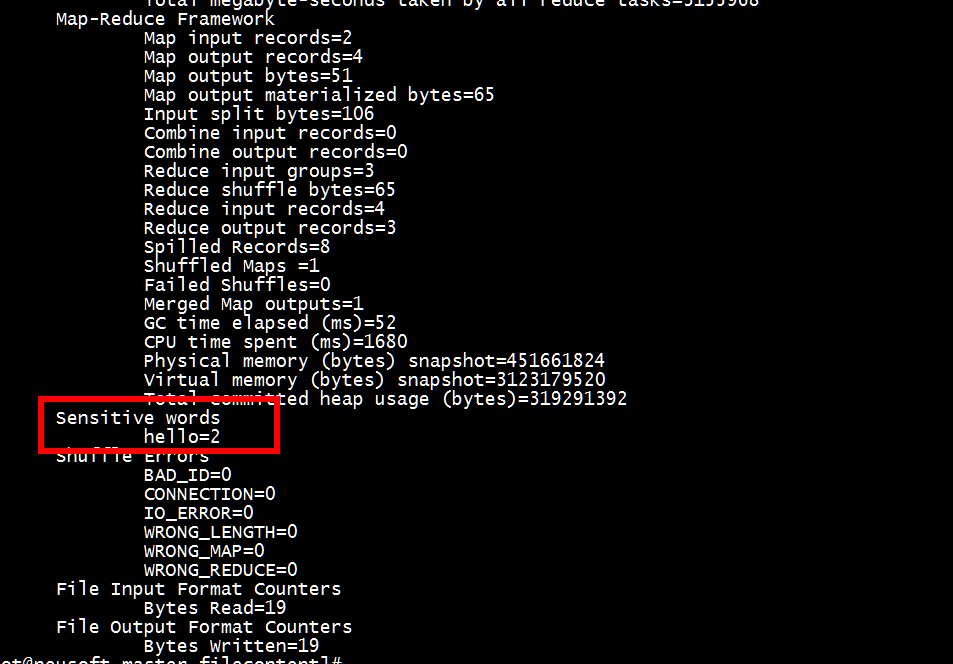

运行:

从上图中可以看到Sensitive words里面显示了hello的个数。

第三部分 总结:

问:partition的目的是什么?

答:多个reducer task实现并行计算,节省运行实际,提高job执行效率。

问:什么时候使用自定义排序?

答:.....

问:如何使用自定义排序?

答:自定义个k2类型,覆盖compareTo(...)方法

问:什么时候使用自定义分组?

答:当k2的compareTo方法不适合业务的时候。

问:如何使用自定义分组?

答:job.setGroupingComparatorClass(...);

问:使用combiner有什么好处?

答:在map端执行reduce操作,可以减少map最终的数据量,减少传输到reducer的数据量,减轻网络压力。

问:为什么combiner不是默认配置?

答:因为有个算法不适合使用combiner。什么样的算法不适合?不符合幂等性。

问:为什么在map端执行了reduce操作,还需要在reduce端再次执行哪?

答:因为map端执行的是局部reduce操作,在reduce端执行全局reduce操作。