Download Hadoop (2.9.2)

https://hadoop.apache.org/releases.html

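Preparation

Disable the firewall (or open the required ports instead)

# Stop the firewall

systemctl stop firewalld

# Disable firewall autostart at boot

systemctl disable firewalld

Edit the hosts file so that the hostname hadoop maps to this machine's IP address (not 127.0.0.1)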

vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
xxx.xxx.xxx.xxx hadoop
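A quick way to confirm the mapping is to look the name up in the hosts file and reject loopback addresses. The following is a minimal sketch, not part of the original setup; the function names `lookup_host` and `check_host` are hypothetical helpers introduced here for illustration.

```shell
# Hypothetical helper: print the IP that a hosts-format file maps to a name.
# $1 = hostname, $2 = hosts file (defaults to /etc/hosts)
lookup_host() {
  awk -v name="$1" '
    { sub(/#.*/, "") }   # drop comments before matching
    { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
  ' "${2:-/etc/hosts}"
}

# Hypothetical helper: succeed only if the name maps to a non-loopback IP.
check_host() {
  ip=$(lookup_host "$1" "${2:-/etc/hosts}")
  case "$ip" in
    "")        echo "missing";  return 1 ;;
    127.*|::1) echo "loopback"; return 1 ;;
    *)         echo "ok $ip" ;;
  esac
}

# Usage: check_host hadoop
```

If the check reports `loopback` or `missing`, fix `/etc/hosts` before continuing, since the later configuration files refer to the host by the name `hadoop`.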

Install the JDK

https://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

# Extract
tar -zxf /opt/jdk-8u202-linux-x64.tar.gz -C /opt/

# Configure environment variables
vim /etc/profile

# JAVA_HOME
export JAVA_HOME=/opt/jdk1.8.0_202/
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$CLASSPATH
export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH

# Reload environment variables
source /etc/profile

# Verify
java -version
# java version "1.8.0_202"
# Java(TM) SE Runtime Environment (build 1.8.0_202-b08)
# Java HotSpot(TM) 64-Bit Server VM (build 25.202-b08, mixed mode)

Install Hadoop

# Extract
tar -zxf /opt/hadoop-2.9.2-snappy-64.tar.gz -C /opt/

# Configure environment variables
vim /etc/profile

# HADOOP_HOME
export HADOOP_HOME=/opt/hadoop-2.9.2
export PATH=$PATH:$HADOOP_HOME/bin
export PATH=$PATH:$HADOOP_HOME/sbin

# Reload environment variables
source /etc/profile

# Verify
hadoop version
# This is a self-compiled build, so your output may differ
# Hadoop 2.9.2
# Subversion Unknown -r Unknown
# Compiled by root on 2018-12-16T09:39Z
# Compiled with protoc 2.5.0
# From source with checksum 3a9939967262218aa556c684d107985
# This command was run using /opt/hadoop-2.9.2/share/hadoop/common/hadoop-common-2.9.2.jar

Configure Hadoop in pseudo-distributed mode

1. Configure HDFS

hadoop-env.sh

vim /opt/hadoop-2.9.2/etc/hadoop/hadoop-env.sh

# Set the JDK path
# The java implementation to use.
export JAVA_HOME=/opt/jdk1.8.0_202/

core-site.xml

<configuration>
  <!-- Address of the HDFS NameNode -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop:9000</value>
  </property>
  <!-- Directory where Hadoop stores the files it generates at runtime -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/hadoopTmp</value>
  </property>
</configuration>
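If you prefer to script this step instead of editing the file by hand, the same two properties can be written with a here-document. This is only a sketch under assumptions: `write_core_site` is a hypothetical helper, and it overwrites any existing `core-site.xml` in the target directory.

```shell
# Hypothetical helper: write a minimal core-site.xml into a config directory.
# $1 = config directory, $2 = NameNode URI, $3 = value for hadoop.tmp.dir
write_core_site() {
  cat > "$1/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>$2</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>$3</value>
  </property>
</configuration>
EOF
}

# Usage with the values from this guide:
# write_core_site /opt/hadoop-2.9.2/etc/hadoop hdfs://hadoop:9000 /opt/hadoopTmp
```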

hdfs-site.xml

<configuration>
  <!-- Number of HDFS block replicas -->
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <!-- Defaults to true: the NameNode resolves the DataNode's hostname when the DataNode registers -->
  <property>
    <name>dfs.namenode.datanode.registration.ip-hostname-check</name>
    <value>true</value>
  </property>
</configuration>

Start HDFS

# Format the NameNode before first use. If it has been formatted before, stop the daemons and delete the existing files first, then format again
hdfs namenode -format

# Start the NameNode
hadoop-daemon.sh start namenode

# Start the DataNode
hadoop-daemon.sh start datanode

# Verify by listing the JVM processes
jps
# 84609 Jps
# 84242 NameNode
# 84471 DataNode
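Rather than reading the `jps` output by eye, the check can be scripted. The sketch below assumes the `PID Name` output format shown above; `missing_daemons` is a hypothetical helper, not a Hadoop command.

```shell
# Hypothetical helper: read `jps` output on stdin and print the names of the
# expected daemons (given as arguments) that are not running.
# Returns 0 when nothing is missing, 1 otherwise.
missing_daemons() {
  out=$(cat)
  missing=""
  for d in "$@"; do
    # The second column of each jps line is the process name.
    printf '%s\n' "$out" \
      | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' \
      || missing="$missing $d"
  done
  printf '%s\n' "${missing# }"
  [ -z "$missing" ]
}

# Usage: jps | missing_daemons NameNode DataNode
```

The same helper can be reused in the later sections by passing the additional daemon names, for example `ResourceManager`, `NodeManager`, and `JobHistoryServer`.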

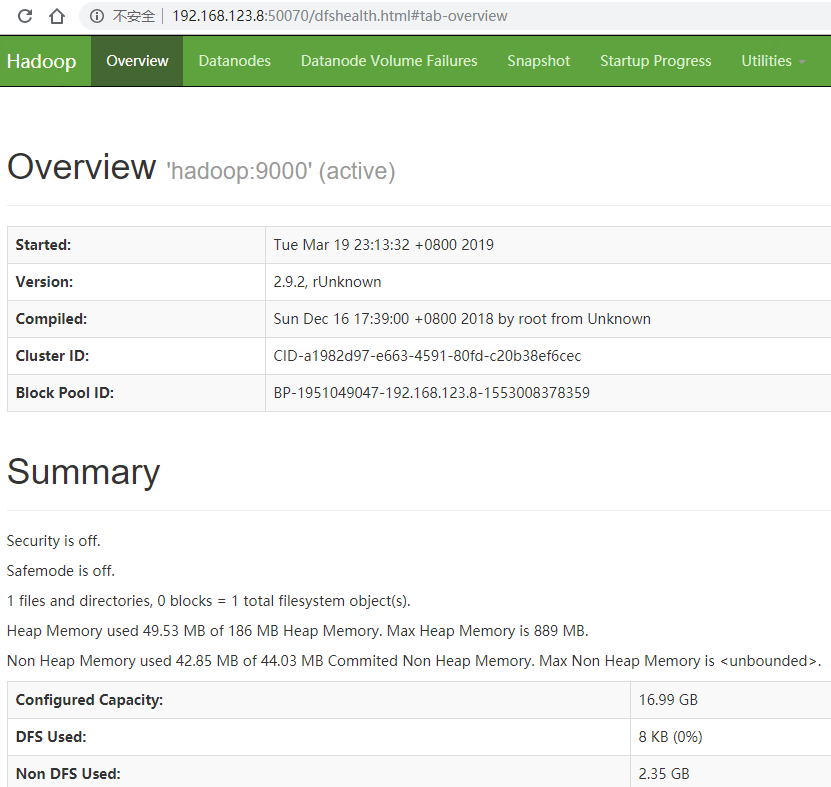

Open the CentOS host's IP address plus the port (50070 by default) in a browser to see the web UI.

2. Configure YARN

yarn-env.sh

vim /opt/hadoop-2.9.2/etc/hadoop/yarn-env.sh

# Set the JDK path
# some Java parameters
export JAVA_HOME=/opt/jdk1.8.0_202/

yarn-site.xml

<configuration>
  <!-- Site specific YARN configuration properties -->
  <!-- How reducers fetch map output -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <!-- Hostname of the YARN ResourceManager -->
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>hadoop</value>
  </property>
</configuration>

Start YARN (HDFS must already be running)

# Start the ResourceManager
yarn-daemon.sh start resourcemanager

# Start the NodeManager
yarn-daemon.sh start nodemanager

# List the JVM processes
jps
# 1604 DataNode
# 1877 ResourceManager
# 3223 Jps
# 1468 NameNode
# 2172 NodeManager

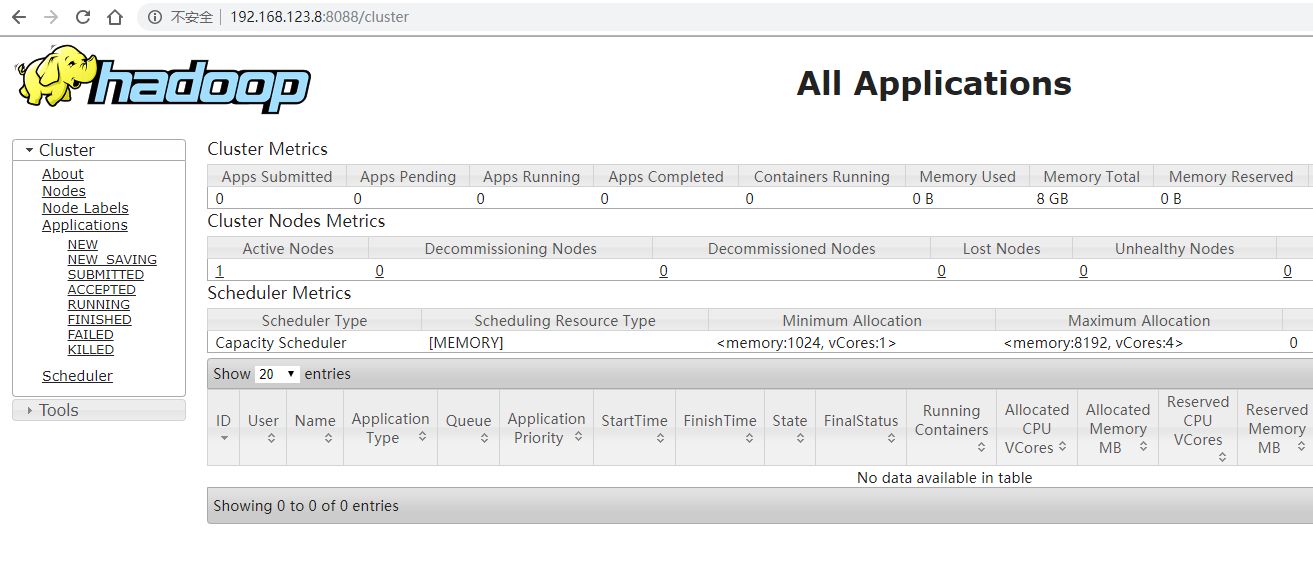

Open the CentOS host's IP address plus the port (8088 by default) in a browser to see the web UI.

3. Configure MapReduce

mapred-env.sh

vim /opt/hadoop-2.9.2/etc/hadoop/mapred-env.sh

# Set the JDK path
export JAVA_HOME=/opt/jdk1.8.0_202/
# when HADOOP_JOB_HISTORYSERVER_HEAPSIZE is not defined, set it.

mapred-site.xml

# Copy the template
cp /opt/hadoop-2.9.2/etc/hadoop/mapred-site.xml.template /opt/hadoop-2.9.2/etc/hadoop/mapred-site.xml

# Edit
vim /opt/hadoop-2.9.2/etc/hadoop/mapred-site.xml

<configuration>
  <!-- Run MapReduce on YARN -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

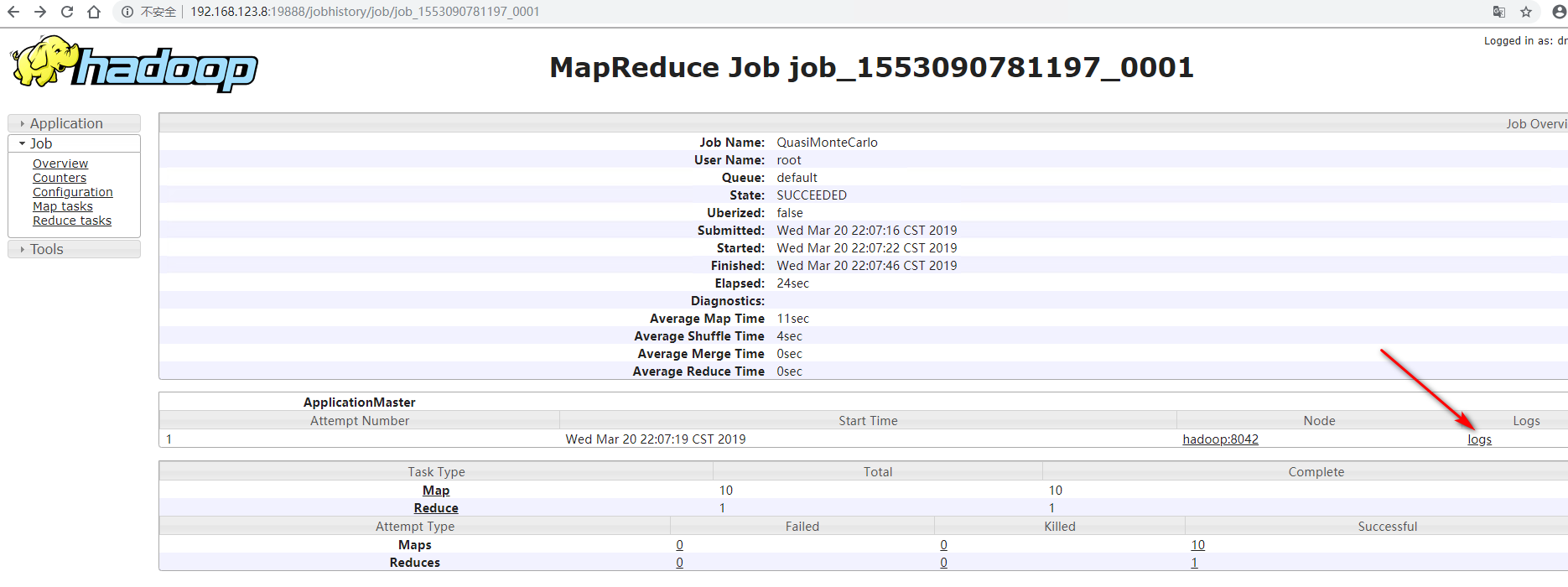

Run a MapReduce job

# Estimate pi
hadoop jar /opt/hadoop-2.9.2/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.2.jar pi 10 100
# Job Finished in 26.542 seconds
# Estimated value of Pi is 3.14800000000000000000
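The two arguments to `pi` are the number of map tasks and the number of samples per map, so the run above uses only 10 × 100 = 1000 points, which is why the estimate is coarse. The bundled example uses a quasi-Monte Carlo method; the sketch below is a plain pseudo-random Monte Carlo estimate in awk, a different and simpler technique that is shown only to illustrate the idea. `estimate_pi` is a hypothetical helper.

```shell
# Hypothetical helper: estimate pi by sampling random points in the unit
# square and counting how many fall inside the quarter circle of radius 1.
# $1 = number of points, $2 = random seed
estimate_pi() {
  awk -v n="$1" -v seed="$2" 'BEGIN {
    srand(seed)
    inside = 0
    for (i = 0; i < n; i++) {
      x = rand(); y = rand()
      if (x * x + y * y <= 1) inside++
    }
    # Area ratio (quarter circle / square) is pi/4.
    printf "%.4f\n", 4 * inside / n
  }'
}

# Usage: estimate_pi 100000 1
```

The exact value printed depends on the awk implementation's random number generator, but the estimate tightens as the number of points grows, just as passing larger values to the Hadoop example does.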

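Open the CentOS host's IP address plus the port (8088 by default) in a browser to see the job record.

Other configuration

4. Configure jobhistory to enable the job history server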

mapred-site.xml

<configuration>
  <!-- Address of the history server -->
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>hadoop:10020</value>
  </property>
  <!-- Address of the history server web UI -->
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>hadoop:19888</value>
  </property>
  <property>
    <name>yarn.log.server.url</name>
    <value>http://hadoop:19888/jobhistory/logs</value>
  </property>
</configuration>

# Start the job history server
mr-jobhistory-daemon.sh start historyserver

# JVM processes
jps
# 7376 NodeManager
# 6903 DataNode
# 18345 Jps
# 6797 NameNode
# 7086 ResourceManager
# 18254 JobHistoryServer

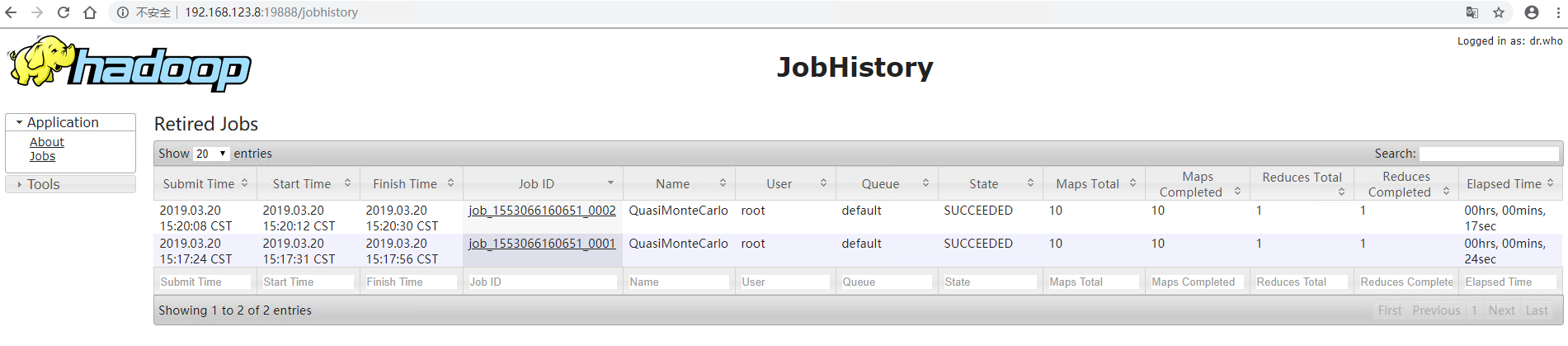

Open the CentOS host's IP address plus the port (19888 by default) in a browser to see the web UI.

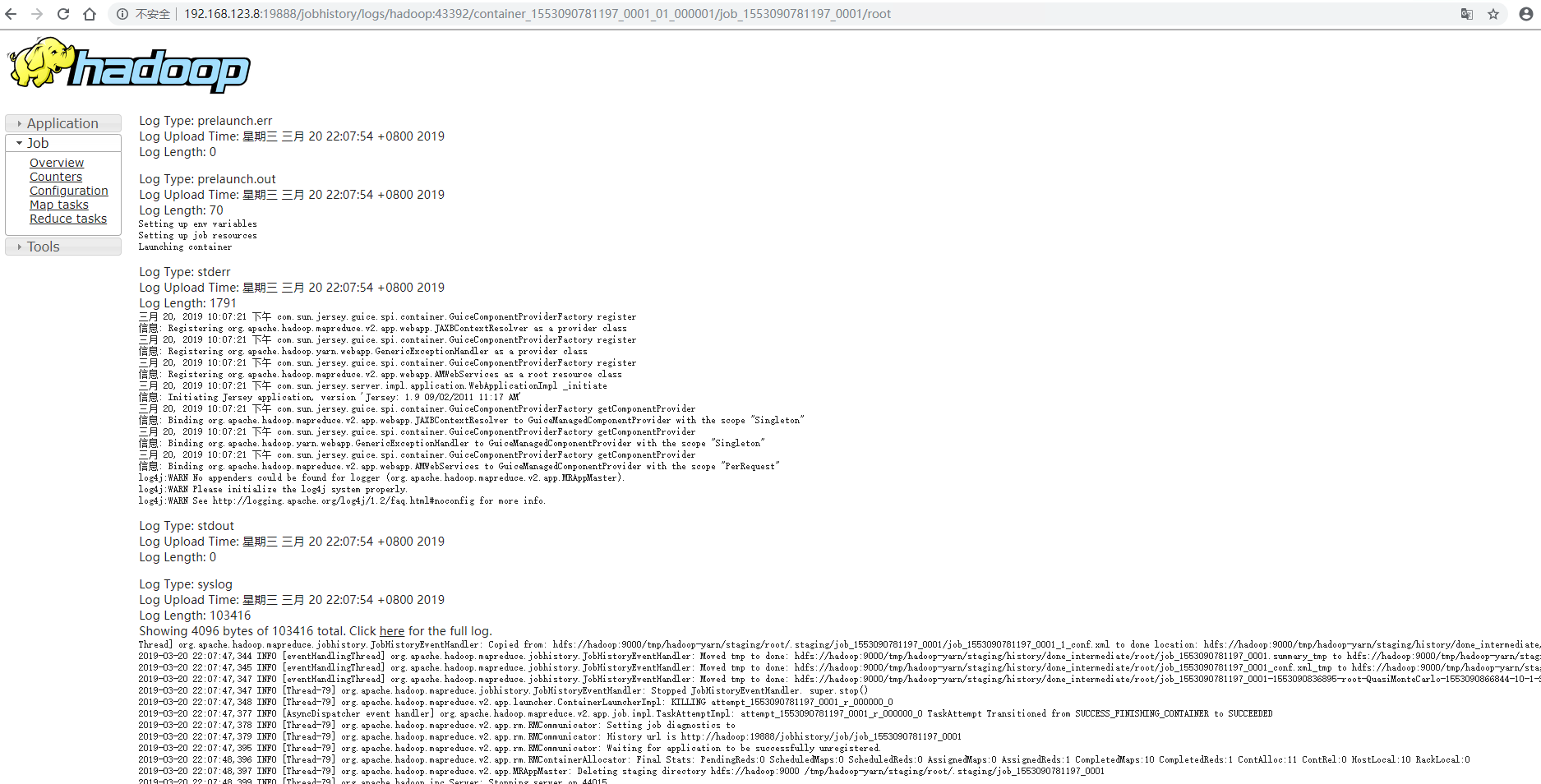

5. Configure log aggregation so that job run details can be viewed in the web UI

yarn-site.xml

<configuration>
  <!-- Enable log aggregation -->
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <!-- Log retention time (7 days) -->
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>604800</value>
  </property>
</configuration>
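The retention value is given in seconds, and 604800 is 7 days. To pick a different retention period, the conversion is simple arithmetic; `days_to_seconds` below is a hypothetical helper shown only for illustration.

```shell
# Hypothetical helper: convert a number of days to seconds.
days_to_seconds() {
  echo $(( $1 * 24 * 60 * 60 ))
}

days_to_seconds 7   # prints 604800
```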

# All services need to be restarted
hadoop-daemon.sh stop namenode
hadoop-daemon.sh stop datanode
yarn-daemon.sh stop resourcemanager
yarn-daemon.sh stop nodemanager
mr-jobhistory-daemon.sh stop historyserver

hadoop-daemon.sh start namenode
hadoop-daemon.sh start datanode
yarn-daemon.sh start resourcemanager
yarn-daemon.sh start nodemanager
mr-jobhistory-daemon.sh start historyserver

# Run another job; its details are now visible
hadoop jar /opt/hadoop-2.9.2/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.9.2.jar pi 10 100
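Since the same five daemons are stopped and started in the same order, the restart can be wrapped in a small function. This is a sketch, assuming the Hadoop `sbin` scripts are on `PATH` as configured earlier; `run_cmd` and `hadoop_daemons` are hypothetical helpers, and `DRY_RUN` is a variable introduced here to print the commands instead of running them.

```shell
# Hypothetical helper: run a command, or only print it when DRY_RUN=1.
run_cmd() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: apply "start" or "stop" to every daemon in this guide,
# in the same order as the manual commands.
hadoop_daemons() {
  action=$1
  run_cmd hadoop-daemon.sh "$action" namenode
  run_cmd hadoop-daemon.sh "$action" datanode
  run_cmd yarn-daemon.sh "$action" resourcemanager
  run_cmd yarn-daemon.sh "$action" nodemanager
  run_cmd mr-jobhistory-daemon.sh "$action" historyserver
}

# Usage:
#   DRY_RUN=1 hadoop_daemons stop    # preview the commands
#   hadoop_daemons stop && hadoop_daemons start
```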

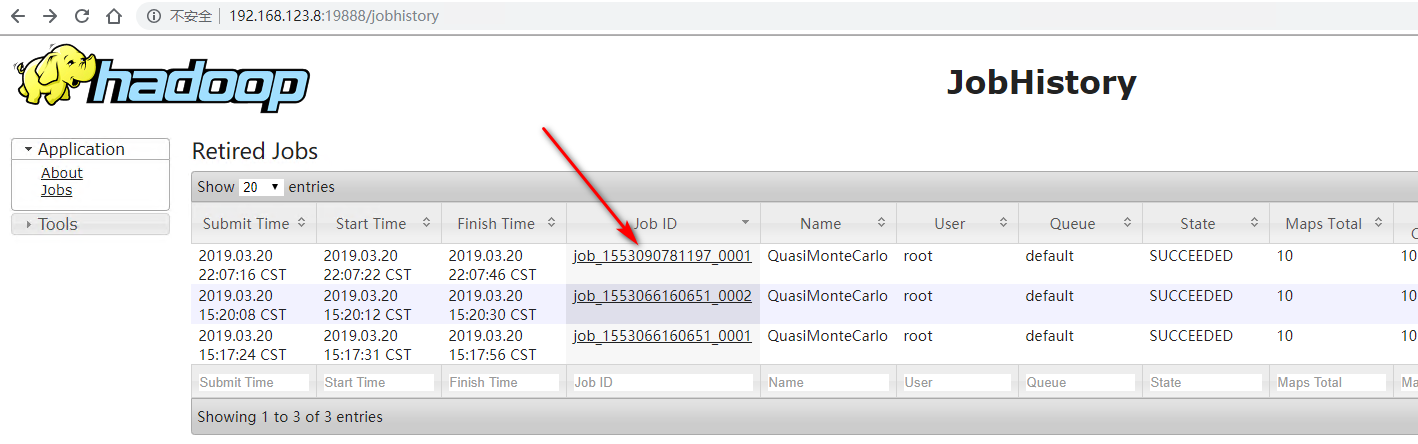

View the details of the job that was just run. Jobs that ran before log aggregation was enabled do not have details available.

http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-common/SingleCluster.html