node1 192.168.1.11 | node2 192.168.1.12 | node3 192.168.1.13 | 备注 | ||

NameNode | Hadoop | Y | Y | 高可用 | |

DateNode | Y | Y | |||

ResourceManager | Y | 高可用 | |||

| NodeManager | Y | Y | Y | ||

JournalNodes | Y | Y | Y | 奇数个,至少3个节点 | |

| ZKFC(DFSZKFailoverController) | Y | Y | 有namenode的地方就有ZKFC | ||

QuorumPeerMain | Zookeeper | Y | Y | Y | |

MySQL | HIVE | Hive元数据库 | |||

Metastore(RunJar) | |||||

HIVE(RunJar) | Y | ||||

| HMaster | HBase | Y | Y | 高可用 | |

| HRegionServer | Y | Y | Y | ||

Spark(Master) | Spark | Y | 高可用 | ||

Spark(Worker) | Y | Y |

apache-ant-1.9.9-bin.tar.gzapache-hive-1.2.1-bin.tar.gzapache-maven-3.3.9-bin.tar.gzapache-tomcat-6.0.44.tar.gzCentOS-6.9-x86_64-minimal.isofindbugs-3.0.1.tar.gzhadoop-2.7.3-src.tar.gzhadoop-2.7.3.tar.gzhadoop-2.7.3(自已编译的centOS6.9版本).tar.gzhbase-1.3.1-bin(自己编译).tar.gzhbase-1.3.1-src.tar.gzjdk-8u121-linux-x64.tar.gzmysql-connector-java-5.6-bin.jarprotobuf-2.5.0.tar.gzscala-2.11.11.tgzsnappy-1.1.3.tar.gzspark-2.1.1-bin-hadoop2.7.tgz

关闭防火墙

[root@node1 ~]# service iptables stop

[root@node1 ~]# chkconfig iptables off

zookeeper

[root@node1 ~]# wget -O /root/zookeeper-3.4.9.tar.gz https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/zookeeper-3.4.9/zookeeper-3.4.9.tar.gz

[root@node1 ~]# tar -zxvf /root/zookeeper-3.4.9.tar.gz -C /root

[root@node1 ~]# cp /root/zookeeper-3.4.9/conf/zoo_sample.cfg /root/zookeeper-3.4.9/conf/zoo.cfg

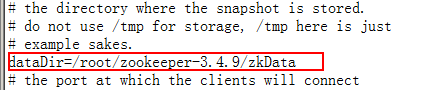

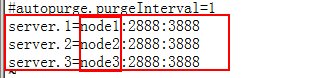

[root@node1 ~]# vi /root/zookeeper-3.4.9/conf/zoo.cfg

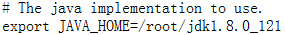

[root@node1 ~]# vi /root/zookeeper-3.4.9/bin/zkEnv.sh

[root@node1 ~]# mkdir /root/zookeeper-3.4.9/logs

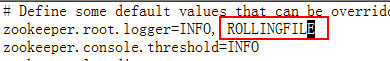

[root@node1 ~]# vi /root/zookeeper-3.4.9/conf/log4j.properties

[root@node1 ~]# mkdir /root/zookeeper-3.4.9/zkData

[root@node1 ~]# scp -r /root/zookeeper-3.4.9 node2:/root

[root@node1 ~]# scp -r /root/zookeeper-3.4.9 node3:/root

[root@node1 ~]# touch /root/zookeeper-3.4.9/zkData/myid

[root@node1 ~]# echo 1 > /root/zookeeper-3.4.9/zkData/myid

[root@node2 ~]# touch /root/zookeeper-3.4.9/zkData/myid

[root@node2 ~]# echo 2 > /root/zookeeper-3.4.9/zkData/myid

[root@node3 ~]# touch /root/zookeeper-3.4.9/zkData/myid

[root@node3 ~]# echo 3 > /root/zookeeper-3.4.9/zkData/myid

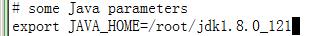

环境变量

[root@node1 ~]# vi /etc/profile

export JAVA_HOME=/root/jdk1.8.0_121export SCALA_HOME=/root/scala-2.11.11export HADOOP_HOME=/root/hadoop-2.7.3export HIVE_HOME=/root/apache-hive-1.2.1-binexport HBASE_HOME=/root/hbase-1.3.1export SPARK_HOME=/root/spark-2.1.1-bin-hadoop2.7export PATH=.:$PATH:$JAVA_HOME/bin:$SCALA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:/root:$HIVE_HOME/bin:$HBASE_HOME/bin:$SPARK_HOMEexport CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

[root@node1 ~]# source /etc/profile

[root@node1 ~]# scp /etc/profile node2:/etc

[root@node2 ~]# source /etc/profile

[root@node1~]# scp /etc/profile node3:/etc

[root@node3 ~]# source /etc/profile

Hadoop

[root@node1 ~]# wget -O /root/hadoop-2.7.3.tar.gz http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-2.7.3/hadoop-2.7.3.tar.gz

[root@node1 ~]# tar -zxvf /root/hadoop-2.7.3.tar.gz -C /root

[root@node1 ~]# vi /root/hadoop-2.7.3/etc/hadoop/hadoop-env.sh

[root@node1 ~]# vi /root/hadoop-2.7.3/etc/hadoop/hdfs-site.xml

<property><name>dfs.replication</name><value>2</value></property><property><name>dfs.blocksize</name><value>64m</value></property><property><name>dfs.permissions.enabled</name><value>false</value></property><property><name>dfs.nameservices</name><value>mycluster</value></property><property><name>dfs.ha.namenodes.mycluster</name><value>nn1,nn2</value></property><property><name>dfs.namenode.rpc-address.mycluster.nn1</name><value>node1:8020</value></property><property><name>dfs.namenode.rpc-address.mycluster.nn2</name><value>node2:8020</value></property><property><name>dfs.namenode.http-address.mycluster.nn1</name><value>node1:50070</value></property><property><name>dfs.namenode.http-address.mycluster.nn2</name><value>node2:50070</value></property><property><name>dfs.namenode.shared.edits.dir</name><value>qjournal://node1:8485;node2:8485;node3:8485/mycluster</value></property><property><name>dfs.journalnode.edits.dir</name><value>/root/hadoop-2.7.3/tmp/journal</value></property><property><name>dfs.ha.automatic-failover.enabled.mycluster</name><value>true</value></property><property><name>dfs.client.failover.proxy.provider.mycluster</name><value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value></property><property><name>dfs.ha.fencing.methods</name><value>sshfence</value></property><property><name>dfs.ha.fencing.ssh.private-key-files</name><value>/root/.ssh/id_rsa</value></property>

[root@node1 ~]# vi /root/hadoop-2.7.3/etc/hadoop/core-site.xml

<property><name>fs.defaultFS</name><value>hdfs://mycluster</value></property><property><name>hadoop.tmp.dir</name><value>/root/hadoop-2.7.3/tmp</value></property><property><name>ha.zookeeper.quorum</name><value>node1:2181,node2:2181,node3:2181</value></property>

[root@node1 ~]# vi /root/hadoop-2.7.3/etc/hadoop/slaves

node1node2node3

[root@node1 ~]# vi /root/hadoop-2.7.3/etc/hadoop/yarn-env.sh

[root@node1 ~]# vi /root/hadoop-2.7.3/etc/hadoop/mapred-site.xml

<configuration><property><name>mapreduce.framework.name</name><value>yarn</value></property><property><name>mapreduce.jobhistory.address</name><value>node1:10020</value></property><property><name>mapreduce.jobhistory.webapp.address</name><value>node1:19888</value></property><property><name>mapreduce.jobhistory.max-age-ms</name><value>6048000000</value></property></configuration>

[root@node1 ~]# vi /root/hadoop-2.7.3/etc/hadoop/yarn-site.xml

<property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value></property><property><name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name><value>org.apache.hadoop.mapred.ShuffleHandler</value></property><property><name>yarn.resourcemanager.ha.enabled</name><value>true</value></property><property><name>yarn.resourcemanager.cluster-id</name><value>yarn-cluster</value></property><property><name>yarn.resourcemanager.ha.rm-ids</name><value>rm1,rm2</value></property><property><name>yarn.resourcemanager.hostname.rm1</name><value>node1</value></property><property><name>yarn.resourcemanager.hostname.rm2</name><value>node2</value></property><property><name>yarn.resourcemanager.webapp.address.rm1</name><value>node1:8088</value></property><property><name>yarn.resourcemanager.webapp.address.rm2</name><value>node2:8088</value></property><property><name>yarn.resourcemanager.zk-address</name><value>node1:2181,node2:2181,node3:2181</value></property><property><name>yarn.resourcemanager.recovery.enabled</name><value>true</value></property><property><name>yarn.resourcemanager.store.class</name><value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value></property><property><name>yarn.log-aggregation-enable</name><value>true</value></property><property><name>yarn.log.server.url</name><value>http://node1:19888/jobhistory/logs</value></property>

[root@node1 ~]# mkdir -p /root/hadoop-2.7.3/tmp/journal

[root@node2 ~]# mkdir -p /root/hadoop-2.7.3/tmp/journal

[root@node3 ~]# mkdir -p /root/hadoop-2.7.3/tmp/journal

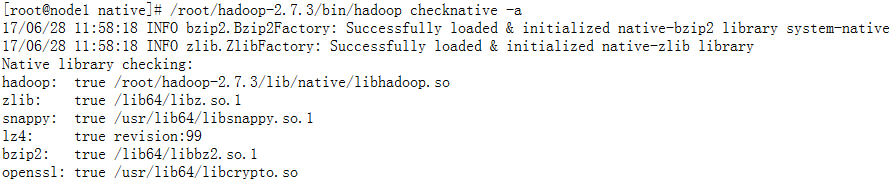

将编译的本地包中的native库替换/root/hadoop-2.7.3/lib/native

[root@node1 ~]# scp -r /root/hadoop-2.7.3/ node2:/root

[root@node1 ~]# scp -r /root/hadoop-2.7.3/ node3:/root

查看自己的Hadoop是32位还是64位

[root@node1 native]# file libhadoop.so.1.0.0

libhadoop.so.1.0.0: ELF 64-bit LSB shared object, x86-64, version 1 (SYSV), dynamically linked, not stripped

[root@node1 native]# pwd

/root/hadoop-2.7.3/lib/native

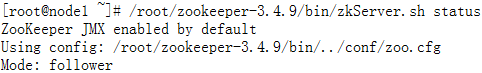

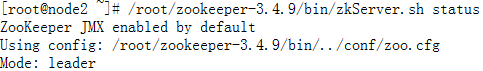

启动ZK

[root@node1 ~]#/root/zookeeper-3.4.9/bin/zkServer.sh start

[root@node2 ~]#/root/zookeeper-3.4.9/bin/zkServer.sh start

[root@node3 ~]#/root/zookeeper-3.4.9/bin/zkServer.sh start

格式化zkfc

[root@node1 ~]# /root/hadoop-2.7.3/bin/hdfs zkfc -formatZK

[root@node1 ~]# /root/zookeeper-3.4.9/bin/zkCli.sh

启动journalnode

[root@node1 ~]# /root/hadoop-2.7.3/sbin/hadoop-daemon.sh start journalnode

[root@node2 ~]# /root/hadoop-2.7.3/sbin/hadoop-daemon.sh start journalnode

[root@node3 ~]# /root/hadoop-2.7.3/sbin/hadoop-daemon.sh start journalnode

Namenode格式化和启动

[root@node1 ~]# /root/hadoop-2.7.3/bin/hdfs namenode -format

[root@node1 ~]# /root/hadoop-2.7.3/sbin/hadoop-daemon.sh start namenode

[root@node2 ~]# /root/hadoop-2.7.3/bin/hdfs namenode -bootstrapStandby

[root@node2 ~]# /root/hadoop-2.7.3/sbin/hadoop-daemon.sh start namenode

启动zkfc

[root@node1 ~]# /root/hadoop-2.7.3/sbin/hadoop-daemon.sh start zkfc

[root@node2 ~]# /root/hadoop-2.7.3/sbin/hadoop-daemon.sh start zkfc

启动datanode

[root@node1 ~]# /root/hadoop-2.7.3/sbin/hadoop-daemon.sh start datanode

[root@node2 ~]# /root/hadoop-2.7.3/sbin/hadoop-daemon.sh start datanode

[root@node3 ~]# /root/hadoop-2.7.3/sbin/hadoop-daemon.sh start datanode

启动yarn

[root@node1 ~]# /root/hadoop-2.7.3/sbin/yarn-daemon.sh start resourcemanager

[root@node2 ~]# /root/hadoop-2.7.3/sbin/yarn-daemon.sh start resourcemanager

[root@node1 ~]# /root/hadoop-2.7.3/sbin/yarn-daemon.sh start nodemanager

[root@node2 ~]# /root/hadoop-2.7.3/sbin/yarn-daemon.sh start nodemanager

[root@node3 ~]# /root/hadoop-2.7.3/sbin/yarn-daemon.sh start nodemanager

[root@node1 ~]# hdfs dfs -chmod -R 777 /

安装MySQL

[root@node1 ~]# yum remove -y mysql-libs

[root@node1 ~]# yum install mysql-server

[root@node1 ~]# service mysqld start

[root@node1 ~]# chkconfig mysqld on

[root@node1 ~]# mysqladmin -u root password 'AAAaaa111'

[root@node1 ~]# mysqladmin -u root -h node1 password 'AAAaaa111'

[root@node1 ~]# mysql -h localhost -u root -p

Enter password: AAAaaa111

mysql> GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY 'AAAaaa111' WITH GRANT OPTION;

mysql> flush privileges;

[root@node1 ~]# vi /etc/my.cnf

[client]default-character-set=utf8[mysql]default-character-set=utf8[mysqld]character-set-server=utf8lower_case_table_names = 1

[root@node1 ~]# service mysqld restart

HIVE安装

由于官方提供的spark-2.1.1-bin-hadoop2.7.tgz包中集成的Hive是1.2.1,所以Hive版本选择1.2.1

[root@node1 ~]# wget http://archive.apache.org/dist/hive/hive-1.2.1/apache-hive-1.2.1-bin.tar.gz

[root@node1 ~]# tar -xvf apache-hive-1.2.1-bin.tar.gz

将mysql-connector-java-5.6-bin.jar 驱动放在 /root/hive-1.2.1/lib/ 目录下面

[root@node1 ~]# cp /root/apache-hive-1.2.1-bin/conf/hive-env.sh.template /root/apache-hive-1.2.1-bin/conf/hive-env.sh

[root@node1 ~]# vi /root/apache-hive-1.2.1-bin/conf/hive-env.sh

export HADOOP_HOME=/root/hadoop-2.7.3

[root@node1 ~]# cp /root/apache-hive-1.2.1-bin/conf/hive-log4j.properties.template /root/apache-hive-1.2.1-bin/conf/hive-log4j.properties

[root@node1 ~]# vi /root/apache-hive-1.2.1-bin/conf/hive-site.xml

<configuration>

<property>

<name>hive.metastore.uris</name>

<value>thrift://node1:9083</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://node1:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>AAAaaa111</value>

</property>

</configuration>

[root@node1 ~]# vi /etc/init.d/hive-metastore

/root/apache-hive-1.2.1-bin/bin/hive --service metastore >/dev/null 2>&1 &

[root@node1 ~]# chmod 777 /etc/init.d/hive-metastore

[root@node1 ~]# ln -s /etc/init.d/hive-metastore /etc/rc.d/rc3.d/S65hive-metastore

[root@node1 ~]# hive

[root@node1 ~]# mysql -h localhost -u root -p

mysql> alter database hive character set latin1;

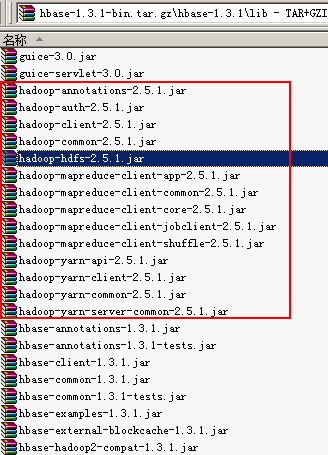

Hbase编译安装

官方提供的是基础Hadoop2.5.1编译的,所以要进行编译:

将pom.xml文件中依赖的hadoop版本修改:

<hadoop-two.version>2.5.1</hadoop-two.version>

修改为

<hadoop-two.version>2.7.3</hadoop-two.version>

<compileSource>1.7</compileSource>

修改为

<compileSource>1.8</compileSource>

例如如下命令打包:

mvn clean package -DskipTests -Prelease assembly:single

/root/hbase-1.3.1/hbase-assembly/target/hbase-1.3.1-bin.tar.gz

下面基于此安装包进行Hbase的安装:

[root@node1 ~]# cp /root/hadoop-2.7.3/etc/hadoop/hdfs-site.xml /root/hadoop-2.7.3/etc/hadoop/core-site.xml /root/hbase-1.3.1/conf/

[root@node1 ~]# vi /root/hbase-1.3.1/conf/hbase-env.sh

export JAVA_HOME=/root/jdk1.8.0_121

export HBASE_MANAGES_ZK=false

[root@node1 ~]# vi /root/hbase-1.3.1/conf/hbase-site.xml

<property><name>hbase.rootdir</name><value>hdfs://mycluster:8020/hbase</value></property><property><name>hbase.cluster.distributed</name><value>true</value></property><property><name>hbase.zookeeper.quorum</name><value>node1:2181,node2:2181,node3:2181</value></property><property><name>hbase.master.port</name><value>60000</value></property><property><name>hbase.master.info.port</name><value>60010</value></property>

<property>

<name>hbase.tmp.dir</name>

<value>/root/hbase-1.3.1/tmp</value>

</property>

<property>

<name>hbase.regionserver.port</name>

<value>60020</value>

</property>

<property>

<name>hbase.regionserver.info.port</name>

<value>60030</value>

</property>

[root@node1 ~]# vi /root/hbase-1.3.1/conf/regionservers

node1node2node3

[root@node1 ~]# mkdir -p /root/hbase-1.3.1/tmp

[root@node1 ~]# vi /root/hbase-1.3.1/conf/hbase-env.sh

# Configure PermSize. Only needed in JDK7. You can safely remove it for JDK8+

#export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"#export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"

将etc/profile,及hbase复制到其他两个节点上

[root@node1 ~]# start-hbase.sh

#back-master需要手动起

[root@node2 ~]# hbase-daemon.sh start master

[root@node1 ~]# hbase shell

spark

[root@node1 ~]# cp /root/spark-2.1.1-bin-hadoop2.7/conf/spark-env.sh.template /root/spark-2.1.1-bin-hadoop2.7/conf/spark-env.sh

[root@node1 ~]# vi /root/spark-2.1.1-bin-hadoop2.7/conf/spark-env.sh

export SCALA_HOME=/root/scala-2.11.11export JAVA_HOME=/root/jdk1.8.0_121export HADOOP_HOME=/root/hadoop-2.7.3export HADOOP_CONF_DIR=/root/hadoop-2.7.3/etc/hadoopexport SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=node1:2181,node2:2181,node3:2181 -Dspark.deploy.zookeeper.dir=/spark"

[root@node1 ~]# cp /root/spark-2.1.1-bin-hadoop2.7/conf/slaves.template /root/spark-2.1.1-bin-hadoop2.7/conf/slaves

[root@node1 ~]# vi /root/spark-2.1.1-bin-hadoop2.7/conf/slaves

node1node2node3

[root@node1 ~]# scp -r /root/spark-2.1.1-bin-hadoop2.7 node2:/root

[root@node1 ~]# scp -r /root/spark-2.1.1-bin-hadoop2.7 node3:/root

[root@node1 ~]# /root/spark-2.1.1-bin-hadoop2.7/sbin/start-all.sh

./start.sh

/root/zookeeper-3.4.9/bin/zkServer.sh start

ssh root@node2 'export BASH_ENV=/etc/profile;/root/zookeeper-3.4.9/bin/zkServer.sh start'

ssh root@node3 'export BASH_ENV=/etc/profile;/root/zookeeper-3.4.9/bin/zkServer.sh start'

/root/hadoop-2.7.3/sbin/start-dfs.sh

/root/hadoop-2.7.3/sbin/start-yarn.sh

#如果Yarn做HA,则打开

#ssh root@node2 'export BASH_ENV=/etc/profile;/root/hadoop-2.7.3/sbin/yarn-daemon.sh start resourcemanager'

/root/hadoop-2.7.3/sbin/hadoop-daemon.sh start zkfc

ssh root@node2 'export BASH_ENV=/etc/profile;/root/hadoop-2.7.3/sbin/hadoop-daemon.sh start zkfc'

ssh root@node3 'export BASH_ENV=/etc/profile;/root/hadoop-2.7.3/sbin/hadoop-daemon.sh start zkfc'

/root/hadoop-2.7.3/bin/hdfs haadmin -ns mycluster -failover nn2 nn1

echo 'Y' | ssh root@node1 'export BASH_ENV=/etc/profile;/root/hadoop-2.7.3/bin/yarn rmadmin -transitionToActive --forcemanual rm1'

/root/hbase-1.3.1/bin/start-hbase.sh

#如果HBase做HA,则打开

#ssh root@node2 'export BASH_ENV=/etc/profile;/root/hbase-1.3.1/bin/hbase-daemon.sh start master'

/root/spark-2.1.1-bin-hadoop2.7/sbin/start-all.sh

#如果Spark做HA,则打开

#ssh root@node2 'export BASH_ENV=/etc/profile;/root/spark-2.1.1-bin-hadoop2.7/sbin/start-master.sh'

/root/hadoop-2.7.3/sbin/mr-jobhistory-daemon.sh start historyserver

echo '--------------node1---------------'

jps | grep -v Jps | sort -k 2 -t ' '

echo '--------------node2---------------'

ssh root@node2 "export PATH=/usr/bin:$PATH;jps | grep -v Jps | sort -k 2 -t ' '"

echo '--------------node3---------------'

ssh root@node3 "export PATH=/usr/bin:$PATH;jps | grep -v Jps | sort -k 2 -t ' '"

./stop.sh

/root/spark-2.1.1-bin-hadoop2.7/sbin/stop-all.sh

/root/hbase-1.3.1/bin/stop-hbase.sh

#如果Yarn开HA,则去掉注释

#ssh root@node2 'export BASH_ENV=/etc/profile;/root/hadoop-2.7.3/sbin/yarn-daemon.sh stop resourcemanager'

/root/hadoop-2.7.3/sbin/stop-yarn.sh

/root/hadoop-2.7.3/sbin/stop-dfs.sh

/root/hadoop-2.7.3/sbin/hadoop-daemon.sh stop zkfc

ssh root@node2 'export BASH_ENV=/etc/profile;/root/hadoop-2.7.3/sbin/hadoop-daemon.sh stop zkfc'

/root/zookeeper-3.4.9/bin/zkServer.sh stop

ssh root@node2 'export BASH_ENV=/etc/profile;/root/zookeeper-3.4.9/bin/zkServer.sh stop'

ssh root@node3 'export BASH_ENV=/etc/profile;/root/zookeeper-3.4.9/bin/zkServer.sh stop'

/root/hadoop-2.7.3/sbin/mr-jobhistory-daemon.sh stop historyserver

./shutdown.sh

ssh root@node2 "export PATH=/usr/bin:$PATH;shutdown -h now"

ssh root@node3 "export PATH=/usr/bin:$PATH;shutdown -h now"

shutdown -h now

./reboot.sh

ssh root@node2 "export PATH=/usr/bin:$PATH;reboot"

ssh root@node3 "export PATH=/usr/bin:$PATH;reboot"

reboot