1. Overview

First, the configuration file used for the push-stream live setup is as follows:

# rtmp.conf

listen 1935;

max_connections 1000;

daemon off;

srs_log_tank console;

vhost __defaultVhost__ {

}

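The steps for setting up this bare-bones live stream are as follows:

- Start SRS: ./obj/srs -c ./conf/rtmp.conf

- Configure OBS and start pushing. Any video source will do, for example a capture device or a local file. The OBS settings are shown in the figure below:

  Note: the "stream name" field must be filled in, because SRS does not support requests without a stream name.

- Once OBS is pushing, another client can play the stream from SRS. Here vlc is used, with the following play URL: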

rtmp://192.168.56.101:1935/live/livestream
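To make the role of the stream name concrete, here is a minimal sketch that splits a play URL of this shape into host, port, app and stream, and rejects a URL that has no stream name. RtmpUrl and parse_rtmp_url are hypothetical names used only for illustration; they are not part of SRS, and SRS's own URL handling may differ.

```cpp
#include <cassert>
#include <cctype>
#include <string>

// Hypothetical holder for the parts of an RTMP URL (not an SRS type).
struct RtmpUrl {
    std::string host;
    int port;
    std::string app;
    std::string stream;
};

// Split "rtmp://host[:port]/app/stream" into its parts.
// Returns false when the scheme is wrong, the port is malformed,
// or the app or stream name is missing.
bool parse_rtmp_url(const std::string& url, RtmpUrl& out)
{
    const std::string scheme = "rtmp://";
    if (url.compare(0, scheme.size(), scheme) != 0) {
        return false;
    }
    const std::string rest = url.substr(scheme.size());

    // Everything before the first '/' is "host[:port]".
    const std::string::size_type slash = rest.find('/');
    if (slash == std::string::npos) {
        return false;
    }
    const std::string authority = rest.substr(0, slash);
    const std::string path = rest.substr(slash + 1);

    out.port = 1935; // default RTMP port
    const std::string::size_type colon = authority.find(':');
    out.host = authority.substr(0, colon);
    if (colon != std::string::npos) {
        const std::string digits = authority.substr(colon + 1);
        if (digits.empty() || digits.size() > 5) {
            return false;
        }
        int port = 0;
        for (char c : digits) {
            if (!std::isdigit(static_cast<unsigned char>(c))) {
                return false;
            }
            port = port * 10 + (c - '0');
        }
        out.port = port;
    }

    // The last path segment is the stream name; the rest is the app.
    const std::string::size_type last = path.rfind('/');
    if (last == std::string::npos) {
        return false; // an app without a stream name
    }
    out.app = path.substr(0, last);
    out.stream = path.substr(last + 1);
    return !out.host.empty() && !out.app.empty() && !out.stream.empty();
}
```

With the URL above, the sketch yields app "live" and stream "livestream"; the same URL without the trailing "/livestream" is rejected.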

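The OBS push-stream flow is analyzed in the following articles:

1. How SRS sets up listening on the server port so that it can accept client connection requests: "SRS: Managing Listening Ports (RTMP)"

2. The SRS main thread sleeps in a loop so that other threads, such as the tcp listener thread, get scheduled: "SRS: SrsServer::cycle()"

3. When the listener thread detects a client connection request, it accepts the connection and creates a conn thread dedicated to serving that client: "SRS: The RTMP Connection Thread conn (Receiving a Client Push)"

4. At the start of the conn thread's cycle, the server first performs the handshake with the client: "SRS: RTMP handshake"

5. After a successful handshake, the server receives the client's first command, normally connect('xxx'): "SRS: SrsRtmpServer::connect_app in Detail"

6. Next comes SrsRtmpConn::service_cycle: "SRS: SrsRtmpConn::service_cycle in Detail"

7. SrsRtmpConn::service_cycle first sends the client Window Acknowledgement Size (5), Set Peer Bandwidth (6) and the response to connect, then enters a loop that calls stream_service_cycle: "SRS: SrsRtmpConn::stream_service_cycle in Detail"

8. stream_service_cycle first identifies what the client actually wants to do, such as publish or play. For an FMLE-style publish it calls start_fmle_publish to exchange a series of messages with the client and then starts handling the real push: "SRS: SrsRtmpConn::publishing in Detail"

9. For a publishing client, publishing creates a recv thread dedicated to receiving the pushed data. That thread caches the data into a consumer (if a client is playing the stream at that moment): "SRS: The Push-Receiving Thread recv"

The analysis below picks up at step 8: vlc connects to SRS and requests playback of the stream pushed by OBS (connection setup before that point is much the same as steps 1 to 7 above).

The source code quoted below is simplified.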

2. SrsRtmpConn::stream_service_cycle

int SrsRtmpConn::stream_service_cycle()

{

int ret = ERROR_SUCCESS;

SrsRtmpConnType type;

/* First, identify the type of the client connection; here it should be play */

if ((ret = rtmp->identify_client(res->stream_id, type, req->stream, req->duration))

!= ERROR_SUCCESS) {

...

}

/* Normalize the url, host and similar fields, e.g. strip spaces and other unnecessary characters */

req->strip();

/* Skipped if security is not configured in the config file */

// security check

if ((ret = security->check(type, ip, req)) != ERROR_SUCCESS) {

...

}

/* SRS does not support an empty stream name, because with HLS an empty stream name could end up being written to a file */

if (req->stream.empty()) {

...

}

/* Set the server's send/recv timeouts; both are 30*1000*1000LL (microseconds) here */

rtmp->set_recv_timeout(SRS_CONSTS_RTMP_RECV_TIMEOUT_US);

rtmp->set_send_timeout(SRS_CONSTS_RTMP_SEND_TIMEOUT_US);

/* Find a source to serve this client */

SrsSource* source = NULL;

if ((ret = SrsSource::fetch_or_create(req, server, &source)) != ERROR_SUCCESS) {

...

}

/* update the statistic when source discovered. */

...

/* If mode is not configured in the config file, this returns false by default */

bool vhost_is_edge = _srs_config->get_vhost_is_edge(req->vhost);

/* If gop_cache is not configured in the config file, the GOP cache is enabled by default */

bool enabled_cache = _srs_config->get_app_cache(req->vhost);

source->set_cache(enabled_cache);

/* Here the type should be SrsRtmpConnPlay */

client_type = type;

switch (type) {

case SrsRtmpConnPlay: {

/* response connection start play */

if ((ret = rtmp->start_play(res->stream_id)) != ERROR_SUCCESS) {

...

}

/* Skipped if http_hooks is not configured in the config file */

if ((ret = http_hooks_on_play()) != ERROR_SUCCESS) {

...

}

/* Start sending the stream pushed by OBS to the client */

ret = playing(source);

http_hooks_on_stop();

return ret;

}

...

}

return ret;

}

2.1 SrsRtmpServer::identify_client

This function identifies the type of the client's request (publish or play) by receiving some of the messages the client sends.

/*

* recv some message to identify the client.

* @stream_id, client will createStream to play or publish by flash,

* the stream_id used to response the createStream request.

* @type, output the client type.

* @stream_name, output the client publish/play stream name. @see: SrsRequest.stream

* @duration, output the play client duration. @see: SrsRequest.duration

*/

int SrsRtmpServer::identify_client(int stream_id, SrsRtmpConnType& type,

string& stream_name, double& duration)

{

type = SrsRtmpConnUnknown;

int ret = ERROR_SUCCESS;

while (true) {

SrsCommonMessage* msg = NULL;

/* Receive one complete message */

if ((ret = protocol->recv_message(&msg)) != ERROR_SUCCESS) {

...

}

SrsAutoFree(SrsCommonMessage, msg);

SrsMessageHeader& h = msg->header;

if (h.is_ackledgement() || h.is_set_chunk_size() ||

h.is_window_ackledgement_size() || h.is_user_control_message()) {

continue;

}

SrsPacket* pkt = NULL;

/* Decode the message */

if ((ret = protocol->decode_message(msg, &pkt)) != ERROR_SUCCESS) {

...

}

SrsAutoFree(SrsPacket, pkt);

/* The received message is createStream('livestream') */

if (dynamic_cast<SrsCreateStreamPacket*>(pkt)) {

srs_info("identify client by create stream, play or flash publish.");

return identify_create_stream_client(dynamic_cast<SrsCreateStreamPacket*>(pkt),

stream_id, type, stream_name, duration);

}

...

}

return ret;

}

Once this function sees that the received message is createStream, it calls identify_create_stream_client for further processing.

2.1.1 SrsRtmpServer::identify_create_stream_client

int SrsRtmpServer::identify_create_stream_client(SrsCreateStreamPacket* req, int stream_id,

SrsRtmpConnType& type, string& stream_name, double& duration)

{

int ret = ERROR_SUCCESS;

if (true) {

/* Build the packet used to respond to the createStream message */

SrsCreateStreamResPacket* pkt =

new SrsCreateStreamResPacket(req->transaction_id, stream_id);

if ((ret = protocol->send_and_free_packet(pkt, 0)) != ERROR_SUCCESS) {

...

}

}

while (true) {

SrsCommonMessage* msg = NULL;

if ((ret = protocol->recv_message(&msg)) != ERROR_SUCCESS) {

...

}

SrsAutoFree(SrsCommonMessage, msg);

SrsMessageHeader& h = msg->header;

if (h.is_ackledgement() || h.is_set_chunk_size() ||

h.is_window_ackledgement_size() || h.is_user_control_message()) {

continue;

}

if (!h.is_amf0_command() && !h.is_amf3_command()) {

srs_trace("identify ignore messages except "

"AMF0/AMF3 command message. type=%#x", h.message_type);

continue;

}

SrsPacket* pkt = NULL;

if ((ret = protocol->decode_message(msg, &pkt)) != ERROR_SUCCESS) {

...

}

/* Keep looping until play is received, then return */

SrsAutoFree(SrsPacket, pkt);

if (dynamic_cast<SrsPlayPacket*>(pkt)) {

srs_info("level1 identify client by play.");

return identify_play_client(dynamic_cast<SrsPlayPacket*>(pkt),

type, stream_name, duration);

}

...

}

return ret;

}

2.1.2 SrsRtmpServer::identify_play_client

int SrsRtmpServer::identify_play_client(SrsPlayPacket* req, SrsRtmpConnType& type,

string& stream_name, double& duration)

{

int ret = ERROR_SUCCESS;

type = SrsRtmpConnPlay;

/* The stream name the client asks to play; here it is livestream */

stream_name = req->stream_name;

duration = req->duration;

srs_info("identity client type=play, stream_name=%s, duration=%.2f",

stream_name.c_str(), duration);

return ret;

}

Once the client's request has been identified as play, an SrsSource object is obtained to serve it.
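The two-level identification above (skip protocol control messages, wait for createStream, then wait for play) can be sketched as a small state machine. This is a simplified illustration that assumes only the createStream path shown in this section; the Msg and ClientType enums and the identify function are hypothetical and do not correspond to SRS classes, and the branches elided with "..." in the listings are not modeled.

```cpp
#include <cassert>
#include <vector>

// Hypothetical, simplified message kinds (not the SRS packet classes).
enum class Msg {
    Ack, SetChunkSize, WindowAckSize, UserControl, // protocol control
    CreateStream, Play, Publish, Other             // commands
};

enum class ClientType { Unknown, Play, FlashPublish };

// Protocol control messages are skipped while identifying the client.
static bool is_protocol_control(Msg m)
{
    return m == Msg::Ack || m == Msg::SetChunkSize ||
           m == Msg::WindowAckSize || m == Msg::UserControl;
}

// Walk the incoming messages in order and decide what the client wants.
ClientType identify(const std::vector<Msg>& incoming)
{
    bool stream_created = false;
    for (Msg m : incoming) {
        if (is_protocol_control(m)) {
            continue;
        }
        if (!stream_created) {
            // First level: wait for createStream (the server would reply here).
            if (m == Msg::CreateStream) {
                stream_created = true;
            }
            continue;
        }
        // Second level: after createStream, play or publish settles the type.
        if (m == Msg::Play) {
            return ClientType::Play;
        }
        if (m == Msg::Publish) {
            return ClientType::FlashPublish;
        }
    }
    return ClientType::Unknown; // ran out of messages before deciding
}
```

Feeding it the sequence a player typically sends (a control message, createStream, another control message, play) yields ClientType::Play, which matches the SrsRtmpConnPlay result described above.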

2.2 SrsSource::fetch_or_create

/*

* create source when fetch from cache failed.

* @param r the client request.

* @param h the event handler for source.

* @param pps the matched source, if success never be NULL.

*/

int SrsSource::fetch_or_create(SrsRequest* r, ISrsSourceHandler* h, SrsSource** pps)

{

int ret = ERROR_SUCCESS;

/* First look in the global SrsSource::pool for a source that matches this stream_url */

SrsSource* source = NULL;

if ((source = fetch(r)) != NULL) {

*pps = source;

return ret;

}

/* If none exists, build a new one below and put it into the pool */

/* Generate a stream_url from vhost/app/stream */

string stream_url = r->get_stream_url();

string vhost = r->vhost;

// should always not exists for create a source.

srs_assert (pool.find(stream_url) == pool.end());

/* Construct a new source */

source = new SrsSource();

if ((ret = source->initialize(r, h)) != ERROR_SUCCESS) {

srs_freep(source);

return ret;

}

/* Put the source into the pool, keyed by stream_url */

pool[stream_url] = source;

srs_info("create new source for url=%s, vhost=%s", stream_url.c_str(), vhost.c_str());

*pps = source;

return ret;

}

Note: this source is shared. A publisher and the players with the same vhost/app/stream all use it.
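The fetch-or-create pattern above can be sketched with a map keyed by the stream URL. Source and SourcePool are hypothetical stand-ins, and the "vhost/app/stream" key format is an assumption made for this sketch; the exact string produced by get_stream_url in SRS may differ.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for SrsSource: just remembers its stream URL.
struct Source {
    explicit Source(const std::string& u) : url(u) {}
    std::string url;
};

// Hypothetical pool keyed by "vhost/app/stream", one Source per key.
class SourcePool {
public:
    Source* fetch_or_create(const std::string& vhost,
                            const std::string& app,
                            const std::string& stream)
    {
        const std::string url = vhost + "/" + app + "/" + stream;

        // Fast path: the source already exists (publisher or earlier player).
        auto it = pool_.find(url);
        if (it != pool_.end()) {
            return it->second.get();
        }

        // Slow path: create it and remember it under the stream URL.
        std::unique_ptr<Source> created(new Source(url));
        Source* raw = created.get();
        pool_[url] = std::move(created);
        return raw;
    }

    std::size_t size() const { return pool_.size(); }

private:
    std::map<std::string, std::unique_ptr<Source> > pool_;
};
```

Two calls with the same vhost, app and stream return the same pointer, which is how a player ends up attached to the source that the publisher is already feeding.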

2.2.1 Constructing SrsSource

/* live streaming source. */

SrsSource::SrsSource()

{

_req = NULL;

jitter_algorithm = SrsRtmpJitterAlgorithmOFF;

mix_correct = false;

mix_queue = new SrsMixQueue();

#ifdef SRS_AUTO_HLS

hls = new SrsHls();

#endif

#ifdef SRS_AUTO_DVR

dvr = new SrsDvr();

#endif

#ifdef SRS_AUTO_TRANSCODE

encoder = new SrsEncoder();

#endif

#ifdef SRS_AUTO_HDS

hds = new SrsHds(this);

#endif

/* cache_metadata: cached metadata

* cache_sh_video: cached video sequence header (sps, pps)

* cache_sh_audio: cached audio sequence header */

cache_metadata = cache_sh_video = cache_sh_audio = NULL;

_can_publish = true;

_pre_source_id = _source_id = -1;

die_at = -1;

play_edge = new SrsPlayEdge();

publish_edge = new SrsPublishEdge();

/* The GOP cache is enabled by default */

gop_cache = new SrsGopCache();

aggregate_stream = new SrsStream();

is_monotonically_increase = false;

last_packet_time = 0;

_srs_config->subscribe(this);

atc = false;

}

Next, the server responds to the client's play command.

2.3 SrsRtmpServer::start_play

int SrsRtmpServer::start_play(int stream_id)

{

int ret = ERROR_SUCCESS;

// StreamBegin

if (true) {

SrsUserControlPacket* pkt = new SrsUserControlPacket();

pkt->event_type = SrsPCUCStreamBegin;

pkt->event_data = stream_id;

/* Send the "Stream Begin 1" user control message to the client */

if ((ret = protocol->send_and_free_packet(pkt, 0)) != ERROR_SUCCESS) {

...

}

}

// onStatus(NetStream.Play.Reset)

if (true) {

SrsOnStatusCallPacket* pkt = new SrsOnStatusCallPacket();

pkt->data->set(StatusLevel, SrsAmf0Any::str(StatusLevelStatus));

pkt->data->set(StatusCode, SrsAmf0Any::str(StatusCodeStreamReset));

pkt->data->set(StatusDescription,

SrsAmf0Any::str("Playing and resetting stream."));

pkt->data->set(StatusDetails, SrsAmf0Any::str("stream"));

pkt->data->set(StatusClientId, SrsAmf0Any::str(RTMP_SIG_CLIENT_ID));

if ((ret = protocol->send_and_free_packet(pkt, stream_id)) != ERROR_SUCCESS) {

...

}

}

// onStatus(NetStream.Play.Start)

if (true) {

SrsOnStatusCallPacket* pkt = new SrsOnStatusCallPacket();

pkt->data->set(StatusLevel, SrsAmf0Any::str(StatusLevelStatus));

pkt->data->set(StatusCode, SrsAmf0Any::str(StatusCodeStreamStart));

pkt->data->set(StatusDescription, SrsAmf0Any::str("Started playing stream."));

pkt->data->set(StatusDetails, SrsAmf0Any::str("stream"));

pkt->data->set(StatusClientId, SrsAmf0Any::str(RTMP_SIG_CLIENT_ID));

if ((ret = protocol->send_and_free_packet(pkt, stream_id)) != ERROR_SUCCESS) {

...

}

}

// |RtmpSampleAccess(false, false)

if (true) {

SrsSampleAccessPacket* pkt = new SrsSampleAccessPacket();

// allow audio/video sample.

// @see: https://github.com/ossrs/srs/issues/49

pkt->audio_sample_access = true;

pkt->video_sample_access = true;

if ((ret = protocol->send_and_free_packet(pkt, stream_id)) != ERROR_SUCCESS) {

...

}

srs_info("send |RtmpSampleAccess(false, false) message success.");

}

// onStatus(NetStream.Data.Start)

if (true) {

SrsOnStatusDataPacket* pkt = new SrsOnStatusDataPacket();

pkt->data->set(StatusCode, SrsAmf0Any::str(StatusCodeDataStart));

if ((ret = protocol->send_and_free_packet(pkt, stream_id)) != ERROR_SUCCESS) {

...

}

srs_info("send onStatus(NetStream.Data.Start) message success.");

}

srs_info("start play success.");

return ret;

}

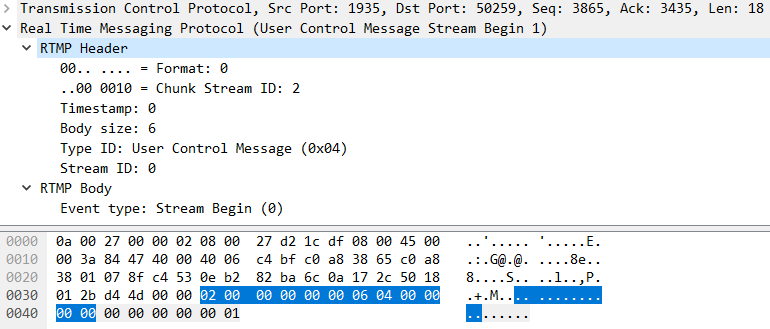

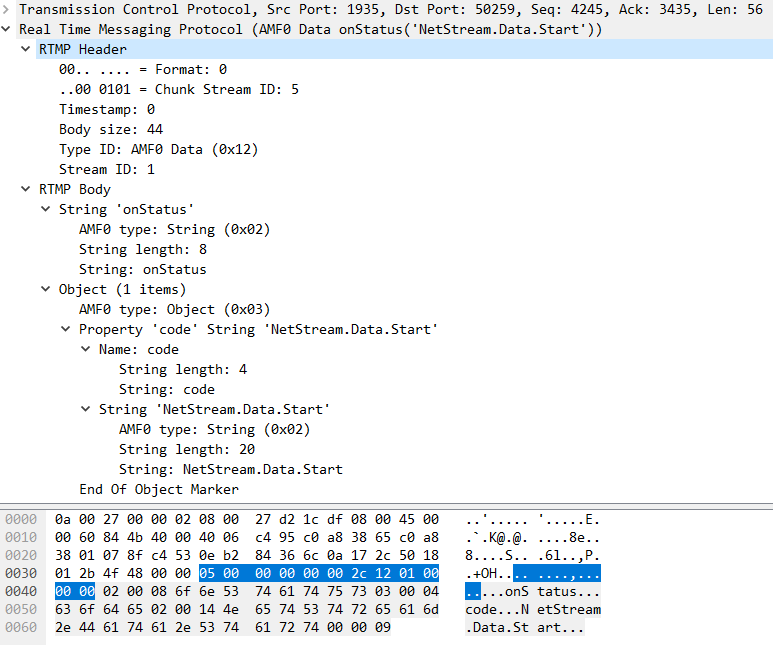

The messages this function sends to the client, in order, are shown in the following figures.

send: StreamBegin

send: onStatus(NetStream.Play.Reset)

send: onStatus(NetStream.Play.Start)

send: |RtmpSampleAccess(false, false)

send: onStatus(NetStream.Data.Start)

3. SrsRtmpConn::playing

int SrsRtmpConn::playing(SrsSource* source)

{

int ret = ERROR_SUCCESS;

/* create consumer of source. */

SrsConsumer* consumer = NULL;

/* Build a consumer and put it into the source's consumers container,

* then fill the consumer with the metadata, the audio and video sequence headers,

* and the audio/video data cached in gop_cache */

if ((ret = source->create_consumer(this, consumer)) != ERROR_SUCCESS) {

...

}

SrsAutoFree(SrsConsumer, consumer);

/* Build a receive thread */

/* use isolate thread to recv,

* @see: https://github.com/ossrs/srs/issues/217 */

SrsQueueRecvThread trd(consumer, rtmp, SRS_PERF_MW_SLEEP);

/* start isolate recv thread */

if ((ret = trd.start()) != ERROR_SUCCESS) {

...

}

/* delivery message for clients playing stream. */

wakable = consumer;

ret = do_playing(source, consumer, &trd);

wakable = NULL;

/* stop isolate recv thread */

trd.stop();

/* warn for the message is dropped. */

if (!trd.empty()) {

srs_warn("drop the received %d messages", trd.size());

}

return ret;

}

3.1 SrsSource::create_consumer

/* create consumer and dumps packets in cache.

* @param consumer, output the create consumer.

* @param ds, whether dumps the sequence header.

* @param dm, whether dumps the metadata.

* @param dg, whether dumps the gop cache.

* ds, dm and dg all default to true

*/

int SrsSource::create_consumer(SrsConnection* conn, SrsConsumer*& consumer,

bool ds, bool dm, bool dg)

{

int ret = ERROR_SUCCESS;

/* Build a consumer and put it into the source's consumers container.

* When the server later receives data pushed by OBS and finds consumers

* in that container, it puts the received data into each of them */

consumer = new SrsConsumer(this, conn);

consumers.push_back(consumer);

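/* If queue_length is not configured in the config file, the default queue length is 30 */

double queue_size = _srs_config->get_queue_length(_req->vhost);

/* Set the size of the consumer's queue, which bounds how much audio/video data it may

* buffer (SRS documents queue_length in seconds, not as a message count) */

consumer->set_queue_size(queue_size);

/* atc is disabled by default */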

/* if atc, update the sequence header to gop cache time. */

if (atc && !gop_cache->empty()) {

if (cache_metadata) {

cache_metadata->timestamp = gop_cache->start_time();

}

if (cache_sh_video) {

cache_sh_video->timestamp = gop_cache->start_time();

}

if (cache_sh_audio) {

cache_sh_audio->timestamp = gop_cache->start_time();

}

}

/* First of all, put the metadata into the consumer's queue */

/* copy metadata. */

if (dm && cache_metadata &&

(ret = consumer->enqueue(cache_metadata, atc, jitter_algorithm))

!= ERROR_SUCCESS) {

...

}

/* Next, put the audio sequence header into the consumer */

/* copy sequence header

* copy audio sequence first, for hls to fast parse the "right" audio codec.

* @see https://github.com/ossrs/srs/issues/301 */

if (ds && cache_sh_audio &&

(ret = consumer->enqueue(cache_sh_audio, atc, jitter_algorithm))

!= ERROR_SUCCESS) {

...

}

/* Then put the video sequence header, i.e. sps and pps, into the consumer */

if (ds && cache_sh_video &&

(ret = consumer->enqueue(cache_sh_video, atc, jitter_algorithm))

!= ERROR_SUCCESS) {

...

}

/* Finally, put the GOP cached in gop_cache into the consumer */

/* copy gop cache to client. */

if (dg && (ret = gop_cache->dump(consumer, atc, jitter_algorithm)) != ERROR_SUCCESS) {

return ret;

}

/* All of the data above was cached while SRS handled the OBS push;

* it is put into the consumer here, right after the consumer is built */

/* print status. */

if (dg) {

srs_trace("create consumer, queue_size=%.2f, jitter=%d",

queue_size, jitter_algorithm);

} else {

srs_trace("create consumer, ignore gop cache, jitter=%d", jitter_algorithm);

}

/* Skipped if mode is not configured in the config file */

/* for edge, when play edge stream, check the state */

if (_srs_config->get_vhost_is_edge(_req->vhost)) {

/* notice edge to start for the first client. */

if ((ret = play_edge->on_client_play()) != ERROR_SUCCESS) {

...

}

}

return ret;

}
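The order in which a new consumer is primed (metadata, audio sequence header, video sequence header, then the cached GOP) can be sketched as follows. CachedState and prime_consumer are hypothetical names, and messages are reduced to plain strings; only the ordering and the ds/dm/dg flags are taken from the function above.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical snapshot of what a source has cached from the publisher.
struct CachedState {
    bool has_metadata;
    bool has_audio_sh;              // audio sequence header
    bool has_video_sh;              // video sequence header (sps/pps)
    std::vector<std::string> gop;   // cached GOP, oldest frame first
};

// Return the messages a new consumer's queue is primed with, in order.
// ds/dm/dg mirror the flags of create_consumer and default to true.
std::vector<std::string> prime_consumer(const CachedState& cache,
                                        bool ds = true, bool dm = true, bool dg = true)
{
    std::vector<std::string> queue;
    if (dm && cache.has_metadata) {
        queue.push_back("metadata");
    }
    // Audio goes before video, matching the order in the function above.
    if (ds && cache.has_audio_sh) {
        queue.push_back("audio_sh");
    }
    if (ds && cache.has_video_sh) {
        queue.push_back("video_sh");
    }
    if (dg) {
        queue.insert(queue.end(), cache.gop.begin(), cache.gop.end());
    }
    return queue;
}
```

Because everything the decoder needs arrives before the first cached frame, a player that joins in the middle of a stream can start decoding from the cached GOP without waiting for the publisher to resend its headers.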

3.1.1 Constructing SrsConsumer

SrsConsumer::SrsConsumer(SrsSource* s, SrsConnection* c)

{

source = s;

conn = c;

paused = false;

jitter = new SrsRtmpJitter();

queue = new SrsMessageQueue();

should_update_source_id = false;

#ifdef SRS_PERF_QUEUE_COND_WAIT

/* Create a condition variable */

mw_wait = st_cond_new();

mw_min_msgs = 0;

mw_duration = 0;

mw_waiting = false;

#endif

}

3.1.2 st_cond_new

_st_cond_t *st_cond_new(void)

{

_st_cond_t *cvar;

cvar = (_st_cond_t *)calloc(1, sizeof(_st_cond_t));

if (cvar) {

/* Initialize the wait queue of this condition variable; it holds the

* threads waiting on the condition variable */

ST_INIT_CLIST(&cvar->wait_q);

}

return cvar;

}

3.1.3 SrsGopCache::dump

/* dump the cached gop to consumer. */

int SrsGopCache::dump(SrsConsumer* consumer, bool atc,

SrsRtmpJitterAlgorithm jitter_algorithm)

{

int ret = ERROR_SUCCESS;

/* Walk every msg cached in gop_cache and put it into the consumer */

std::vector<SrsSharedPtrMessage*>::iterator it;

for (it = gop_cache.begin(); it != gop_cache.end(); ++it) {

SrsSharedPtrMessage* msg = *it;

if ((ret = consumer->enqueue(msg, atc, jitter_algorithm)) != ERROR_SUCCESS) {

...

}

}

return ret;

}

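3.2 Constructing the SrsQueueRecvThread thread

A publish connection has very few messages to send and mostly receives, so it suits a dedicated reader thread, and merged reads improve performance. A play connection is the opposite case: it mostly sends, and the isolated recv thread built here replaces the timeout recv described in the source comment that follows.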

/*

* the recv thread used to replace the timeout recv,

* which hurt performance for the epoll_ctrl is frequently used.

* @see: SrsRtmpConn::playing

* @see: https://github.com/ossrs/srs/issues/217

*/

SrsQueueRecvThread::SrsQueueRecvThread(SrsConsumer* consumer,

SrsRtmpServer* rtmp_sdk, int timeout_ms)

: trd(this, rtmp_sdk, timeout_ms)

{

_consumer = consumer;

rtmp = rtmp_sdk;

recv_error_code = ERROR_SUCCESS;

}

SrsQueueRecvThread has a member trd of type SrsRecvThread, so trd is constructed before the body of the SrsQueueRecvThread constructor runs.

3.2.1 SrsRecvThread constructor

SrsRecvThread::SrsRecvThread(ISrsMessageHandler* msg_handler,

SrsRtmpServer* rtmp_sdk, int timeout_ms)

{

timeout = timeout_ms;

handler = msg_handler;

rtmp = rtmp_sdk;

trd = new SrsReusableThread2("recv", this);

}

The SrsRecvThread constructor in turn constructs an SrsReusableThread2 object, and trd points to it.

3.2.2 SrsReusableThread2 constructor

SrsReusableThread2::SrsReusableThread2(const char* n, ISrsReusableThread2Handler* h,

int64_t interval_us)

{

handler = h;

pthread = new internal::SrsThread(n, this, interval_us, true);

}

Next, an internal::SrsThread object is constructed.

3.2.3 SrsThread constructor

/**

* initialize the thread.

* @param name, human readable name for st debug.

* @param thread_handler, the cycle handler for the thread.

* @param interval_us, the sleep interval when cycle finished.

* @param joinable, if joinable, other thread must stop the thread.

* @remark if joinable, thread never quit itself, or memory leak.

* @see: https://github.com/ossrs/srs/issues/78

* @remark about st debug, see st-1.9/README, _st_iterate_threads_flag

*/

SrsThread::SrsThread(const char* name, ISrsThreadHandler* thread_handler,

int64_t interval_us, bool joinable)

{

_name = name;

handler = thread_handler;

cycle_interval_us = interval_us;

tid = NULL;

loop = false;

really_terminated = true;

_cid = -1;

_joinable = joinable;

disposed = false;

// in start(), the thread cycle method maybe stop and remove the thread itself,

// and the thread start() is waiting for the _cid, and segment fault then.

// @see https://github.com/ossrs/srs/issues/110

// thread will set _cid, callback on_thread_start(), then wait for the can_run signal.

can_run = false;

}

Figure: the relationship between SrsQueueRecvThread, SrsRecvThread, SrsReusableThread2 and SrsThread

Once SrsQueueRecvThread has been constructed, the thread is started.

3.3 SrsQueueRecvThread::start

int SrsQueueRecvThread::start()

{

return trd.start();

}

This function simply calls SrsRecvThread::start.

3.3.1 SrsRecvThread::start

int SrsRecvThread::start()

{

return trd->start();

}

That in turn calls SrsReusableThread2::start.

3.3.2 SrsReusableThread2::start

int SrsReusableThread2::start()

{

return pthread->start();

}

This ends up in SrsThread::start, which calls st_thread_create to create a thread and add it to the run queue, where it waits to be scheduled by the virtual processor.

3.3.3 SrsThread::start

int SrsThread::start()

{

int ret = ERROR_SUCCESS;

if(tid) {

srs_info("thread %s already running.", _name);

return ret;

}

/* Create a thread whose entry function is thread_fun */

if((tid = st_thread_create(thread_fun, this, (_joinable? 1:0), 0)) == NULL){

ret = ERROR_ST_CREATE_CYCLE_THREAD;

srs_error("st_thread_create failed. ret=%d", ret);

return ret;

}

disposed = false;

// we set to loop to true for thread to run.

loop = true;

// wait for cid to ready, for parent thread to get the cid.

while (_cid < 0) {

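/* Sleep here until the thread created above gets scheduled and generates its

* thread context id, _cid. Only then does the parent wake up, leave this loop,

* and set can_run to true so that the new thread can enter its event loop */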

st_usleep(10 * 1000);

}

// now, cycle thread can run.

can_run = true;

return ret;

}

Next, we look at thread_fun, the thread function of the SrsQueueRecvThread thread.

4. SrsQueueRecvThread: SrsThread::thread_fun

void* SrsThread::thread_fun(void* arg)

{

SrsThread* obj = (SrsThread*)arg;

srs_assert(obj);

/* Enter the thread loop */

obj->thread_cycle();

// for valgrind to detect.

SrsThreadContext* ctx = dynamic_cast<SrsThreadContext*>(_srs_context);

if (ctx) {

ctx->clear_cid();

}

st_thread_exit(NULL);

return NULL;

}

4.1 SrsThread::thread_cycle

void SrsThread::thread_cycle()

{

int ret = ERROR_SUCCESS;

/* Generate the thread context id that belongs to this thread */

_srs_context->generate_id();

srs_info("thread %s cycle start", _name);

_cid = _srs_context->get_id();

srs_assert(handler);

/* This calls SrsReusableThread2::on_thread_start */

handler->on_thread_start();

// thread is running now.

really_terminated = false;

// wait for cid to ready, for parent thread to get the cid.

while (!can_run && loop) {

/* Sleep until can_run becomes true */

st_usleep(10 * 1000);

}

while (loop) {

/* This function does nothing */

if ((ret = handler->on_before_cycle()) != ERROR_SUCCESS) {

srs_warn("thread %s on before cycle failed, ignored and retry, ret=%d",

_name, ret);

goto failed;

}

srs_info("thread %s on before cycle success", _name);

/* Calls SrsReusableThread2::cycle */

if ((ret = handler->cycle()) != ERROR_SUCCESS) {

if (!srs_is_client_gracefully_close(ret) && !srs_is_system_control_error(ret))

{

srs_warn("thread %s cycle failed, ignored and retry, ret=%d", _name, ret);

}

goto failed;

}

srs_info("thread %s cycle success", _name);

/* This function can be ignored as well */

if ((ret = handler->on_end_cycle()) != ERROR_SUCCESS) {

srs_warn("thread %s on end cycle failed, ignored and retry, ret=%d",

_name, ret);

goto failed;

}

srs_info("thread %s on end cycle success", _name);

failed:

/* If the loop variable has been set to false, leave the while loop */

if (!loop) {

break;

}

// to improve performance, donot sleep when interval is zero.

// @see: https://github.com/ossrs/srs/issues/237

if (cycle_interval_us != 0) {

st_usleep(cycle_interval_us);

}

}

// really terminated now.

really_terminated = true;

handler->on_thread_stop();

srs_info("thread %s cycle finished", _name);

}

4.1.1 SrsReusableThread2::on_thread_start

void SrsReusableThread2::on_thread_start()

{

handler->on_thread_start();

}

This function simply calls SrsRecvThread::on_thread_start.

4.1.2 SrsRecvThread::on_thread_start

void SrsRecvThread::on_thread_start()

{

// the multiple messages writev improve performance large,

// but the timeout recv will cause 33% sys call performance,

// to use isolate thread to recv, can improve about 33% performance.

// @see https://github.com/ossrs/srs/issues/194

// @see: https://github.com/ossrs/srs/issues/217

rtmp->set_recv_timeout(ST_UTIME_NO_TIMEOUT);

handler->on_thread_start();

}

This function sets the recv timeout of the isolate recv thread to -1 (no timeout) and then calls SrsQueueRecvThread::on_thread_start.

4.1.3 SrsQueueRecvThread::on_thread_start

void SrsQueueRecvThread::on_thread_start()

{

// disable the protocol auto response,

// for the isolate recv thread should never send any messages.

rtmp->set_auto_response(false);

}

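This function stops the protocol layer from automatically responding to client requests (it actually sets auto_response_when_recv to false), because the isolate recv thread should never send any message to the client.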
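The on_thread_start chain in sections 4.1.1 to 4.1.3 is a plain delegation through nested handlers. The sketch below illustrates that shape with a single hypothetical Handler interface; SRS itself uses several distinct handler interfaces, and the log strings merely stand in for the real calls (set_recv_timeout and set_auto_response).

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical handler interface; SRS uses several distinct interfaces.
struct Handler {
    virtual ~Handler() {}
    virtual void on_thread_start() = 0;
};

// Innermost layer: plays the role of SrsQueueRecvThread.
struct QueueRecv : Handler {
    explicit QueueRecv(std::vector<std::string>& log) : log_(log) {}
    void on_thread_start() { log_.push_back("queue: disable auto response"); }
    std::vector<std::string>& log_;
};

// Middle layer: plays the role of SrsRecvThread.
struct Recv : Handler {
    Recv(Handler* inner, std::vector<std::string>& log) : inner_(inner), log_(log) {}
    void on_thread_start() {
        log_.push_back("recv: no recv timeout");
        inner_->on_thread_start();
    }
    Handler* inner_;
    std::vector<std::string>& log_;
};

// Outer layer: plays the role of SrsReusableThread2, a pure forwarder.
struct Reusable : Handler {
    explicit Reusable(Handler* inner) : inner_(inner) {}
    void on_thread_start() { inner_->on_thread_start(); }
    Handler* inner_;
};
```

Calling on_thread_start on the outer object records the recv-level step first and the queue-level step second, the same order in which the three functions above run.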

4.2 SrsReusableThread2::cycle

SrsThread::thread_cycle calls this function to run the event loop.

int SrsReusableThread2::cycle()

{

return handler->cycle();

}

This function in turn calls SrsRecvThread::cycle.

4.2.1 SrsRecvThread::cycle

int SrsRecvThread::cycle()

{

int ret = ERROR_SUCCESS;

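/* Check whether the thread has been interrupted, i.e. whether the loop variable is already false */

while (!trd->interrupted()) {

/* SrsQueueRecvThread::can_handle is called here. It mainly checks

* whether the queue container of SrsQueueRecvThread is empty.

* If it is empty, processing continues below and a new message is

* received; otherwise the thread sleeps. This way the thread receives

* only one message at a time and has it processed */

if (!handler->can_handle()) {

/* When the queue is found empty, the code below receives a message,

* puts it into the queue and wakes the thread waiting for messages,

* which here is the thread that owns the consumer, so that it can process

* the message. Meanwhile this recv thread sleeps here until the consumer

* side has processed the message and removed it from the queue; only then

* does recv stop sleeping and receive a new message */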

st_usleep(timeout * 1000);

continue;

}

SrsCommonMessage* msg = NULL;

/* recv and handle message. */

ret = rtmp->recv_message(&msg);

if (ret == ERROR_SUCCESS) {

ret = handler->handle(msg);

}

if (ret != ERROR_SUCCESS) {

if (!srs_is_client_gracefully_close(ret) && !srs_is_system_control_error(ret))

{

srs_error("thread process message failed. ret=%d", ret);

}

// we use no timeout to recv, should never got any error.

trd->interrupt();

// notice the handler got a recv error.

handler->on_recv_error(ret);

return ret;

}

}

return ret;

}

4.2.2 SrsQueueRecvThread::can_handle

bool SrsQueueRecvThread::can_handle()

{

/* we only recv one message and then process it,

* for the message may cause the thread to stop,

* when stop, the thread is freed, so the messages

* are dropped. */

return empty();

}

4.2.3 SrsQueueRecvThread::empty

bool SrsQueueRecvThread::empty()

{

return queue.empty();

}

This function checks whether the queue container in SrsQueueRecvThread is empty and returns true if it is.

4.2.4 SrsQueueRecvThread::handle

When the loop in SrsRecvThread::cycle finds the queue of SrsQueueRecvThread empty, it receives one message and, on success, immediately calls this function to handle it.

int SrsQueueRecvThread::handle(SrsCommonMessage* msg)

{

/*

* put into queue, the send thread will get and process it,

* @see SrsRtmpConn::process_play_control_msg

*/

queue.push_back(msg);

#ifdef SRS_PERF_QUEUE_COND_WAIT

if (_consumer) {

_consumer->wakeup();

}

#endif

return ERROR_SUCCESS;

}

This function puts the received message into the queue and, when _consumer is not NULL, calls its wakeup function.

4.2.5 SrsConsumer::wakeup

/*

* when the consumer(for player) got msg from recv thread,

* it must be processed for maybe it's a close msg, so the cond

* wait must be wakeup.

*/

void SrsConsumer::wakeup()

{

#ifdef SRS_PERF_QUEUE_COND_WAIT

if (mw_waiting) {

/* Wake the thread sleeping on the mw_wait condition variable */

st_cond_signal(mw_wait);

mw_waiting = false;

}

#endif

}

4.2.6 st_cond_signal

int st_cond_signal(_st_cond_t *cvar)

{

/* The second argument is 0: if several threads are sleeping on this

* condition variable, only the first one is woken */

return _st_cond_signal(cvar, 0);

}

4.2.7 _st_cond_signal

static int _st_cond_signal(_st_cond_t *cvar, int broadcast)

{

_st_thread_t *thread;

_st_clist_t *q;

/* If threads are sleeping on the mw_wait condition variable,

* walk every thread on wait_q */

for (q = cvar->wait_q.next; q != &cvar->wait_q; q = q->next) {

/* Use the wait_links member of _st_thread_t to locate the start address

* of the enclosing _st_thread_t, and return that address */

thread = _ST_THREAD_WAITQ_PTR(q);

/* Check whether the thread is currently waiting on a condition variable */

if (thread->state == _ST_ST_COND_WAIT) {

/* If it is also on the sleep queue, remove it from there */

if (thread->flags & _ST_FL_ON_SLEEPQ)

_ST_DEL_SLEEPQ(thread);

/* Make thread runnable */

thread->state = _ST_ST_RUNNABLE;

_ST_ADD_RUNQ(thread);

if (!broadcast)

break;

}

}

return 0;

}
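The difference between signal and broadcast in the function above can be sketched with ordinary containers. Thread, State and cond_signal are hypothetical simplifications of the State Threads structures. Unlike the original, this sketch returns the number of threads woken so the effect is easy to observe, and it assumes that a woken thread unlinks itself from the wait queue once it resumes, so the signalling side only changes states.

```cpp
#include <cassert>
#include <list>
#include <vector>

enum class State { CondWait, Runnable };

// Hypothetical stand-in for _st_thread_t.
struct Thread {
    int id;
    State state;
};

// Wake waiters on a condition variable's wait queue.
// With broadcast == false only the first waiting thread is made runnable.
// Threads stay linked on wait_q here; threads that are no longer in
// CondWait are skipped, as in the function above.
int cond_signal(std::list<Thread*>& wait_q, std::vector<Thread*>& run_q, bool broadcast)
{
    int woken = 0;
    for (Thread* t : wait_q) {
        assert(t != nullptr);
        if (t->state != State::CondWait) {
            continue;
        }
        t->state = State::Runnable; // make the thread runnable
        run_q.push_back(t);         // and hand it to the scheduler's run queue
        ++woken;
        if (!broadcast) {
            break;
        }
    }
    return woken;
}
```

With three waiters, a plain signal wakes only the first one, and a later broadcast wakes the remaining two.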

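Summary of the SrsQueueRecvThread thread:

- It receives messages from the client and puts each one into the queue container of SrsQueueRecvThread.

- It checks whether a _consumer is waiting for the recv thread to receive a message and, if so, wakes the waiting thread.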
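The two points above amount to a one-slot hand-off between the recv side and the send side. The following single-threaded sketch shows that protocol: can_handle gates reception, handle queues a message and wakes the consumer, and pump drains it. Consumer and QueueRecv are hypothetical stand-ins, with messages reduced to plain ints and the condition variable reduced to a flag.

```cpp
#include <cassert>
#include <deque>

// Hypothetical stand-in for the player's consumer.
struct Consumer {
    Consumer() : waiting(false), wakeups(0) {}
    bool waiting;   // true while the send side is blocked waiting for data
    int wakeups;    // how many times wakeup() actually woke the waiter
    void wakeup() {
        if (waiting) {
            waiting = false;
            ++wakeups;
        }
    }
};

// Hypothetical stand-in for SrsQueueRecvThread; messages are plain ints.
class QueueRecv {
public:
    explicit QueueRecv(Consumer* c) : consumer_(c) {}

    // Receive a new message only once the previous one has been consumed.
    bool can_handle() const { return queue_.empty(); }

    // Called by the recv side: queue the message and wake the send side.
    void handle(int msg) {
        queue_.push_back(msg);
        if (consumer_) {
            consumer_->wakeup();
        }
    }

    // Called by the send side: take the oldest message out of the queue.
    int pump() {
        assert(!queue_.empty());
        int msg = queue_.front();
        queue_.pop_front();
        return msg;
    }

private:
    std::deque<int> queue_;
    Consumer* consumer_;
};
```

After handle queues a message, can_handle stays false until pump removes it, which is why the recv side never holds more than one unprocessed control message at a time.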

4.3 st_thread_exit

When the SrsQueueRecvThread thread leaves its thread loop, st_thread_exit must be called to release the thread's resources so that the thread ends cleanly.

void st_thread_exit(void *retval)

{

_st_thread_t *thread = _ST_CURRENT_THREAD();

thread->retval = retval;

_st_thread_cleanup(thread);

_st_active_count--;

if (thread->term) {

/* Put thread on the zombie queue */

thread->state = _ST_ST_ZOMBIE;

_ST_ADD_ZOMBIEQ(thread);

/* Notify on our termination condition variable */

st_cond_signal(thread->term);

/* Switch context and come back later */

_ST_SWITCH_CONTEXT(thread);

/* Continue the cleanup */

st_cond_destroy(thread->term);

thread->term = NULL;

}

#ifdef DEBUG

_ST_DEL_THREADQ(thread);

#endif

if (!(thread->flags & _ST_FL_PRIMORDIAL))

_st_stack_free(thread->stack);

/* Find another thread to run */

_ST_SWITCH_CONTEXT(thread);

/* Not going to land here. */

}

4.3.1 _st_thread_cleanup

/* Free up all per-thread private data */

void _st_thread_cleanup(_st_thread_t *thread)

{

int key;

for (key = 0; key < key_max; key++) {

if (thread->private_data[key] && _st_destructors[key]) {

(*_st_destructors[key])(thread->private_data[key]);

thread->private_data[key] = NULL;

}

}

}

Back in SrsRtmpConn::playing, the next step is do_playing, which sends the audio/video messages to the playing client.

5. SrsRtmpConn::do_playing

int SrsRtmpConn::do_playing(SrsSource* source, SrsConsumer* consumer,

SrsQueueRecvThread* trd)

{

int ret = ERROR_SUCCESS;

srs_assert(consumer != NULL);

/* Skipped if refer_play is not configured in the config file */

if ((ret = refer->check(req->pageUrl, _srs_config->get_refer_play(req->vhost)))

!= ERROR_SUCCESS) {

...

}

/* initialize other components */

SrsPithyPrint* pprint = SrsPithyPrint::create_rtmp_play();

SrsAutoFree(SrsPithyPrint, pprint);

SrsMessageArray msgs(SRS_PERF_MW_MSGS);

bool user_specified_duration_to_stop = (req->duration > 0);

int64_t starttime = -1;

/* setup the realtime. */

/* If min_latency is not configured in the config file, this returns false by default */

realtime = _srs_config->get_realtime_enabled(req->vhost);

/* setup the mw config.

* when mw_sleep changed, resize the socket send buffer */

mw_enabled = true;

/* If mw_latency is not configured in the config file, this returns 350 by default */

change_mw_sleep(_srs_config->get_mw_sleep_ms(req->vhost));

/* initialize the send_min_interval: defaults to 0.0 when send_min_interval is not configured */

send_min_interval = _srs_config->get_send_min_interval(req->vhost);

/* set the sock options. */

set_sock_options();

while (!disposed) {

/* collect elapse for pithy print. */

pprint->elapse();

/* when source is set to expired, disconnect it. */

if (expired) {

ret = ERROR_USER_DISCONNECT;

return ret;

}

/* to use isolate thread to recv, can improve about 33% performance.

* @see: https://github.com/ossrs/srs/issues/196

* @see: https://github.com/ossrs/srs/issues/217 */

while (!trd->empty()) {

/* Take one message out of the queue container of SrsQueueRecvThread,

* removing it from the queue */

SrsCommonMessage* msg = trd->pump();

/* Process the message */

if ((ret = process_play_control_msg(consumer, msg)) != ERROR_SUCCESS) {

if (!srs_is_system_control_error(ret) &&

!srs_is_client_gracefully_close(ret)) {

...

}

return ret;

}

}

/* quit when recv thread error. */

if ((ret = trd->error_code()) != ERROR_SUCCESS) {

if (!srs_is_client_gracefully_close(ret) && !srs_is_system_control_error(ret))

{

...

}

return ret;

}

#ifdef SRS_PERF_QUEUE_COND_WAIT

/* for send wait time debug */

srs_verbose("send thread now=%"PRId64"us, wait %dms",

srs_update_system_time_ms(), mw_sleep);

/* wait for message to incoming.

* @see https://github.com/ossrs/srs/issues/251

* @see https://github.com/ossrs/srs/issues/257 */

if (realtime) {

/* for realtime, min required msgs is 0, send when got one+ msgs. */

consumer->wait(0, mw_sleep);

} else {

/* for no-realtime, got some msgs then send. */

consumer->wait(SRS_PERF_MW_MIN_MSGS, mw_sleep);

}

/* for send wait time debug. */

srs_verbose("send thread now=%"PRId64"us wakeup", srs_update_system_time_ms());

#endif

/* get messages from consumer.

* each msg in msgs.msgs must be free, for the SrsMessageArray never free them.

* @remark when enable send_min_interval, only fetch one message a time. */

int count = (send_min_interval > 0) ? 1 : 0;

if ((ret = consumer->dump_packets(&msgs, count)) != ERROR_SUCCESS) {

...

}

/* reportable */

if (pprint->can_print()) {

kbps->sample();

}

/* we use wait timeout to get messages,

* for min latency event no message incoming,

* so the count maybe zero. */

if (count > 0) {

...

}

if (count <= 0) {

#ifndef SRS_PERF_QUEUE_COND_WAIT

srs_info("mw sleep %dms for no msg", mw_sleep);

st_usleep(mw_sleep * 1000);

#else

srs_verbose("mw wait %dms and got nothing.", mw_sleep);

#endif

/* ignore when nothing got. */

continue;

}

srs_info("got %d msgs, min=%d, mw=%d", count, SRS_PERF_MW_MIN_MSGS, mw_sleep);

/* only when user specifies the duration,

* we start to collect the duration for each message. */

if (user_specified_duration_to_stop) {

for (int i = 0; i < count; i++) {

SrsSharedPtrMessage* msg = msgs.msgs[i];

/* foreach msg, collect the duration.

* @remark: never use msg when sent it,

* for the protocol sdk will free it. */

if (starttime < 0 || starttime > msg->timestamp) {

starttime = msg->timestamp;

}

duration += msg->timestamp - starttime;

starttime = msg->timestamp;

}

}

/* sendout messages, all messages are freed by send_and_free_messages().

* no need to assert msg, for the rtmp will assert it. */

if (count > 0 &&

(ret = rtmp->send_and_free_messages(msgs.msgs, count, res->stream_id))

!= ERROR_SUCCESS) {

if (!srs_is_client_gracefully_close(ret)) {

...

}

return ret;

}

/* if duration specified, and exceed it, stop play live.

* @see: https://github.com/ossrs/srs/issues/45 */

if (user_specified_duration_to_stop) {

if (duration >= (int64_t)req->duration) {

ret = ERROR_RTMP_DURATION_EXCEED;

srs_trace("stop live for duration exceed. ret=%d", ret);

return ret;

}

}

/* apply the minimal interval for delivery stream in ms. */

if (send_min_interval > 0) {

st_usleep((int64_t)(send_min_interval * 1000));

}

}

return ret;

}
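The duration bookkeeping inside the send loop above is easy to misread, so here is a minimal sketch of just that part. PlayClock, collect_duration and duration_exceeded are hypothetical names; the three assignments inside the loop follow the listing, while the surrounding types are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical playback clock: total played duration plus the last timestamp.
struct PlayClock {
    PlayClock() : starttime(-1), duration(0) {}
    std::int64_t starttime;  // timestamp of the previous message, -1 before the first
    std::int64_t duration;   // accumulated played time
};

// Fold one batch of message timestamps into the clock, using the same
// three steps as the loop in do_playing.
void collect_duration(PlayClock& clock, const std::vector<std::int64_t>& timestamps)
{
    for (std::int64_t ts : timestamps) {
        // First message, or the timestamps jumped backwards: restart from ts.
        if (clock.starttime < 0 || clock.starttime > ts) {
            clock.starttime = ts;
        }
        clock.duration += ts - clock.starttime;
        clock.starttime = ts;
    }
    assert(clock.duration >= 0);
}

// True once the accumulated duration reaches the requested limit.
bool duration_exceeded(const PlayClock& clock, std::int64_t requested)
{
    return clock.duration >= requested;
}
```

Because each step adds only the gap to the previous message, a backwards jump in timestamps contributes nothing instead of a negative amount, so the accumulated duration never decreases.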

5.1 Constructing SrsMessageArray

The class to auto free the shared ptr message array. When some messages are needed, for instance from the consumer queue, create a message array whose msgs can be used to accept the messages, then send each message and set it to NULL.

@remark: the user must free all msgs in the array, for the SRS2.0 protocol stack provides an API to send messages, @see send_and_free_messages

/* create msg array, initialize array to NULL ptrs. */

SrsMessageArray::SrsMessageArray(int max_msgs)

{

srs_assert(max_msgs > 0);

/* when user already send the msg in msgs, please set to NULL,

* for instance, msg= msgs.msgs[i], msgs.msgs[i]=NULL, send(msg),

* where send(msg) will always send and free it. */

msgs = new SrsSharedPtrMessage*[max_msgs];

max = max_msgs;

/* zero initialize the message array. */

zero(max_msgs);

}
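The ownership rule described above (take a message out of its slot, set the slot to NULL, then hand it to a send call that frees it) can be sketched as follows. `Msg` and `MessageArray` are hypothetical stand-ins, and freeing leftover entries in the destructor is an assumption of this sketch, not a statement about what SRS's own destructor does.

```cpp
#include <cassert>

struct Msg { int id; };  // hypothetical stand-in for SrsSharedPtrMessage

// Hypothetical sketch of a pointer array whose slots start out as null.
class MessageArray {
public:
    Msg** msgs;
    int max;

    explicit MessageArray(int max_msgs) : msgs(nullptr), max(max_msgs) {
        assert(max_msgs > 0);
        msgs = new Msg*[max_msgs]();  // "()" value-initializes every slot to nullptr
    }

    ~MessageArray() {
        // Assumption of this sketch: anything still in the array is freed here.
        for (int i = 0; i < max; i++) {
            delete msgs[i];
        }
        delete[] msgs;
    }

    MessageArray(const MessageArray&) = delete;
    MessageArray& operator=(const MessageArray&) = delete;

    // Move ownership out of slot i; the slot becomes null so it is never freed twice.
    Msg* take(int i) {
        assert(i >= 0 && i < max);
        Msg* m = msgs[i];
        msgs[i] = nullptr;
        return m;
    }

    // Number of slots that still own a message.
    int pending() const {
        int n = 0;
        for (int i = 0; i < max; i++) {
            if (msgs[i]) n++;
        }
        return n;
    }
};
```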

5.2 SrsRtmpConn::process_play_control_msg

int SrsRtmpConn::process_play_control_msg(SrsConsumer* consumer, SrsCommonMessage* msg)

{

int ret = ERROR_SUCCESS;

if (!msg) {

return ret;

}

SrsAutoFree(SrsCommonMessage, msg);

if (!msg->header.is_amf0_command() && !msg->header.is_amf3_command()) {

return ret;

}

SrsPacket* pkt = NULL;

if ((ret = rtmp->decode_message(msg, &pkt)) != ERROR_SUCCESS) {

return ret;

}

SrsAutoFree(SrsPacket, pkt);

SrsCloseStreamPacket* close = dynamic_cast<SrsCloseStreamPacket*>(pkt);

if (close) {

ret = ERROR_CONTROL_RTMP_CLOSE;

srs_trace("system control message: rtmp close stream. ret=%d", ret);

return ret;

}

/* call msg,

* support response null first,

* @see https://github.com/ossrs/srs/issues/106

* TODO: FIXME: response in right way, or forward in edge mode. */

SrsCallPacket* call = dynamic_cast<SrsCallPacket*>(pkt);

if (call) {

/* only response it when transaction id not zero,

* for the zero means donot need response. */

if (call->transaction_id > 0) {

SrsCallResPacket* res = new SrsCallResPacket(call->transaction_id);

res->command_object = SrsAmf0Any::null();

res->response = SrsAmf0Any::null();

if ((ret = rtmp->send_and_free_packet(res, 0)) != ERROR_SUCCESS) {

if (!srs_is_system_control_error(ret) &&

!srs_is_client_gracefully_close(ret)) {

...

}

return ret;

}

}

return ret;

}

/* pause */

SrsPausePacket* pause = dynamic_cast<SrsPausePacket*>(pkt);

if (pause) {

if ((ret = rtmp->on_play_client_pause(res->stream_id, pause->is_pause))

!= ERROR_SUCCESS) {

return ret;

}

if ((ret = consumer->on_play_client_pause(pause->is_pause)) != ERROR_SUCCESS) {

srs_error("consumer process play client pause failed. ret=%d", ret);

return ret;

}

srs_info("process pause success, is_pause=%d, time=%d.",

pause->is_pause, pause->time_ms);

return ret;

}

// other msg.

srs_info("ignore all amf0/amf3 command except pause and video control.");

return ret;

}

5.3 SrsConsumer::wait

#ifdef SRS_PERF_QUEUE_COND_WAIT

/*

* wait for messages incoming, at least nb_msgs and in duration.

* @param nb_msgs the messages count to wait.

* @param duration the message duration to wait.

*/

void SrsConsumer::wait(int nb_msgs, int duration)

{

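/* when a pause message arrives from the client, this flag is set to true;

* each call then sleeps for one pulse interval and returns, so the thread idles until the client resumes */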

if (paused) {

st_usleep(SRS_CONSTS_RTMP_PULSE_TIMEOUT_US);

return;

}

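/* the value passed in is 8, the minimum number of audio/video messages to wait for (the check below uses >, so more than 8 are required) */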

mw_min_msgs = nb_msgs;

mw_duration = duration;

/* total duration, in ms, of all audio/video currently cached */

int duration_ms = queue->duration();

/* true when the number of cached audio/video messages exceeds the merged-write minimum */

bool match_min_msgs = queue->size() > mw_min_msgs;

/* when duration ok, signal to flush. */

if (match_min_msgs && duration_ms > mw_duration) {

return;

}

/* the enqueue will notify this cond. */

mw_waiting = true;

/* use cond block wait for high performance mode. */

st_cond_wait(mw_wait);

}

#endif
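The early-return test in SrsConsumer::wait boils down to one predicate over the queue state. A minimal sketch, using a hypothetical free function and plain integers in place of the queue; the numbers in the usage note are illustrative only:

```cpp
#include <cassert>

// Hypothetical predicate (not an SRS function): true when the consumer can
// return immediately instead of blocking on the condition variable.
bool should_flush(int queued_msgs, int queued_ms, int min_msgs, int min_ms) {
    assert(min_msgs >= 0 && min_ms >= 0);
    // Both conditions must hold: more than min_msgs messages are queued
    // AND they span more than min_ms of media time.
    return queued_msgs > min_msgs && queued_ms > min_ms;
}
```

With a minimum of 8 messages and a hypothetical 350 ms window, a queue holding 9 messages that span 400 ms is flushed right away, while 9 messages spanning 100 ms, or exactly 8 messages, still wait.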

5.3.1 st_cond_wait

int st_cond_wait(_st_cond_t *cvar)

{

return st_cond_timedwait(cvar, ST_UTIME_NO_TIMEOUT);

}

5.3.2 st_cond_timedwait

int st_cond_timedwait(_st_cond_t *cvar, st_utime_t timeout)

{

_st_thread_t *me = _ST_CURRENT_THREAD();

int rv;

if (me->flags & _ST_FL_INTERRUPT) {

me->flags &= ~_ST_FL_INTERRUPT;

errno = EINTR;

return -1;

}

/* Put caller thread on the condition variable's wait queue */

me->state = _ST_ST_COND_WAIT;

ST_APPEND_LINK(&me->wait_links, &cvar->wait_q);

if (timeout != ST_UTIME_NO_TIMEOUT)

_ST_ADD_SLEEPQ(me, timeout);

_ST_SWITCH_CONTEXT(me);

ST_REMOVE_LINK(&me->wait_links);

rv = 0;

if (me->flags & _ST_FL_TIMEDOUT) {

me->flags &= ~_ST_FL_TIMEDOUT;

errno = ETIME;

rv = -1;

}

if (me->flags & _ST_FL_INTERRUPT) {

me->flags &= ~_ST_FL_INTERRUPT;

errno = EINTR;

rv = -1;

}

return rv;

}

5.4 SrsConsumer::dump_packets

/*

* get packets in consumer queue.

* @param msgs the msgs array to dump packets to send.

* @param count the count in array, input and output param.

* @remark user can specify the count to get specified msgs; 0 to get all if possible.

*/

int SrsConsumer::dump_packets(SrsMessageArray* msgs, int& count)

{

int ret = ERROR_SUCCESS;

srs_assert(count >= 0);

srs_assert(msgs->max > 0);

/* the count used as input to reset the max if positive. */

int max = count ? srs_min(count, msgs->max) : msgs->max;

/* the count specifies the max acceptable count,

* here maybe 1+, and we must set to 0 when got nothing. */

count = 0;

if (should_update_source_id) {

srs_trace("update source_id=%d[%d]", source->source_id(), source->source_id());

should_update_source_id = false;

}

/* paused, return nothing. */

if (paused) {

return ret;

}

/* pump msgs from queue. */

if ((ret = queue->dump_packets(max, msgs->msgs, count)) != ERROR_SUCCESS) {

return ret;

}

return ret;

}

5.4.1 SrsMessageQueue::dump_packets

/*

* get packets in consumer queue.

* @pmsgs SrsSharedPtrMessage*[], used to store the msgs, user must alloc it.

* @count the count in array, output param.

* @max_count the max count to dequeue, must be positive.

*/

int SrsMessageQueue::dump_packets(int max_count, SrsSharedPtrMessage** pmsgs, int& count)

{

int ret = ERROR_SUCCESS;

/* number of audio/video messages currently cached */

int nb_msgs = (int)msgs.size();

if (nb_msgs <= 0) {

return ret;

}

srs_assert(max_count > 0);

count = srs_min(max_count, nb_msgs);

/* address of the first element of msgs, the array that caches all audio/video messages */

SrsSharedPtrMessage** omsgs = msgs.data();

/* copy count messages from omsgs into pmsgs */

for (int i = 0; i < count; i++) {

pmsgs[i] = omsgs[i];

}

SrsSharedPtrMessage* last = omsgs[count - 1];

av_start_time = last->timestamp;

if (count >= nb_msgs) {

/* the pmsgs is big enough and clear msgs at most time. */

msgs.clear();

} else {

/* erase some vector elements may cause memory copy,

* maybe can use more efficient vector.swap to avoid copy.

* @remark for the pmsgs is big enough, for instance, SRS_PERF_MW_MSGS 128,

* the rtmp play client will get 128 msgs once, so this branch rarely executes. */

msgs.erase(msgs.begin(), msgs.begin() + count);

}

return ret;

}
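The dequeue step can be tried in isolation with a plain std::vector. `dump_front` is a hypothetical stand-in for SrsMessageQueue::dump_packets that uses int payloads instead of message pointers and returns the count instead of writing it through a reference.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical sketch: copies up to max_count items from the front of
// `queue` into `out`, removes them from `queue`, and returns how many moved.
// `out` must have room for at least max_count items.
int dump_front(std::vector<int>& queue, int max_count, int* out) {
    assert(max_count > 0);

    int nb_msgs = static_cast<int>(queue.size());
    if (nb_msgs <= 0) {
        return 0;
    }

    int count = std::min(max_count, nb_msgs);
    std::copy(queue.begin(), queue.begin() + count, out);

    if (count >= nb_msgs) {
        // Common case: the output array is large enough to drain everything.
        queue.clear();
    } else {
        // Rare case: only the front is removed, the tail shifts down.
        queue.erase(queue.begin(), queue.begin() + count);
    }
    return count;
}
```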

5.5 SrsRtmpServer::send_and_free_messages

/*

* send the RTMP message and always free it.

* user must never free or use the msg after this method,

* for it will always free the msg.

* @param msgs, the msgs to send out, never be NULL.

* @param nb_msgs, the size of msgs to send out.

* @param stream_id, the stream id of packet to send over, 0 for control message.

*

* @remark, performance issue, to support 6K+ 250kbps client,

* @see https://github.com/ossrs/srs/issues/194

*/

int SrsRtmpServer::send_and_free_messages(SrsSharedPtrMessage** msgs,

int nb_msgs, int stream_id)

{

return protocol->send_and_free_messages(msgs, nb_msgs, stream_id);

}

5.5.1 SrsProtocol::send_and_free_messages

/**

* send the RTMP message and always free it.

* user must never free or use the msg after this method,

* for it will always free the msg.

* @param msgs, the msgs to send out, never be NULL.

* @param nb_msgs, the size of msgs to send out.

* @param stream_id, the stream id of packet to send over, 0 for control message.

*/

int SrsProtocol::send_and_free_messages(SrsSharedPtrMessage** msgs,

int nb_msgs, int stream_id)

{

/* always not NULL msg. */

srs_assert(msgs);

srs_assert(nb_msgs > 0);

/* update the stream id in header. */

for (int i = 0; i < nb_msgs; i++) {

SrsSharedPtrMessage* msg = msgs[i];

if (!msg) {

continue;

}

/* check perfer cid and stream,

* when one msg stream id is ok, ignore left. */

if (msg->check(stream_id)) {

break;

}

}

/* donot use the auto free to free the msg,

* for performance issue. */

int ret = do_send_messages(msgs, nb_msgs);

/* once the send attempt returns, free the memory held by all of these messages, whether or not the send succeeded */

for (int i = 0; i < nb_msgs; i++) {

SrsSharedPtrMessage* msg = msgs[i];

srs_freep(msg);

}

/* donot flush when send failed */

if (ret != ERROR_SUCCESS) {

return ret;

}

/* flush messages in manual queue */

if ((ret = manual_response_flush()) != ERROR_SUCCESS) {

return ret;

}

print_debug_info();

return ret;

}

5.5.2 SrsSharedPtrMessage::check

/**

* check perfer cid and stream id.

* @return whether stream id already set.

*/

bool SrsSharedPtrMessage::check(int stream_id)

{

/* we donot use the complex basic header,

* ensure the basic header is 1bytes */

if (ptr->header.perfer_cid < 2) {

srs_info("change the chunk_id=%d to default=%d",

ptr->header.perfer_cid, RTMP_CID_ProtocolControl);

ptr->header.perfer_cid = RTMP_CID_ProtocolControl;

}

/* we assume that the stream_id in a group must be the same. */

if (this->stream_id == stream_id) {

return true;

}

this->stream_id = stream_id;

return false;

}

5.5.3 SrsProtocol::do_send_messages

/*

* how many msgs can be send entirely.

* for play clients to get msgs then totally send out.

* for the mw sleep set to 1800, the msgs is about 133.

* @remark, recommend to 128.

*/

#define SRS_PERF_MW_MSGS 128

/*

* for performance issue,

* the c0c3 cache, @see https://github.com/ossrs/srs/issues/194

* c0c3 cache for multiple messages for each connections.

* each c0 <= 16bytes, suppose the chunk size is 64k,

* each message send in a chunk which needs only a c0 header,

* so the c0c3 cache should be (SRS_PERF_MW_MSGS * 16)

*

* @remark, SRS will try another loop when c0c3 cache dry, for we cannot realloc it.

* so we use larger c0c3 cache, that is (SRS_PERF_MW_MSGS * 32)

*/

#define SRS_CONSTS_C0C3_HEADERS_MAX (SRS_PERF_MW_MSGS * 32)

int SrsProtocol::do_send_messages(SrsSharedPtrMessage** msgs, int nb_msgs)

{

int ret = ERROR_SUCCESS;

#ifdef SRS_PERF_COMPLEX_SEND

/*

* out_iovs:

* cache for multiple message send,

* initialize to iovec[SRS_CONSTS_IOVS_MAX] and realloc when consumed,

* it's ok to realloc the iovs cache, for all ptr is ok.

*/

int iov_index = 0;

iovec* iovs = out_iovs + iov_index;

/*

* out_c0c3_caches:

* output header cache.

* used for type0, 11bytes(or 15bytes with extended timestamp) header.

* or for type3, 1bytes(or 5bytes with extended timestamp) header.

* the c0c3 caches must use unit SRS_CONSTS_RTMP_MAX_FMT0_HEADER_SIZE bytes.

*

* @remark, the c0c3 cache cannot be realloc.

*/

int c0c3_cache_index = 0;

char* c0c3_cache = out_c0c3_caches + c0c3_cache_index;

/* try to send use the c0c3 header cache,

* if cache is consumed, try another loop. */

for (int i = 0; i < nb_msgs; i++) {

SrsSharedPtrMessage* msg = msgs[i];

if (!msg) {

continue;

}

/* ignore empty message. */

if (!msg->payload || msg->size <= 0) {

srs_info("ignore empty message.");

continue;

}

/* p set to current write position,

* it's ok when payload is NULL and size is 0. */

char* p = msg->payload;

char* pend = msg->payload + msg->size;

/* always write the header even if the payload is empty. */

while (p < pend) {

/* always has header */

int nb_cache = SRS_CONSTS_C0C3_HEADERS_MAX - c0c3_cache_index;

int nbh = msg->chunk_header(c0c3_cache, nb_cache, p == msg->payload);

srs_assert(nbh > 0);

/* header iov */

iovs[0].iov_base = c0c3_cache;

iovs[0].iov_len = nbh;

/* payload iov */

int payload_size = srs_min(out_chunk_size, (int)(pend - p));

iovs[1].iov_base = p;

iovs[1].iov_len = payload_size;

/* consume sendout bytes. */

p += payload_size;

/* realloc the iovs if exceed,

* for we donot know how many messages maybe to send entirely,

* we just alloc the iovs, it's ok. */

if (iov_index >= nb_out_iovs - 2) {

srs_warn("resize iovs %d => %d, max_msgs=%d",

nb_out_iovs, nb_out_iovs + SRS_CONSTS_IOVS_MAX,

SRS_PERF_MW_MSGS);

nb_out_iovs += SRS_CONSTS_IOVS_MAX;

int realloc_size = sizeof(iovec) * nb_out_iovs;

out_iovs = (iovec*)realloc(out_iovs, realloc_size);

}

/* to next pair of iovs */

iov_index += 2;

iovs = out_iovs + iov_index;

/* to next c0c3 header cache */

c0c3_cache_index += nbh;

c0c3_cache = out_c0c3_caches + c0c3_cache_index;

/* the cache header should never be realloc again,

* for the ptr is set to iovs, so we just warn user to set larger

* and use another loop to send again. */

int c0c3_left = SRS_CONSTS_C0C3_HEADERS_MAX - c0c3_cache_index;

/* the remaining space cannot hold one more rtmp header */

if (c0c3_left < SRS_CONSTS_RTMP_MAX_FMT0_HEADER_SIZE) {

/* only warn once for a connection. */

if (!warned_c0c3_cache_dry) {

srs_warn("c0c3 cache header too small, recoment to %d",

SRS_CONSTS_C0C3_HEADERS_MAX +

SRS_CONSTS_RTMP_MAX_FMT0_HEADER_SIZE);

/* whether warned user to increase the c0c3 header cache. */

warned_c0c3_cache_dry = true;

}

/* once the c0c3 cache is used up, send out all messages, then reset c0c3 */

/* when c0c3 cache dry,

* sendout all messages and reset the cache, then send again. */

if ((ret = do_iovs_send(out_iovs, iov_index)) != ERROR_SUCCESS) {

return ret;

}

/* reset caches, while these cache ensure

* at least we can sendout a chunk */

iov_index = 0;

iovs = out_iovs + iov_index;

c0c3_cache_index = 0;

c0c3_cache = out_c0c3_caches + c0c3_cache_index;

}

}

}

/* maybe the iovs already sendout when c0c3 cache dry,

* so just ignore when no iovs to send. */

if (iov_index <= 0) {

return ret;

}

srs_info("mw %d msgs in %d iovs, max_msgs=%d, nb_out_iovs=%d",

nb_msgs, iov_index, SRS_PERF_MW_MSGS, nb_out_iovs);

return do_iovs_send(out_iovs, iov_index);

#else

/* try to send use the c0c3 header cache,

* if cache is consumed, try another loop. */

for (int i = 0; i < nb_msgs; i++) {

SrsSharedPtrMessage* msg = msgs[i];

if (!msg) {

continue;

}

// ignore empty message.

if (!msg->payload || msg->size <= 0) {

srs_info("ignore empty message.");

continue;

}

// p set to current write position,

// it's ok when payload is NULL and size is 0.

char* p = msg->payload;

char* pend = msg->payload + msg->size;

// always write the header even if the payload is empty.

while (p < pend) {

// for simple send, send each chunk one by one

iovec* iovs = out_iovs;

char* c0c3_cache = out_c0c3_caches;

int nb_cache = SRS_CONSTS_C0C3_HEADERS_MAX;

// always has header

int nbh = msg->chunk_header(c0c3_cache, nb_cache, p == msg->payload);

srs_assert(nbh > 0);

// header iov

iovs[0].iov_base = c0c3_cache;

iovs[0].iov_len = nbh;

// payload iov

int payload_size = srs_min(out_chunk_size, pend - p);

iovs[1].iov_base = p;

iovs[1].iov_len = payload_size;

// consume sendout bytes.

p += payload_size;

if ((ret = skt->writev(iovs, 2, NULL)) != ERROR_SUCCESS) {

if (!srs_is_client_gracefully_close(ret)) {

srs_error("send packet with writev failed. ret=%d", ret);

}

return ret;

}

}

}

return ret;

#endif

}
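The core of both branches is the same: each message payload is cut into chunks of at most out_chunk_size bytes, and every chunk becomes a (header, payload) pair of iovecs. A minimal sketch under simplifying assumptions: `split_into_chunks` is a hypothetical helper, and each header is reduced to the 1-byte basic header (fmt 0 for the first chunk, fmt 3 for the rest) instead of the full c0/c3 header.

```cpp
#include <sys/uio.h>   // struct iovec (POSIX)
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical sketch: builds (header, payload) iovec pairs for one message.
// `headers` is sized up front and never resized afterwards, because the
// iovecs keep raw pointers into it (the same reason the c0c3 cache in
// do_send_messages must not be reallocated).
std::vector<iovec> split_into_chunks(char* payload, int size, int chunk_size,
                                     int cid, std::vector<char>& headers) {
    assert(chunk_size > 0 && size >= 0);

    int nb_chunks = (size + chunk_size - 1) / chunk_size;
    headers.assign(nb_chunks, '\0');

    std::vector<iovec> iovs;
    iovs.reserve(nb_chunks * 2);

    char* p = payload;
    char* pend = payload + size;
    for (int i = 0; p < pend; i++) {
        // First chunk carries fmt 0 (0x00), later chunks fmt 3 (0xC0).
        bool first = (p == payload);
        headers[i] = static_cast<char>((first ? 0x00 : 0xC0) | (cid & 0x3F));

        iovec header;
        header.iov_base = &headers[i];
        header.iov_len = 1;

        int payload_size = std::min(chunk_size, static_cast<int>(pend - p));
        iovec body;
        body.iov_base = p;
        body.iov_len = payload_size;

        iovs.push_back(header);
        iovs.push_back(body);
        p += payload_size;
    }
    return iovs;
}
```

A 300-byte payload with a 128-byte chunk size yields three pairs (six iovecs) carrying 128, 128 and 44 payload bytes.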

5.5.4 SrsSharedPtrMessage::chunk_header

/*

* generate the chunk header to cache.

* @return the size of header.

*/

int SrsSharedPtrMessage::chunk_header(char* cache, int nb_cache, bool c0)

{

/* c0 is true: generate the header for the first chunk of this message,

* so the fmt in the chunk basic header is 0 */

if (c0) {

return srs_chunk_header_c0(

ptr->header.perfer_cid, timestamp, ptr->header.payload_length,

ptr->header.message_type, stream_id,

cache, nb_cache);

/* otherwise this is not the first chunk of the message,

* so fmt is 3 */

} else {

return srs_chunk_header_c3(

ptr->header.perfer_cid, timestamp,

cache, nb_cache);

}

}

5.5.5 srs_chunk_header_c0

Assume the header to generate is for the first chunk of the message.

/*

* max rtmp header size.

* 1byte basic header

* 11bytes message header

* 4bytes timestamp header

* that is, 1+11+4 = 16bytes

*/

#define SRS_CONSTS_RTMP_MAX_FMT0_HEADER_SIZE 16

/*

* generate the c0 chunk header for msg.

* @param cache, the cache to write header.

* @param nb_cache, the size of cache.

* @return the size of header. 0 if cache not enough.

*/

int srs_chunk_header_c0(

int perfer_cid, u_int32_t timestamp, int32_t payload_length,

int8_t message_type, int32_t stream_id,

char* cache, int nb_cache)

{

/* to directly set the field. */

char* pp = NULL;

/* generate the header */

char* p = cache;

/* no header */

if (nb_cache < SRS_CONSTS_RTMP_MAX_FMT0_HEADER_SIZE) {

return 0;

}

/* write new chunk stream header, fmt is 0 */

*p++ = 0x00 | (perfer_cid & 0x3F);

/* chunk message header, 11 bytes

* timestamp, 3bytes, big-endian */

if (timestamp < RTMP_EXTENDED_TIMESTAMP) {

pp = (char*)&timestamp;

*p++ = pp[2];

*p++ = pp[1];

*p++ = pp[0];

} else {

*p++ = 0xFF;

*p++ = 0xFF;

*p++ = 0xFF;

}

/* message_length, 3bytes, big-endian */

pp = (char*)&payload_length;

*p++ = pp[2];

*p++ = pp[1];

*p++ = pp[0];

/* message_type, 1byte */

*p++ = message_type;

/* stream_id, 4bytes, little-endian */

pp = (char*)&stream_id;

*p++ = pp[0];

*p++ = pp[1];

*p++ = pp[2];

*p++ = pp[3];

/*

* for c0

* chunk extended timestamp header, 0 or 4 bytes, big-endian

*

* for c3:

* chunk extended timestamp header, 0 or 4 bytes, big-endian

* Extended Timestamp

* This field is transmitted only when the normal time stamp in the

* chunk message header is set to 0x00ffffff. If normal time stamp is

* set to any value less than 0x00ffffff, this field MUST NOT be

* present. This field MUST NOT be present if the timestamp field is not

* present. Type 3 chunks MUST NOT have this field.

* adobe changed for Type3 chunk:

* FMLE always sendout the extended-timestamp,

* must send the extended-timestamp to FMS,

* must send the extended-timestamp to flash-player.

* @see: ngx_rtmp_prepare_message

* @see: http://blog.csdn.net/win_lin/article/details/13363699

* TODO: FIXME: extract to outer.

*/

if (timestamp >= RTMP_EXTENDED_TIMESTAMP) {

pp = (char*)&timestamp;

*p++ = pp[3];

*p++ = pp[2];

*p++ = pp[1];

*p++ = pp[0];

}

/* always has header */

return p - cache;

}
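The function above reads the integer fields through a char pointer (pp[2], pp[1], pp[0]), which produces big-endian output only on a little-endian host. The same fmt 0 layout can be written with shifts so that host byte order does not matter. A sketch under stated assumptions: `write_fmt0_header` and `kExtendedTimestamp` are hypothetical names, and 0xFFFFFF is assumed to match RTMP_EXTENDED_TIMESTAMP.

```cpp
#include <cassert>
#include <cstdint>

// Assumed to equal RTMP_EXTENDED_TIMESTAMP.
const uint32_t kExtendedTimestamp = 0xFFFFFF;

// Hypothetical, endian-independent sketch of a fmt 0 chunk header writer.
// Returns the header size in bytes, or 0 if the cache is too small.
int write_fmt0_header(int cid, uint32_t timestamp, uint32_t payload_length,
                      uint8_t message_type, uint32_t stream_id,
                      uint8_t* cache, int nb_cache) {
    assert(cache != nullptr);
    if (nb_cache < 16) {  // 1 basic + 11 message header + 4 extended timestamp
        return 0;
    }
    uint8_t* p = cache;

    // basic header: fmt 0 in the top two bits, chunk stream id in the low six
    *p++ = static_cast<uint8_t>(0x00 | (cid & 0x3F));

    // timestamp, 3 bytes, big-endian (0xFFFFFF signals an extended timestamp)
    uint32_t ts = timestamp < kExtendedTimestamp ? timestamp : kExtendedTimestamp;
    *p++ = static_cast<uint8_t>(ts >> 16);
    *p++ = static_cast<uint8_t>(ts >> 8);
    *p++ = static_cast<uint8_t>(ts);

    // message length, 3 bytes, big-endian
    *p++ = static_cast<uint8_t>(payload_length >> 16);
    *p++ = static_cast<uint8_t>(payload_length >> 8);
    *p++ = static_cast<uint8_t>(payload_length);

    // message type, 1 byte
    *p++ = message_type;

    // message stream id, 4 bytes, little-endian
    *p++ = static_cast<uint8_t>(stream_id);
    *p++ = static_cast<uint8_t>(stream_id >> 8);
    *p++ = static_cast<uint8_t>(stream_id >> 16);
    *p++ = static_cast<uint8_t>(stream_id >> 24);

    // extended timestamp, 4 bytes, big-endian, only when needed
    if (timestamp >= kExtendedTimestamp) {
        *p++ = static_cast<uint8_t>(timestamp >> 24);
        *p++ = static_cast<uint8_t>(timestamp >> 16);
        *p++ = static_cast<uint8_t>(timestamp >> 8);
        *p++ = static_cast<uint8_t>(timestamp);
    }
    return static_cast<int>(p - cache);
}
```

The header is 12 bytes for ordinary timestamps and 16 bytes once the timestamp reaches 0xFFFFFF.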

5.5.6 srs_chunk_header_c3

This case generates an rtmp header whose fmt is 3.

/*

* max rtmp header size:

* 1bytes basic header,

* 4bytes timestamp header,

* that is, 1+4=5bytes.

*/

/* always use fmt0 as cache. */

#define SRS_CONSTS_RTMP_MAX_FMT3_HEADER_SIZE 5

int srs_chunk_header_c3(

int perfer_cid, u_int32_t timestamp,

char* cache, int nb_cache)

{

/* to directly set the field */

char* pp = NULL;

/* generate the header */

char* p = cache;

/* no header */

if (nb_cache < SRS_CONSTS_RTMP_MAX_FMT3_HEADER_SIZE) {

return 0;

}

/*

* write no message header chunk stream, fmt is 3

* @remark, if perfer_cid > 0x3F, that is, use 2B/3B chunk header,

* SRS will rollback to 1B chunk header.

*/

*p++ = 0xc0 | (perfer_cid & 0x3F);

if (timestamp >= RTMP_EXTENDED_TIMESTAMP) {

pp = (char*)&timestamp;

*p++ = pp[3];

*p++ = pp[2];

*p++ = pp[1];

*p++ = pp[0];

}

/* always has header */

return p - cache;

}

5.5.7 do_iovs_send

Once the for loop in do_send_messages has cached all audio/video messages destined for the client in out_iovs, do_iovs_send is called to send them all out.

/*

* send iovs. send multiple times if exceed limits.

*/

int SrsProtocol::do_iovs_send(iovec* iovs, int size)

{

/* skt: underlayer socket object, send/recv bytes. */

return srs_write_large_iovs(skt, iovs, size);

}

5.5.8 srs_write_large_iovs

/* write large numbers of iovs. */

int srs_write_large_iovs(ISrsProtocolReaderWriter* skt, iovec* iovs,

int size, ssize_t* pnwrite)

{

int ret = ERROR_SUCCESS;

/* the limits of writev iovs.

* for srs-librtmp, @see https://github.com/ossrs/srs/issues/213 */

#ifndef _WIN32

/* for linux, generally it's 1024 */

static int limits = (int)sysconf(_SC_IOV_MAX);

#else

static int limits = 1024;

#endif

/* send in a time. */

if (size < limits) {

if ((ret = skt->writev(iovs, size, pnwrite)) != ERROR_SUCCESS) {

if (!srs_is_client_gracefully_close(ret)) {

srs_error("send with writev failed. ret=%d", ret);

}

return ret;

}

return ret;

}

/* send in multiple times. */

int cur_iov = 0;

while (cur_iov < size) {

int cur_count = srs_min(limits, size - cur_iov);

if ((ret = skt->writev(iovs + cur_iov, cur_count, pnwrite)) != ERROR_SUCCESS) {

if (!srs_is_client_gracefully_close(ret)) {

srs_error("send with writev failed. ret=%d", ret);

}

return ret;

}

cur_iov += cur_count;

}

return ret;

}
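The batching loop can be checked without a socket by replacing writev with a callback. `write_in_batches` is a hypothetical helper; the callback receives the offset and count of each batch and returns 0 on success, mirroring ERROR_SUCCESS.

```cpp
#include <algorithm>
#include <cassert>
#include <functional>

// Hypothetical sketch: splits `size` iovecs into batches of at most `limit`
// and hands each batch to `writer`. Stops at the first failure.
int write_in_batches(int size, int limit,
                     const std::function<int(int offset, int count)>& writer) {
    assert(limit > 0);

    int cur = 0;
    while (cur < size) {
        int count = std::min(limit, size - cur);
        int ret = writer(cur, count);
        if (ret != 0) {
            return ret;
        }
        cur += count;
    }
    return 0;
}
```

With a limit of 1024, sending 2500 iovecs results in three writer calls of 1024, 1024 and 452 entries.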

5.5.9 SrsProtocol::manual_response_flush

int SrsProtocol::manual_response_flush()

{

int ret = ERROR_SUCCESS;

if (manual_response_queue.empty()) {

return ret;

}

std::vector<SrsPacket*>::iterator it;

for (it = manual_response_queue.begin(); it != manual_response_queue.end(); ) {

SrsPacket* pkt = *it;

/* erase this packet, the send api always free it. */

it = manual_response_queue.erase(it);

/* use underlayer api to send, donot flush again. */

if ((ret = do_send_and_free_packet(pkt, 0)) != ERROR_SUCCESS) {

return ret;

}

}

return ret;

}
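One detail of the loop above is that each packet is erased from the queue before it is sent, so a packet whose send fails is no longer queued, while the packets behind it stay. A minimal sketch of that behavior, with a hypothetical `flush_queue` that uses ints in place of SrsPacket* and a callback in place of do_send_and_free_packet:

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical sketch: drains `queue` front to back. `send` returns 0 on
// success (mirroring ERROR_SUCCESS) and is assumed to take ownership of
// whatever it is given, even when it fails.
int flush_queue(std::vector<int>& queue, const std::function<int(int)>& send) {
    assert(send);

    std::vector<int>::iterator it;
    for (it = queue.begin(); it != queue.end(); ) {
        int pkt = *it;

        // Remove the entry first; erase() returns the next valid iterator.
        it = queue.erase(it);

        int ret = send(pkt);
        if (ret != 0) {
            return ret;  // the failed packet is gone, later ones remain queued
        }
    }
    return 0;
}
```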