Installing Zookeeper and Kafka on Linux

1. After installing Zookeeper, add the following to its configuration file zoo.cfg:

dataLogDir=******************

Then start Zookeeper by running: zkServer.sh start
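To confirm that Zookeeper actually came up before moving on to Kafka, one option is to send it the four-letter command `ruok` and check for the reply `imok`. The following is a minimal sketch, not part of the original setup: the class name `ZkRuok`, the host `localhost`, and the port 2181 (Zookeeper's default `clientPort`) are assumptions, and newer Zookeeper releases may require the command to be allowed via `4lw.commands.whitelist` first.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

public class ZkRuok {
    // Sends the four-letter command "ruok" and returns whatever the server
    // replies before closing the connection (a healthy server answers "imok").
    static String ruok(String host, int port, int timeoutMs) throws IOException {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            socket.setSoTimeout(timeoutMs);

            OutputStream out = socket.getOutputStream();
            out.write("ruok".getBytes(StandardCharsets.US_ASCII));
            out.flush();

            InputStream in = socket.getInputStream();
            byte[] buf = new byte[16];
            int total = 0;
            int n;
            // Read until the server closes the connection or the buffer is full.
            while (total < buf.length
                    && (n = in.read(buf, total, buf.length - total)) > 0) {
                total += n;
            }
            return new String(buf, 0, total, StandardCharsets.US_ASCII);
        }
    }

    public static void main(String[] args) {
        // Assumed values: adjust host and port to match your zoo.cfg.
        try {
            System.out.println("Zookeeper replied: " + ruok("localhost", 2181, 3000));
        } catch (IOException e) {
            System.out.println("Zookeeper not reachable: " + e.getMessage());
        }
    }
}
```

If the reply is anything other than `imok`, or the connection is refused, it is worth checking the Zookeeper log before starting Kafka.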

2. Install Kafka

After installing Kafka, start it by running: ./bin/kafka-server-start.sh ./config/server.properties

3. Producer code:

First, add the Kafka client jar as a Maven dependency:

    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>0.11.0.1</version>
    </dependency>

    import java.util.Properties;

    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.ProducerRecord;

    public class Producer {
        // Topic
        private static final String topic = "kafkaTopic1";

        public static void main(String[] args) {
            Properties props = new Properties();
            props.put("bootstrap.servers", "192.168.102.128:9092");
            props.put("acks", "0");
            props.put("retries", "0");
            props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
            props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

            // Producer instance; try-with-resources closes it on exit,
            // which flushes any records still buffered by the asynchronous send()
            try (KafkaProducer<String, String> producer = new KafkaProducer<String, String>(props)) {
                // Send messages 1 through 9
                for (int i = 1; i < 10; i++) {
                    System.out.println("--------------Producing:--------------");
                    producer.send(new ProducerRecord<String, String>(topic, "key:" + i, "value:" + i));
                    System.out.println("key:" + i + " " + "value:" + i);
                }
            }
        }
    }

4. Consumer code:

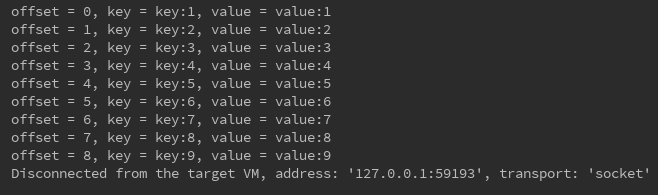

    import java.util.Arrays;
    import java.util.Properties;

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;

    public class Consumer {
        private static final String topic = "kafkaTopic1";

        public static void main(String[] args) {
            Properties props = new Properties();
            props.put("bootstrap.servers", "192.168.102.128:9092");
            props.put("group.id", "1111");
            props.put("enable.auto.commit", "true");
            props.put("auto.commit.interval.ms", "1000");
            props.put("auto.offset.reset", "earliest");
            props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

            KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(props);
            consumer.subscribe(Arrays.asList(topic));

            while (true) {
                // Wait up to 1000 ms for new records
                ConsumerRecords<String, String> records = consumer.poll(1000);
                for (ConsumerRecord<String, String> record : records) {
                    System.out.printf("offset = %d, key = %s, value = %s%n",
                            record.offset(), record.key(), record.value());
                }
            }
        }
    }

5. Run:

Run the producer code to push data into Kafka, then run the consumer code. Execution reaches the line:

ConsumerRecords<String, String> records = consumer.poll(1000);

The program hangs at this point, and the log reports:

Marking the coordinator linux-kafka:9092 (id: 2147483647 rack: null) dead for group 1111

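An online search showed that the cause was that no listener address and port had been configured in the Kafka configuration file. Add the following to that file (the server.properties passed to the start command):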

listeners=PLAINTEXT://IP:9092
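The log line names the coordinator as `linux-kafka:9092`, while the clients were pointed at `192.168.102.128:9092`. One plausible reading is that the broker was advertising its hostname, which the client machine could not resolve or reach. The sketch below is an illustration under that assumption rather than an official diagnostic: it parses a `listeners` value, checks whether the host resolves from the client machine, and tries a plain TCP connection to the port. The class name `ListenerCheck` and its helper methods are hypothetical.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.URI;
import java.net.UnknownHostException;

public class ListenerCheck {
    // Parses a listener such as "PLAINTEXT://192.168.102.128:9092"
    // into an (unresolved) host and port pair.
    static InetSocketAddress parseListener(String listener) {
        URI uri = URI.create(listener);
        if (uri.getHost() == null || uri.getPort() == -1) {
            throw new IllegalArgumentException("Expected PROTOCOL://host:port, got: " + listener);
        }
        return InetSocketAddress.createUnresolved(uri.getHost(), uri.getPort());
    }

    // Returns true if this machine can resolve the host name to an address.
    static boolean resolves(String host) {
        try {
            InetAddress.getByName(host);
            return true;
        } catch (UnknownHostException e) {
            return false;
        }
    }

    // Returns true if a TCP connection to host:port succeeds within the timeout.
    static boolean accepts(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed default: the broker address used by the producer and consumer above.
        String listener = args.length > 0 ? args[0] : "PLAINTEXT://192.168.102.128:9092";
        InetSocketAddress address = parseListener(listener);
        String host = address.getHostString();
        int port = address.getPort();
        System.out.println("host resolves:  " + resolves(host));
        System.out.println("port reachable: " + accepts(host, port, 3000));
    }
}
```

Running it on the client machine with `PLAINTEXT://linux-kafka:9092` as the argument would show whether the hostname from the error message is usable there; a successful TCP connection only shows that the port is open, not that the broker is healthy.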

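After saving the file, restart Kafka and rerun the producer and consumer code. Everything now works normally, and the consumer output is as follows: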