1、XPath:from lxml import etree

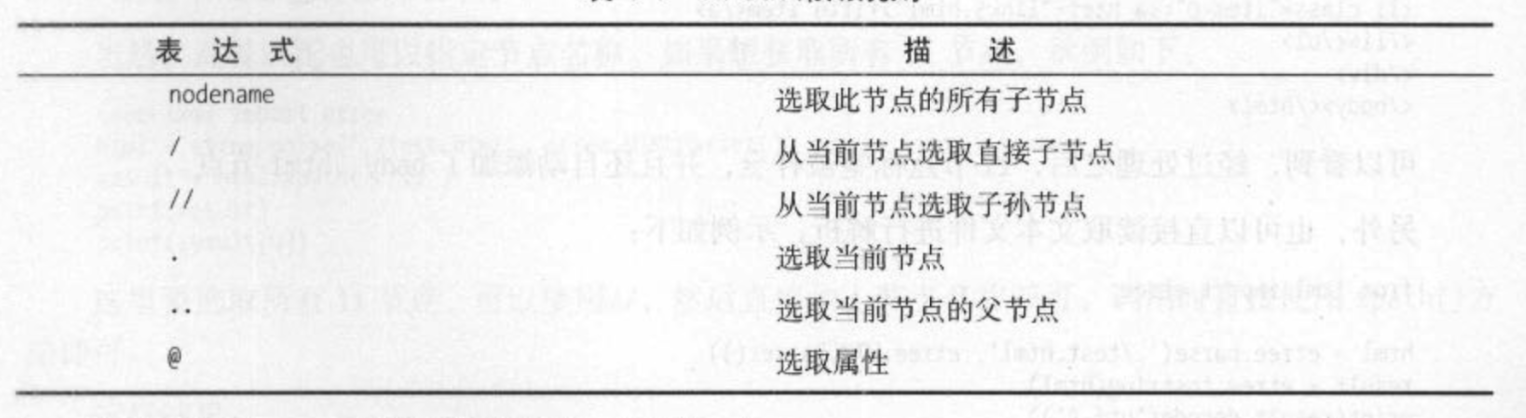

选取节点(所有节点:*)

属性匹配

html.xpath('节点名称[@属性名称="属性"]')

html.xpath('节点名称[contains(@属性名称, "属性")]') 多属性匹配选一匹配

如:<p class="a b">....</p>

html.xpath('//p[contains(@class, "a")]')

html.xpath('节点名称[contains(@属性名称, "属性") and @属性名称="属性"]') 多属性匹配两个都匹配

如:<p class="a" name="b">....</p>

html.xpath('//p[contains(@class, "a") and @name="b"]')

文本获取:/text()

html.xpath('节点名称[@属性名称="属性"]/text()')

属性获取:@属性名称

html.xpath('节点名称/@属性名称')

2、Beautiful Soup:form bs4 import BeautifulSoup

3、PyQuery:from pyquery import PyQuery as pq

属性获取:.attr(属性名称)或者.attr.属性名称

文本获取:.text()

from lxml import etree import re import requests def get_html(url): headers = { 'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36'} try: r = requests.get(url, headers=headers) r.raise_for_status() return r.text except: print('status_code is not 200') return None def parse_str(str): str = re.match('s+主演:(.*?)s+', str) return str.group(1) def parse_time(str): txt = re.search('d{4}(-d{2}-d{2})*', str) return txt.group() def parse_html(html, info_list): html = etree.HTML(html) names = html.xpath('//p[@class="name"]/a/text()') ranks = html.xpath('//i[contains(@class, "board-index")]/text()') stars = list(map(parse_str, html.xpath('//p[@class="star"]/text()'))) times = list(map(parse_time, html.xpath('//p[@class="releasetime"]/text()'))) integers = html.xpath('//i[@class="integer"]/text()') fractions = html.xpath('//i[@class="fraction"]/text()') for rank, name, actor, ts, integer, fraction in zip(ranks, names, stars, times, integers, fractions): info_list.append({ 'rank': rank, 'name': name, 'actor': actor, 'time': ts, 'score': integer + fraction }) if __name__ == '__main__': url = 'http://maoyan.com/board/4' info_list = [] for i in range(10): path = url + '?offset=' + str(i*10) print(path) html = get_html(path) if html: parse_html(html, info_list) for info in info_list: print(info)

from pyquery import PyQuery import re import requests def get_html(url): headers = { 'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.102 Safari/537.36'} try: r = requests.get(url, headers=headers) r.raise_for_status() return r.text except: print('status_code is not 200') return None def parse_time(str): txt = re.search('d{4}(-d{2}-d{2})*', str) return txt.group() def parse_html(html, info_list): doc = PyQuery(html) dd_nodes = doc('dl.board-wrapper') ranks = dd_nodes('.board-index').items() names = dd_nodes('.name').items() actors = dd_nodes('.star').items() times = dd_nodes('.releasetime').items() integers = dd_nodes('.integer').items() fractions = dd_nodes('.fraction').items() for rank, name, actor, ts, integer, fraction in zip(ranks, names, actors, times, integers, fractions): info_list.append({ 'rank': rank.text(), 'name': name.text(), 'actor': actor.text().replace('主演:', ''), 'time': parse_time(ts.text()), 'score': integer.text() + fraction.text() }) if __name__ == '__main__': url = 'http://maoyan.com/board/4' info_list = [] for i in range(10): path = url + '?offset=' + str(i*10) print(path) html = get_html(path) if html: parse_html(html, info_list) for info in info_list: print(info)