Meta-Learning: MAML, Reptile, and ANIL

Author: 凯鲁嘎吉 - cnblogs http://www.cnblogs.com/kailugaji/

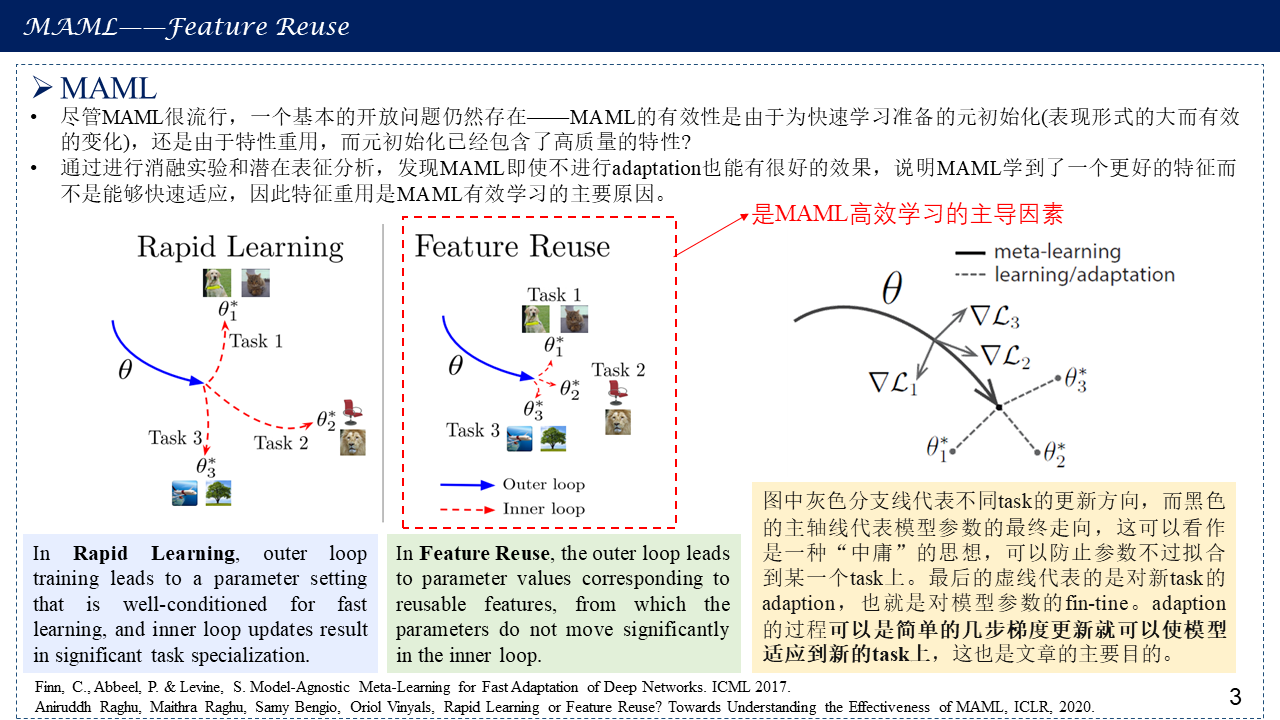

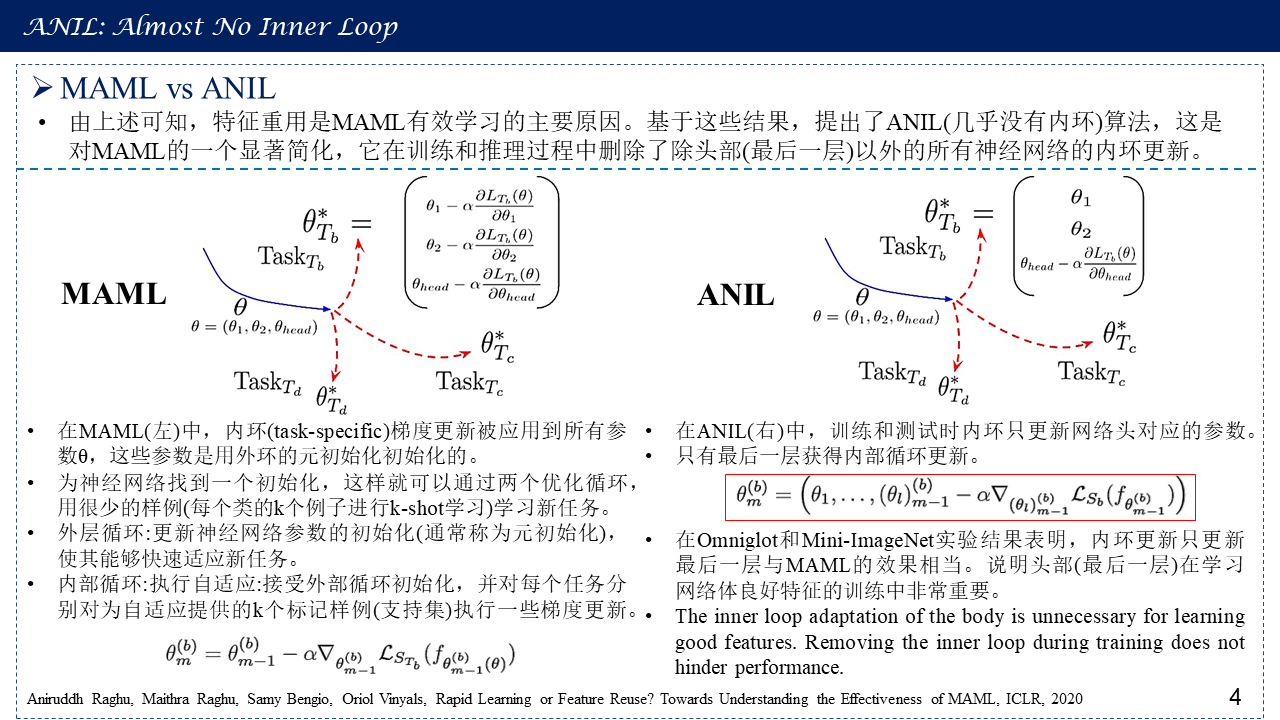

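My previous post, Meta-Learning: From MAML to MAML++, covered the basics. This post goes a step further and examines what MAML actually learns. Why does MAML learn so efficiently? Is it rapid learning, where MAML learns a powerful initialization that a few inner-loop steps adapt quickly? Or is it feature reuse, where the initialization is already close to the final adapted parameters? The evidence points to feature reuse. This leads to ANIL (Almost No Inner Loop), a variant that drops the inner-loop updates for every layer except the task-specific head. We then read Reptile (On First-Order Meta-Learning Algorithms), another meta-learning method, and compare MAML, Reptile, and model pre-training.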

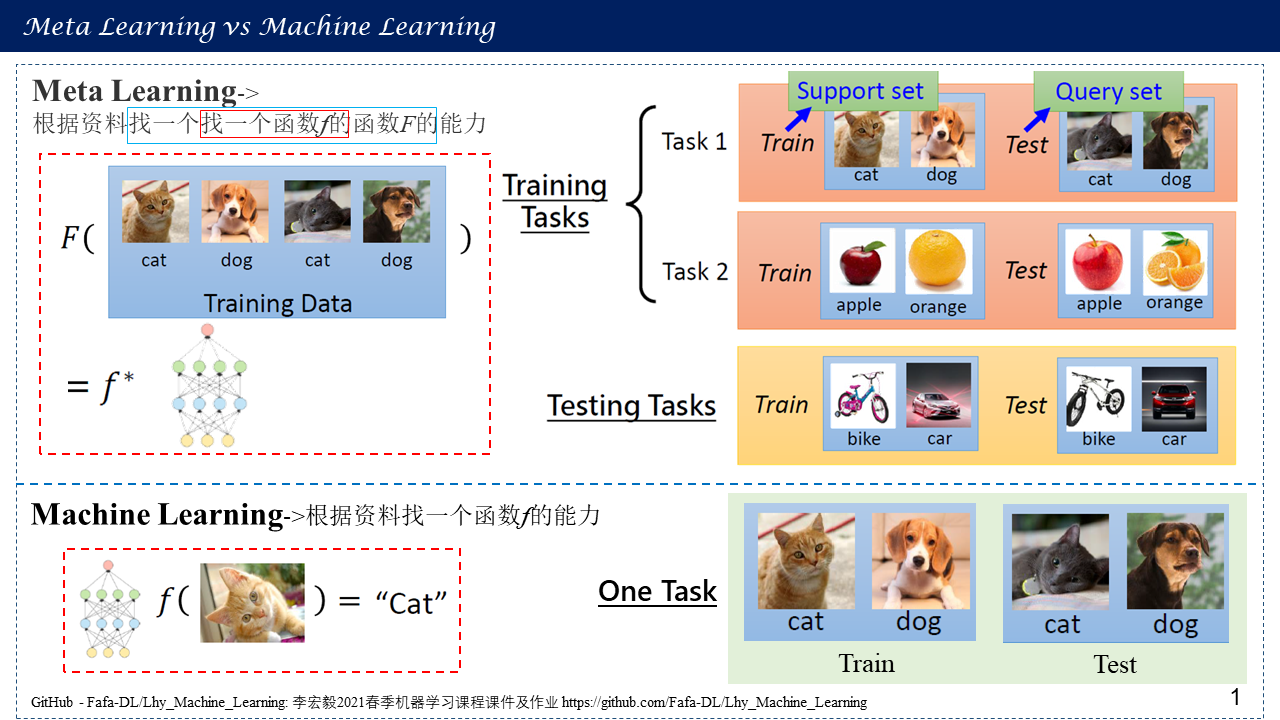

1. Meta Learning vs Machine Learning

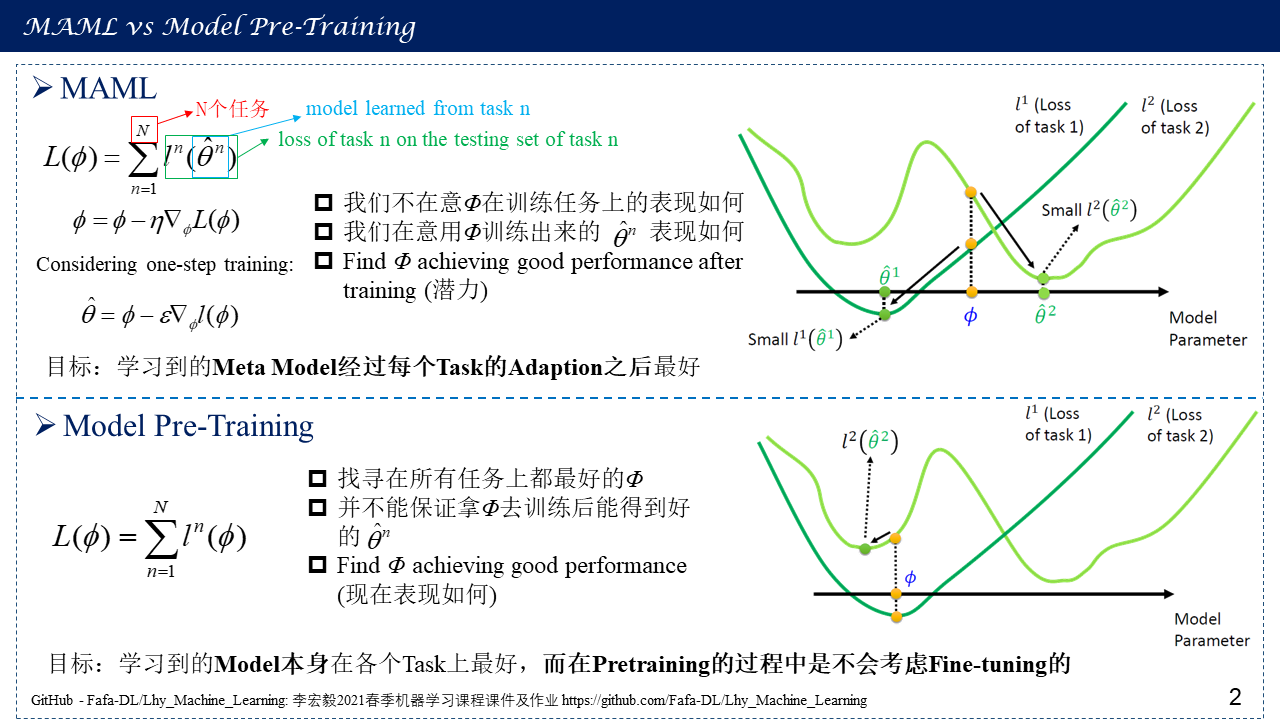

2. MAML vs Model Pre-Training
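The two methods differ in which loss they minimize. The sketch below makes this concrete on a hypothetical toy problem that does not come from the slides. Each task i has a scalar quadratic loss L_i(θ) = ½·a_i·(θ − c_i)², where the curvatures a_i and optima c_i are made-up values. Pre-training minimizes Σ_i L_i(θ), which is the loss at the shared parameters themselves. MAML minimizes Σ_i L_i(θ_i′), which is the loss after one inner gradient step θ_i′ = θ − α∇L_i(θ). The names `TASKS`, `ALPHA`, `pretrain_grad` and `maml_grad` are illustrative.

```python
# Hypothetical toy tasks: L_i(theta) = 0.5 * a_i * (theta - c_i)**2, stored as (a_i, c_i).
TASKS = [(1.0, -2.0), (4.0, 1.0), (0.5, 3.0)]
ALPHA = 0.2  # inner-loop learning rate (assumed)

def task_grad(theta, a, c):
    """Gradient of a single task loss L_i at theta."""
    return a * (theta - c)

def pretrain_grad(theta):
    """Pre-training: every task loss is evaluated at theta itself."""
    return sum(task_grad(theta, a, c) for a, c in TASKS)

def maml_grad(theta):
    """MAML: every task loss is evaluated after one inner step.
    Exact gradient via the chain rule, with d(theta')/d(theta) = 1 - ALPHA * a_i."""
    total = 0.0
    for a, c in TASKS:
        adapted = theta - ALPHA * task_grad(theta, a, c)  # theta_i'
        total += task_grad(adapted, a, c) * (1.0 - ALPHA * a)
    return total

def minimize(grad_fn, theta=0.0, lr=0.05, steps=5000):
    """Plain gradient descent on the outer objective."""
    for _ in range(steps):
        theta -= lr * grad_fn(theta)
    return theta

theta_pre = minimize(pretrain_grad)
theta_maml = minimize(maml_grad)
print(f"pre-training init: {theta_pre:.4f}")
print(f"MAML init:         {theta_maml:.4f}")
```

With these values, pre-training settles near 0.636 and MAML settles near 0.079. In this toy, MAML effectively reweights task i by a_i(1 − α·a_i)². As a result, the high-curvature task (a_i = 4), whose error one inner step already shrinks by 80%, counts for less. MAML judges an initialization by what it becomes after adaptation, not by how good it is right now.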

3. MAML: Feature Reuse

4. MAML vs ANIL

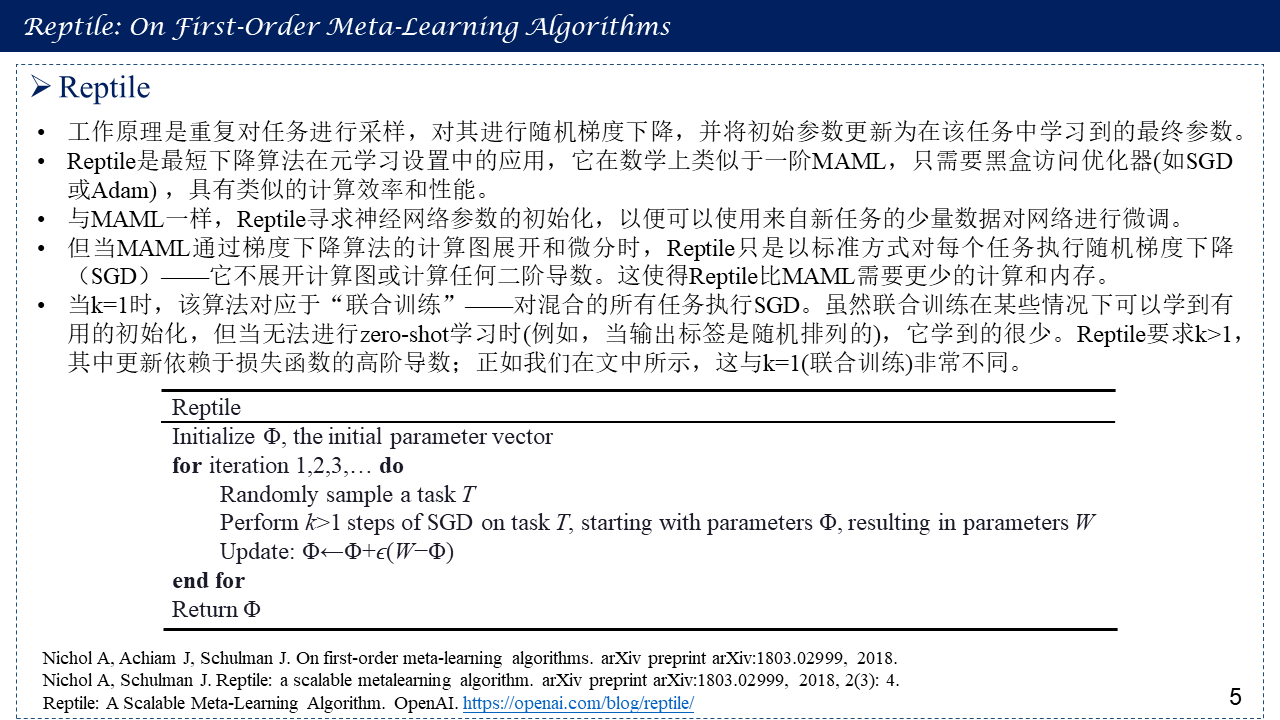
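MAML and ANIL differ in the inner loop. The minimal sketch below assumes a hypothetical two-parameter linear network, ŷ = head·(body·x), trained with squared error. MAML's inner loop adapts both the body (the feature extractor) and the head. ANIL's inner loop adapts only the head and reuses the body's features unchanged. The sketch omits the outer loop, which in ANIL still updates all parameters. The functions `grads` and `inner_loop`, the support set and the initial values are all illustrative.

```python
# Hypothetical toy network: y_hat = head * (body * x).
# "body" stands in for the feature extractor, "head" for the final layer.

def loss(body, head, data):
    """Mean squared error (with a 1/2 factor) over (x, y) pairs."""
    return sum(0.5 * (head * body * x - y) ** 2 for x, y in data) / len(data)

def grads(body, head, data):
    """Analytic gradients of `loss` with respect to body and head."""
    g_body = sum((head * body * x - y) * head * x for x, y in data) / len(data)
    g_head = sum((head * body * x - y) * body * x for x, y in data) / len(data)
    return g_body, g_head

def inner_loop(body, head, support, alpha, steps, adapt_body):
    """Task adaptation: MAML uses adapt_body=True, ANIL uses adapt_body=False."""
    for _ in range(steps):
        g_body, g_head = grads(body, head, support)  # both computed before updating
        head -= alpha * g_head
        if adapt_body:
            body -= alpha * g_body  # MAML also changes the features
        # ANIL: body stays frozen here, so the features are reused as-is
    return body, head

support = [(1.0, 3.0), (2.0, 6.0)]  # made-up task: y = 3x
init = (1.0, 1.0)                   # assumed meta-learned (body, head)

maml_body, maml_head = inner_loop(*init, support, alpha=0.1, steps=3, adapt_body=True)
anil_body, anil_head = inner_loop(*init, support, alpha=0.1, steps=3, adapt_body=False)

print("initial loss:", loss(*init, support))
print("MAML adapted:", maml_body, maml_head, loss(maml_body, maml_head, support))
print("ANIL adapted:", anil_body, anil_head, loss(anil_body, anil_head, support))
```

In a real few-shot classifier, the body would be the convolutional layers and the head the final linear classifier. The ANIL paper [3] reports that head-only adaptation performs comparably to full MAML, which is the feature-reuse argument expressed in code.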

5. Reptile: On First-Order Meta-Learning Algorithms

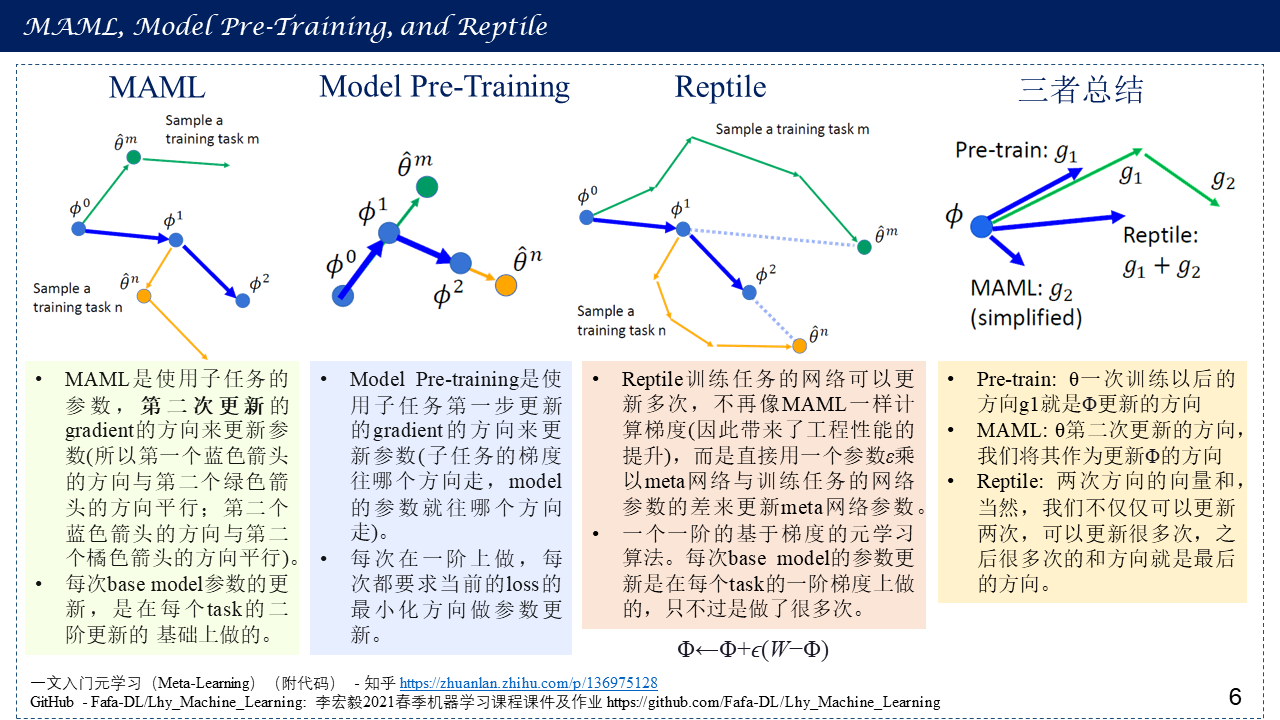
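Reptile's serial update is simple enough to sketch directly. It proceeds in three steps:

1. Sample a task.
2. Run k steps of SGD on that task, starting from θ, to get φ.
3. Move θ a fraction ε of the way toward φ: θ ← θ + ε(φ − θ).

The sketch below applies this to a hypothetical toy of scalar quadratic task losses L_i(θ) = ½·a_i·(θ − c_i)². The names `TASKS` and `adapt` and the hyperparameter values are illustrative, not taken from the paper.

```python
import random

# Hypothetical toy tasks: L_i(theta) = 0.5 * a_i * (theta - c_i)**2, stored as (a_i, c_i).
TASKS = [(1.0, -2.0), (4.0, 1.0), (0.5, 3.0)]

def task_grad(theta, a, c):
    """Gradient of a single task loss at theta."""
    return a * (theta - c)

def adapt(theta, a, c, k, alpha):
    """Inner loop: k plain SGD steps on one task, starting from theta."""
    phi = theta
    for _ in range(k):
        phi -= alpha * task_grad(phi, a, c)
    return phi

def reptile(theta=0.0, k=5, alpha=0.2, epsilon=0.1, iterations=2000, seed=0):
    """Serial Reptile: needs no second derivatives, only the difference phi - theta."""
    rng = random.Random(seed)
    for _ in range(iterations):
        a, c = rng.choice(TASKS)                 # sample a task
        phi = adapt(theta, a, c, k, alpha)       # adapt to it
        theta += epsilon * (phi - theta)         # move the initialization toward phi
    return theta

theta_reptile = reptile()
print(f"Reptile init: {theta_reptile:.4f}")
```

With k = 1, φ − θ = −α∇L_τ(θ), so Reptile reduces to SGD on the expected task loss. This is ordinary pre-training (joint training), as the Reptile paper [4] points out. With k > 1, the update also picks up gradients evaluated at already-adapted parameters. These extra gradients are what make it behave like first-order MAML.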

6. MAML, Model Pre-Training, and Reptile
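One way to line up the three methods is by the direction in which each moves the initialization for a single task. The sketch below uses a hypothetical scalar task loss L(θ) = ½·a·(θ − c)², with made-up values for a, c and α. It runs two inner SGD steps and records the gradient g1 at θ and the gradient g2 at the adapted θ′. Each method then uses these gradients differently:

- Pre-training follows g1.
- First-order MAML follows g2.
- Exact MAML multiplies g2 by the chain-rule factor dθ′/dθ = 1 − α·a.
- Reptile with k = 2 follows (θ − φ)/α = g1 + g2, a mix of the pre-training and MAML directions.

```python
# Hypothetical single task: L(theta) = 0.5 * a * (theta - c)**2.
a, c = 4.0, 1.0
alpha = 0.2   # inner-loop learning rate (assumed)
theta = 0.0   # current initialization (assumed)

def grad(t):
    """Gradient of the task loss at t."""
    return a * (t - c)

# Two inner SGD steps, recording the gradient at each point.
g1 = grad(theta)                # gradient at theta
theta_1 = theta - alpha * g1    # adapted parameters theta'
g2 = grad(theta_1)              # gradient at theta'
phi = theta_1 - alpha * g2      # parameters after k = 2 steps

pretrain_dir = g1                       # pre-training: gradient at theta
fomaml_dir = g2                         # first-order MAML: gradient at theta'
maml_dir = (1.0 - alpha * a) * g2       # exact MAML, one inner step: chain rule
reptile_dir = (theta - phi) / alpha     # Reptile, k = 2: equals g1 + g2

for name, d in [("pre-training", pretrain_dir), ("FOMAML", fomaml_dir),
                ("MAML", maml_dir), ("Reptile", reptile_dir)]:
    print(f"{name:>12}: {d:+.3f}")
```

Each method steps against its direction (θ ← θ − β·direction). Here all four values are negative, so every method pushes θ toward the task optimum c = 1, but by different amounts.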

7. References

[1] GitHub - Fafa-DL/Lhy_Machine_Learning: Slides and assignments for Hung-yi Lee's (李宏毅) Spring 2021 Machine Learning course. https://github.com/Fafa-DL/Lhy_Machine_Learning

[2] Finn, C., Abbeel, P. & Levine, S. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. ICML 2017.

[3] Raghu, A., Raghu, M., Bengio, S. & Vinyals, O. Rapid Learning or Feature Reuse? Towards Understanding the Effectiveness of MAML. ICLR 2020.

[4] Nichol, A., Achiam, J. & Schulman, J. On First-Order Meta-Learning Algorithms. arXiv preprint arXiv:1803.02999, 2018.

[5] Nichol, A. & Schulman, J. Reptile: A Scalable Metalearning Algorithm. arXiv preprint arXiv:1803.02999, 2018, 2(3): 4.

[6] Reptile: A Scalable Meta-Learning Algorithm. OpenAI. https://openai.com/blog/reptile/

[7] Getting Started with Meta-Learning in One Article (with Code) - Zhihu. https://zhuanlan.zhihu.com/p/136975128