Advice for Applying Machine Learning

Applying machine learning in practice is not always straightforward. In this module, we share best practices for applying machine learning in practice, and discuss the best ways to evaluate performance of the learned models.

7 videos, 7 readings

Video: Deciding What to Try Next

By now you have seen a lot of different learning algorithms.

0:03

And if you've been following along with these videos, you should consider yourself an expert on many state-of-the-art machine learning techniques. But even among people who know a certain learning algorithm, there's often a huge difference between someone who really knows how to powerfully and effectively apply that algorithm, and someone who's less familiar with some of the material that I'm about to teach, who doesn't really understand how to apply these algorithms and can end up wasting a lot of time trying things that don't really make sense.

0:34

What I would like to do is make sure that, if you are developing machine learning systems, you know how to choose one of the most promising avenues to spend your time pursuing. In this and the next few videos I'm going to give a number of practical suggestions, advice, and guidelines on how to do that. Concretely, what we'll focus on is this problem: suppose you are developing a machine learning system, or trying to improve the performance of one. How do you go about deciding what are the most promising avenues to try next?

1:09

To explain this, let's continue using our example of learning to predict housing prices. Let's say you've implemented regularized linear regression, thus minimizing the cost function J. Now suppose that after you take your learned parameters and test your hypothesis on a new set of houses, you find that it is making huge errors in its predictions of the housing prices.

1:33

The question is: what should you try next in order to improve the learning algorithm?

1:39

There are many things that one can think of that could improve the performance of the learning algorithm.

1:44

One thing you could try is to get more training examples. Concretely, you can imagine setting up phone surveys or going door to door to try to get more data on how much different houses sell for.

1:57

And the sad thing is I've seen a lot of people spend a lot of time collecting more training examples, thinking oh, if we have twice as much or ten times as much training data, that is certainly going to help, right? But sometimes getting more training data doesn't actually help and in the next few videos we will see why, and we will see how you can avoid spending a lot of time collecting more training data in settings where it is just not going to help.

2:22

Another thing you might try is a smaller set of features. So if you have some set of features such as x1, x2, x3 and so on, maybe a large number of features, you might want to spend time carefully selecting some small subset of them to prevent overfitting.

2:38

Or maybe you need to get additional features. Maybe the current set of features isn't informative enough, and you want to collect more data in the sense of getting more features.

2:48

And once again, this is the sort of project that can scale up to a huge project. You can imagine running phone surveys to find out more about the houses, or extra land surveys to find out more about the pieces of land, and so on. And once again, it would be nice to know in advance whether this is going to help before we spend a lot of time doing something like this. We can also try adding polynomial features, things like x1 squared, x2 squared, and product features like x1 times x2. We could spend quite a lot of time thinking about that. And we can also try other things, like decreasing lambda, the regularization parameter, or increasing lambda.

3:23

Given a menu of options like these, some of which can easily scale up to six-month or longer projects, the most common method that people use to pick one of them is, unfortunately, to go by gut feeling. What many people will do is sort of randomly pick one of these options and maybe say, "Oh, let's go and get more training data," and easily spend six months collecting more training data. Or maybe someone else would say, "Well, let's go collect a lot more features on these houses in our data set." And sadly, I have many times seen people spend literally six months pursuing one of these avenues that they picked more or less at random, only to discover six months later that it really wasn't a promising avenue to pursue.

4:07

Fortunately, there is a pretty simple technique that can let you very quickly rule out half of the things on this list as being potentially promising things to pursue, and potentially save you a lot of time pursuing something that's just not going to work.

4:29

In the next two videos after this, I'm going to first talk about how to evaluate learning algorithms.

4:36

And in the next few videos after that, I'm going to talk about these techniques,

4:42

which are called the machine learning diagnostics.

4:46

A diagnostic is a test you can run to get insight into what is or isn't working with an algorithm, and which will often give you insight as to what are promising things to try to improve a learning algorithm's performance. We'll talk about specific diagnostics later in this video sequence. But I should mention in advance that diagnostics can sometimes take quite a lot of time to implement and understand. Doing so can still be a very good use of your time when you are developing learning algorithms, because diagnostics can often save you from spending many months pursuing an avenue that you could have found out much earlier was just not going to be fruitful.

5:32

So in the next few videos, I'm going to first talk about how to evaluate your learning algorithms, and after that I'm going to talk about some of these diagnostics, which will hopefully let you much more effectively select the most useful things to try next if your goal is to improve the machine learning system.

Video: Evaluating a Hypothesis

In this video, I would like to talk about how to evaluate a hypothesis that has been learned by your algorithm. In later videos, we will build on this to talk about how to prevent the problems of overfitting and underfitting as well. When we fit the parameters of our learning algorithm, we think about choosing the parameters to minimize the training error. One might think that getting a really low value of training error is a good thing, but we have already seen that just because a hypothesis has low training error, that doesn't mean it is necessarily a good hypothesis. We've already seen the example of how a hypothesis can overfit, and therefore fail to generalize to new examples not in the training set. So how do you tell if the hypothesis might be overfitting? In this simple example, we could plot the hypothesis h of x and just see what was going on. But in general, for problems with more than one feature, and especially for problems with a large number of features like these, it becomes hard or even impossible to plot what the hypothesis looks like, and so we need some other way to evaluate our hypothesis. The standard way to evaluate a learned hypothesis is as follows. Suppose we have a data set like this. Here I have just shown 10 training examples, but of course usually we may have dozens or hundreds or maybe thousands of training examples. In order to make sure we can evaluate our hypothesis, what we are going to do is split the data we have into two portions. The first portion is going to be our usual training set

1:42

and the second portion is going to be our test set. A pretty typical split of all the data we have into a training set and a test set might be around, say, a 70%, 30% split, with more of the data assigned to the training set and relatively less to the test set. So now, if we have some data set, we might assign, say, 70% of the data to be our training set, where here "m" is as usual our number of training examples, and the remainder of our data might then be assigned to become our test set. Here, I'm going to use the notation m subscript test to denote the number of test examples. In general, this subscript test is going to denote examples that come from the test set, so that x1 subscript test, y1 subscript test is my first test example, which I guess in this example might be this example over here. Finally, one last detail: here I've drawn this as though the first 70% goes to the training set and the last 30% to the test set. If there is any sort of ordering to the data, it would be better to send a random 70% of your data to the training set and a random 30% of your data to the test set. So if your data were already randomly sorted, you could just take the first 70% and the last 30%. But if your data were not randomly ordered, it would be better to randomly shuffle, or randomly reorder, the examples in your data set before sending the first 70% to the training set and the last 30% to the test set. Here then is a fairly typical procedure for how you would train and test a learning algorithm such as linear regression. First, you learn the parameters theta from the training set, so you minimize the usual training error objective J of theta, where J of theta here is defined using that 70% of all the data you have, that is, only the training data. Then you would compute the test error, and I am going to denote the test error as J subscript test.
So what you do is take the parameter theta that you have learned from the training set, plug it in here, and compute your test set error, which I am going to write as follows. This is basically the average squared error as measured on your test set. It's pretty much what you'd expect: we run every test example through your hypothesis with parameter theta and just measure the squared error that your hypothesis has on your m subscript test test examples. Of course, this is the definition of the test set error if we are using linear regression and the squared error metric. How about if we were doing a classification problem and, say, using logistic regression instead? In that case, the procedure for training and testing logistic regression is pretty similar. First we learn the parameters from the training data, that first 70% of the data. Then we compute the test error as follows. It's the same objective function that we always use for logistic regression, except that now it is defined using our m subscript test test examples. While this definition of the test set error J subscript test is perfectly reasonable, sometimes there is an alternative test set metric that might be easier to interpret, and that's the misclassification error. It's also called the zero-one misclassification error, with zero-one denoting that you either get an example right or you get an example wrong. Here's what I mean. Let me define the error of a prediction, that is h of x, given the label y, as equal to one if my hypothesis outputs a value greater than or equal to 0.5 and y is equal to zero, or if my hypothesis outputs a value less than 0.5 and y is equal to one. Both of these cases basically correspond to your hypothesis mislabeling the example, assuming you threshold at 0.5. So either it thought the label was more likely to be 1, but it was actually 0, or your hypothesis thought the label was more likely to be 0, but it was actually 1.
Otherwise, we define this error function to be zero, that is, if your hypothesis classified the example y correctly. We could then define the test error, using the misclassification error metric, to be one over m subscript test times the sum from i equals one to m subscript test of the error of h of x(i) test comma y(i) test. That's just my way of writing out that this is exactly the fraction of the examples in my test set that my hypothesis has mislabeled. So that's the definition of the test set error using the misclassification error, or the 0/1 misclassification metric. So that's the standard technique for evaluating how good a learned hypothesis is. In the next video, we will adapt these ideas to help us do things like choose what features, such as the degree of polynomial, to use with the learning algorithm, or choose the regularization parameter for a learning algorithm.
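The transcript refers twice to a test error "written as follows" without reproducing the slide. A reconstruction in the course's usual notation, offered as an assumption since the slides are not part of this text, is the squared-error test cost for linear regression followed by the logistic regression test cost:

\[
J_{test}(\theta) = \frac{1}{2 m_{test}} \sum_{i=1}^{m_{test}} \left( h_\theta(x^{(i)}_{test}) - y^{(i)}_{test} \right)^2
\]

\[
J_{test}(\theta) = -\frac{1}{m_{test}} \sum_{i=1}^{m_{test}} \left[ y^{(i)}_{test} \log h_\theta(x^{(i)}_{test}) + \left(1 - y^{(i)}_{test}\right) \log\left(1 - h_\theta(x^{(i)}_{test})\right) \right]
\]

In both cases theta is learned on the training set only, and the sums run over the test examples only.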

Reading: Evaluating a Hypothesis

Evaluating a Hypothesis

Once we have done some troubleshooting for errors in our predictions by:

- Getting more training examples

- Trying smaller sets of features

- Trying additional features

- Trying polynomial features

- Increasing or decreasing \(\lambda\)

We can move on to evaluate our new hypothesis.

A hypothesis may have a low error for the training examples but still be inaccurate (because of overfitting). Thus, to evaluate a hypothesis, given a dataset of training examples, we can split up the data into two sets: a training set and a test set. Typically, the training set consists of 70% of your data and the test set is the remaining 30%.
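The split described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the course's own code: the helper name `shuffle_split`, the fixed seed, and the toy data are assumptions made for the example. It shuffles first, since the data may have some ordering, and then sends the first 70% to the training set.

```python
import numpy as np

def shuffle_split(X, y, train_fraction=0.7, seed=0):
    """Shuffle the examples, then send the first `train_fraction` of them
    to the training set and the rest to the test set.
    (Hypothetical helper written for this illustration.)"""
    X = np.asarray(X)
    y = np.asarray(y)
    m = X.shape[0]
    rng = np.random.default_rng(seed)
    order = rng.permutation(m)                 # random reordering of row indices
    m_train = int(round(train_fraction * m))
    train_idx, test_idx = order[:m_train], order[m_train:]
    return X[train_idx], y[train_idx], X[test_idx], y[test_idx]

# Ten toy examples (made-up data).
X = np.arange(10, dtype=float).reshape(-1, 1)
y = 2.0 * X[:, 0] + 1.0
X_train, y_train, X_test, y_test = shuffle_split(X, y)
print(X_train.shape[0], X_test.shape[0])       # 7 3
```

Shuffling indices rather than the arrays themselves keeps each x paired with its own y.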

The new procedure using these two sets is then:

- Learn \(\Theta\) and minimize \(J_{train}(\Theta)\) using the training set

- Compute the test set error \(J_{test}(\Theta)\)

The test set error

- For linear regression: \(J_{test}(\Theta) = \dfrac{1}{2m_{test}} \sum_{i=1}^{m_{test}}(h_\Theta(x^{(i)}_{test}) - y^{(i)}_{test})^2\)

- For classification ~ Misclassification error (aka 0/1 misclassification error):

\(err(h_\Theta(x), y) = 1\) if \(h_\Theta(x) \geq 0.5\) and \(y = 0\), or if \(h_\Theta(x) < 0.5\) and \(y = 1\); otherwise \(err(h_\Theta(x), y) = 0\).

This gives us a binary 0 or 1 error result based on a misclassification. The average test error for the test set is:

\(\text{Test Error} = \dfrac{1}{m_{test}} \sum^{m_{test}}_{i=1} err(h_\Theta(x^{(i)}_{test}), y^{(i)}_{test})\)

This gives us the proportion of the test data that was misclassified.
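Both test errors can be computed directly from their definitions. The sketch below is illustrative only: the function names, the toy data, and the parameter values are assumptions, and the first column of each design matrix is taken to be the intercept term.

```python
import numpy as np

def linear_test_error(theta, X_test, y_test):
    """J_test for linear regression: (1 / (2 m_test)) * sum of squared errors."""
    m_test = X_test.shape[0]
    residuals = X_test @ theta - y_test
    return float(residuals @ residuals) / (2 * m_test)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def misclassification_error(theta, X_test, y_test, threshold=0.5):
    """Fraction of test examples whose thresholded prediction differs from the label."""
    predictions = (sigmoid(X_test @ theta) >= threshold).astype(int)
    return float(np.mean(predictions != y_test))

# Toy test set (made-up numbers); column 0 is the intercept x_0 = 1.
X_test = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]])

# Regression: predictions are 1, 3, 5, 7, so only the last target is off (by 2).
theta_reg = np.array([1.0, 2.0])
y_reg = np.array([1.0, 3.0, 5.0, 9.0])
print(linear_test_error(theta_reg, X_test, y_reg))        # 0.5

# Classification: thresholded predictions are 0, 0, 1, 1, so one label is missed.
theta_clf = np.array([-3.0, 2.0])
y_clf = np.array([0, 1, 1, 1])
print(misclassification_error(theta_clf, X_test, y_clf))  # 0.25
```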

Video: Model Selection and Train/Validation/Test Sets

Suppose you'd like to decide what degree of polynomial to fit to a data set, that is, what features to include in your learning algorithm. Or suppose you'd like to choose the regularization parameter lambda for a learning algorithm. How do you do that? These are called model selection problems. In our discussion of how to do this, we'll talk about not just how to split your data into train and test sets, but how to split your data into what are called the train, validation, and test sets. We'll see in this video just what these things are, and how to use them to do model selection. We've already seen a lot of times the problem of overfitting, in which just because a learning algorithm fits a training set well, that doesn't mean it's a good hypothesis. More generally, this is why the training set error is not a good predictor of how well the hypothesis will do on new examples. Concretely, if you fit some set of parameters, theta0, theta1, theta2, and so on, to your training set, then the fact that your hypothesis does well on the training set doesn't mean much in terms of predicting how well your hypothesis will generalize to new examples not seen in the training set. A more general principle is that once your parameters have been fit to some set of data, maybe the training set, maybe something else, then the error of your hypothesis as measured on that same data set, such as the training error, is unlikely to be a good estimate of your actual generalization error, that is, of how well the hypothesis will generalize to new examples. Now let's consider the model selection problem. Let's say you're trying to choose what degree of polynomial to fit to the data. Should you choose a linear function, a quadratic function, a cubic function, all the way up to a 10th-order polynomial?

1:51

So it's as if there's one extra parameter in this algorithm, which I'm going to denote d, which is what degree of polynomial you want to pick. So in addition to the theta parameters, it's as if there's one more parameter, d, that you're trying to determine using your data set. The first option is d equals one, if you fit a linear function. We can choose d equals two, d equals three, all the way up to d equals 10. So we'd like to fit this extra sort of parameter, which I'm denoting by d. Concretely, let's say that you want to choose a model, that is, choose a degree of polynomial, choose one of these 10 models, fit that model, and also get some estimate of how well your fitted hypothesis will generalize to new examples. Here's one thing you could do. You could first take your first model and minimize the training error, and this would give you some parameter vector theta. You could then take your second model, the quadratic function, and fit that to your training set, and this will give you some other parameter vector theta. In order to distinguish between these different parameter vectors, I'm going to use a superscript one, superscript two, and so on, where theta superscript one just means the parameters I get by fitting this linear model to my training data, theta superscript two just means the parameters I get by fitting this quadratic function to my training data, and so on. By fitting a cubic model I get theta superscript three, up to, say, theta superscript 10. One thing we could do is take these parameters and look at the test error. So I can compute on my test set J test of theta one, J test of theta two, J test of theta three, and so on.

3:53

So I'm going to take each of my hypotheses with the corresponding parameters and just measure its performance on the test set. Now, one thing I could do, in order to select one of these models, is see which model has the lowest test set error. Let's just say for this example that I ended up choosing the fifth-order polynomial. This seems reasonable so far. But now let's say I want to take my fifth hypothesis, this fifth-order model, and ask how well this model generalizes.

4:27

One thing I could do is look at how well my fifth-order polynomial hypothesis had done on my test set. But the problem is that this will not be a fair estimate of how well my hypothesis generalizes. The reason is that we've fit this extra parameter d, the degree of polynomial, and we fit that parameter d using the test set. Namely, we chose the value of d that gave us the best possible performance on the test set. And so the performance of my parameter vector theta5 on the test set is likely to be an overly optimistic estimate of generalization error. Because I fit this parameter d to my test set, it is no longer fair to evaluate my hypothesis on this test set: I've chosen the degree d of the polynomial using the test set, and so my hypothesis is likely to do better on this test set than it would on new examples that it hasn't seen before, which is what I really care about. So just to reiterate, on the previous slide we saw that if we fit some set of parameters, say theta0, theta1, and so on, to some training set, then the performance of the fitted model on the training set is not predictive of how well the hypothesis will generalize to new examples. This is because these parameters were fit to the training set, so they're likely to do well on the training set even if they don't do well on other examples. In the procedure I just described on this slide, we did the same thing. Specifically, we fit this parameter d to the test set. And by having fit the parameter to the test set, the performance of the hypothesis on that test set may not be a fair estimate of how well the hypothesis is likely to do on examples we haven't seen before. To address this problem in a model selection setting, if we want to evaluate a hypothesis, this is what we usually do instead.
Given the data set, instead of just splitting it into a training set and a test set, what we're going to do is split it into three pieces. The first piece is going to be called the training set, as usual.

6:50

So let me call this first part the training set.

6:54

And the second piece of this data I'm going to call the cross validation set, and I'll abbreviate cross validation as CV. Sometimes it's also called the validation set instead of the cross validation set. And then the last part I'm going to call the usual test set. A pretty typical ratio at which to split these things is to send 60% of your data to your training set, maybe 20% to your cross validation set, and 20% to your test set. These numbers can vary a little bit, but this sort of ratio would be pretty typical. And so our training set will now be only maybe 60% of the data, and our cross validation set, or our validation set, will have some number of examples. I'm going to denote that m subscript cv. So that's the number of cross validation examples.

7:52

Following our earlier notational convention, I'm going to use x(i) cv comma y(i) cv to denote the i-th cross validation example. And finally, we also have a test set over here, with our m subscript test being the number of test examples. So, now that we've defined the training, validation (or cross validation), and test sets, we can also define the training error, cross validation error, and test error. Here's my training error, and I'm just writing this as J subscript train of theta. This is pretty much the same thing as the J of theta that I've been writing so far; it's just the training set error, as measured on the training set. Then J subscript cv is my cross validation error. This is pretty much what you'd expect: it's just like the training error, except measured on the cross validation data set. And here's my test set error, same as before.
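The three errors are shown on the slide but not in the transcript. Written out for linear regression in the course's usual notation (a reconstruction, so treat the exact form as an assumption), they differ only in which examples are summed over:

\[
J_{train}(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2
\]

\[
J_{cv}(\theta) = \frac{1}{2m_{cv}} \sum_{i=1}^{m_{cv}} \left( h_\theta(x^{(i)}_{cv}) - y^{(i)}_{cv} \right)^2
\]

\[
J_{test}(\theta) = \frac{1}{2m_{test}} \sum_{i=1}^{m_{test}} \left( h_\theta(x^{(i)}_{test}) - y^{(i)}_{test} \right)^2
\]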

8:49

So when faced with a model selection problem like this, what we're going to do is, instead of using the test set to select the model, use the validation set, or the cross validation set, to select the model. Concretely, we're going to first take our first hypothesis, this first model, and minimize the cost function, and this will give me some parameter vector theta for the linear model. As before, I'm going to put a superscript 1 on it, just to denote that this is the parameter for the linear model. We do the same thing for the quadratic model and get some parameter vector theta two, get some parameter vector theta three, and so on, down to theta ten for the 10th-order polynomial. And what I'm going to do is, instead of testing these hypotheses on the test set, test them on the cross validation set, and measure J subscript cv to see how well each of these hypotheses does on my cross validation set.

9:53

And then I'm going to pick the hypothesis with the lowest cross validation error. So for this example, let's say for the sake of argument that it was my fourth-order polynomial that had the lowest cross validation error. In that case I'm going to pick this fourth-order polynomial model. What this means is that the parameter d (remember, d was the degree of polynomial, so d equals two, d equals three, all the way up to d equals 10) has been fit: we'll say d equals four, and we did so using the cross validation set. And so this degree of polynomial, this parameter, is no longer fit to the test set. We've saved away the test set, and we can use the test set to measure, or to estimate, the generalization error of the model that was selected. So, that was model selection, and how you can take your data, split it into training, validation, and test sets, use your cross validation data to select the model, and evaluate it on the test set.

10:59

One final note: I should say that in machine learning as it is practiced today, there are many people who will do that earlier thing that I talked about, and said wasn't such a good idea, of selecting the model using the test set and then using the same test set to report the error. That is, selecting the degree of polynomial on the test set, and then reporting the error on the test set as though that were a good estimate of generalization error. Unfortunately, many, many people do this. If you have a massive, massive test set, it is maybe not a terrible thing to do, but most practitioners of machine learning tend to advise against it. It's considered better practice to have separate train, validation, and test sets. I just want to warn you that sometimes people do use the same data for the purpose of the validation set and for the purpose of the test set, so that they have just a training set and a test set. That's not considered good practice, though you will see some people do it. But, if possible, I would recommend against doing that yourself.

Reading: Model Selection and Train/Validation/Test Sets

Model Selection and Train/Validation/Test Sets

Just because a learning algorithm fits a training set well, that does not mean it is a good hypothesis. It could overfit, and as a result your predictions on the test set would be poor. The error of your hypothesis as measured on the data set with which you trained the parameters is likely to be lower than the error on any other data set.

Given many models with different polynomial degrees, we can use a systematic approach to identify the 'best' function. In order to choose the model of your hypothesis, you can test each degree of polynomial and look at the error result.

One way to break down our dataset into the three sets is:

- Training set: 60%

- Cross validation set: 20%

- Test set: 20%

We can now calculate three separate error values for the three different sets using the following method:

- Optimize the parameters in Θ using the training set for each polynomial degree.

- Find the polynomial degree d with the least error using the cross validation set.

- Estimate the generalization error using the test set with \(J_{test}(\Theta^{(d)})\), where \(\Theta^{(d)}\) is the parameter vector from the polynomial with the lowest cross validation error.

This way, the degree of the polynomial d has not been trained using the test set.
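The three steps above can be sketched with NumPy's polynomial fitting. This is a minimal illustration under stated assumptions: the synthetic cubic data, the noise level, the fixed seed, and the helper name `half_mse` are all made up for the example, and unregularized least squares via `np.polyfit` stands in for whatever optimizer is used in practice.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic 1-D data from a cubic plus noise (an assumption for illustration).
m = 200
x = rng.uniform(-1.0, 1.0, size=m)
y = 1.0 - 2.0 * x + 3.0 * x**3 + rng.normal(scale=0.1, size=m)

# 60 / 20 / 20 split; x was drawn at random, so its order is already random.
x_train, y_train = x[:120], y[:120]
x_cv, y_cv = x[120:160], y[120:160]
x_test, y_test = x[160:], y[160:]

def half_mse(coeffs, x_data, y_data):
    """Average squared error with the 1/(2m) convention used in the text."""
    residuals = np.polyval(coeffs, x_data) - y_data
    return float(np.mean(residuals**2)) / 2.0

degrees = range(1, 11)

# Step 1: fit the parameters for every degree d on the training set only.
fits = {d: np.polyfit(x_train, y_train, deg=d) for d in degrees}

# Step 2: pick the degree with the lowest cross validation error.
cv_errors = {d: half_mse(fits[d], x_cv, y_cv) for d in degrees}
best_d = min(cv_errors, key=cv_errors.get)

# Step 3: estimate generalization error on the test set, which played
# no part in choosing either the parameters or the degree.
test_error = half_mse(fits[best_d], x_test, y_test)
print(best_d, cv_errors[best_d], test_error)
```

The test set is touched exactly once, after `best_d` is fixed, which is what keeps the final number a fair estimate.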

Video: Diagnosing Bias vs. Variance

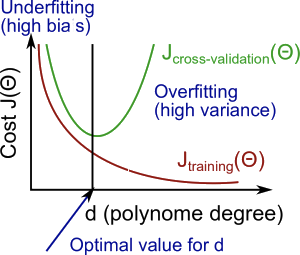

If you run a learning algorithm and it doesn't do as long as you are hoping, almost all the time, it will be because you have either a high bias problem or a high variance problem, in other words, either an underfitting problem or an overfitting problem. In this case, it's very important to figure out which of these two problems is bias or variance or a bit of both that you actually have. Because knowing which of these two things is happening would give a very strong indicator for whether the useful and promising ways to try to improve your algorithm. In this video, I'd like to delve more deeply into this bias and variance issue and understand them better as was figure out how to look in a learning algorithm and evaluate or diagnose whether we might have a bias problem or a variance problem since this will be critical to figuring out how to improve the performance of a learning algorithm that you will implement. So, you've already seen this figure a few times where if you fit two simple hypothesis like a straight line that underfits the data, if you fit a two complex hypothesis, then that might fit the training set perfectly but overfit the data and this may be hypothesis of some intermediate level of complexities of some maybe degree two polynomials or not too low and not too high degree that's like just right and gives you the best generalization error over these options. Now that we're armed with the notion of chain training and validation in test sets, we can understand the concepts of bias and variance a little bit better. Concretely, let's let our training error and cross validation error be defined as in the previous videos. Just say the squared error, the average squared error, as measured on the training sets or as measured on the cross validation set. Now, let's plot the following figure. On the horizontal axis I'm going to plot the degree of polynomial. So, as I go to the right I'm going to be fitting higher and higher order polynomials. 
So where the left of this figure where maybe d equals one, we're going to be fitting very simple functions whereas we're here on the right of the horizontal axis, I have much larger values of ds, of a much higher degree polynomial. So here, that's going to correspond to fitting much more complex functions to your training set. Let's look at the training error and the cross validation error and plot them on this figure. Let's start with the training error. As we increase the degree of the polynomial, we're going to be able to fit our training set better and better and so if d equals one, then there is high training error, if we have a very high degree of polynomial our training error is going to be really low, maybe even 0 because will fit the training set really well. So, as we increase the degree of polynomial, we find typically that the training error decreases. So I'm going to write J subscript train of theta there, because our training error tends to decrease with the degree of the polynomial that we fit to the data. Next, let's look at the cross-validation error or for that matter, if we look at the test set error, we'll get a pretty similar result as if we were to plot the cross validation error. So, we know that if d equals one, we're fitting a very simple function and so we may be underfitting the training set and so it's going to be very high cross-validation error. If we fit an intermediate degree polynomial, we had d equals two in our example in the previous slide, we're going to have a much lower cross-validation error because we're finding a much better fit to the data. Conversely, if d were too high. So if d took on say a value of four, then we're again overfitting, and so we end up with a high value for cross-validation error. So, if you were to vary this smoothly and plot a curve, you might end up with a curve like that where that's JCV of theta. Again, if you plot J test of theta you get something very similar. 
So, this sort of plot also helps us to better understand the notions of bias and variance. Concretely, suppose you have applied a learning algorithm and it's not performing as well as you are hoping, so if your cross-validation set error or your test set error is high, how can we figure out if the learning algorithm is suffering from high bias or suffering from high variance? So, the setting of a cross-validation error being high corresponds to either this regime or this regime. So, this regime on the left corresponds to a high bias problem. That is, if you are fitting a overly low order polynomial such as a d equals one when we really needed a higher order polynomial to fit to data, whereas in contrast this regime corresponds to a high variance problem. That is, if d the degree of polynomial was too large for the data set that we have, and this figure gives us a clue for how to distinguish between these two cases. Concretely, for the high bias case, that is the case of underfitting, what we find is that both the cross validation error and the training error are going to be high. So, if your algorithm is suffering from a bias problem, the training set error will be high and you might find that the cross validation error will also be high. It might be close, maybe just slightly higher, than the training error. So, if you see this combination, that's a sign that your algorithm may be suffering from high bias. In contrast, if your algorithm is suffering from high variance, then if you look here, we'll notice that J train, that is the training error, is going to be low. That is, you're fitting the training set very well, whereas your cross validation error assuming that this is, say, the squared error which we're trying to minimize say, whereas in contrast your error on a cross validation set or your cross function or cross validation set will be much bigger than your training set error. So, this is a double greater than sign. 
That's the math symbol for much greater than, denoted by two greater than signs. So if you see this combination of values, then that's a clue that your learning algorithm may be suffering from high variance and might be overfitting. The key that distinguishes these two cases is, if you have a high bias problem, your training set error will also be high, as your hypothesis is just not fitting the training set well. If you have a high variance problem, your training set error will usually be low, that is, much lower than your cross-validation error. So hopefully that gives you a somewhat better understanding of the two problems of bias and variance. I still have a lot more to say about bias and variance in the next few videos, and I'll show you even more details on how to diagnose them. What we'll see later is that by figuring out whether a learning algorithm may be suffering from high bias, high variance, or a combination of both, we get much better guidance for what might be promising things to try in order to improve the performance of a learning algorithm.

Reading: Diagnosing Bias vs. Variance

Diagnosing Bias vs. Variance

In this section we examine the relationship between the degree of the polynomial d and the underfitting or overfitting of our hypothesis.

- We need to distinguish whether bias or variance is the problem contributing to bad predictions.

- High bias is underfitting and high variance is overfitting. Ideally, we need to find a golden mean between these two.

The training error will tend to decrease as we increase the degree d of the polynomial.

At the same time, the cross validation error will tend to decrease as we increase d up to a point, and then it will increase as d is increased, forming a convex curve.

High bias (underfitting): both \(J_{train}(\Theta)\) and \(J_{CV}(\Theta)\) will be high. Also, \(J_{CV}(\Theta) \approx J_{train}(\Theta)\).

High variance (overfitting): \(J_{train}(\Theta)\) will be low and \(J_{CV}(\Theta)\) will be much greater than \(J_{train}(\Theta)\).

This is summarized in the figure below:

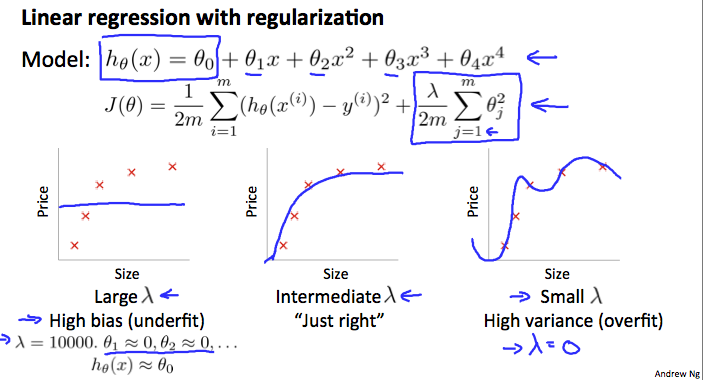
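The diagnosis above can be tried out numerically. The following is a minimal sketch, not the course's own code: it assumes NumPy, a synthetic noisy quadratic data set, and hypothetical helper names (`poly_features`, `half_mse`, `degree_sweep`). It fits polynomials of increasing degree d by unregularized least squares and reports the training and cross validation errors for each d.

```python
import numpy as np

def poly_features(x, d):
    """Map a 1-D input array to the columns [1, x, x^2, ..., x^d]."""
    return np.vander(x, d + 1, increasing=True)

def half_mse(X, y, theta):
    """J(theta) = 1/(2m) * sum of squared errors (no regularization term)."""
    r = X @ theta - y
    return float(r @ r) / (2 * len(y))

def degree_sweep(x_train, y_train, x_cv, y_cv, degrees):
    """Return a list of (d, J_train, J_cv), one entry per polynomial degree d."""
    results = []
    for d in degrees:
        X_tr = poly_features(x_train, d)
        # Unregularized least-squares fit on the training set only.
        theta, *_ = np.linalg.lstsq(X_tr, y_train, rcond=None)
        j_train = half_mse(X_tr, y_train, theta)
        j_cv = half_mse(poly_features(x_cv, d), y_cv, theta)
        results.append((d, j_train, j_cv))
    return results

# Synthetic data (an assumption for illustration): a noisy quadratic.
rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, 40)
y = 1.0 + 2.0 * x - 3.0 * x**2 + rng.normal(0, 0.1, 40)
x_train, y_train, x_cv, y_cv = x[:20], y[:20], x[20:], y[20:]

for d, j_train, j_cv in degree_sweep(x_train, y_train, x_cv, y_cv, range(1, 9)):
    print(f"d={d}  J_train={j_train:.4f}  J_cv={j_cv:.4f}")
```

On data like this, one would expect d = 1 to show both errors high (the high bias pattern), and larger d to drive the training error down while the cross validation error stops improving.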

Video: Regularization and Bias/Variance

You've seen how regularization can help prevent overfitting. But how does it affect the bias and variance of a learning algorithm? In this video I'd like to go deeper into the issue of bias and variance and talk about how it interacts with and is affected by the regularization of your learning algorithm.

0:22

Suppose we're fitting a high-order polynomial, like that shown here, but to prevent overfitting we need to use regularization, like that shown here. So we have this regularization term to try to keep the values of the parameters small. And as usual, the regularization sum runs from j = 1 to m, rather than j = 0 to m. Let's consider three cases. The first is the case of a very large value of the regularization parameter lambda, such as if lambda were equal to 10,000. Some huge value.

0:54

In this case, all of these parameters, theta 1, theta 2, theta 3, and so on would be heavily penalized and so we end up with most of these parameter values being close to zero. And the hypothesis will be roughly h of x, just equal or approximately equal to theta zero. So we end up with a hypothesis that more or less looks like that, more or less a flat, constant straight line. And so this hypothesis has high bias and it badly underfits this data set, so the horizontal straight line is just not a very good model for this data set. At the other extreme is if we have a very small value of lambda, such as if lambda were equal to zero. In that case, given that we're fitting a high-order polynomial, basically without regularization or with very minimal regularization, we end up with our usual high-variance, overfitting setting. This is basically because if lambda is equal to zero, we're just fitting without regularization, so the hypothesis overfits. And it's only if we have some intermediate value of lambda that is neither too large nor too small that we end up with parameters theta that give us a reasonable fit to this data. So, how can we automatically choose a good value for the regularization parameter?

2:19

Just to reiterate, here's our model, and here's our learning algorithm's objective. For the setting where we're using regularization, let me define J train(theta) to be something different, to be the optimization objective, but without the regularization term. Previously, in an earlier video, when we were not using regularization, I defined J train of theta to be the same as J of theta, the cost function. But when we're using regularization, with this extra lambda term, we're going to define J train to be just the sum of squared errors on the training set, or the average squared error on the training set, without taking into account that regularization term. And similarly, I'm then also going to define the cross validation set error and the test set error as before, to be the average sum of squared errors on the cross validation and the test sets. So just to summarize, my definitions of J train, J CV and J test are just the average squared error, or one half of the average squared error, on the training, validation and test sets, without the extra regularization term. So, this is how we can automatically choose the regularization parameter lambda. What I usually do is have some range of values of lambda I want to try out. So I might be considering not using regularization, or here are a few values I might try: lambda = 0.01, 0.02, 0.04, and so on. And I usually step these up in multiples of two, until some maybe larger value; if I were to do these in multiples of 2 I'd end up with 10.24. It's not 10 exactly, but this is close enough, and the third or fourth decimal place won't affect your result that much. So, this gives me maybe 12 different models that I'm trying to select among, corresponding to 12 different values of the regularization parameter lambda. And of course you can also go to values less than 0.01 or values larger than 10, but I've just truncated it here for convenience. Given these 12 models, what we can do is then the following: we can take this first model with lambda equals zero and minimize my cost function J of theta, and this will give me some parameter vector theta. And similar to the earlier video, let me just denote this as theta superscript one.

4:49

And then I can take my second model with lambda set to 0.01 and minimize my cost function, now using lambda equals 0.01 of course, to get some different parameter vector theta. Let me denote that theta(2). And then I end up with theta(3) for my third model. And so on, until for my final model with lambda set to 10 or 10.24, I end up with this theta(12). Next, I can take all of these hypotheses, all of these parameters, and use my cross validation set to validate them. So I can look at my first model, my second model, fit with these different values of the regularization parameter, and evaluate them on my cross validation set, basically measuring the average squared error of each of these parameter vectors theta on my cross validation set. And I would then pick whichever one of these 12 models gives me the lowest error on the cross validation set. And let's say, for the sake of this example, that I end up picking theta 5, the parameters from the fifth model, because that has the lowest cross validation error. Having done that, finally, what I would do if I wanted to report a test set error is to take the parameter theta 5 that I've selected, and look at how well it does on my test set. So once again, here it is as if we've fit this parameter, theta, to my cross-validation set, which is why I'm setting aside a separate test set that I'm going to use to get a better estimate of how well my parameter vector, theta, will generalize to previously unseen examples. So that's model selection applied to selecting the regularization parameter lambda. The last thing I'd like to do in this video is get a better understanding of how cross validation and training error vary as we vary the regularization parameter lambda. And so just a reminder, that was our original cost function J of theta. But for this purpose we're going to define training error without using the regularization term, and cross validation error without using the regularization term.

7:07

And what I'd like to do is plot this Jtrain and plot this Jcv, meaning just how well does my hypothesis do on the training set and how well does my hypothesis do on the cross validation set, as I vary my regularization parameter lambda.

7:27

So as we saw earlier if lambda is small then we're not using much regularization

7:35

and we run a larger risk of overfitting, whereas if lambda is large, that is, if we were on the right part of this horizontal axis, then with a large value of lambda we run a higher risk of having a bias problem. So if you plot J train and J cv, what you find is that, for small values of lambda, you can fit the training set relatively well because you're not regularizing. So, for small values of lambda, the regularization term basically goes away, and you're just minimizing pretty much just the squared errors. So when lambda is small, you end up with a small value for Jtrain, whereas if lambda is large, then you have a high bias problem, and you might not fit your training set well, so you end up with a value up there. So Jtrain of theta will tend to increase when lambda increases, because a large value of lambda corresponds to high bias, where you might not even fit your training set well, whereas a small value of lambda corresponds to being able to freely fit, say, a very high degree polynomial to your data. As for the cross validation error, we end up with a figure like this,

8:51

where over here on the right, if we have a large value of lambda, we may end up underfitting, and so this is the bias regime. And so the cross validation error will be high. Let me just label that as Jcv(theta). So, with high bias, we won't be fitting well, we won't be doing well on the cross validation set, whereas here on the left, this is the high variance regime, where if we have too small a value of lambda, then we may be overfitting the data. And so by overfitting the data, the cross validation error will also be high. And so, this is what the cross validation error and the training error may look like as we vary the regularization parameter lambda. And so once again, it will often be some intermediate value of lambda that is just right, or that works best in terms of having a small cross validation error or a small test set error. And whereas the curves I've drawn here are somewhat cartoonish and somewhat idealized, on a real data set the curves you get may end up looking a little bit more messy and just a little bit more noisy than this. For some data sets you will really see these sorts of trends, and by looking at a plot of the hold-out cross validation error you can either manually or automatically try to select a point that minimizes the cross validation error, and select the value of lambda corresponding to low cross validation error. When I'm trying to pick the regularization parameter lambda for a learning algorithm, often I find that plotting a figure like the one shown here helps me understand better what's going on and helps me verify that I am indeed picking a good value for the regularization parameter lambda. So hopefully that gives you more insight into regularization and its effects on the bias and variance of a learning algorithm. By now you've seen bias and variance from a lot of different perspectives. 
And what I'd like to do in the next video is take all the insights we've gone through and build on them to put together a diagnostic that's called learning curves, which is a tool that I often use to diagnose if the learning algorithm may be suffering from a bias problem or a variance problem, or a little bit of both.

Reading: Regularization and Bias/Variance

Regularization and Bias/Variance

Note: [The regularization term below and throughout the video should be \(\frac{\lambda}{2m} \sum_{j=1}^n \theta_j^2\) and NOT \(\frac{\lambda}{2m} \sum_{j=1}^m \theta_j^2\)]

In the figure above, we see that as \(\lambda\) increases, our fit becomes more rigid. On the other hand, as \(\lambda\) approaches 0, we tend to overfit the data. So how do we choose our parameter \(\lambda\) to get it 'just right'? In order to choose the model and the regularization term λ, we need to:

- Create a list of lambdas (i.e. λ∈{0,0.01,0.02,0.04,0.08,0.16,0.32,0.64,1.28,2.56,5.12,10.24});

- Create a set of models with different degrees or any other variants.

- Iterate through the \(\lambda\)s and for each \(\lambda\) go through all the models to learn some \(\Theta\).

- Compute the cross validation error \(J_{CV}(\Theta)\) using the learned Θ (computed with λ), but evaluate the error itself without the regularization term (that is, with λ = 0).

- Select the best combo that produces the lowest error on the cross validation set.

- Using the best combo Θ and λ, apply it on \(J_{test}(\Theta)\) to see if it has a good generalization of the problem.

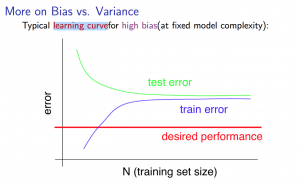
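The selection procedure listed above can be sketched in a few lines. This is an illustrative sketch under stated assumptions rather than the course's implementation: it uses NumPy, a single model family (degree-6 polynomial features), synthetic data, and hypothetical helper names (`fit_regularized`, `half_mse`, `select_lambda`). The fit uses the regularized normal equation with the intercept left unpenalized, and the errors are computed without the regularization term.

```python
import numpy as np

def fit_regularized(X, y, lam):
    """Minimize 1/(2m)*||X theta - y||^2 + lam/(2m) * sum_{j>=1} theta_j^2.

    Column 0 of X is assumed to be the all-ones intercept column,
    so theta_0 is not penalized.
    """
    penalty = lam * np.eye(X.shape[1])
    penalty[0, 0] = 0.0
    return np.linalg.solve(X.T @ X + penalty, X.T @ y)

def half_mse(X, y, theta):
    """Error without the regularization term: 1/(2m) * sum of squared errors."""
    r = X @ theta - y
    return float(r @ r) / (2 * len(y))

def select_lambda(X_train, y_train, X_cv, y_cv, lambdas):
    """Return (best lambda, its J_cv, its theta), chosen by lowest J_cv."""
    best = None
    for lam in lambdas:
        theta = fit_regularized(X_train, y_train, lam)  # train WITH lambda
        j_cv = half_mse(X_cv, y_cv, theta)               # evaluate WITHOUT it
        if best is None or j_cv < best[1]:
            best = (lam, j_cv, theta)
    return best

# The 12 candidate values: 0, then 0.01 doubled up to 10.24.
lambdas = [0.0] + [0.01 * 2**k for k in range(11)]

# Synthetic data (an assumption for illustration).
rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, 75)
y = np.sin(np.pi * x) + rng.normal(0, 0.2, 75)
X = np.vander(x, 7, increasing=True)  # columns 1, x, ..., x^6
X_train, y_train = X[:15], y[:15]
X_cv, y_cv = X[15:45], y[15:45]
X_test, y_test = X[45:], y[45:]

best_lam, best_j_cv, best_theta = select_lambda(X_train, y_train, X_cv, y_cv, lambdas)
print("chosen lambda:", best_lam)
print("J_cv:", best_j_cv)
print("J_test:", half_mse(X_test, y_test, best_theta))
```

The test set is touched only once, after λ has been chosen, so that the reported test error is not biased by the selection step.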

Video: Learning Curves

In this video, I'd like to tell you about learning curves.

0:03

Learning curves are often a very useful thing to plot, either if you want to sanity check that your algorithm is working correctly, or if you want to improve the performance of the algorithm.

0:13

And learning curves are a tool that I actually use very often to try to diagnose if a particular learning algorithm may be suffering from a bias problem, a variance problem, or a bit of both.

0:27

Here's what a learning curve is. To plot a learning curve, what I usually do is plot j train which is, say,

0:35

average squared error on my training set or Jcv which is the average squared error on my cross validation set. And I'm going to plot that as a function of m, that is, as a function of the number of training examples I have. And so m is usually a constant, like maybe I just have, you know, 100 training examples, but what I'm going to do is artificially reduce my training set size. So, I deliberately limit myself to using only, say, 10 or 20 or 30 or 40 training examples and plot what the training error is and what the cross validation error is for these smaller training set sizes. So let's see what these plots may look like. Suppose I have only one training example, like that shown in this first example here, and let's say I'm fitting a quadratic function. Well, I

1:22

have only one training example. I'm going to be able to fit it perfectly right? You know, just fit the quadratic function. I'm going to have 0 error on the one training example. If I have two training examples. Well the quadratic function can also fit that very well. So,

1:37

even if I am using regularization, I can probably fit this quite well. And if I am using no regularization, I'm going to fit this perfectly. And if I have three training examples, again, yeah, I can fit a quadratic function perfectly. So if m equals 1 or m equals 2 or m equals 3,

1:54

my training error on my training set is going to be 0, assuming I'm not using regularization, or it may be slightly larger than 0 if I'm using regularization. And by the way, if I have a large training set and I'm artificially restricting the size of my training set in order to plot J train, here if I set m equals 3, say, and I train on only three examples, then for this figure I am going to measure my training error only on the three examples that I've actually fit my hypothesis to,

2:27

and so even if I have, say, 100 training examples, if I want to plot what my training error is for m equals 3, what I'm going to do

2:34

is to measure the training error on the three examples that I've actually fit my hypothesis to.

2:41

And not on all the other examples that I have deliberately omitted from the training process. So just to summarize, what we've seen is that if the training set size is small, then the training error is going to be small as well. Because, you know, if we have a small training set, it is going to be very easy to fit the training set very well, maybe even perfectly. Now say we have m equals 4, for example. Well, then a quadratic function can no longer fit this data set perfectly, and if I have m equals 5, then, you know, maybe the quadratic function will fit the data only so-so.

3:16

As the training set gets larger, it becomes harder and harder to ensure that I can find a quadratic function that passes through all my examples perfectly. So in fact, as the training set size grows, what you find is that my average training error actually increases, and so if you plot this figure, what you find is that the training set error, that is, the average error of your hypothesis, grows as m grows. And just to repeat, the intuition is that when m is small, when you have very few training examples, it's pretty easy to fit every single one of your training examples perfectly, and so your error is going to be small, whereas when m is larger, it gets harder to fit all the training examples perfectly, and so your training set error becomes larger. Now, how about the cross validation error? Well, the cross validation error is my error on this cross validation set that I haven't seen, and so, you know, when I have a very small training set, I'm not going to generalize well, just not going to do well on that. So, right, this hypothesis here doesn't look like a good one, and it's only when I get a larger training set that, you know, I'm starting to get hypotheses that maybe fit the data somewhat better. So your cross validation error and your test set error will tend to decrease as your training set size increases, because the more data you have, the better you do at generalizing to new examples. So, just the more data you have, the better the hypothesis you fit. So if you plot J train and Jcv, this is the sort of thing that you get. Now let's look at what the learning curves may look like if we have either high bias or high variance problems. Suppose your hypothesis has high bias, and to explain this I'm going to use an example of fitting a straight line to data that, you know, can't really be fit well by a straight line.

5:09

So we end up with a hypothesis that maybe looks like that.

5:13

Now let's think what would happen if we were to increase the training set size. So if instead of five examples like what I've drawn there, imagine that we have a lot more training examples.

5:25

Well, what happens if you fit a straight line to this? What you find is that you end up with, you know, pretty much the same straight line. I mean, a straight line just cannot fit this data, and getting a ton more data, well, the straight line isn't going to change that much. This is the best possible straight-line fit to this data, but the straight line just can't fit this data set that well. So, if you plot the cross validation error,

5:49

this is what it will look like.

5:51

Up on the left, if you have a minuscule training set size, like, you know, maybe just one training example, it's not going to do well. But by the time you have reached a certain number of training examples, you have almost fit the best possible straight line, and even if you end up with a much larger training set size, a much larger value of m, you know, you're basically getting the same straight line, and so the cross-validation error - let me label that - or test set error will plateau out, or flatten out, pretty soon, once you reach beyond a certain number of training examples, because you've pretty much fit the best possible straight line. And how about training error? Well, the training error will again be small.

6:34

And what you find in the high bias case is that the training error will end up close to the cross validation error, because you have so few parameters and so much data, at least when m is large. The performance on the training set and the cross validation set will be very similar.

6:53

And so, this is what your learning curves will look like, if you have an algorithm that has high bias.

7:00

And finally, the problem with high bias is reflected in the fact that both the cross validation error and the training error are high, and so you end up with a relatively high value of both Jcv and the j train.

7:15

This also implies something very interesting, which is that, if a learning algorithm has high bias, as we get more and more training examples, that is, as we move to the right of this figure, we'll notice that the cross validation error isn't going down much, it's basically fattened up, and so if learning algorithms are really suffering from high bias.

7:36

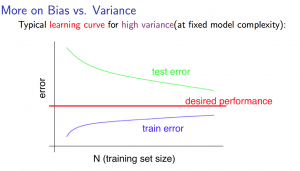

Getting more training data by itself will actually not help that much,and as our figure example in the figure on the right, here we had only five training. examples, and we fill certain straight line. And when we had a ton more training data, we still end up with roughly the same straight line. And so if the learning algorithm has high bias give me a lot more training data. That doesn't actually help you get a much lower cross validation error or test set error. So knowing if your learning algorithm is suffering from high bias seems like a useful thing to know because this can prevent you from wasting a lot of time collecting more training data where it might just not end up being helpful. Next let us look at the setting of a learning algorithm that may have high variance.

8:21

Let us just look at the training error if you have a very small training set, like the five training examples shown in the figure on the right, and if we're fitting, say, a very high order polynomial,

8:34

and I've written a hundredth degree polynomial which really no one uses, but just an illustration.

8:39

And if we're using a fairly small value of lambda, maybe not zero, but a fairly small value of lambda, then we'll end up, you know, fitting this data very well with a function that overfits it. So, if the training set size is small, our training error, that is, J train of theta, will be small.

9:03

And as this training set size increases a bit, you know, we may still be overfitting this data a little bit but it also becomes slightly harder to fit this data set perfectly, and so, as the training set size increases, we'll find that j train increases, because it is just a little harder to fit the training set perfectly when we have more examples, but the training set error will still be pretty low. Now, how about the cross validation error? Well, in high variance setting, a hypothesis is overfitting and so the cross validation error will remain high, even as we get you know, a moderate number of training examples and, so maybe, the cross validation error may look like that. And the indicative diagnostic that we have a high variance problem,

9:50

is the fact that there's this large gap between the training error and the cross validation error.

9:57

And looking at this figure, if we think about adding more training data, that is, taking this figure and extrapolating to the right, we can kind of tell that, you know, the two curves, the blue curve and the magenta curve, are converging to each other. And so, if we were to extrapolate this figure to the right, then it seems likely that the training error will keep on going up and the

10:27

cross-validation error would keep on going down. And the thing we really care about is the cross-validation error or the test set error, right? So in this sort of figure, we can tell that if we keep on adding training examples and extrapolate to the right, well, our cross validation error will keep on coming down. And so, in the high variance setting, getting more training data is, indeed, likely to help. And so again, this seems like a useful thing to know if your learning algorithm is suffering from a high variance problem, because that tells you, for example, that it may be worth your while to see if you can go and get some more training data.

11:03

Now, on the previous slide and this slide, I've drawn fairly clean fairly idealized curves. If you plot these curves for an actual learning algorithm, sometimes you will actually see, you know, pretty much curves, like what I've drawn here. Although, sometimes you see curves that are a little bit noisier and a little bit messier than this. But plotting learning curves like these can often tell you, can often help you figure out if your learning algorithm is suffering from bias, or variance or even a little bit of both. So when I'm trying to improve the performance of a learning algorithm, one thing that I'll almost always do is plot these learning curves, and usually this will give you a better sense of whether there is a bias or variance problem.

11:44

And in the next video we'll see how this can help suggest specific actions to take, or to not take, in order to try to improve the performance of your learning algorithm.

Reading: Learning Curves

Learning Curves

Training an algorithm on a very small number of data points (such as 1, 2 or 3) will easily have 0 errors because we can always find a quadratic curve that touches exactly that number of points. Hence:

- As the training set gets larger, the error for a quadratic function increases.

- The error value will plateau out after a certain m, or training set size.

Experiencing high bias:

Low training set size: causes \(J_{train}(\Theta)\) to be low and \(J_{CV}(\Theta)\) to be high.

Large training set size: causes both \(J_{train}(\Theta)\) and \(J_{CV}(\Theta)\) to be high with \(J_{train}(\Theta) \approx J_{CV}(\Theta)\).

If a learning algorithm is suffering from high bias, getting more training data will not (by itself) help much.

Experiencing high variance:

Low training set size: \(J_{train}(\Theta)\) will be low and \(J_{CV}(\Theta)\) will be high.

Large training set size: \(J_{train}(\Theta)\) increases with training set size and \(J_{CV}(\Theta)\) continues to decrease without leveling off. Also, \(J_{train}(\Theta) < J_{CV}(\Theta)\) but the difference between them remains significant.

If a learning algorithm is suffering from high variance, getting more training data is likely to help.
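As a rough illustration of how such curves can be computed, here is a minimal sketch. It assumes NumPy, a quadratic hypothesis, synthetic data, and hypothetical helper names (`fit_regularized`, `half_mse`, `learning_curve`); none of these come from the course materials. For each training set size i, it fits on the first i training examples, measures the training error on those same i examples only, and measures the cross validation error on the full cross validation set.

```python
import numpy as np

def fit_regularized(X, y, lam=0.0):
    """Least-squares fit with an L2 penalty on every theta_j except theta_0.

    Written as an augmented least-squares problem so that it also works
    when there are fewer examples than parameters (lstsq then returns
    the minimum-norm solution).
    """
    n = X.shape[1]
    reg = np.sqrt(lam) * np.eye(n)
    reg[0, 0] = 0.0  # do not penalize the intercept
    A = np.vstack([X, reg])
    b = np.concatenate([y, np.zeros(n)])
    theta, *_ = np.linalg.lstsq(A, b, rcond=None)
    return theta

def half_mse(X, y, theta):
    """Error without the regularization term: 1/(2m) * sum of squared errors."""
    r = X @ theta - y
    return float(r @ r) / (2 * len(y))

def learning_curve(X_train, y_train, X_cv, y_cv, lam=0.0):
    """Return arrays (J_train, J_cv) indexed by training set size 1..m."""
    j_train, j_cv = [], []
    for i in range(1, len(y_train) + 1):
        X_i, y_i = X_train[:i], y_train[:i]
        theta = fit_regularized(X_i, y_i, lam)
        j_train.append(half_mse(X_i, y_i, theta))  # only the i examples used
        j_cv.append(half_mse(X_cv, y_cv, theta))   # the whole CV set
    return np.array(j_train), np.array(j_cv)

# Synthetic data (an assumption for illustration).
rng = np.random.default_rng(1)
x = rng.uniform(-1, 1, 60)
y = np.sin(2 * x) + rng.normal(0, 0.2, 60)
X = np.vander(x, 3, increasing=True)  # quadratic hypothesis: 1, x, x^2
X_train, y_train, X_cv, y_cv = X[:30], y[:30], X[30:], y[30:]

j_train, j_cv = learning_curve(X_train, y_train, X_cv, y_cv)
for m in (1, 2, 3, 5, 10, 30):
    print(f"m={m:2d}  J_train={j_train[m - 1]:.4f}  J_cv={j_cv[m - 1]:.4f}")
```

Plotting `j_train` and `j_cv` against the training set size gives the learning curve; a persistent large gap between the two suggests high variance, while two high, nearly equal curves suggest high bias.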

Video: Deciding What to Do Next Revisited

We've talked about how to evaluate learning algorithms, talked about model selection, talked a lot about bias and variance. So how does this help us figure out what are potentially fruitful, potentially not fruitful things to try to do to improve the performance of a learning algorithm.

0:15

Let's go back to our original motivating example and go for the result.

0:21

So here is our earlier example of maybe having fit regularized linear regression and finding that it doesn't work as well as we're hoping. We said that we had this menu of options. So is there some way to figure out which of these might be fruitful options? The first thing on this list was getting more training examples. What this is good for is that it helps to fix high variance.

0:45

And concretely, if you instead have a high bias problem and don't have any variance problem, then we saw in the previous video that getting more training examples,

0:54

well, maybe that just isn't going to help much at all. So the first option is useful only if you, say, plot the learning curves and figure out that you have at least a bit of variance, meaning that the cross-validation error is, you know, quite a bit bigger than your training set error. How about trying a smaller set of features? Well, trying a smaller set of features, that's again something that fixes high variance.

1:17

And in other words, if you figure out, by looking at learning curves or something else that you used, that you have a high bias problem, then for goodness sakes, don't waste your time trying to carefully select out a smaller set of features to use. Because if you have a high bias problem, using fewer features is not going to help. Whereas in contrast, if you look at the learning curves or something else and you figure out that you have a high variance problem, then, indeed, trying to select out a smaller set of features might be a very good use of your time. How about trying to get additional features? Adding features, usually, not always, but usually we think of this as a solution

1:54

for fixing high bias problems. So if you are adding extra features it's usually because

2:01

your current hypothesis is too simple, and so we want to try to get additional features to make our hypothesis better able to fit the training set. And similarly, adding polynomial features; this is another way of adding features and so there is another way to try to fix the high bias problem.

2:21

And concretely, if your learning curves show you that you still have a high variance problem, then, you know, again this is maybe a less good use of your time.

2:30

And finally, decreasing and increasing lambda. These are quick and easy to try; I guess these are less likely to be a waste of, you know, many months of your life. But decreasing lambda, you already know, fixes high bias.

2:45

In case this isn't clear to you, you know, I do encourage you to pause the video and think through this and convince yourself that decreasing lambda helps fix high bias, whereas increasing lambda fixes high variance.

2:59

And if you aren't sure why this is the case, do pause the video and make sure you can convince yourself that this is the case. Or take a look at the curves that we were plotting at the end of the previous video and try to make sure you understand why these are the case.

3:15

Finally, let us take everything we have learned and relate it back to neural networks and so, here is some practical advice for how I usually choose the architecture or the connectivity pattern of the neural networks I use.

3:30

So, if you are fitting a neural network, one option would be to fit a relatively small neural network with, say,

3:48

relatively few, maybe only one hidden layer and maybe only a relatively few number of hidden units. So, a network like this might have relatively few parameters and be more prone to underfitting.

4:00

The main advantage of these small neural networks is that the computation will be cheaper.

4:05

An alternative would be to fit a, maybe, relatively large neural network with either more hidden units (there are a lot of hidden units in one layer there) or with more hidden layers.

4:16

And so these neural networks tend to have more parameters and therefore be more prone to overfitting.

4:22

One disadvantage, often not a major one but something to think about, is that if you have a large number of neurons in your network, then it can be more computationally expensive.

4:33

Although within reason, this is often hopefully not a huge problem.

4:36

The main potential problem of these much larger neural networks is that they could be more prone to overfitting. And it turns out that if you're applying neural networks, very often using a large neural network is better; often it's actually the larger, the better,

4:50

but if it's overfitting, you can then use regularization to address overfitting. Usually, using a larger neural network and using regularization to address overfitting is more effective than using a smaller neural network. And the main possible disadvantage is that it can be more computationally expensive.

5:10

And finally, one of the other decisions is, say, the number of hidden layers you want to have, right? So, do you want one hidden layer or do you want three hidden layers, as we've shown here, or do you want two hidden layers?

5:23

And usually, as I think I said in the previous video, using a single hidden layer is a reasonable default. But if you want to choose the number of hidden layers, one other thing you can try is to find yourself a training, cross-validation, and test set split, and try training neural networks with one hidden layer or two hidden layers or three hidden layers, and see which of those neural networks performs best on the cross-validation set. You take your three neural networks with one, two and three hidden layers, compute the cross-validation error Jcv on all of them, and use that to select which of these you think is the best neural network.

6:02

So, that's it for bias and variance and ways, like learning curves, to try to diagnose these problems, as well as what that implies for what might be fruitful or not fruitful things to try to improve the performance of a learning algorithm.

6:16

If you understood the contents of the last few videos and if you apply them, you'll actually be much more effective already at getting learning algorithms to work on problems than even a large fraction, maybe the majority, of practitioners of machine learning here in Silicon Valley today doing these things as their full-time jobs.

6:35

So I hope that these pieces of advice on bias, variance, and diagnostics

6:42

will help you to much more effectively and powerfully apply learning algorithms and get them to work very well.

Reading: Deciding What to do Next Revisited

Deciding What to Do Next Revisited

Our decision process can be broken down as follows:

- Getting more training examples: Fixes high variance

- Trying smaller sets of features: Fixes high variance

- Adding features: Fixes high bias

- Adding polynomial features: Fixes high bias

- Decreasing λ: Fixes high bias

- Increasing λ: Fixes high variance
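
The list above can be read as a lookup from diagnosis to candidate fixes. Here is a minimal sketch of that idea in Python. The function name `diagnose`, the `target_error` threshold, and the `gap_ratio` rule are assumptions made for illustration; the course does not give numeric cutoffs, and a model can suffer from both problems at once (this sketch checks the train/cross-validation gap first).

```python
from typing import Dict, List


def diagnose(j_train: float, j_cv: float, target_error: float,
             gap_ratio: float = 1.5) -> str:
    """Label a model "high variance", "high bias", or "ok".

    target_error and gap_ratio are illustrative thresholds (assumptions),
    not values taken from the course.
    """
    # A cross-validation error far above the training error suggests overfitting.
    if j_cv > gap_ratio * j_train:
        return "high variance"
    # Training error itself is too high, and CV error is close to it: underfitting.
    if j_train > target_error:
        return "high bias"
    return "ok"


# Candidate fixes, copied from the list above.
FIXES: Dict[str, List[str]] = {
    "high variance": ["get more training examples",
                      "try a smaller set of features",
                      "increase lambda"],
    "high bias": ["add features",
                  "add polynomial features",
                  "decrease lambda"],
    "ok": [],
}

if __name__ == "__main__":
    label = diagnose(j_train=0.02, j_cv=0.30, target_error=0.10)
    print(label, "->", FIXES[label])
```

In practice the thresholds would come from looking at learning curves rather than from fixed numbers.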

Diagnosing Neural Networks

- A neural network with fewer parameters is prone to underfitting. It is also computationally cheaper.

- A large neural network with more parameters is prone to overfitting. It is also computationally expensive. In this case you can use regularization (increase λ) to address the overfitting.

Using a single hidden layer is a good starting default. You can train neural networks with different numbers of hidden layers on your training set, compute the cross-validation error of each on your cross validation set, and then select the one that performs best.
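
The selection step can be sketched as follows. This is only an illustration under stated assumptions: each trained network is represented by a plain Python callable (training itself is not shown), the squared-error form of the cross-validation error is assumed, and the names `cv_error` and `select_num_hidden_layers` are hypothetical.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# A trained model, represented here simply as a function from features to a prediction.
Predictor = Callable[[Sequence[float]], float]


def cv_error(predict: Predictor, X_cv: List[Sequence[float]],
             y_cv: List[float]) -> float:
    """Squared-error cross-validation cost: J_cv = 1/(2m) * sum((h(x) - y)^2)."""
    m = len(y_cv)
    return sum((predict(x) - y) ** 2 for x, y in zip(X_cv, y_cv)) / (2 * m)


def select_num_hidden_layers(candidates: Dict[int, Predictor],
                             X_cv: List[Sequence[float]],
                             y_cv: List[float]) -> Tuple[int, Dict[int, float]]:
    """Return the number of hidden layers with the lowest J_cv, plus all errors."""
    errors = {layers: cv_error(model, X_cv, y_cv)
              for layers, model in candidates.items()}
    best = min(errors, key=lambda layers: errors[layers])
    return best, errors


if __name__ == "__main__":
    # Stand-in predictors, NOT real networks; keys are numbers of hidden layers.
    stand_ins: Dict[int, Predictor] = {
        1: lambda x: 0.0,
        2: lambda x: x[0],
        3: lambda x: x[0] + 0.5,
    }
    best, errors = select_num_hidden_layers(stand_ins, [[1.0], [2.0]], [1.0, 2.0])
    print(best, errors)
```

Because the cross-validation set was used to make the choice, the error of the selected network should then be reported on the separate test set.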

Model Complexity Effects:

- Lower-order polynomials (low model complexity) have high bias and low variance. In this case, the model fits poorly consistently.

- Higher-order polynomials (high model complexity) fit the training data extremely well and the test data extremely poorly. These have low bias on the training data, but very high variance.

- In reality, we would want to choose a model somewhere in between, that can generalize well but also fits the data reasonably well.

Reading: Lecture Slides

Programming: Regularized Linear Regression and Bias/Variance

Download the programming assignment here. This ZIP file contains the instructions in a PDF and the starter code. You may use either MATLAB or Octave (>= 3.8.0).

Machine Learning System Design

To optimize a machine learning algorithm, you’ll need to first understand where the biggest improvements can be made. In this module, we discuss how to understand the performance of a machine learning system with multiple parts, and also how to deal with skewed data.

5 videos, 3 readings

Video: Prioritizing What to Work On

In the next few videos I'd like to talk about machine learning system design.

0:05

These videos will touch on the main issues that you may face when designing a complex machine learning system,

0:12

and will actually try to give advice on how to strategize putting together a complex machine learning system.

0:18

In case this next set of videos seems a little disjointed, that's because these videos will touch on a range of different issues that you may come across when designing complex learning systems.

0:29

And even though the next set of videos may seem somewhat less mathematical, I think that this material may turn out to be very useful, and potentially huge time savers when you're building big machine learning systems.

0:42

Concretely, I'd like to begin with the issue of prioritizing how to spend your time on what to work on, and I'll begin with an example on spam classification.

0:55

Let's say you want to build a spam classifier.

0:58

Here are a couple of examples of obvious spam and non-spam emails.

1:03

The one on the left tries to sell things. And notice how spammers will deliberately misspell words, like medicine with a 1 there, and mortgages.

1:14

And on the right is maybe an obvious example of non-spam email, actually an email from my younger brother.

1:21

Let's say we have a labeled training set of some number of spam emails and some non-spam emails denoted with labels y equals 1 or 0, how do we build a classifier using supervised learning to distinguish between spam and non-spam?

1:38

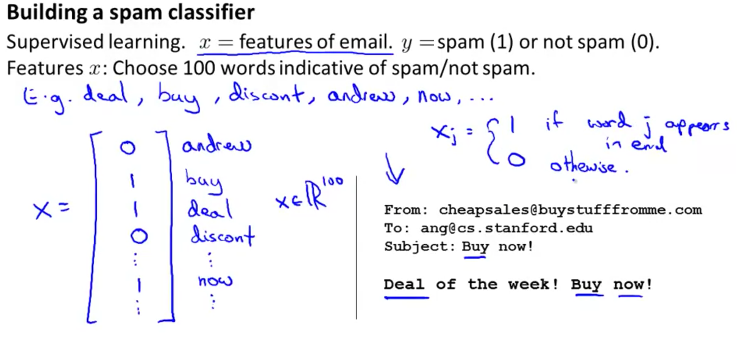

In order to apply supervised learning, the first decision we must make is how do we want to represent x, that is the features of the email. Given the features x and the labels y in our training set, we can then train a classifier, for example using logistic regression.

1:56

Here's one way to choose a set of features for our emails.

2:00

We could come up with, say, a list of maybe a hundred words that we think are indicative of whether an email is spam or non-spam. For example, if a piece of email contains the word 'deal', maybe it's more likely to be spam; if it contains the word 'buy', maybe it's more likely to be spam; a word like 'discount' is more likely to be spam; whereas if a piece of email contains my name,

2:23

Andrew, maybe that means the person actually knows who I am and that might mean it's less likely to be spam.

2:31

And maybe for some reason I think the word "now" may be indicative of non-spam because I get a lot of urgent emails, and so on, and maybe we choose a hundred words or so.

2:42

Given a piece of email, we can then take this piece of email and encode it into a feature vector as follows. I'm going to take my list of a hundred words and sort them in alphabetical order, say. It doesn't have to be sorted. But, you know, here's my list of words, discount and so on, until eventually I'll get down to now, and so on. And given a piece of email like that shown on the right, I'm going to check and see whether or not each of these words appears in the email, and then I'm going to define a feature vector x where, in this piece of email on the right, my name doesn't appear so I'm gonna put a zero there. The word "buy" does appear,

3:26

so I'm gonna put a one there, and I'm just gonna put ones or zeroes. I'm gonna put a one even though the word "buy" occurs twice. I'm not gonna count how many times the word occurs.

3:37

The word "deal" appears, I put a one there. The word "discount" doesn't appear, at least not in this little short email, and so on. The word "now" does appear, and so on. So I put ones and zeroes in this feature vector depending on whether or not a particular word appears. And in this example my feature vector would have dimension one hundred,

4:02

if I chose a hundred words to use for this representation, and each of my features Xj will basically be 1 if

4:16

you have a particular word that, we'll call this word j, appears in the email and Xj

4:22

would be zero otherwise.
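
As a small illustration of this encoding, here is a sketch in Python. The function name `encode_email`, the regular-expression tokenizer, the lowercasing, and the sample email text are all assumptions for the example, and a five-word list stands in for the hundred words.

```python
import re
from typing import List


def encode_email(text: str, vocabulary: List[str]) -> List[int]:
    """Return x where x[j] = 1 if vocabulary[j] appears in the email, else 0."""
    # Lowercase and split into word-like tokens; a set ignores repeat occurrences.
    words_in_email = set(re.findall(r"[a-z0-9']+", text.lower()))
    return [1 if word in words_in_email else 0 for word in vocabulary]


# A short stand-in for the list of a hundred words, sorted alphabetically.
vocabulary = sorted(["andrew", "buy", "deal", "discount", "now"])

# Hypothetical email text; "buy" occurs twice but still yields a single 1.
x = encode_email("Deal of the week! Buy now! Buy today!", vocabulary)
print(vocabulary)  # ['andrew', 'buy', 'deal', 'discount', 'now']
print(x)           # [0, 1, 1, 0, 1]
```

Storing only presence or absence, rather than counts, matches the description above; counting occurrences would be a different feature design.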

4:25

Okay. So that gives me a feature representation of a piece of email. By the way, even though I've described this process as manually picking a hundred words, in practice what's most commonly done is to look through a training set, and in the training set pick the most frequently occurring n words, where n is usually between ten thousand and fifty thousand, and use those as your features. So rather than manually picking a hundred words, here you look through the training examples and pick the most frequently occurring words, like ten thousand to fifty thousand words, and those form the features that you are going to use to represent your email for spam classification.
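
Picking the n most frequent words from a training set might look something like the sketch below. The function name `build_vocabulary` and the simple tokenizer are assumptions for illustration, and the three sample emails are made up; a real training set would use n in the tens of thousands.

```python
import re
from collections import Counter
from typing import Iterable, List


def build_vocabulary(emails: Iterable[str], n: int) -> List[str]:
    """Return the n most frequently occurring words across all training emails."""
    counts: Counter = Counter()
    for text in emails:
        # Lowercase and split into word-like tokens, then tally every occurrence.
        counts.update(re.findall(r"[a-z0-9']+", text.lower()))
    return [word for word, _ in counts.most_common(n)]


if __name__ == "__main__":
    training_emails = ["Buy now, buy today", "Deal of the week", "Buy this deal now"]
    print(build_vocabulary(training_emails, n=3))
```

The resulting word list then plays the role of the hand-picked list when encoding each email as a vector of ones and zeroes.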

5:05

Now, if you're building a spam classifier, one question that you may face is: what's the best use of your time in order to make your spam classifier have higher accuracy, that is, have lower error?

5:18

One natural inclination is to go and collect lots of data. Right? And in fact there's this tendency to think that, well, the more data we have the better the algorithm will do. And in fact, in the email spam domain, there are actually pretty serious projects called Honey Pot Projects, which create fake email addresses and try to get these fake email addresses into the hands of spammers and use that to try to collect tons of spam email, and therefore, you know, get a lot of spam data to train learning algorithms. But we've already seen in the previous sets of videos that getting lots of data will often help, but not all the time.

5:54

But for most machine learning problems, there are a lot of other things you could usually imagine doing to improve performance.

6:00

For spam, one thing you might think of is to develop more sophisticated features on the email, maybe based on the email routing information.

6:09

And this would be information contained in the email header.

6:13

So, when spammers send email, very often they will try to obscure the origins of the email, and maybe use fake email headers.

6:22

Or send email through very unusual sets of computer servers, through very unusual routes, in order to get the spam to you. And some of this information will be reflected in the email header.
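
To make the idea of header-based features a bit more concrete, here is a minimal sketch using Python's standard `email` package. The two features chosen (number of `Received` hops, and whether the `From` domain differs from the `Return-Path` domain) and the function name `header_features` are assumptions for illustration; the lecture does not say which header features work well.

```python
from email import message_from_string
from email.utils import parseaddr
from typing import Dict


def header_features(raw_email: str) -> Dict[str, int]:
    """Extract two illustrative routing features from a raw email's headers."""
    msg = message_from_string(raw_email)

    # Each mail server that relays the message normally adds a Received header.
    received = msg.get_all("Received") or []

    # Compare the domain shown to the reader with the bounce-address domain.
    _, from_addr = parseaddr(msg.get("From", ""))
    _, return_addr = parseaddr(msg.get("Return-Path", ""))
    from_domain = from_addr.rpartition("@")[2].lower()
    return_domain = return_addr.rpartition("@")[2].lower()
    mismatch = bool(from_domain) and bool(return_domain) and from_domain != return_domain

    return {
        "num_received_hops": len(received),
        "from_return_path_mismatch": int(mismatch),
    }
```

Features like these would simply be appended to the word-based feature vector before training the classifier.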

6:35

And so one can imagine,

6:38

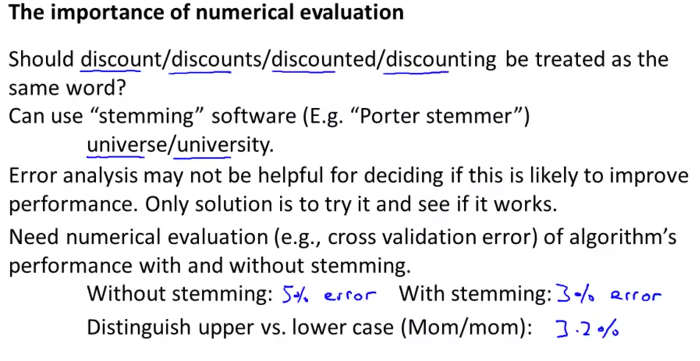

looking at the email headers and trying to develop more sophisticated features to capture this sort of email routing information to identify if something is spam. Something else you might consider doing is to look at the email message body, that is the email text, and try to develop more sophisticated features. For example, should the word 'discount' and the word 'discounts' be treated as the same word? Or should we treat the words 'deal' and 'dealer' as the same word, maybe even though one is lower case and one is capitalized in this example? Or do we want more complex features about punctuation, because maybe spam

7:12

is using exclamation marks a lot more. I don't know. And along the same lines, maybe we also want to develop more sophisticated algorithms to detect and maybe to correct deliberate misspellings, like mortgage, medicine, watches.

7:25

Because spammers actually do this, because if you have watches

7:29

with a 4 in there then well, with the simple technique that we talked about just now, the spam classifier might not equate this as the same thing as the word "watches," and so it may have a harder time realizing that something is spam with these deliberate misspellings. And this is why spammers do it.
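
One very simple way to undo this kind of misspelling is to map look-alike digits back to letters before looking words up. The sketch below is an assumption-laden illustration, not the method used in the lecture: the substitution table `LOOKALIKES` and the function name `normalize_token` are hypothetical, and the table covers only a few digit-for-letter swaps.

```python
# Hypothetical table of digit-for-letter swaps; real spam uses many more tricks.
LOOKALIKES = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a"})


def normalize_token(token: str) -> str:
    """Lowercase a token and undo digit-for-letter swaps in mixed tokens."""
    lowered = token.lower()
    # Leave tokens with no letters at all (for example "2024") unchanged.
    if not any(ch.isalpha() for ch in lowered):
        return lowered
    return lowered.translate(LOOKALIKES)


if __name__ == "__main__":
    for word in ["w4tches", "M0rtgage", "med1cine", "2024"]:
        print(word, "->", normalize_token(word))
```

A rule this blunt also rewrites legitimate mixed tokens (for example "mp3" becomes "mpe"), so whether it helps is exactly the kind of question that should be checked on data rather than assumed.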

7:48

While working on a machine learning problem, very often you can brainstorm lists of different things to try, like these. By the way, I've actually worked on the spam problem myself for a while. And I actually spent quite some time on it. And even though I kind of understand the spam problem, I actually know a bit about it, I would actually have a very hard time telling you which of these four options is the best use of your time. So frankly, what happens far too often is that a research group or product group will randomly fixate on one of these options. And sometimes that turns out not to be the most fruitful way to spend your time, depending, you know, on which of these options someone ends up randomly fixating on. By the way, in fact, if you even get to the stage where you brainstorm a list of different options to try, you're probably already ahead of the curve. Sadly, instead of trying to list out the options of things you might try, what far too many people do is wake up one morning and, for some reason, just, you know, have a weird gut feeling that, "Oh, let's have a huge honeypot project to go and collect tons more data," and for whatever strange reason just sort of wake up one morning and randomly fixate on one thing and just work on that for six months.

9:03

But I think we can do better. And in particular what I'd like to do in the next video is tell you about the concept of error analysis

9:11

and talk about how you can have a more systematic way to choose amongst the many different things you might work on, and therefore be more likely to select what is actually a good way to spend your time, you know, for the next few days, or the next few weeks, or the next few months.

Reading: Prioritizing What to Work On

Prioritizing What to Work On

System Design Example:

Given a data set of emails, we could construct a vector for each email. Each entry in this vector represents a word. The vector normally contains 10,000 to 50,000 entries gathered by finding the most frequently used words in our data set. If a word is found in the email, we assign its respective entry a 1; if it is not found, that entry is a 0. Once we have all our x vectors ready, we train our algorithm, and finally we can use it to classify whether an email is spam or not.

So how could you spend your time to improve the accuracy of this classifier?

- Collect lots of data (for example "honeypot" project but doesn't always work)