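Today I tried out Core ML. This demo opens the camera, and when you point it at an object it recognizes the object in the frame and shows an approximate confidence percentage.

The full code is as follows: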

@IBAction func startClick(_ sender: Any) {

startFlag = !startFlag

if startFlag {

startCaptureVideo()

startButton.setTitle("stop", for: .normal)

}else {

startButton.setTitle("start", for: .normal)

stop()

}

}

// 1. Initialize the AVCaptureSession

lazy var captureSession:AVCaptureSession? = {

// 1.1 Create the session

let captureSession = AVCaptureSession()

captureSession.sessionPreset = .photo

// 1.2 Get the default video device

guard let captureDevice = AVCaptureDevice.default(for: .video) else {

return nil

}

guard let captureDeviceInput = try? AVCaptureDeviceInput(device: captureDevice) else {

return nil

}

captureSession.addInput(captureDeviceInput)

let previewLayer = AVCaptureVideoPreviewLayer(session: captureSession)

view.layer.addSublayer(previewLayer)

previewLayer.frame = view.bounds

let dataOutput = AVCaptureVideoDataOutput()

dataOutput.setSampleBufferDelegate(self, queue: DispatchQueue(label: "kingboVision"))

captureSession.addOutput(dataOutput)

return captureSession

}()

func startCaptureVideo(){

captureSession?.startRunning()

}

func stop() {

captureSession?.stopRunning()

}

func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {

print("Camera was safe ")

guard let pixelBuffer:CVPixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else

{

print("nil object ")

return

}

guard let model = try? VNCoreMLModel(for: SqueezeNet().model) else {return}

let request = VNCoreMLRequest(model: model) { [unowned self](finishRequest, error) in

// Handle the classification results

guard let results = finishRequest.results as? [VNClassificationObservation] else {

return

}

guard let first = results.first else {

return

}

DispatchQueue.main.async {

self.descriptionLabel.text = "\(first.identifier): \(first.confidence * 100)%,"

}

}

print("start to VN")

try? VNImageRequestHandler(cvPixelBuffer: pixelBuffer, options: [:]).perform([request])

}
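The label text above shows the raw `Float` product, which can run to many decimal places. A minimal sketch of a formatting helper follows; the function name `formatPrediction` is hypothetical and not part of the original code.

```swift
import Foundation

// Hypothetical helper: turns a class label and a 0...1 confidence
// into a short string with one decimal place.
func formatPrediction(identifier: String, confidence: Float) -> String {
    let percent = Double(confidence) * 100
    return "\(identifier): \(String(format: "%.1f", percent))%"
}
```

Inside the completion handler it could be used as `self.descriptionLabel.text = formatPrediction(identifier: first.identifier, confidence: first.confidence)`.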

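The work breaks down into the following steps.

1. Add the permission descriptions to the project's Info.plist file

Privacy - Camera Usage Description: camera permission

Privacy - Microphone Usage Description: microphone permission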
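The Info.plist entry only supplies the text for the system prompt; the app still has to check or request access at runtime. A rough sketch of that check, assuming a hypothetical helper named `withCameraAccess` (not in the original code):

```swift
import AVFoundation

// Hypothetical helper: runs `onGranted` on the main queue once camera access is available.
func withCameraAccess(_ onGranted: @escaping () -> Void) {
    switch AVCaptureDevice.authorizationStatus(for: .video) {
    case .authorized:
        DispatchQueue.main.async(execute: onGranted)
    case .notDetermined:
        // Shows the system prompt, which displays the Info.plist description.
        AVCaptureDevice.requestAccess(for: .video) { granted in
            if granted { DispatchQueue.main.async(execute: onGranted) }
        }
    default:
        // .denied or .restricted: the user has to change this in Settings.
        print("Camera access is not available")
    }
}
```

In this demo, `startClick` could wrap its call as `withCameraAccess { self.startCaptureVideo() }`.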

2. Import the frameworks

import AVKit

import Vision

3. Prepare the model

I used the SqueezeNet model from Apple's website.

4. Drag the model file into the project

5. Write the code

5.1 Initialize the AVCaptureSession

let captureSession = AVCaptureSession()

captureSession.sessionPreset = .photo
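`startRunning()` is a blocking call, so it is usually kept off the main thread. The following is only a sketch of one way to do that; the class name `SessionRunner` and the queue label are assumptions made for illustration.

```swift
import AVFoundation

// Hypothetical wrapper: keeps all session start/stop calls on one serial queue.
final class SessionRunner {
    let session = AVCaptureSession()
    private let queue = DispatchQueue(label: "session.runner") // assumed label

    func start() {
        queue.async { [session] in
            if !session.isRunning { session.startRunning() }
        }
    }

    func stop() {
        queue.async { [session] in
            if session.isRunning { session.stopRunning() }
        }
    }
}
```

Because both calls go through the same serial queue, a quick start/stop tap sequence is executed in order without blocking the UI.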

5.2 Get the iPhone's capture device and use it as the input source

guard let captureDevice = AVCaptureDevice.default(for: .video) else {

return nil

}

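guard let captureDeviceInput = try? AVCaptureDeviceInput(device: captureDevice) else {

return nil

}

captureSession.addInput(captureDeviceInput)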
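`addInput` raises an exception if the session cannot accept the input, so a `canAddInput` check is a cheap safeguard. A sketch under that assumption, with a hypothetical helper name `addBackCamera` and an explicit choice of the back wide-angle camera instead of the default device:

```swift
import AVFoundation

// Hypothetical helper: adds the back wide-angle camera to `session` if possible.
// Returns false when no such camera exists (for example in the Simulator)
// or when the session rejects the input.
func addBackCamera(to session: AVCaptureSession) -> Bool {
    guard let camera = AVCaptureDevice.default(.builtInWideAngleCamera, for: .video, position: .back),
          let input = try? AVCaptureDeviceInput(device: camera),
          session.canAddInput(input) else {
        return false
    }
    session.addInput(input)
    return true
}
```

The same pattern applies to outputs through `canAddOutput(_:)`.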

5.3 Configure the output

// Set the object that handles the output data

let dataOutput = AVCaptureVideoDataOutput()

dataOutput.setSampleBufferDelegate(self, queue: DispatchQueue(label: "kingboVision"))

captureSession.addOutput(dataOutput)

AVCaptureVideoDataOutput delivers each video frame to its delegate, so the current ViewController has to conform to AVCaptureVideoDataOutputSampleBufferDelegate, as follows:

class ViewController: UIViewController ,AVCaptureVideoDataOutputSampleBufferDelegate{

......

func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {

// Convert the video sample into a CVPixelBuffer

guard let pixelBuffer:CVPixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else

{

print("nil object ")

return

}

// SqueezeNet is the model I downloaded directly from Apple

guard let model = try? VNCoreMLModel(for: SqueezeNet().model) else {return}

let request = VNCoreMLRequest(model: model) { [unowned self](finishRequest, error) in

// Handle the classification results and run the app logic

guard let results = finishRequest.results as? [VNClassificationObservation] else {

return

}

guard let first = results.first else {

return

}

DispatchQueue.main.async {

self.descriptionLabel.text = "\(first.identifier): \(first.confidence * 100)%,"

}

}

print("start to VN")

try? VNImageRequestHandler(cvPixelBuffer: pixelBuffer, options: [:]).perform([request])

}

.....

}
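The delegate above builds a new `VNCoreMLModel` and `VNCoreMLRequest` for every frame. One possible alternative is to build them once and reuse them. The sketch below assumes a hypothetical class `FrameClassifier` and a callback parameter `onResult`; neither appears in the original code.

```swift
import CoreML
import Vision

// Hypothetical wrapper: creates the Vision model and request once,
// then reuses the same request for every frame.
final class FrameClassifier {
    private let request: VNCoreMLRequest

    init(model: MLModel, onResult: @escaping (String, Float) -> Void) throws {
        let visionModel = try VNCoreMLModel(for: model)
        request = VNCoreMLRequest(model: visionModel) { finished, _ in
            // Report only the first classification, as the delegate above does.
            guard let best = (finished.results as? [VNClassificationObservation])?.first else { return }
            onResult(best.identifier, best.confidence)
        }
        request.imageCropAndScaleOption = .centerCrop
    }

    // Call this from captureOutput(_:didOutput:from:) with each frame's pixel buffer.
    func classify(_ pixelBuffer: CVPixelBuffer) throws {
        try VNImageRequestHandler(cvPixelBuffer: pixelBuffer, options: [:]).perform([request])
    }
}
```

Assuming Xcode generated a `SqueezeNet` class for the model file, the classifier could be created once with `try FrameClassifier(model: SqueezeNet().model) { identifier, confidence in ... }`, with the label update inside the closure still dispatched to the main queue.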

5.4 Show the camera preview on a view (here we use the current view controller's view)

let previewLayer = AVCaptureVideoPreviewLayer(session: captureSession)

view.layer.addSublayer(previewLayer)

previewLayer.frame = view.bounds
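A manually sized sublayer does not follow rotation or layout changes. A common alternative, sketched here with a hypothetical class name `PreviewView`, is a view whose backing layer is the preview layer itself:

```swift
import AVFoundation
import UIKit

// Hypothetical view whose backing layer is the preview layer,
// so Auto Layout and rotation resize the video automatically.
final class PreviewView: UIView {
    override class var layerClass: AnyClass { AVCaptureVideoPreviewLayer.self }

    var previewLayer: AVCaptureVideoPreviewLayer {
        layer as! AVCaptureVideoPreviewLayer
    }

    var session: AVCaptureSession? {
        get { previewLayer.session }
        set {
            previewLayer.session = newValue
            // Fill the view and crop the edges instead of letterboxing.
            previewLayer.videoGravity = .resizeAspectFill
        }
    }
}
```

Placing this view behind the button and label also keeps the preview from covering them, which can happen when the layer is added as the topmost sublayer.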

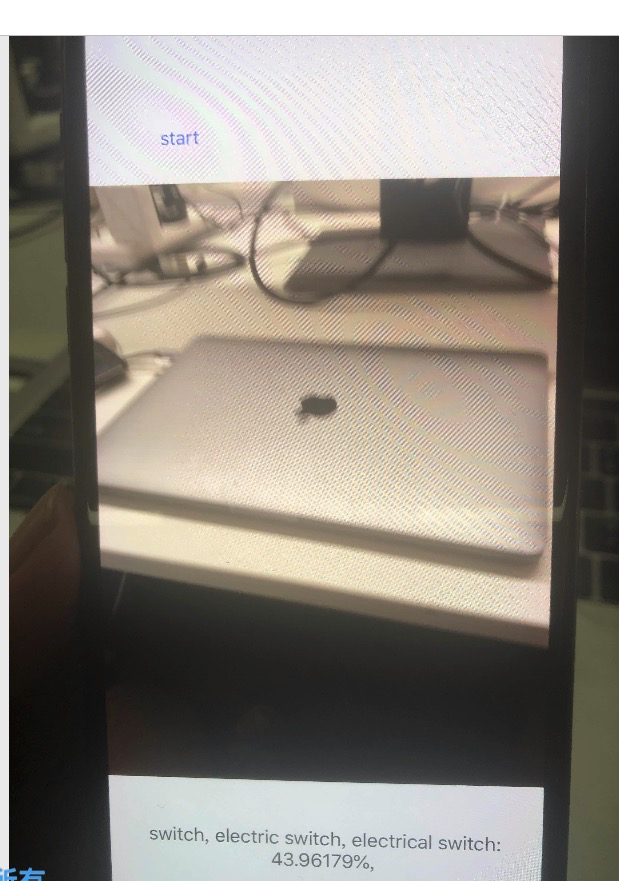

效果如下: