前提:完成hadoop + kerberos安全环境搭建。

安装配置spark client:

1. wget https://d3kbcqa49mib13.cloudfront.net/spark-2.2.0-bin-hadoop2.7.tgz

2. 配置

指定hadoop路径

vim conf/spark-env.sh HADOOP_CONF_DIR=/xxx/soft/hadoop-2.7.3/etc/hadoop

配置环境变量:

vim /etc/profile export SPARK_HOME=/xxx/soft/spark-2.2.0-bin-hadoop2.7

分配kerberos

kadmin.local addprinc -randkey sparkclient01@JENKIN.COM xst -k /var/kerberos/krb5kdc/keytab/sparkclient01.keytab sparkclient01@JENKIN.COM

将keytab分发给spark client

scp /var/kerberos/krb5kdc/keytab/sparkclient01.keytab hadoop1:/xxx/soft/spark-2.2.0-bin-hadoop2.7/

在hdfs上建立文件夹:( eventLog.dir )

hadoop fs -mkdir -p /jenkintest/tmp/spark01 hadoop fs -ls /jenkintest/tmp/

启动client:

cd ./bin ./spark-submit --class org.apache.spark.examples.SparkPi --conf spark.eventLog.dir=hdfs://jenkintest/tmp/spark01 --master yarn --deploy-mode client --driver-memory 4g --principal sparkclient01 --keytab /xxx/soft/spark-2.2.0-bin-hadoop2.7/sparkclient01.keytab --executor-memory 1g --executor-cores 1 $SPARK_HOME/examples/jars/spark-examples*.jar 10

命令解释:

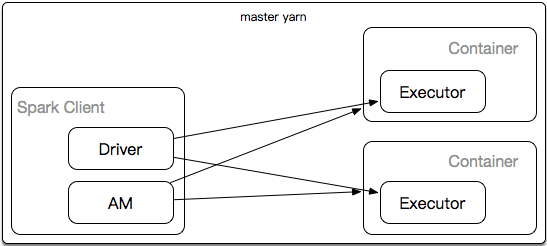

--master yarn //代表spark任务在yarn上

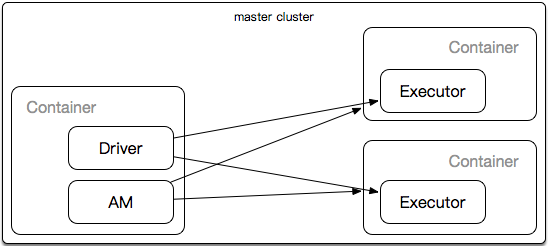

--master cluser //代表spark 在yarn集群上

AM负责在yarn上申请资源,运行在container。

spark通过Driver控制Executor。

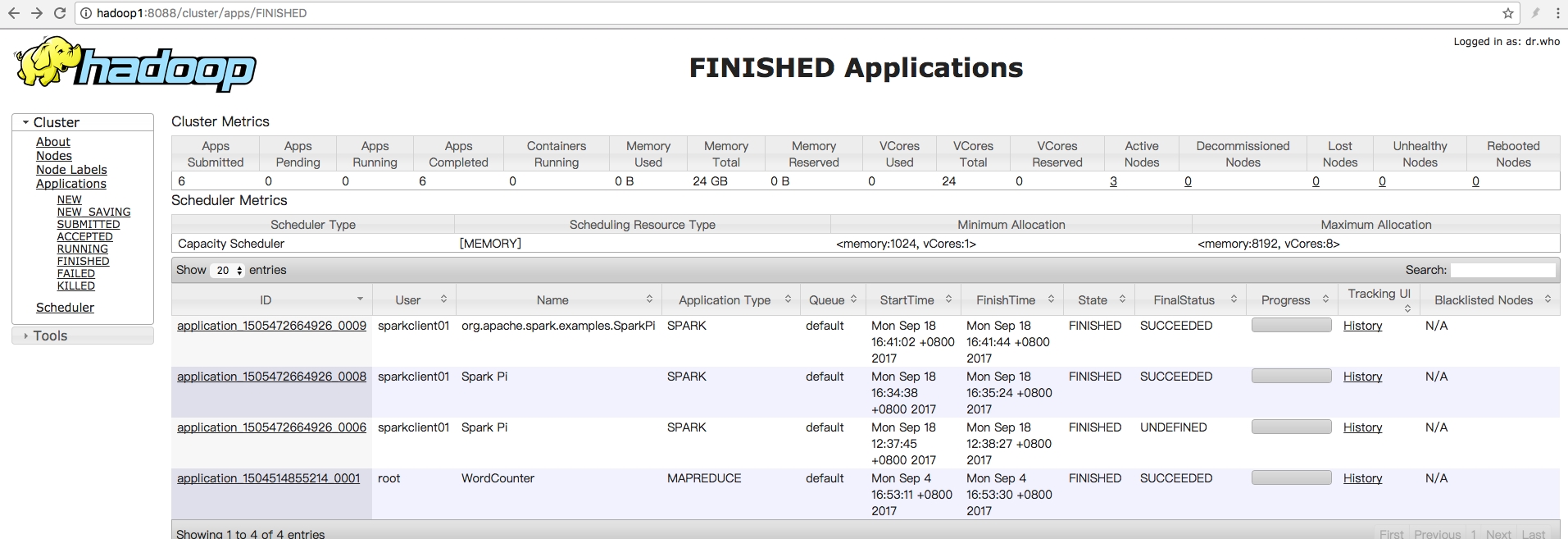

运行结果: