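Author: 果果是枚开心果

Link: https://zhuanlan.zhihu.com/p/20895858

Source: Zhihu

Copyright belongs to the author. For commercial reprints, contact the author for permission; for non-commercial reprints, cite the source.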

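Eloquent in Speech, Fluent in Writing

Selected Readings on Generative Models

Translator: 尹肖贻

Proofreader: 孙月杰

Within machine learning, generative models are among the most intricate and fast-moving topics, and they have recently drawn a great deal of attention from leading researchers. Three excerpts are translated here as material for discussion.

Many colleagues know machine learning well and work at it diligently, so this selection risks teaching experts their own trade. Where it falls short, corrections from readers are welcome.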

- The magic of generative models

[Extraction] Understanding and Implementing Deepmind's DRAW Model

By Eric Jang

…We'd like to be able to draw samples c∼P, or basically generate new pictures of houses that may not even exist in real life. However, the distribution of pixel values over these kinds of images is very complex. Sampling the correct images needs to account for many long-range spatial and semantic correlations,

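Consider this task: generating images of buildings like the one pictured. Each image c ∼ P, where P is a probability distribution over "houses", whether they exist in real life or are imagined. Determining the value of every pixel in such an image is extremely complex, however. Sampling a correct image has to respect many long-range spatial and semantic relationships,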

such as:

Adjacent pixels tend to be similar (a property of the distribution of natural images)

The top half of the image is usually sky-colored

The roof and body of the house probably have different colors

Any windows are likely to be reflecting the color of the sky

The house has to be structurally stable

The house has to be made out of materials like brick, wood, and cement rather than cotton or meat

... and so on.

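for example:

Adjacent pixels tend to be similar (a property of natural images)

The upper half of the image is usually the color of the sky

The roof and the body of the house are likely to differ in color

Windows are likely to reflect the color of the sky

The house must be structurally sound

The house is built from materials such as brick, wood, and cement, not cotton or meat

... and so on.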

We need to specify these relationships via equations and code, even if implicitly rather than analytically (writing down the 360,000-dimensional equations for P).

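We need to express these relationships through equations and code, even if only implicitly rather than analytically (that is, without writing down the 360,000-dimensional equations for the distribution P). [Translator's note: 360,000 = 600 × 600, the image size the author fixed earlier in the original post.]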

Trying to write down the equations that describe "house pictures" may seem insane, but in the field of Machine Learning, this is an area of intense research effort known as Generative Modeling.

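Trying to write down equations that describe "house images" looks crazy at first glance. In machine learning, the field that pursues this "crazy" goal of learning the rules that generate (or describe) images is called generative modeling.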

Formally, generative models allow us to create observation data out of thin air by sampling from the joint distribution over observation data and class labels. That is, when you sample from a generative distribution, you get back a tuple consisting of an image and a class label.

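Formally, a generative model lets us create observation data out of thin air by sampling from the joint distribution over observations and class labels. In other words, sampling from a generative model's distribution returns a tuple made of an image and its label.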

This is in contrast to discriminative models, which can only sample from the distribution of class labels, conditioned on observations (you need to supply it with the image for it to tell you what it is). Generative models are much harder to create than discriminative models.

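This contrasts with discriminative models, which sample only from the distribution of class labels conditioned on an observed image (that is, you must hand the algorithm the image before it can tell you what the image shows). The comparison makes it clear that building a generative model is much harder than building a discriminative one.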
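The contrast between the two kinds of sampling can be made concrete with a toy example. The sketch below is only an illustration: the two "images", the two labels, and the probabilities in `JOINT` are invented for this purpose and do not come from the excerpt. The generative sampler returns an (image, label) tuple from the joint distribution, while the discriminative sampler returns a label only and has to be given the image.

```python
import random

# Hypothetical toy joint distribution P(image, label); all values are made up.
JOINT = {
    ("bright_top", "house"): 0.4,
    ("bright_top", "cat"): 0.1,
    ("dark_top", "house"): 0.1,
    ("dark_top", "cat"): 0.4,
}


def sample_generative(rng):
    """Draw an (image, label) tuple from the joint distribution."""
    pairs = list(JOINT)
    weights = [JOINT[p] for p in pairs]
    return rng.choices(pairs, weights=weights, k=1)[0]


def label_posterior(image):
    """Return P(label | image) by normalizing the joint over labels."""
    row = {lab: p for (img, lab), p in JOINT.items() if img == image}
    total = sum(row.values())
    return {lab: p / total for lab, p in row.items()}


def sample_discriminative(image, rng):
    """Draw a label only; the caller must supply the image."""
    post = label_posterior(image)
    labels = list(post)
    weights = [post[lab] for lab in labels]
    return rng.choices(labels, weights=weights, k=1)[0]


rng = random.Random(0)
print(sample_generative(rng))                    # an (image, label) tuple
print(sample_discriminative("bright_top", rng))  # a label only
```

In this toy setting the discriminative sampler is derived from the joint table for convenience; a real discriminative model would learn the conditional distribution directly and could not produce images at all.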

There have been some awesome papers in the last couple of years that have improved generative modeling performance by combining them with Deep Learning techniques. Many researchers believe that breakthroughs in generative models are key to solving "unsupervised learning", and maybe even general AI, so this stuff gets a lot of attention.

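In the last few years, deep learning techniques have advanced generative modeling. Many researchers believe that breakthroughs in generative models are the key to solving "unsupervised learning", and perhaps even general AI, which is why generative models attract so much attention.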

- Generative and discriminative models: what is each of them?

[Extraction] On Discriminative vs. Generative classifiers: A comparison of logistic regression and naïve Bayes

By Andrew Y. Ng, Michael I. Jordan

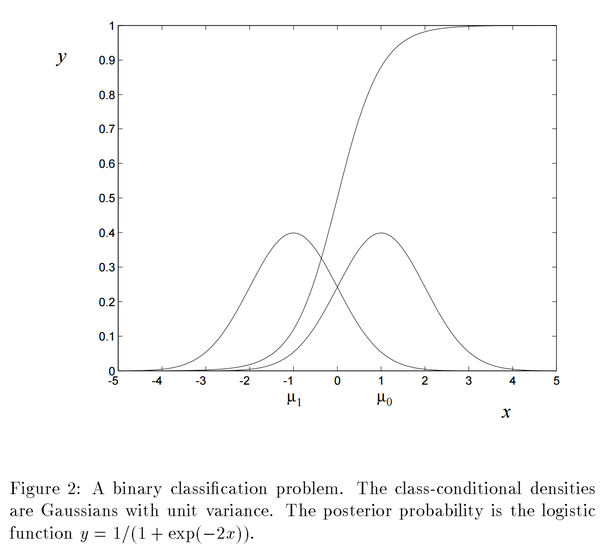

Generative classifiers learn a model of the joint probability, p(x, y), of the inputs x and the label y, and make their predictions by using Bayes rules to calculate p(y|x), and then picking the most likely label y.

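A generative classifier learns the joint distribution p(x, y) of the input x and the label y, uses Bayes' rule to compute p(y|x), and then picks the most likely label y.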

Discriminative classifiers model the posterior p(y|x) directly, or learn a direct map from inputs x to the class labels.

A discriminative classifier models the posterior p(y|x) directly, or learns a direct mapping from the input x to its class label.
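The Bayes-rule step used by the generative classifier can be written out explicitly. The numbers in the second line are an invented two-class example, included only to show the arithmetic:

$$
p(y \mid x) = \frac{p(x, y)}{\sum_{y'} p(x, y')} = \frac{p(x \mid y)\, p(y)}{\sum_{y'} p(x \mid y')\, p(y')},
\qquad
\hat{y} = \arg\max_{y}\, p(y \mid x)
$$

$$
p(x, y{=}1) = 0.3,\quad p(x, y{=}0) = 0.1
\;\;\Longrightarrow\;\;
p(y{=}1 \mid x) = \frac{0.3}{0.3 + 0.1} = 0.75,
\quad \hat{y} = 1
$$

A discriminative classifier skips the joint p(x, y) and fits the left-hand side p(y|x) directly.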

There are several compelling reasons for using discriminative rather than generative classifiers, one of which, succinctly articulated by Vapnik [6], is that "one should solve the [classification] problem directly and never solve a more general problem as an intermediate step [such as modeling p(x|y)]."

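There are several compelling reasons to prefer discriminative classifiers over generative ones. One of them, stated succinctly by Vapnik, is: "one should solve the [classification] problem directly, rather than first solving a more general problem as an intermediate step [such as modeling p(x|y)]."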

Indeed, leaving aside computational issues and matters such as handling missing data, the prevailing consensus seems to be that discriminative classifiers are almost always to be preferred to generative ones.

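Indeed, leaving aside computational issues and matters such as handling missing data, the prevailing consensus seems to be that discriminative classifiers are almost always preferable to generative ones.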

Another piece of prevailing folk wisdom is that the number of examples needed to fit a model is often roughly linear in the number of free parameters of a model. This has its theoretical basis in the observation that for "many" models, the VC dimension is roughly linear or at most some low-order polynomial in the number of parameters, and it is known that sample complexity in the discriminative setting is linear in the VC dimension.

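Another piece of common "folk wisdom" holds that the number of examples needed to fit a model is roughly linear in the number of the model's free parameters. This has a theoretical basis: for many models the VC dimension is roughly linear, or at most a low-order polynomial, in the number of parameters, and sample complexity in the discriminative setting is known to be linear in the VC dimension.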

We consider the naive Bayes model (for both discrete and continuous inputs) and its discriminative analog, logistic regression/linear classification, and show: (a) The generative model does indeed have a higher asymptotic error (as the number of training examples becomes large) than the discriminative model, but (b) The generative model may also approach its asymptotic error much faster than the discriminative model, possibly with a number of training examples that is only logarithmic, rather than linear, in the number of parameters. This suggests (and our empirical results strongly support) that, as the number of training examples is increased, there can be two distinct regimes of performance, the first in which the generative model has already approached its asymptotic error and is thus doing better, and the second in which the discriminative model approaches its lower asymptotic error and does better.

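We study the naive Bayes model (for both discrete and continuous inputs) and its discriminative counterpart, logistic regression/linear classification, and reach the following conclusions: (a) the generative model does have a higher asymptotic error (as the number of training examples grows) than the discriminative model, but (b) the generative model may approach its asymptotic error much faster, possibly with a number of training examples that is only logarithmic, rather than linear, in the number of parameters. This suggests, and our empirical results strongly support, that as the training set grows there are two distinct regimes of performance: in the first, the generative model has already approached its asymptotic error and therefore does better; in the second, the discriminative model approaches its lower asymptotic error and overtakes it.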
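A comparison of this kind can be tried on synthetic data. The sketch below is not the paper's experiment: the data generator, the feature count, the learning rate, and the number of epochs are all assumptions made for illustration. The synthetic features are conditionally independent given the label, so the naive Bayes assumption holds exactly and both classifiers share the same asymptotic error here. The code therefore only shows how each error depends on the training set size, and whether naive Bayes leads at small sizes will vary with the seed.

```python
import math
import random


def make_data(n, d, rng):
    """Hypothetical task: d features in {-1, +1}, each weakly tied to the label."""
    data = []
    for _ in range(n):
        y = rng.randint(0, 1)
        p = 0.65 if y == 1 else 0.35  # P(x_j = +1 | y), an assumed value
        x = [1 if rng.random() < p else -1 for _ in range(d)]
        data.append((x, y))
    return data


def train_naive_bayes(data, d):
    """Generative: estimate p(y) and p(x_j = +1 | y) with Laplace smoothing."""
    count_y = [0, 0]
    count_pos = [[0] * d, [0] * d]
    for x, y in data:
        count_y[y] += 1
        for j in range(d):
            count_pos[y][j] += 1 if x[j] == 1 else 0
    prior = [(count_y[c] + 1) / (len(data) + 2) for c in (0, 1)]
    cond = [[(count_pos[c][j] + 1) / (count_y[c] + 2) for j in range(d)]
            for c in (0, 1)]

    def predict(x):
        scores = []
        for c in (0, 1):
            s = math.log(prior[c])
            for j in range(d):
                q = cond[c][j]
                s += math.log(q if x[j] == 1 else 1 - q)
            scores.append(s)
        return 1 if scores[1] > scores[0] else 0

    return predict


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1 / (1 + math.exp(-z))
    e = math.exp(z)
    return e / (1 + e)


def train_logistic_regression(data, d, epochs=100, lr=0.5):
    """Discriminative: fit p(y=1|x) = sigmoid(w.x + b) by batch gradient descent."""
    w = [0.0] * d
    b = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * d
        grad_b = 0.0
        for x, y in data:
            err = sigmoid(b + sum(wj * xj for wj, xj in zip(w, x))) - y
            grad_b += err
            for j in range(d):
                grad_w[j] += err * x[j]
        b -= lr * grad_b / n
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]

    def predict(x):
        return 1 if b + sum(wj * xj for wj, xj in zip(w, x)) > 0 else 0

    return predict


def error_rate(predict, data):
    return sum(predict(x) != y for x, y in data) / len(data)


rng = random.Random(0)
d = 20
held_out = make_data(2000, d, rng)
for m in (10, 50, 400):
    train = make_data(m, d, rng)
    nb_err = error_rate(train_naive_bayes(train, d), held_out)
    lr_err = error_rate(train_logistic_regression(train, d), held_out)
    print(m, round(nb_err, 3), round(lr_err, 3))
```

To see the second regime described in the excerpt, where logistic regression ends up with the lower error, the data would have to violate the naive Bayes independence assumption, for example by duplicating some features.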

- What would be a practical use case for Generative models?

By Yoshua Bengio

Because if you are able to generate the data generating distribution, you probably captured the underlying causal factors. Now, in principle, you are in the best possible position to answer any question about that data. That means AI.

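Because if you have captured the distribution that generates the data, you have probably uncovered the underlying causal factors behind it. In principle, you are then in the best possible position to answer any question about that data. That means AI.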

But maybe this is too abstract of an explanation. A practical use-case is for simulating possible futures when planning a decision and reasoning. As I wrote earlier, I know what to do to avoid making a car accident even though I never experienced one. I actually have zero training example of that category! Nor anything close to it (thankfully). I am able to do so only because I can generate the sequence of events and their consequence for me, if I chose to do some (fatal) action. Self-driving cars? Robots? Dialogue systems? etc.

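But that answer may be too abstract. A more practical use is simulating possible futures when planning a decision or reasoning. As I wrote earlier, I know how to avoid a car accident even though I have never been in one. I have zero training examples of that category, and (thankfully) nothing close to it either. I can do this only because I can generate the sequence of events, and their consequences for me, that would follow if I took some (fatal) action. The same applies to self-driving cars, robots, dialogue systems, and so on.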
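The idea of choosing an action by imagining its consequences can be sketched as Monte Carlo rollouts through a generative model of the situation. Everything in the example below is hypothetical: the car-following dynamics, the speeds, the 20 m gap, and the two action names are invented, and the model is hand-written rather than learned. The point is only that no real crash is ever observed; the collisions happen in sampled futures.

```python
import random


def simulate_future(action, rng, steps=10):
    """Sample one imagined future; return True if it ends in a collision.

    Hypothetical dynamics: our car follows a lead car at a 20 m gap,
    with one-second time steps.
    """
    gap = 20.0         # metres to the lead car
    own_speed = 15.0   # metres per second
    lead_speed = 15.0  # metres per second
    for _ in range(steps):
        # The lead car brakes by a random amount: the stochastic part of the model.
        lead_speed = max(0.0, lead_speed - rng.uniform(0.0, 4.0))
        if action == "brake":
            own_speed = max(0.0, own_speed - 5.0)
        gap += lead_speed - own_speed
        if gap <= 0.0:
            return True
    return False


def crash_probability(action, n_rollouts=2000, seed=0):
    """Estimate the collision probability of an action from sampled futures."""
    rng = random.Random(seed)
    crashes = sum(simulate_future(action, rng) for _ in range(n_rollouts))
    return crashes / n_rollouts


def choose_action(actions=("keep_speed", "brake")):
    """Pick the action whose imagined futures contain the fewest collisions."""
    return min(actions, key=crash_probability)


for name in ("keep_speed", "brake"):
    print(name, crash_probability(name))
print("chosen:", choose_action())
```

In a real system the simulator would be a learned generative model of the environment instead of a few hand-coded lines, but the decision rule (sample futures, score them, pick the safest action) stays the same.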

Another practical example is structured outputs, where you want to generate Y conditionally on X. If you have good algorithms for generating Y's in the first place, the conditional extension is pretty straightforward. When Y is a very high-dimensional object (image, sentence, data structure, complex set of actions, choice of a combination of drug treatments, etc.), then these techniques can be useful.

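Another practical example is structured outputs, where you want to generate Y conditioned on X. If you already have a good algorithm for generating Y, extending it to the conditional case is fairly straightforward. When Y is a very high-dimensional object (an image, a sentence, a data structure, a complex set of actions, a combination of drug treatments, and so on), these techniques can be useful.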

We are using images because they are fun and tell us a lot (humans are highly visual animals), which helps to debug and understand the limitations of these algorithms.

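We mostly work with images because they are fun and carry a lot of information (humans are highly visual animals), which helps us debug these algorithms and understand their limitations.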

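Acknowledgments:

Thanks to Dr. 刘昕 of the Institute of Computing Technology, Chinese Academy of Sciences, for helpful suggestions, and to Dr. 孙月杰, a physician, for diligent proofreading of this manuscript. My thanks also go to my advisor and to the members of my research group.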