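Preface

Requests is an HTTP library written in Python, built on top of urllib3 and released under the Apache 2.0 open-source license. It is far more convenient than urllib, saves a great deal of work, and covers everything typically needed for HTTP testing.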

I. Requests in detail

The request methods

import requests

# One function per HTTP method; httpbin.org echoes back what it receives
requests.post('http://httpbin.org/post')
requests.put('http://httpbin.org/put')
requests.delete('http://httpbin.org/delete')
requests.head('http://httpbin.org/get')
requests.options('http://httpbin.org/get')
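Each of these helpers is a thin wrapper around `requests.request(method, url, **kwargs)`. You can see what would go on the wire without touching the network by building a `requests.Request` and calling `prepare()` on it. The following is a minimal sketch that reuses the httpbin URL from above. The helper name `prepared_methods` is hypothetical.

```python
import requests

URL = "http://httpbin.org/get"

def prepared_methods(url):
    """Hypothetical helper: map each verb to the method of its prepared request.

    Request(...).prepare() builds the request without sending it,
    so no network access is needed.
    """
    verbs = ["get", "post", "put", "delete", "head", "options", "patch"]
    return {verb: requests.Request(verb, url).prepare().method for verb in verbs}

print(prepared_methods(URL))
```

The method name is upper-cased during preparation, so `requests.get(url)` is equivalent to `requests.request('GET', url)`.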

1. GET requests

import requests

response = requests.get('http://httpbin.org/get')
print(response.text)  # the response body as a string

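GET requests with parameters

Method 1

Write the parameters directly into the URL. This works, but the code is clumsy and you have to handle escaping yourself.

import requests

response = requests.get("http://httpbin.org/get?name=lc-snail&age=18")
print(response.url)
# Output: http://httpbin.org/get?name=lc-snail&age=18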
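Writing the query string by hand also means escaping every value yourself. In the sketch below, the value `Tom & Jerry` is a made-up example. Pasted into the URL by hand, its `&` splits the value apart, whereas `params` percent-encodes it. `prepare()` builds each request without sending it, and parsing the query back shows what a server would receive.

```python
import requests
from urllib.parse import parse_qs, urlsplit

BASE = "http://httpbin.org/get"
value = "Tom & Jerry"  # hypothetical value containing a reserved character

# Hand-written query string: the '&' inside the value is not escaped
by_hand = requests.Request("GET", f"{BASE}?name={value}&age=18").prepare()
# params= lets requests encode the value
by_params = requests.Request("GET", BASE, params={"name": value, "age": 18}).prepare()

print(by_hand.url)
print(by_params.url)
# Parse both query strings back to see what a server would receive
print(parse_qs(urlsplit(by_hand.url).query))
print(parse_qs(urlsplit(by_params.url).query))
```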

Method 2

Pass the parameters as a dict through the params keyword argument.

import requests

data = {
    'name': 'lc-snail',
    'age': 18
}
response = requests.get('http://httpbin.org/get', params=data)
print(response.url)
# Output: http://httpbin.org/get?name=lc-snail&age=18, so params builds the same query string for us

print(type(response))
# Output: <class 'requests.models.Response'>

print(response.cookies)
# Output: <RequestsCookieJar[]> (this endpoint sets no cookies)
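`params` also handles cases that are awkward to write by hand. A list value becomes a repeated key, and a key whose value is `None` is left out. The following is a small sketch in which the `tag` and `debug` keys are made-up examples. `prepare()` again avoids any network traffic.

```python
import requests

params = {
    "name": "lc-snail",
    "tag": ["python", "http"],  # list -> repeated key: tag=python&tag=http
    "debug": None,              # None -> dropped from the query string
}
prepared = requests.Request("GET", "http://httpbin.org/get", params=params).prepare()
print(prepared.url)
```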

2. POST requests

import requests

data = {
    'name': 'lc-snail',
    'age': 18
}
# data= sends the dict as an HTML form body (application/x-www-form-urlencoded)
response = requests.post('http://httpbin.org/post', data=data)
print(response.text)

"""
Output
{
  "args": {},
  "data": "",
  "files": {},
  "form": {
    "age": "18",
    "name": "lc-snail"
  },
  "headers": {
    "Accept": "*/*",
    "Accept-Encoding": "gzip, deflate",
    "Content-Length": "20",
    "Content-Type": "application/x-www-form-urlencoded",
    "Host": "httpbin.org",
    "User-Agent": "python-requests/2.23.0",
    "X-Amzn-Trace-Id": "Root=1-5ef7f84f-b27abe7201fc3bef06f6c423"
  },
  "json": null,
  "origin": "221.213.55.62",
  "url": "http://httpbin.org/post"
}
"""
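In the output above, the dict arrives under "form" because `data=` form-encodes it. If the server expects a JSON body, pass `json=` instead. Requests then serializes the dict and sets `Content-Type: application/json`. The sketch below compares the two prepared requests offline, using the same payload as above.

```python
import json
import requests

payload = {"name": "lc-snail", "age": 18}
url = "http://httpbin.org/post"

form_req = requests.Request("POST", url, data=payload).prepare()
json_req = requests.Request("POST", url, json=payload).prepare()

# data= -> urlencoded form body (a str)
print(form_req.headers["Content-Type"], form_req.body)
# json= -> JSON-encoded body (bytes) with a JSON content type
print(json_req.headers["Content-Type"], json_req.body)
```

The form body `name=lc-snail&age=18` is 20 characters long, which matches the `Content-Length` in the output above.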

3. Parsing JSON

import requests

response = requests.get('http://httpbin.org/get')
print(response.text)
print('======================================')
print(response.json())  # decode the JSON body into Python objects
print('======================================')
print(type(response.json()))

"""
Output
{
  "args": {},
  "headers": {
    "Accept": "*/*",
    "Accept-Encoding": "gzip, deflate",
    "Host": "httpbin.org",
    "User-Agent": "python-requests/2.23.0",
    "X-Amzn-Trace-Id": "Root=1-5ef7fdaf-e72b899874ee2408af8a4f42"
  },
  "origin": "221.213.55.62",
  "url": "http://httpbin.org/get"
}
======================================
{'args': {}, 'headers': {'Accept': '*/*', 'Accept-Encoding': 'gzip, deflate', 'Host': 'httpbin.org', 'User-Agent': 'python-requests/2.23.0', 'X-Amzn-Trace-Id': 'Root=1-5ef7fdaf-e72b899874ee2408af8a4f42'}, 'origin': '221.213.55.62', 'url': 'http://httpbin.org/get'}
======================================
<class 'dict'>
"""

This is especially handy for endpoints that return JSON, such as the APIs behind AJAX requests.
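`response.json()` behaves much like `json.loads(response.text)`. It raises a `ValueError` subclass when the body is not valid JSON; in recent releases this is `requests.exceptions.JSONDecodeError`. So that it runs offline, the sketch below applies the standard `json` module to a trimmed copy of the httpbin output above. `parse_body` is a hypothetical helper name.

```python
import json

# Trimmed copy of the httpbin.org/get body shown above
body = '{"args": {}, "headers": {"Host": "httpbin.org", "User-Agent": "python-requests/2.23.0"}, "url": "http://httpbin.org/get"}'

def parse_body(text):
    """Hypothetical helper: decode JSON text, returning None instead of raising."""
    try:
        return json.loads(text)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return None

data = parse_body(body)
print(data["headers"]["User-Agent"])   # nested dicts are accessed like any other dict
print(parse_body("<html>not json</html>"))
```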

4. Fetching binary data

import requests

response = requests.get('https://www.cnblogs.com/favicon.ico')
print(type(response.text), type(response.content))
print(response.text)     # response.text is a str decoded from the bytes, so binary data comes out garbled
print(response.content)  # response.content is the raw bytes

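To save an image, video, or audio file locally, write the raw bytes to a file opened in binary mode.

import requests

response = requests.get('https://www.cnblogs.com/favicon.ico')
# 'wb' = write binary; the with block closes the file automatically, so no f.close() is needed
with open('favicon.ico', 'wb') as f:
    f.write(response.content)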
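`response.content` loads the whole file into memory. That is fine for an icon but not for a large video. For big files, pass `stream=True` and write the body in chunks with `iter_content()`. The following is a sketch that assumes a chunk size of 8192 bytes and a 10-second timeout, both arbitrary choices. The chunk-writing part is split into a hypothetical `save_chunks` helper so it can be tried without a network.

```python
import requests

def save_chunks(chunks, path):
    """Hypothetical helper: write an iterable of byte chunks to path, return bytes written."""
    written = 0
    with open(path, "wb") as f:
        for chunk in chunks:
            if chunk:  # skip empty keep-alive chunks
                f.write(chunk)
                written += len(chunk)
    return written

def download(url, path, chunk_size=8192):
    """Stream url to path without holding the whole body in memory."""
    with requests.get(url, stream=True, timeout=10) as response:
        response.raise_for_status()  # stop on 4xx/5xx instead of saving an error page
        return save_chunks(response.iter_content(chunk_size=chunk_size), path)

# Usage (needs network): download('https://www.cnblogs.com/favicon.ico', 'favicon.ico')
```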

5. Adding headers

Without custom headers, Zhihu identifies the request as coming from a crawler (by default requests announces itself as python-requests/<version>) and returns status 400 right away.

import requests

response = requests.get('https://www.zhihu.com/explore')
print(response.status_code)

With a browser-like User-Agent header, the request succeeds.

import requests

headers = {
    'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'
}
response = requests.get('https://www.zhihu.com/explore', headers=headers)
print(response.status_code)  # Output: 200
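Unless you override it, requests sends `User-Agent: python-requests/<version>`, which is easy for a site to spot. Headers you pass are merged with the session defaults such as `Accept`. Header names are case-insensitive, so `user-agent` replaces the default `User-Agent`. The sketch below checks this offline with `Session.prepare_request()`. The short `Mozilla/5.0 (demo)` string is a placeholder.

```python
import requests

custom_headers = {"user-agent": "Mozilla/5.0 (demo)"}  # placeholder UA string

# The User-Agent requests sends when you do not set one
print(requests.utils.default_user_agent())

with requests.Session() as session:
    request = requests.Request("GET", "https://www.zhihu.com/explore", headers=custom_headers)
    prepared = session.prepare_request(request)  # merges session defaults, sends nothing

# Lookup is case-insensitive, and the custom value replaced the default
print(prepared.headers["User-Agent"])
print(prepared.headers["Accept"])  # a default header that was kept
```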

II. Responses in detail

import requests

response = requests.get('http://www.baidu.com')
# Status code
print(response.status_code)
# Response headers
print(response.headers)
# Cookies
print(response.cookies)
# Final URL (after any redirects)
print(response.url)
# Redirect history: a list of the intermediate Response objects
print(response.history)

Checking the status code to see whether the request succeeded

import requests

response = requests.get('http://www.baidu.com')
if response.status_code == 200:
    print('Request succeeded')
else:
    print('Request failed')
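Instead of the literal `200`, you can use the named constants in `requests.codes`. `response.ok` is true for any status below 400, and `raise_for_status()` turns a 4xx/5xx status into a `requests.HTTPError`. To try these offline, the sketch builds bare `requests.Response` objects and sets `status_code` by hand. These objects are only stand-ins for real responses, and `describe` is a hypothetical helper.

```python
import requests

def describe(response):
    """Hypothetical helper: summarize a response by its status code."""
    if response.status_code == requests.codes.ok:  # requests.codes.ok == 200
        return "success"
    try:
        response.raise_for_status()  # raises HTTPError for 4xx and 5xx
    except requests.HTTPError:
        return "error"
    return "other"  # e.g. 1xx/3xx, or 2xx codes other than 200

for code in (200, 204, 301, 404, 500):
    fake = requests.Response()  # stand-in object, normally created by requests itself
    fake.status_code = code
    print(code, fake.ok, describe(fake))
```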

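III. Advanced request usage

1. File upload

import requests

# Open the file in binary mode; the with block closes it after the upload
with open('favicon.ico', 'rb') as f:
    files = {'file': f}
    response = requests.post('http://httpbin.org/post', files=files)
print(response.text)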
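`files=` builds a `multipart/form-data` body. To control the filename and MIME type the server sees, each value can also be a `(filename, fileobj, content_type)` tuple. The sketch below uses an in-memory `io.BytesIO` in place of the real icon. The bytes and the `image/x-icon` type are placeholders.

```python
import io
import requests

fake_icon = io.BytesIO(b"\x00\x00\x01\x00")  # placeholder bytes standing in for favicon.ico
files = {"file": ("favicon.ico", fake_icon, "image/x-icon")}

prepared = requests.Request("POST", "http://httpbin.org/post", files=files).prepare()

# requests picks a random boundary and writes one part per entry
print(prepared.headers["Content-Type"])
print(b'filename="favicon.ico"' in prepared.body)
print(b"Content-Type: image/x-icon" in prepared.body)
```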

2. Getting cookies

import requests

headers = {
    'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'
}
response = requests.get('https://www.baidu.com', headers=headers)
print(response.cookies)  # a RequestsCookieJar, which behaves like a dict
print('========================')
# Iterate over the name and value of every cookie
for key, value in response.cookies.items():
    print(key + "=" + value)
# Look up a single cookie by name
print('========================')
print(response.cookies['BAIDUID'])

"""
<RequestsCookieJar[<Cookie BAIDUID=03C597A3B8610B57E33D0EF8C9EE0227:FG=1 for .baidu.com/>, <Cookie BIDUPSID=03C597A3B8610B57033E407A0397EFCB for .baidu.com/>, <Cookie H_PS_PSSID=32099_1432_31326_21093_32140_32046_32092_32109 for .baidu.com/>, <Cookie PSTM=1593312991 for .baidu.com/>, <Cookie BDSVRTM=0 for www.baidu.com/>, <Cookie BD_HOME=1 for www.baidu.com/>]>
========================
BAIDUID=03C597A3B8610B57E33D0EF8C9EE0227:FG=1
BIDUPSID=03C597A3B8610B57033E407A0397EFCB
H_PS_PSSID=32099_1432_31326_21093_32140_32046_32092_32109
PSTM=1593312991
BDSVRTM=0
BD_HOME=1
========================
03C597A3B8610B57E33D0EF8C9EE0227:FG=1
"""
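A cookie jar can also be built and converted by hand, which is useful for reusing cookies in later requests. The sketch below uses made-up cookie values. `RequestsCookieJar.set()`, `get_dict()` and `requests.utils.dict_from_cookiejar()` are all part of requests.

```python
import requests
from requests.cookies import RequestsCookieJar

jar = RequestsCookieJar()
# Placeholder values; domain and path scope each cookie the way a browser would
jar.set("BAIDUID", "example-id", domain=".baidu.com", path="/")
jar.set("BD_HOME", "1", domain="www.baidu.com", path="/")

print(jar.get("BAIDUID"))                      # dict-style lookup by name
print(jar.get_dict())                          # plain dict of name -> value
print(requests.utils.dict_from_cookiejar(jar)) # same result via a utility function

# A plain dict also works when sending: requests.get(url, cookies={'BAIDUID': 'example-id'})
```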

3. Keeping a session

Simulating a login

import requests

cookies = {"name": "lc-snail"}
# Send our own cookie with the cookies= keyword, hoping the next requests.get call still carries it
r1 = requests.get('http://httpbin.org/cookies', cookies=cookies)
print(r1.text)
print('=========================')
r2 = requests.get('http://httpbin.org/cookies')
print(r2.text)  # the cookie is gone: the output is empty

"""
Output
{
  "cookies": {
    "name": "lc-snail"
  }
}
=========================
{
  "cookies": {}
}
"""

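The two requests.get calls are independent of each other, much like opening the page in two different browsers. To get back the cookie you just set, the requests have to share a session.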

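Solution

Create a Session object and send every request through it. Cookies set by one response are stored in the session and sent with the following requests, which is what a simulated login relies on.

import requests

s = requests.Session()
# httpbin's /cookies/set/<name>/<value> endpoint sets a cookie on the response
s.get('http://httpbin.org/cookies/set/number/1234567')
response = s.get('http://httpbin.org/cookies')
print(response.text)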

'''
Output
{
  "cookies": {
    "number": "1234567"
  }
}
'''
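A Session also carries headers and other defaults across requests. `Session.prepare_request()` shows what the session would send without contacting httpbin. In the sketch below, the `X-Demo` header is a made-up example. The cookie is placed directly in the session's jar instead of coming from the /cookies/set endpoint.

```python
import requests

with requests.Session() as s:
    s.headers.update({"X-Demo": "1"})  # hypothetical header sent on every request
    s.cookies.set("number", "1234567", domain="httpbin.org", path="/")

    # Build (but do not send) a request through the session
    prepared = s.prepare_request(requests.Request("GET", "http://httpbin.org/cookies"))

print(prepared.headers["X-Demo"])
print(prepared.headers.get("Cookie"))
```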

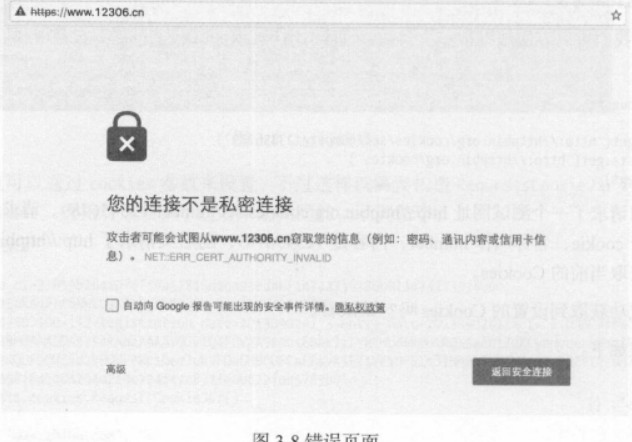

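4. Certificate verification

When you open an HTTPS site whose certificate fails verification, requests raises an SSL error (requests.exceptions.SSLError) and the program stops.

As shown in the figure.

Solution 1: the verify parameter

To avoid this, set the verify parameter (default True) to False to skip certificate verification. The request then goes through, but a warning (InsecureRequestWarning) is printed.

import requests

response = requests.get('https://www.12306.cn', verify=False)
print(response.status_code)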

Suppressing the warning

Calling disable_warnings() from the underlying urllib3 library silences the warning.

import requests
import urllib3

# Silence only the InsecureRequestWarning triggered by verify=False
urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)
response = requests.get('https://www.12306.cn', verify=False)
print(response.status_code)

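Solution 2: specifying a certificate and key manually

import requests

# cert takes a (certificate file, key file) tuple; the paths are placeholders
response = requests.get('https://www.12306.cn', cert=('/path/server.crt', '/path/key'))
print(response.status_code)

Note:

You need a crt file and a key file, and you must give their paths. The private key of the local certificate must be unencrypted; encrypted keys are not supported. Strictly speaking, cert supplies a client certificate for servers that ask for one. To make requests trust a server certificate that is missing from its default CA bundle, pass the bundle's path to verify instead, for example verify='/path/ca.pem'.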
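`verify` accepts `True`, `False`, or a path to a CA bundle. `cert` takes a `(certificate, key)` tuple for a client certificate. With `verify=True`, requests uses the CA bundle shipped by the certifi package, whose path `requests.certs.where()` returns. The sketch below gathers both options in one place. `tls_kwargs` is a hypothetical helper, and all file paths are placeholders.

```python
import os
import requests.certs

# CA bundle used when verify=True (shipped by the certifi package)
DEFAULT_CA_BUNDLE = requests.certs.where()

def tls_kwargs(ca_bundle=None, client_crt=None, client_key=None):
    """Hypothetical helper: collect the TLS keyword arguments for requests.get()."""
    # verify: True (default bundle), False (no checking), or a path to a CA bundle
    kwargs = {"verify": ca_bundle if ca_bundle else True}
    if client_crt and client_key:
        # cert: (certificate, key) tuple for a client certificate; the key must be unencrypted
        kwargs["cert"] = (client_crt, client_key)
    return kwargs

print(os.path.isfile(DEFAULT_CA_BUNDLE))
print(tls_kwargs("/path/ca.pem", "/path/client.crt", "/path/client.key"))

# Usage (needs network and real files):
# requests.get('https://www.12306.cn', **tls_kwargs('/path/ca.pem'))
```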

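5. Proxy settings

import requests

# key = scheme of the URL being requested, value = address of the proxy to use for it
proxies = {
    "http": "http://127.0.0.1:9998",
    "https": "https://127.0.0.1:9998",
}
response = requests.get("https://www.taobao.com", proxies=proxies)
print(response.status_code)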

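Proxies that require a username and password

import requests

# Put the credentials in the proxy URL; an "https" entry is needed because the target URL is https://
proxies = {
    "http": "http://user:password@127.0.0.1:9998",
    "https": "http://user:password@127.0.0.1:9998",
}
response = requests.get("https://www.taobao.com", proxies=proxies)
print(response.status_code)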

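Besides HTTP proxies, SOCKS proxies are supported as well.

import requests

proxies = {
    "http": "socks5://127.0.0.1:9998",
    "https": "socks5://127.0.0.1:9998",
}
response = requests.get("https://www.taobao.com", proxies=proxies)
print(response.status_code)

Note:

SOCKS support needs an optional dependency: pip3 install "requests[socks]". Use the socks5h:// scheme instead of socks5:// if DNS resolution should also go through the proxy.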
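The keys of `proxies` are matched against the scheme (and optionally the host) of the URL being requested. A dict with only an `"http"` entry is therefore silently ignored for `https://www.taobao.com`. `requests.utils.select_proxy()` is the internal helper requests uses for this lookup. It is not a documented public API, so the sketch below uses it only to inspect the behavior.

```python
from requests.utils import get_auth_from_url, select_proxy

http_only = {"http": "http://user:password@127.0.0.1:9998"}
both = {
    "http": "http://user:password@127.0.0.1:9998",
    "https": "http://user:password@127.0.0.1:9998",
}

# An https:// target looks for an "https" (or "all") entry, so http_only has no match
print(select_proxy("https://www.taobao.com", http_only))
print(select_proxy("https://www.taobao.com", both))
# Credentials embedded in the proxy URL are what requests turns into proxy authentication
print(get_auth_from_url(both["https"]))
```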

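6. Timeouts

import requests

try:
    # Give up if connecting, or waiting for data from the server, takes longer than 1 second
    response = requests.get("http://httpbin.org/get", timeout=1)
    print(response.status_code)
except requests.Timeout:  # covers both ConnectTimeout and ReadTimeout
    print('Timeout')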
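`timeout` also accepts a `(connect, read)` tuple. The first number limits how long establishing the connection may take. The second limits how long to wait between bytes from the server, so it is not a cap on the total download time. `requests.Timeout` is the common base class of `ConnectTimeout` and `ReadTimeout`, so catching it covers both phases. The timeout values below are arbitrary, and `get_status` is a hypothetical helper.

```python
import requests

# Arbitrary values: 3.05 s to connect, 10 s to wait between bytes of the response
CONNECT_TIMEOUT = 3.05
READ_TIMEOUT = 10

def get_status(url):
    """Hypothetical helper: return the status code, or None if either phase times out."""
    try:
        response = requests.get(url, timeout=(CONNECT_TIMEOUT, READ_TIMEOUT))
        return response.status_code
    except requests.Timeout:  # base class of ConnectTimeout and ReadTimeout
        return None

# Usage (needs network): get_status("http://httpbin.org/get")
```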

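Authentication

Some sites require a username and password before they show any content, so the request has to be authenticated.

Demo: passing an HTTPBasicAuth object

import requests
from requests.auth import HTTPBasicAuth

# httpbin's /basic-auth/<user>/<passwd> endpoint returns 200 only for matching credentials
response = requests.get("http://httpbin.org/basic-auth/user/123", auth=HTTPBasicAuth('user', '123'))
print(response.status_code)

Demo: passing a (username, password) tuple

import requests

# A tuple is shorthand for HTTPBasicAuth; a dict is not accepted here
response = requests.get("http://httpbin.org/basic-auth/user/123", auth=('user', '123'))
print(response.status_code)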
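HTTP Basic authentication simply adds `Authorization: Basic <base64 of "user:password">` to the request. The credentials are only encoded, not encrypted, so Basic auth should be used over HTTPS. Requests converts a plain `(username, password)` tuple into an `HTTPBasicAuth` object, as the prepared headers show. The credentials are the demo values `user`/`123`, and the URL is httpbin's `/basic-auth/<user>/<passwd>` endpoint.

```python
import base64
import requests
from requests.auth import HTTPBasicAuth

url = "http://httpbin.org/basic-auth/user/123"
via_tuple = requests.Request("GET", url, auth=("user", "123")).prepare()
via_class = requests.Request("GET", url, auth=HTTPBasicAuth("user", "123")).prepare()

# Both forms produce the same header
print(via_tuple.headers["Authorization"])
print(via_tuple.headers["Authorization"] == via_class.headers["Authorization"])

# The value is just base64("user:123"), trivially decoded
token = via_tuple.headers["Authorization"].split(" ", 1)[1]
print(base64.b64decode(token).decode())
```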

Exception handling

import requests
from requests.exceptions import ReadTimeout, ConnectionError, RequestException

try:
    response = requests.get("http://httpbin.org/get", timeout=0.2)
    print(response.status_code)
except ReadTimeout:        # most specific exception first
    print('Timeout')
except ConnectionError:
    print('Connection error')
except RequestException:   # base class of every requests exception
    print('Error')
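The except clauses are tried top to bottom, so specific exceptions must come before their base classes. `RequestException` placed first would swallow everything. A 404 or 500 response does not raise by default; it only becomes a `requests.exceptions.HTTPError` when you call `raise_for_status()`. The sketch below defines a hypothetical `classify` helper that mirrors the order above, and checks it against exception instances created directly.

```python
from requests.exceptions import (
    ConnectionError,  # shadows the built-in ConnectionError, as in the example above
    HTTPError,
    ReadTimeout,
    RequestException,
    Timeout,
)

def classify(exc):
    """Hypothetical helper: name an exception, checking the most specific class first."""
    if isinstance(exc, ReadTimeout):
        return "Timeout"
    if isinstance(exc, ConnectionError):
        return "Connection error"
    if isinstance(exc, HTTPError):
        return "HTTP error"
    if isinstance(exc, RequestException):
        return "Error"
    return "Not a requests exception"

for exc in (ReadTimeout(), ConnectionError(), HTTPError(), Timeout(), ValueError()):
    print(type(exc).__name__, "->", classify(exc))
```

`ConnectTimeout` subclasses both `ConnectionError` and `Timeout`. In this ordering it is therefore reported as a connection error, not a timeout.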

Reference: Cui Qingcai, 《Python 3网络爬虫开发实战》 (Python 3 Web Crawler Development in Practice).