1.结构化:

- 单条新闻的详情字典:news

- 一个列表页所有单条新闻汇总列表:newsls.append(news)

- 所有列表页的所有新闻汇总列表:newstotal.extend(newsls)

2.转换成pandas的数据结构DataFrame

3.从DataFrame保存到excel

4.从DataFrame保存到sqlite3数据库

import requests import re from bs4 import BeautifulSoup from datetime import datetime import pandas import sqlite3 def getclick(url): m=re.search(r'_(.*).html',url) newsid=m.group(1)[5:] clickurl=('http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80').format(newsid) resc=requests.get(clickurl).text r=re.search(r"hits(.*)",resc).group(1) click=r.lstrip("').html('").rstrip("');") return(int(click)) #print(getclick('http://news.gzcc.cn/html/2017/xiaoyuanxinwen_1018/8347.html')) def getdetail(url): resd=requests.get(url) resd.encoding='utf-8' soupd=BeautifulSoup(resd.text,'html.parser') news={} news['url']=url news['title']=soupd.select('.show-title')[0].text info=soupd.select('.show-info')[0].text #news['dt']=datetime.strptime(info.lstrip('发布时间:')[0,19],'%Y-%m-%d %H:%M:') news['source']= re.search('来源:(.*)点击',info).group(1).strip() news['click']=getclick(url) return(news) #print(getdetail('http://news.gzcc.cn/html/2017/xiaoyuanxinwen_1018/8347.html')) def onepage(pageurl): res=requests.get(pageurl) res.encoding='utf-8' soup=BeautifulSoup(res.text,'html.parser') newsls=[] for news in soup.select('li'): if len(news.select('.news-list-title')) > 0: newsls.append(getdetail(news.select('a')[0]['href'])) return(newsls) #print(onepage('http://news.gzcc.cn/html/xiaoyuanxinwen/')) newstotal=[] gzccurl='http://news.gzcc.cn/html/xiaoyuanxinwen/' newstotal.extend(onepage(gzccurl)) #print(newstotal) res=requests.get(gzccurl) res.encoding='utf-8' soup=BeautifulSoup(res.text,'html.parser') pages=int(soup.select('.a1')[0].text.rstrip('条'))//10+1 for i in range(2,3): listurl='http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html'.format(i) newstotal.extend(onepage(listurl)) #print(len(newstotal)) df=pandas.DataFrame(newstotal) #print(df.head()) #print(df['title']) df.to_excel('gzccnews.xlsx') with sqlite3.connect('gzccnewsdb1.sqlite') as db: df.to_sql('gzccnewsdb1',con=db)

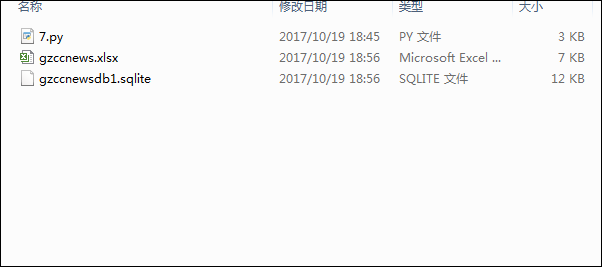

实验结果如下图所示: