```python
import os

import requests
from bs4 import BeautifulSoup

BASE_URL = "https://www.50yc.com"
OUT_DIR = os.path.join(os.getcwd(), "depot")
SEPARATOR = "****************************"


def squeeze(text):
    # Remove all whitespace (spaces, tabs, newlines) from scraped text
    return "".join(text.split())


def getdepotdetailcontent(title, url):  # scrape the detail page of one depot listing
    r = requests.get(BASE_URL + url, timeout=10).content
    soup = BeautifulSoup(r, "html.parser")
    result = soup.find(name="div", attrs={"class": "sm-content"})  # returns a single Tag
    content = result.find_all("li")  # returns a list of Tags
    path = os.path.join(OUT_DIR, title, "depotdetail.txt")
    with open(path, "w", encoding="utf-8") as f:  # the with block closes the file
        for i in content:
            b = i.find("span").text
            br = i.find("div").text
            f.write(squeeze(b) + squeeze(br) + "\n" + SEPARATOR + "\n")


def getdepot(page):  # scrape one page of the depot list
    depotlisthtml = requests.get(BASE_URL + "/xan" + page, timeout=10).content
    content = BeautifulSoup(depotlisthtml, "html.parser")
    tags = content.find_all(name="div", attrs={"class": "bg-hover"})
    for i in tags:
        imgurl = None
        for img in i.find_all(name="img"):  # returns a list of Tags
            if img.get("src", "").startswith("http"):
                imgurl = img["src"]
                print(imgurl)
        title = i.strong.text
        depotdetailurl = i.a["href"]
        depotdir = os.path.join(OUT_DIR, title)
        os.makedirs(depotdir, exist_ok=True)  # also creates ./depot on the first run
        if imgurl:  # some listings may have no absolute image URL
            with open(os.path.join(depotdir, "depot.jpg"), "wb") as d:
                d.write(requests.get(imgurl, timeout=10).content)
        with open(os.path.join(depotdir, "depot.txt"), "w", encoding="utf-8") as txt:
            txt.write(squeeze(i.text))
        getdepotdetailcontent(title, depotdetailurl)


if __name__ == "__main__":
    for i in range(1, 26):  # scrape the depot list and depot details on pages 1-25
        getdepot("/page" + str(i))
        print("/page" + str(i))
```
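The scraper uses each listing title directly as a directory name under `depot/`. If a title contains characters that Windows does not allow in file names, creating the directory fails. The post does not show any such titles, so this is an assumption. The sketch below is one more defensive way to handle the output layout, using only the standard library. `depot_dir` and `format_detail` are hypothetical helper names that do not appear in the original code. `format_detail` mirrors how `depotdetail.txt` is written: label and value with all whitespace removed, followed by a separator line.

```python
import re
from pathlib import Path

# Characters Windows forbids in file/directory names (assumption: scraped
# titles might contain some of them).
_INVALID = re.compile(r'[<>:"/\\|?*]')


def depot_dir(root: Path, title: str) -> Path:
    """Return root/depot/<sanitized title>, creating it (and parents) if needed."""
    safe = _INVALID.sub("_", title).strip().rstrip(".") or "untitled"
    path = root / "depot" / safe
    path.mkdir(parents=True, exist_ok=True)
    return path


def format_detail(pairs, separator="*" * 28):
    """Render (label, value) pairs as label+value lines, each followed by a separator."""
    lines = []
    for label, value in pairs:
        # Drop every whitespace character, as the scraper does
        lines.append("".join(label.split()) + "".join(value.split()))
        lines.append(separator)
    return "\n".join(lines) + "\n"
```

For example, `depot_dir(Path.cwd(), title)` could replace the directory-creation step in `getdepot`. The function is safe to call repeatedly for the same title.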

The scraped content:

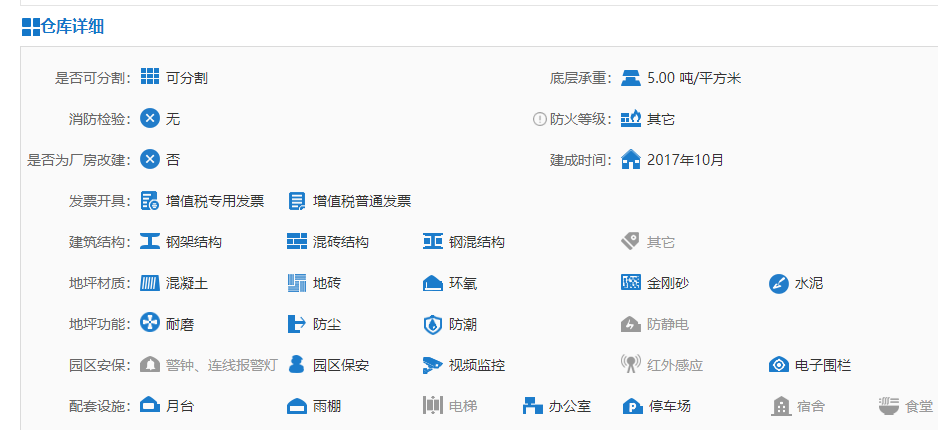

The scraping results are as follows: