安装

pip install textblob import nltk pip install nltk nltk.download('punkt') # 安装一些语料库, 国内安装有问题

nltk.download('averaged_perceptron_tagger')

基本操作

情感分析

该sentiment属性返回形式的namedtuple 。极性在[-1.0,1.0]范围内浮动。主观性是在[0.0,1.0]范围内的浮动,其中0.0是非常客观的,而1.0是非常主观的。Sentiment(polarity, subjectivity)

text = "I am happy today. I feel sad today." from textblob import TextBlob blob = TextBlob(text) blob.sentences # 能够分句 print(blob.sentences[0].sentiment) print(blob.sentences[1].sentiment) print(blob.sentiment) # Sentiment(polarity=0.15000000000000002, subjectivity=1.0)

拼写纠正

>>> b = TextBlob("I havv goood speling!") >>> print(b.correct()) I have good spelling!

返回的貌似是字符数组,最好用str转成字符串.

原理见 How to Write a Spelling Corrector

例子:amazon评论情感分析

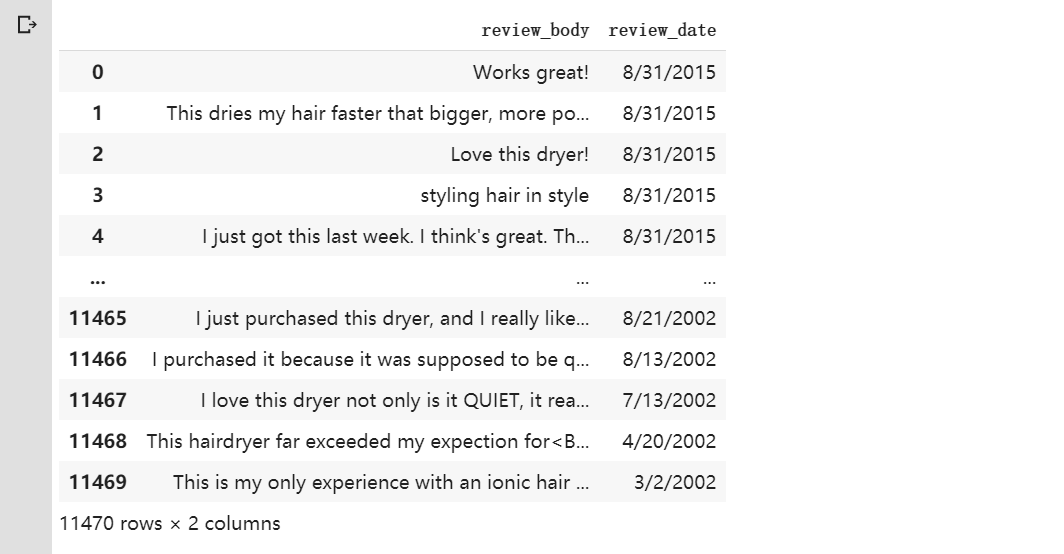

1. 获取原始评论数据

import pandas as pd df=pd.read_csv('hair_dryer.tsv', sep=' ', usecols=['review_body','review_date']) df.head()

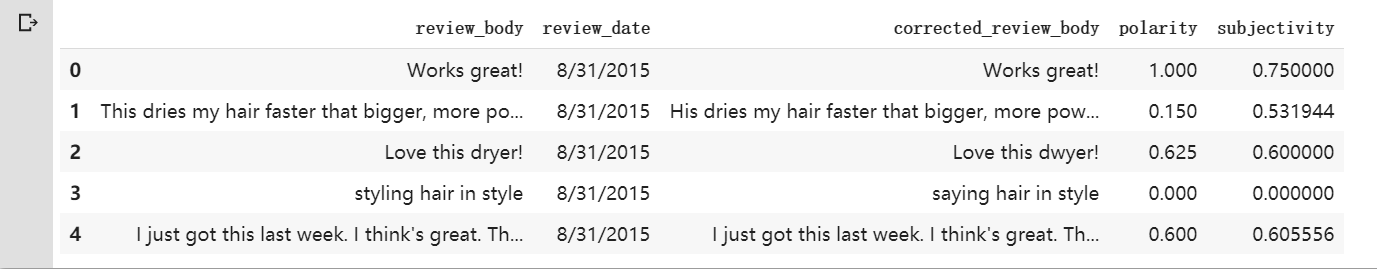

2. 由于评论中有大量的拼写错误,加个单词纠正

def correct(text): b = TextBlob(text) return str(b.correct()) df['corrected_review_body'] = df['review_body'].map(correct) df.head()

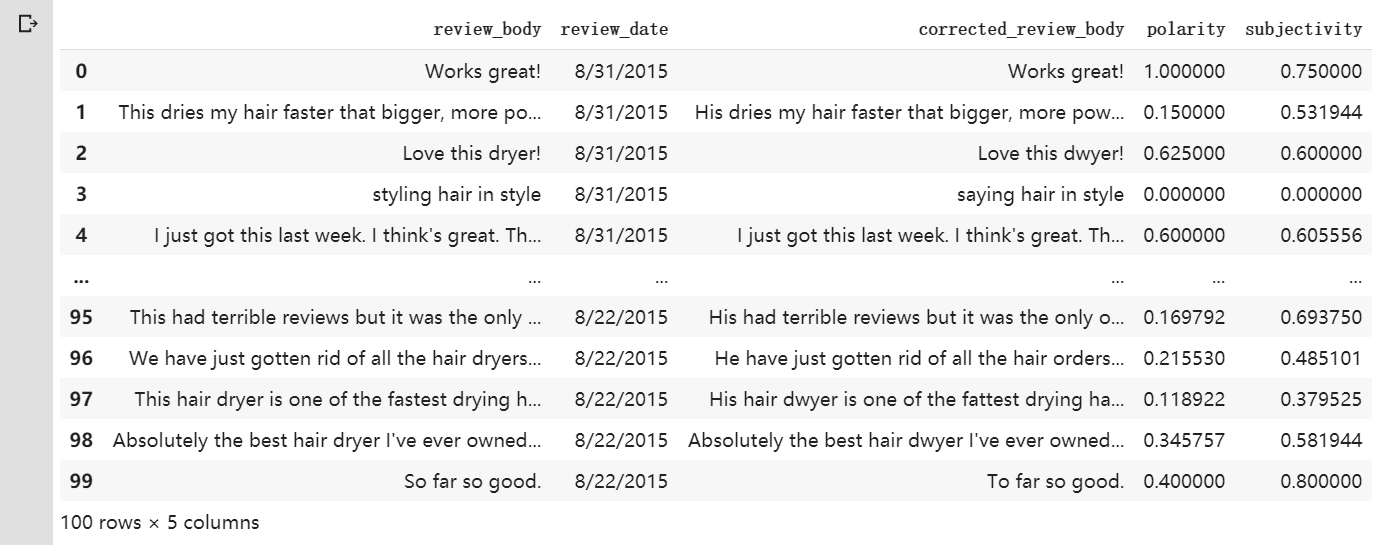

3. 得到情感极性和主观性

def get_polarity(text): testimonial = TextBlob(text) return testimonial.sentiment.polarity def get_subjectivity(text): testimonial = TextBlob(text) return testimonial.sentiment.subjectivity df['polarity'] = df['review_body'].map(get_polarity) df['subjectivity'] = df['review_body'].map(get_subjectivity) print(df)

4. 统计词频

统计词频主要是依靠 collections.Counter(word_list).most_common() 方法来自动统计并排序,如下:

row = df.shape[0] words = "" for i in range(0, row): words = words + " " + p[i] print(words) #汇总每个评论 import collections collections.Counter(words.split()).most_common(50) #显示top50

具体得到某个单词的频数:

coll = collections.Counter(words.split()) coll["good"]

5. 保存结果到CSV

df.to_csv("情感分析.csv")

参考链接:

1. https://textblob.readthedocs.io/en/dev/quickstart.html#get-word-and-noun-phrase-frequencies

2. https://blog.csdn.net/heykid/article/details/62424513#%E7%BB%9F%E8%AE%A1%E8%AF%8D%E9%A2%91

3. https://blog.csdn.net/sinat_29957455/article/details/79059436