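Resource manifests are written in YAML to describe how containers are orchestrated. The API follows a RESTful style; the main resource objects are shown in the figure below.

Standalone Pod resources (not managed by a controller)

Manifest format:

Top-level fields: apiVersion (group/version), kind, metadata (name, namespace, labels, annotations, ...), spec, status (read-only)

Pod resources: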

spec.containers <[]object>

- name <string>

image <string>

imagePullPolicy <string>

Always, Never, IfNotPresent

Overriding the image's default application:

command, args (for containers, see https://kubernetes.io/docs/tasks/inject-data-application/define-command-argument-container/ )
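As a minimal sketch of the fields listed so far, the manifest below overrides the program an image runs by default. The pod and container names are hypothetical and chosen only for illustration. In a Pod spec, `command` replaces the image's ENTRYPOINT and `args` replaces its CMD.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: command-demo                 # hypothetical name
  namespace: default
spec:
  containers:
  - name: command-demo-container     # hypothetical name
    image: busybox:latest
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh", "-c"]       # replaces the image's ENTRYPOINT
    args: ["echo hello; sleep 3600"] # replaces the image's CMD
```

If only `args` is given, the image's own ENTRYPOINT is kept and receives the new arguments.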

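Labels (an important feature):

key=value

key: letters, digits, _, -, .

value: may be empty; otherwise it must begin and end with a letter or digit, and may contain letters, digits, _, -, . in between

Examples:

# kubectl get pods -l app (app is the label key)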

# kubectl get pods --show-labels

# kubectl label pods pod-demo release=canary --overwrite

# kubectl get pods -l release=canary

Label selectors:

Equality-based: =, ==, !=

Set-based:

KEY in (VALUE1,VALUE2,...)

KEY notin (VALUE1,VALUE2,...)

KEY

!KEY

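Many resources support embedded fields that define the label selector they use:

matchLabels: key/value pairs given directly

matchExpressions: a selector defined by expressions of the form {key:"KEY", operator:"OPERATOR", values:[VAL1,VAL2,...]}

Operators:

In, NotIn: values must be a non-empty list;

Exists, DoesNotExist: values must be an empty list;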
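The two selector forms can be combined in one resource. The sketch below is a ReplicaSet whose selector uses both; the resource name and the `deprecated` label key are hypothetical. Note that the API spells the negative existence operator `DoesNotExist`, and that the pod template's labels have to satisfy the selector or the API server rejects the object.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: selector-demo              # hypothetical name
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:                   # all listed pairs must match
      app: myapp
    matchExpressions:              # every expression must also hold
    - {key: release, operator: In, values: [canary, stable]}
    - {key: deprecated, operator: DoesNotExist}   # values omitted on purpose
  template:
    metadata:
      labels:
        app: myapp
        release: canary            # satisfies the In expression above
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1
```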

nodeSelector <map[string]string>

A node label selector; it restricts which nodes the scheduler may choose.

nodeName <string>
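A short sketch of `nodeSelector`, assuming a node has been labelled beforehand with the hypothetical label `disktype=ssd` (for example with `kubectl label nodes`). The pod name is also made up for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nodeselector-demo    # hypothetical name
  namespace: default
spec:
  nodeSelector:
    disktype: ssd            # hypothetical node label; only matching nodes are candidates
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
```

`nodeName`, by contrast, names one node directly, so the pod is placed there without going through the scheduler's node selection.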

annotations:

Unlike labels, annotations cannot be used to select resource objects; they only attach "metadata" to an object.

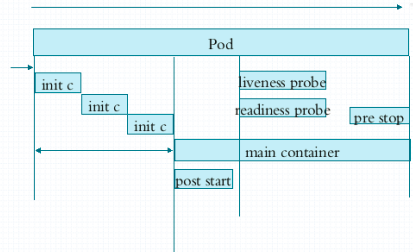

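Pod lifecycle

Phases: Pending, Running, Failed, Succeeded, Unknown

Pod creation flow: -> apiServer -> saved to etcd -> scheduler -> scheduling result saved to etcd -> the selected node runs the pod (and reports its status back to the apiServer) -> saved to etcd

Important behaviors during the Pod lifecycle:

1. Init containers

2. Container probes: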

liveness

readiness (must be configured in production)

3. There are three probe types:

ExecAction、TCPSocketAction、HTTPGetAction

# kubectl explain pod.spec.containers.livenessProbe

4. restartPolicy:

Always, OnFailure, Never. Defaults to Always.

5. lifecycle

# kubectl explain pods.spec.containers.lifecycle.preStop

# kubectl explain pods.spec.containers.lifecycle.postStart
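The five behaviors listed above can appear together in a single Pod. The following is a rough sketch rather than a recommended configuration: the pod name, the init container, and the `sleep` commands are placeholders, and the timing values are arbitrary. The hook fields that exist under `lifecycle` are `postStart` and `preStop`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: lifecycle-demo             # hypothetical name
  namespace: default
spec:
  restartPolicy: Always            # the default, written out for clarity
  initContainers:
  - name: init-wait                # must exit successfully before the main container starts
    image: busybox:latest
    command: ["/bin/sh", "-c", "sleep 5"]
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    ports:
    - name: http
      containerPort: 80
    livenessProbe:
      tcpSocket:                   # TCPSocketAction: passes if the port accepts a connection
        port: http
      initialDelaySeconds: 5
      periodSeconds: 10
    readinessProbe:
      httpGet:                     # HTTPGetAction: passes on a 2xx or 3xx response
        port: http
        path: /index.html
      periodSeconds: 5
    lifecycle:
      preStop:
        exec:
          command: ["/bin/sh", "-c", "sleep 5"]   # placeholder for a graceful-shutdown step
```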

Example 1: ExecAction probe

# vim liveness-pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: liveness-exec-pod
      namespace: default
    spec:
      containers:
      - name: liveness-exec-container
        image: busybox:latest
        imagePullPolicy: IfNotPresent
        command: ["/bin/sh","-c","touch /tmp/healthy; sleep 30; rm -f /tmp/healthy; sleep 3600"]
        livenessProbe:
          exec:
            command: ["test","-e","/tmp/healthy"]
          initialDelaySeconds: 1
          periodSeconds: 3

# kubectl create -f liveness-pod.yaml

# kubectl describe pod liveness-exec-pod

State: Running

Started: Thu, 09 Aug 2018 01:39:11 -0400

Last State: Terminated

Reason: Error

Exit Code: 137

Started: Thu, 09 Aug 2018 01:38:03 -0400

Finished: Thu, 09 Aug 2018 01:39:09 -0400

Ready: True

Restart Count: 1

Liveness: exec [test -e /tmp/healthy] delay=1s timeout=1s period=3s #success=1 #failure=3

Example 2: HTTPGetAction probe

# vim liveness-http.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: liveness-httpget-pod
      namespace: default
    spec:
      containers:
      - name: liveness-httpget-container
        image: ikubernetes/myapp:v1
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
        livenessProbe:
          httpGet:
            port: http
            path: /index.html
          initialDelaySeconds: 1
          periodSeconds: 3

# kubectl create -f liveness-http.yaml

# kubectl exec -it liveness-httpget-pod -- /bin/sh

rm /usr/share/nginx/html/index.html

# kubectl describe pod liveness-httpget-pod

Restart Count: 1

Liveness: http-get http://:http/index.html delay=1s timeout=1s period=3s #success=1 #failure=3

Example 3: readiness probe using HTTPGetAction

# vim readiness-http.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: readiness-httpget-pod
      namespace: default
    spec:
      containers:
      - name: readiness-httpget-container
        image: ikubernetes/myapp:v1
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
        readinessProbe:
          httpGet:
            port: http
            path: /index.html
          initialDelaySeconds: 1
          periodSeconds: 3

# kubectl create -f readiness-http.yaml

# kubectl exec -it readiness-httpget-pod -- /bin/sh

/ # rm -f /usr/share/nginx/html/index.html

# kubectl get pods -w

readiness-httpget-pod 0/1 Running 0 1m

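READY is 0/1: the container is not ready, so the pod receives no Service traffic.

Example 4: the lifecycle postStart hook, which runs immediately after the container starts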

# vim pod-postStart.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: poststart-pod
      namespace: default
    spec:
      containers:
      - name: busybox-httpd
        image: busybox:latest
        imagePullPolicy: IfNotPresent
        lifecycle:
          postStart:
            exec:
              command: ['/bin/sh','-c','echo Home_Page >> /tmp/index.html']
        command: ['/bin/httpd']
        # -h and its directory are separate list items, so httpd sees them as two arguments
        args: ['-f','-h','/tmp']

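Pod controllers

An intermediate layer that manages pods and keeps them running in the desired state.

ReplicaSet:

Maintains the desired number of pod replicas, scaling up or down as needed. It replaces the older ReplicationController and manages stateless pods. Using it directly is discouraged; it is normally managed through a Deployment.

1. The desired number of pod replicas

2. A label selector, used to identify the pods it manages

3. A pod template, used to create new pods

ReplicaSet example: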

1. vim replicaset.yaml

    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: myapp
      namespace: default
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: myapp
          release: canary
      template:
        metadata:
          name: myapp-pod
          labels:
            app: myapp
            release: canary
            environment: qa
        spec:
          containers:
          - name: myapp-container
            image: ikubernetes/myapp:v1
            ports:
            - name: http
              containerPort: 80

2. kubectl create -f replicaset.yaml

3. kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-c6f58 1/1 Running 0 3s

myapp-lvjk2 1/1 Running 0 3s

4. kubectl delete pod myapp-c6f58

5. kubectl get pods (a new pod has been created)

NAME READY STATUS RESTARTS AGE

myapp-lvjk2 1/1 Running 0 2m

myapp-s9hgr 1/1 Running 0 10s

6. kubectl edit rs myapp

Change replicas: 2 to 5

7. kubectl get pods (the pod count grows to 5 automatically)

NAME READY STATUS RESTARTS AGE

myapp-h2j68 1/1 Running 0 5s

myapp-lvjk2 1/1 Running 0 8m

myapp-nsv6z 1/1 Running 0 5s

myapp-s9hgr 1/1 Running 0 6m

myapp-wnf2b 1/1 Running 0 5s

# curl 10.244.2.17

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

8. kubectl edit rs myapp

Change ikubernetes/myapp:v1 to v2

Pods that are already running are not upgraded automatically.

Delete one pod:

# kubectl delete pod myapp-h2j68

The replacement pod, myapp-4qg8c, runs v2.

# curl 10.244.2.19

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

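Deleting only one running pod, so that its replacement comes up on the new version, is called a canary release.

Deleting the old-version pods one after another, so that new-version pods are created in their place, is a gray (gradual) release; watch how the system load changes while doing this.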

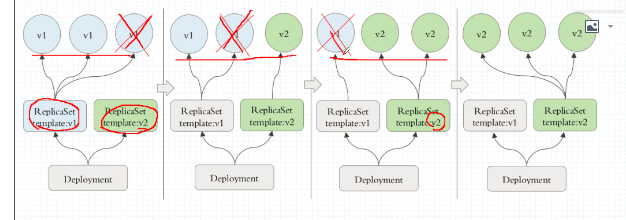

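Another strategy is blue-green deployment, shown in the figure below.

Everything is updated in a single batch, in an identical environment. Either create a new ReplicaSet RS2 and delete RS1, or create RS2 alongside RS1 and then change the Service so that all traffic points to RS2.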
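One way to picture the second variant is a Service whose selector decides which ReplicaSet receives traffic. This is only a sketch under assumptions: the Service name and the `version` label are hypothetical, with RS1's pods assumed to carry `version: blue` and RS2's pods `version: green`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-svc          # hypothetical name
  namespace: default
spec:
  selector:
    app: myapp
    version: blue          # hypothetical label; change to "green" to send all traffic to RS2
  ports:
  - name: http
    port: 80
    targetPort: 80
```

Because only the selector changes, switching back to RS1 is the same one-field edit in reverse, as long as RS1 has not been deleted yet.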

Deployment

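Controls pods by managing ReplicaSets and offers more than a bare ReplicaSet does: rolling updates and rollbacks, with control over the pace and logic of an update.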

# kubectl explain deploy

KIND: Deployment

VERSION: extensions/v1beta1 (the explain output lags behind the implementation; the current version is apps/v1)

# kubectl explain deploy.spec.strategy

rollingUpdate (controls the granularity of the update)

# kubectl explain deploy.spec.strategy.rollingUpdate

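maxSurge (the maximum number of pods allowed above the desired count)

maxUnavailable (the maximum number of pods that may be unavailable)

The two cannot both be zero; otherwise the update could neither add a pod nor remove one.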

# kubectl explain deploy.spec

revisionHistoryLimit (how many old revisions to keep)
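To make these fields concrete, here is a hedged sketch of a Deployment that sets them explicitly. The name and the numbers are illustrative only. With 4 replicas, `maxSurge: 1` and `maxUnavailable: 0`, an update may run at most 5 pods at once and never drops below 4 available pods.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: strategy-demo        # hypothetical name
  namespace: default
spec:
  replicas: 4
  revisionHistoryLimit: 5    # keep the 5 most recent old ReplicaSets for rollback
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1            # at most 1 pod above the desired count
      maxUnavailable: 0      # never fewer than the desired count available
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1
```

Both fields also accept percentages such as `25%`, which is the form the default strategy uses.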

Deployment example

# vim deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: myapp-deploy
      namespace: default
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: myapp
          release: canary
      template:
        metadata:
          labels:
            app: myapp
            release: canary
        spec:
          containers:
          - name: myapp
            image: ikubernetes/myapp:v1
            ports:
            - name: http
              containerPort: 80

# kubectl apply -f deploy.yaml

# kubectl get rs

NAME DESIRED CURRENT READY AGE

myapp-deploy-69b47bc96d 2 2 2 1m

(69b47bc96d is the hash of the pod template)

# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-deploy-69b47bc96d-f4bp4 1/1 Running 0 3m

myapp-deploy-69b47bc96d-qllnm 1/1 Running 0 3m

# Change replicas to 3 in deploy.yaml, then run kubectl apply -f deploy.yaml again

# kubectl get pods

NAME READY STATUS RESTARTS AGE

myapp-deploy-69b47bc96d-f4bp4 1/1 Running 0 4m

myapp-deploy-69b47bc96d-qllnm 1/1 Running 0 4m

myapp-deploy-69b47bc96d-s6t42 1/1 Running 0 17s

# kubectl describe deploy myapp-deploy

RollingUpdateStrategy: 25% max unavailable, 25% max surge (the default update strategy)

# kubectl get pod -w -l app=myapp (watch for changes)

# Change the image to ikubernetes/myapp:v2 in deploy.yaml

# kubectl apply -f deploy.yaml

# kubectl get pod -w -l app=myapp

NAME READY STATUS RESTARTS AGE

myapp-deploy-69b47bc96d-f4bp4 1/1 Running 0 6m

myapp-deploy-69b47bc96d-qllnm 1/1 Running 0 6m

myapp-deploy-69b47bc96d-s6t42 1/1 Running 0 2m

myapp-deploy-67f6f6b4dc-tncmc 0/1 Pending 0 1s

myapp-deploy-67f6f6b4dc-tncmc 0/1 Pending 0 1s

myapp-deploy-67f6f6b4dc-tncmc 0/1 ContainerCreating 0 2s

myapp-deploy-67f6f6b4dc-tncmc 1/1 Running 0 4s

# kubectl get rs

NAME DESIRED CURRENT READY AGE

myapp-deploy-67f6f6b4dc 3 3 3 54s

myapp-deploy-69b47bc96d 0 0 0 8m

# kubectl rollout history deployment myapp-deploy

# kubectl patch deployment myapp-deploy -p '{"spec":{"replicas":5}}' (use patch to change replicas to 5)

# kubectl get pod -w -l app=myapp

NAME READY STATUS RESTARTS AGE

myapp-deploy-67f6f6b4dc-fc7kj 1/1 Running 0 18s

myapp-deploy-67f6f6b4dc-kssst 1/1 Running 0 5m

myapp-deploy-67f6f6b4dc-tncmc 1/1 Running 0 5m

myapp-deploy-67f6f6b4dc-xdzvc 1/1 Running 0 18s

myapp-deploy-67f6f6b4dc-zjn77 1/1 Running 0 5m

# kubectl patch deployment myapp-deploy -p '{"spec":{"strategy":{"rollingUpdate":{"maxSurge":1,"maxUnavailable":0}}}}'

# kubectl describe deployment myapp-deploy

RollingUpdateStrategy: 0 max unavailable, 1 max surge

# The image can also be updated with kubectl set image

kubectl set image deployment myapp-deploy myapp=ikubernetes/myapp:v3 && kubectl rollout pause deployment myapp-deploy

# kubectl rollout history deployment myapp-deploy

# kubectl rollout undo deployment myapp-deploy --to-revision=1 (roll back to revision 1)

DaemonSet

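Ensures that exactly one replica of a pod runs on every node in the cluster. (It can also be limited to a subset of nodes, with exactly one pod replica on each, for example to monitor SSD disks.)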

# kubectl explain ds

# vim filebeat.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: redis
      namespace: default
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: redis
          role: logstor
      template:
        metadata:
          labels:
            app: redis
            role: logstor
        spec:
          containers:
          - name: redis
            image: redis:4.0-alpine
            ports:
            - name: redis
              containerPort: 6379
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: filebeat-ds
      namespace: default
    spec:
      selector:
        matchLabels:
          app: filebeat
          release: stable
      template:
        metadata:
          labels:
            app: filebeat
            release: stable
        spec:
          containers:
          - name: filebeat
            image: ikubernetes/filebeat:5.6.5-alpine
            env:
            - name: REDIS_HOST
              value: redis.default.svc.cluster.local
            - name: REDIS_LOG_LEVEL
              value: info

# kubectl apply -f filebeat.yaml

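# kubectl get pods -l app=filebeat -o wide (two pods run because the cluster currently has two worker nodes; by default nothing is scheduled on the master because the master is tainted)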

filebeat-ds-chxl6 1/1 Running 1 8m 10.244.2.37 node2

filebeat-ds-rmnxq 1/1 Running 0 8m 10.244.1.35 node1

# kubectl logs filebeat-ds-chxl6

# kubectl expose deployment redis --port=6379

# kubectl describe ds filebeat-ds

# Rolling updates are supported

kubectl set image daemonsets filebeat-ds filebeat=ikubernetes/filebeat:5.6.6-alpine

# kubectl explain pod.spec

hostNetwork (a DaemonSet pod can share the host's network namespace and serve traffic directly on the node's address)
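A minimal sketch of a DaemonSet using `hostNetwork`, assuming port 80 is free on every node. The resource name and label are hypothetical; the image is reused from the earlier examples.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: hostnet-demo         # hypothetical name
  namespace: default
spec:
  selector:
    matchLabels:
      app: hostnet-demo
  template:
    metadata:
      labels:
        app: hostnet-demo
    spec:
      hostNetwork: true      # the pod uses the node's network namespace
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1
        ports:
        - name: http
          containerPort: 80  # reachable on the node's own IP, port 80
```

Since each node runs exactly one such pod, the port does not collide with another replica on the same host.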

Job

Starts the user-specified number N of pods; whether a pod is recreated depends on whether its task has completed.
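A rough sketch of a Job, with a hypothetical name and a placeholder command. `completions` is the number of successful pod runs required, and a failed pod is retried up to `backoffLimit` times.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: job-demo             # hypothetical name
  namespace: default
spec:
  completions: 3             # the task must succeed 3 times in total
  parallelism: 1             # run one pod at a time
  backoffLimit: 4            # give up after 4 failed retries
  template:
    spec:
      restartPolicy: OnFailure   # a Job only accepts OnFailure or Never
      containers:
      - name: worker
        image: busybox:latest
        command: ["/bin/sh", "-c", "echo working; sleep 10"]   # placeholder task
```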

CronJob

Periodic (scheduled) tasks
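A CronJob wraps a Job template in a cron-style schedule. The sketch below assumes a cluster where `batch/v1` serves CronJob (Kubernetes 1.21 or later); older clusters, including those contemporary with these notes, use `batch/v1beta1`. The name and command are placeholders.

```yaml
apiVersion: batch/v1         # assumption: Kubernetes 1.21+; use batch/v1beta1 on older clusters
kind: CronJob
metadata:
  name: cronjob-demo         # hypothetical name
  namespace: default
spec:
  schedule: "*/5 * * * *"    # every 5 minutes, standard cron syntax
  concurrencyPolicy: Forbid  # skip a run if the previous one is still going
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
          - name: task
            image: busybox:latest
            command: ["/bin/sh", "-c", "date"]   # placeholder task
```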

StatefulSet

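For stateful applications; each pod replica is managed individually. Application-specific operational steps have to be scripted and added to the pod template.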
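As an illustration of per-replica identity, the sketch below pairs a StatefulSet with the headless Service it needs. All names are hypothetical, and the image is reused from the earlier examples even though it is not actually stateful. The pods are created in order and get stable names, `myapp-sts-0` and `myapp-sts-1`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-headless       # hypothetical name
  namespace: default
spec:
  clusterIP: None            # headless: DNS resolves to the individual pods
  selector:
    app: myapp-sts
  ports:
  - name: http
    port: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: myapp-sts            # hypothetical name
  namespace: default
spec:
  serviceName: myapp-headless   # must name the headless Service above
  replicas: 2
  selector:
    matchLabels:
      app: myapp-sts
  template:
    metadata:
      labels:
        app: myapp-sts
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1
        ports:
        - name: http
          containerPort: 80
```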

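TPR: ThirdPartyResource (introduced in 1.2, deprecated in 1.7)

CRD: CustomResourceDefinition (available since 1.7; replaces TPR, which was removed in 1.8)

Operator: packages the application-specific operational logic (so far available for only a few applications, such as etcd and Prometheus)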
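For reference, a custom resource type is itself declared with a manifest. The following is a sketch only: the group `example.com` and the `Backup` kind are invented for illustration, and it assumes a cluster that serves `apiextensions.k8s.io/v1` (Kubernetes 1.16 or later; earlier releases use `v1beta1` with a slightly different layout).

```yaml
apiVersion: apiextensions.k8s.io/v1   # assumption: Kubernetes 1.16+
kind: CustomResourceDefinition
metadata:
  name: backups.example.com           # must be <plural>.<group>; hypothetical
spec:
  group: example.com                  # hypothetical API group
  scope: Namespaced
  names:
    plural: backups
    singular: backup
    kind: Backup                      # hypothetical kind
  versions:
  - name: v1
    served: true
    storage: true
    schema:
      openAPIV3Schema:                # v1 requires a structural schema
        type: object
        properties:
          spec:
            type: object
            properties:
              schedule:
                type: string
```

An Operator typically combines a definition like this with a controller that watches the new objects and acts on them.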