Integrating Ceph with an OpenStack environment

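I. RBD provides storage for the following data:

(1) images: stores the Glance images, which otherwise live in /var/lib/glance/images/

(2) volumes: stores the Cinder volumes, including the volume created when "create new volume" is selected while launching a VM

(3) vms: stores the disks of VMs launched without selecting "create new volume"; without Ceph these disks default to /var/lib/nova/instances/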

II. Implementation steps:

(1) The client nodes also need the cent user:

useradd cent && echo "123" | passwd --stdin cent

echo -e 'Defaults:cent !requiretty\ncent ALL = (root) NOPASSWD:ALL' | tee /etc/sudoers.d/ceph

chmod 440 /etc/sudoers.d/ceph

(2) On the OpenStack nodes that will use Ceph (for example compute-node and storage-node), install the downloaded packages:

yum localinstall ./* -y

Alternatively, install the client packages on every node that needs to access the Ceph cluster:

yum install python-rbd

yum install ceph-common

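If the clients were installed with the first method, these two packages are already present, because they are included in the downloaded RPMs.

(3) On the deploy node, install Ceph for the OpenStack node: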

ceph-deploy install controller

ceph-deploy admin controller

(4) On the client, run:

sudo chmod 644 /etc/ceph/ceph.client.admin.keyring

(5) Create the pools; this only needs to be done on one Ceph node:

[root@node1 ~]# ceph osd pool create images 128 # choose the PG count according to the number of OSDs

[root@node1 ~]# ceph osd pool create vms 128

[root@node1 ~]# ceph osd pool create volumes 128

List the pools:

[root@node1 ~]# ceph osd lspools
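
The 128 in the pool commands above is the placement-group count, which the comment ties to the number of OSDs. A minimal sketch of one commonly cited rule of thumb follows: about 100 PGs per OSD divided by the replica count, rounded up to a power of two. The rule, the helper name suggest_pg_num and the example values are assumptions for illustration; this walkthrough does not state how 128 was chosen.

```shell
# suggest_pg_num OSDS REPLICAS
# Rule-of-thumb sketch (an assumption, not taken from this guide):
# (OSDs * 100) / replicas, rounded up to the next power of two.
suggest_pg_num() {
    local osds=$1 replicas=$2
    local target=$(( osds * 100 / replicas ))
    local pg=1
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

suggest_pg_num 3 3   # 3 OSDs, 3 replicas: prints 128
```

The result is a total budget for the cluster, so with several pools each pool would normally get a share of it rather than the full number.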

(6) In the Ceph cluster, create the glance and cinder users; this only needs to be done on one Ceph node:

[root@node1 ~]# ceph auth get-or-create client.glance mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=images'

[root@node1 ~]# ceph auth get-or-create client.cinder mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=volumes, allow rwx pool=vms, allow rx pool=images'

Nova uses the cinder user, so no separate user is created for it.

(7) Export and copy the Ceph keyrings; this only needs to be done on one Ceph node:

[root@node1 ~]# cd /etc/ceph/

[root@node1 ceph]# ceph auth get-or-create client.glance > /etc/ceph/ceph.client.glance.keyring

[root@node1 ceph]# ceph auth get-or-create client.cinder > /etc/ceph/ceph.client.cinder.keyring

Copy the keyrings to the other nodes with scp:

[root@node1 ceph]# scp ceph.client.cinder.keyring ceph.client.glance.keyring node2:/etc/ceph/

[root@node1 ceph]# scp ceph.client.cinder.keyring ceph.client.glance.keyring node3:/etc/ceph/

(8) Change the ownership of the keyring files (run on all client nodes):

[root@node1 ceph]# chown glance:glance /etc/ceph/ceph.client.glance.keyring

[root@node1 ceph]# chown cinder:cinder /etc/ceph/ceph.client.cinder.keyring

(9) Set up libvirt access to Ceph (only needed on nova-compute nodes; do this on every compute node):

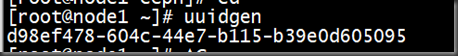

[root@node1 ~]# uuidgen

In the /etc/ceph/ directory (the directory does not matter; /etc/ceph simply keeps things easy to manage):

[root@node1 ceph]# vim secret.xml

<secret ephemeral='no' private='no'>

<uuid>d98ef478-604c-44e7-b115-b39e0d605095</uuid> <!-- put the UUID generated by uuidgen here -->

<usage type='ceph'>

<name>client.cinder secret</name>

</usage>

</secret>

[root@node1 ceph]# virsh secret-define --file secret.xml

[root@node1 ceph]# ceph auth get-key client.cinder > ./client.cinder.key

[root@node1 ceph]# virsh secret-set-value --secret d98ef478-604c-44e7-b115-b39e0d605095 --base64 $(cat ./client.cinder.key) # the UUID from secret.xml

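In the end the secret.xml file is identical on every compute node. Note the UUID generated earlier (940f0485-e206-4b49-b878-dcd0cb9c70a4 in the configuration files later in this document) and run the following steps on each compute node. The UUID in secret.xml, in virsh secret-set-value and in rbd_secret_uuid must be one and the same value, so substitute your own UUID wherever the examples show d98ef478-604c-44e7-b115-b39e0d605095 or 940f0485-e206-4b49-b878-dcd0cb9c70a4: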

virsh secret-define --file secret.xml

ceph auth get-key client.cinder > ./client.cinder.key

virsh secret-set-value --secret d98ef478-604c-44e7-b115-b39e0d605095 --base64 $(cat ./client.cinder.key)
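
Because every compute node needs the same secret.xml, the file can be generated from the UUID instead of being edited by hand on each node. The following is a minimal sketch; the function name write_secret_xml and the /tmp output path are hypothetical, and the XML body is the one shown above.

```shell
# write_secret_xml UUID [FILE]
# Writes the libvirt secret definition shown above for the given UUID.
# FILE defaults to secret.xml in the current directory.
write_secret_xml() {
    local uuid=$1 out=${2:-secret.xml}
    cat > "$out" <<EOF
<secret ephemeral='no' private='no'>
  <uuid>${uuid}</uuid>
  <usage type='ceph'>
    <name>client.cinder secret</name>
  </usage>
</secret>
EOF
}

# Example with one of the UUIDs used in this document (hypothetical output path).
write_secret_xml 940f0485-e206-4b49-b878-dcd0cb9c70a4 /tmp/secret.xml
```

The generated file can then be passed to virsh secret-define --file exactly as in the steps above.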

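If you hit the following error, a secret with the same usage name is already defined under a different UUID; undefine the old secret and define it again: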

[root@controller ceph]# virsh secret-define --file secret.xml

error: Failed to set attributes from secret.xml

error: internal error: a secret with UUID d448a6ee-60f3-42a3-b6fa-6ec69cab2378 already defined for use with client.cinder secret

[root@controller ~]# virsh secret-list

UUID Usage

--------------------------------------------------------------------------------

d448a6ee-60f3-42a3-b6fa-6ec69cab2378 ceph client.cinder secret

[root@controller ~]# virsh secret-undefine d448a6ee-60f3-42a3-b6fa-6ec69cab2378

Secret d448a6ee-60f3-42a3-b6fa-6ec69cab2378 deleted

[root@controller ~]# virsh secret-list

UUID Usage

--------------------------------------------------------------------------------

[root@controller ceph]# virsh secret-define --file secret.xml

Secret 940f0485-e206-4b49-b878-dcd0cb9c70a4 created

[root@controller ~]# virsh secret-list

UUID Usage

--------------------------------------------------------------------------------

940f0485-e206-4b49-b878-dcd0cb9c70a4 ceph client.cinder secret

virsh secret-set-value --secret 940f0485-e206-4b49-b878-dcd0cb9c70a4 --base64 $(cat ./client.cinder.key)
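
The recovery shown in the transcript above (find the conflicting secret, undefine it, define again) can be scripted. The sketch below only covers the lookup step: it reads the output of virsh secret-list on standard input and prints the UUID registered for a given usage name. The function name find_secret_uuid is hypothetical, and the parsing assumes the column layout shown in the transcript.

```shell
# find_secret_uuid USAGE_NAME
# Reads `virsh secret-list` output on stdin and prints the UUID whose
# usage column is "ceph USAGE_NAME". Assumes rows of the form:
#   <uuid>  ceph <usage name, possibly containing spaces>
find_secret_uuid() {
    awk -v name="$1" '
        $2 == "ceph" {
            usage = $3
            for (i = 4; i <= NF; i++) usage = usage " " $i
            if (usage == name) print $1
        }'
}

# Demo on the listing from the transcript; prints d448a6ee-60f3-42a3-b6fa-6ec69cab2378
find_secret_uuid "client.cinder secret" <<'EOF'
 UUID                                  Usage
--------------------------------------------------------------------------------
 d448a6ee-60f3-42a3-b6fa-6ec69cab2378  ceph client.cinder secret
EOF

# Intended use on a compute node (requires libvirt):
#   old=$(virsh secret-list | find_secret_uuid "client.cinder secret")
#   [ -n "$old" ] && virsh secret-undefine "$old"
```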

(10) Log in to the OpenStack dashboard

Delete all existing instances, images and volumes.

(11) Configure Glance; make the following changes on all controller nodes:

[root@node1 ~]# vim /etc/glance/glance-api.conf

[DEFAULT]

default_store = rbd

[cors]

[cors.subdomain]

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

[glance_store]

stores = rbd

default_store = rbd

rbd_store_pool = images

rbd_store_user = glance

rbd_store_ceph_conf = /etc/ceph/ceph.conf

rbd_store_chunk_size = 8

[image_format]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = glance

[matchmaker_redis]

[oslo_concurrency]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[paste_deploy]

flavor = keystone

[profiler]

[store_type_location_strategy]

[task]

[taskflow_executor]

[root@node1 glance]# systemctl restart openstack-glance-api.service
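
With this many empty sections in the file it is easy to put an option under the wrong header. A minimal sketch of a lookup helper for checking a single option follows; the name ini_get is hypothetical, and the parser is deliberately simple (plain key = value lines, section headers with nothing after the closing bracket).

```shell
# ini_get FILE SECTION KEY
# Prints the value of KEY inside [SECTION]; prints nothing if it is absent.
ini_get() {
    awk -v section="[$2]" -v key="$3" '
        /^\[/ { in_section = ($0 == section); next }   # track the current section
        in_section && /^[ \t]*[#;]/ { next }           # skip comment lines
        in_section {
            pos = index($0, "=")
            if (pos == 0) next
            k = substr($0, 1, pos - 1)
            v = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) print v
        }' "$1"
}

# Demo on a throwaway file that mimics the settings above.
conf=$(mktemp)
printf '[DEFAULT]\ndefault_store = rbd\n[glance_store]\nstores = rbd\nrbd_store_pool = images\n' > "$conf"
ini_get "$conf" glance_store rbd_store_pool   # prints: images
rm -f "$conf"
```

On a controller the same call would be ini_get /etc/glance/glance-api.conf glance_store rbd_store_pool.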

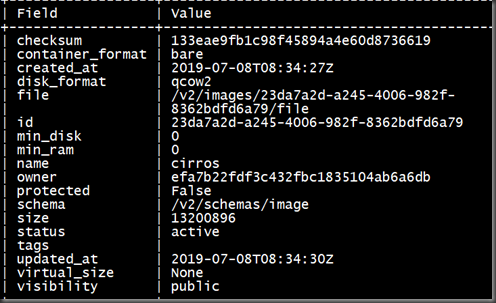

Create an image to verify:

[root@node1 ~]# ls

[root@node1 ~]# cd openstack-ocata/

[root@node1 openstack-ocata]# openstack image create "cirros" --file cirros-0.3.3-x86_64-disk.img --disk-format qcow2 --container-format bare --public # use the name of the image you want to upload

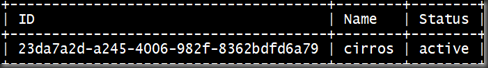

[root@node1 ~]# openstack image list

[root@node1 ~]# rbd ls images

The image was uploaded successfully.

(12) Configure Cinder on the storage node:

[root@node3 ~]# cd /etc/cinder/

[root@node3 cinder]# vim cinder.conf

[DEFAULT]

my_ip = 172.16.254.63

glance_api_servers = http://controller:9292

auth_strategy = keystone

enabled_backends = ceph

state_path = /var/lib/cinder

transport_url = rabbit://openstack:admin@controller

[backend]

[barbican]

[brcd_fabric_example]

[cisco_fabric_example]

[coordination]

[cors]

[cors.subdomain]

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

[fc-zone-manager]

[healthcheck]

[key_manager]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[matchmaker_redis]

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[profiler]

[ssl]

# Append this section at the end of the file

[ceph]

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_pool = volumes

rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_flatten_volume_from_snapshot = false

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = -1

glance_api_version = 2

rbd_user = cinder

# Replace with your own libvirt secret UUID

rbd_secret_uuid = 940f0485-e206-4b49-b878-dcd0cb9c70a4

volume_backend_name=ceph

Restart the cinder services:

[root@node1 ~]# systemctl restart openstack-cinder-api.service openstack-cinder-scheduler.service

[root@node3 ~]# systemctl restart openstack-cinder-volume.service

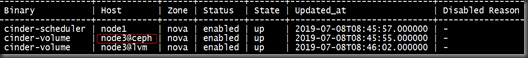

[root@node1 ~]# cinder service-list

Now create a volume named test1 in the dashboard, then verify that it shows up in the volumes pool:

[root@node1 ~]# rbd ls volumes

(13) Configure Nova on the controller nodes node1 and node2:

[root@node1 ~]# cd /etc/nova/

[root@node1 nova]# vim nova.conf

[DEFAULT]

my_ip=172.16.254.63

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

enabled_apis=osapi_compute,metadata

transport_url = rabbit://openstack:admin@controller

[api]

auth_strategy = keystone

[api_database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

[barbican]

[cache]

[cells]

[cinder]

os_region_name = RegionOne

[cloudpipe]

[conductor]

[console]

[consoleauth]

[cors]

[cors.subdomain]

[crypto]

[database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://controller:9292

[guestfs]

[healthcheck]

[hyperv]

[image_file_url]

[ironic]

[key_manager]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = nova

[libvirt]

virt_type=qemu

images_type = rbd

images_rbd_pool = vms

images_rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_user = cinder

# Replace with your own libvirt secret UUID

rbd_secret_uuid = 940f0485-e206-4b49-b878-dcd0cb9c70a4

[matchmaker_redis]

[metrics]

[mks]

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

service_metadata_proxy = true

metadata_proxy_shared_secret = METADATA_SECRET

[notifications]

[osapi_v21]

[oslo_concurrency]

lock_path=/var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_messaging_zmq]

[oslo_middleware]

[oslo_policy]

[pci]

[placement]

os_region_name = RegionOne

auth_type = password

auth_url = http://controller:35357/v3

project_name = service

project_domain_name = Default

username = placement

password = placement

user_domain_name = Default

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[ssl]

[trusted_computing]

[upgrade_levels]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled=true

vncserver_listen=$my_ip

vncserver_proxyclient_address=$my_ip

novncproxy_base_url = http://172.16.254.63:6080/vnc_auto.html

[workarounds]

[wsgi]

[xenserver]

[xvp]

Restart the nova services:

[root@node1 ~]# systemctl restart openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-compute.service

[root@node2 ~]# systemctl restart openstack-nova-compute.service
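
Both cinder.conf and nova.conf carry an rbd_secret_uuid, and both must match the UUID of the libvirt secret defined in step (9). The sketch below compares the value across any number of files; the function names secret_uuid_of and check_secret_uuids are hypothetical, and the extraction simply takes the first rbd_secret_uuid line in each file.

```shell
# secret_uuid_of FILE
# Prints the first rbd_secret_uuid value in FILE, dropping anything after the UUID.
secret_uuid_of() {
    sed -n 's/^[[:space:]]*rbd_secret_uuid[[:space:]]*=[[:space:]]*\([0-9a-fA-F-]*\).*/\1/p' "$1" | head -n 1
}

# check_secret_uuids FILE...
# Succeeds only if every file defines rbd_secret_uuid and all values are equal.
check_secret_uuids() {
    local first="" uuid f
    for f in "$@"; do
        uuid=$(secret_uuid_of "$f")
        [ -n "$first" ] || first=$uuid
        if [ -z "$uuid" ] || [ "$uuid" != "$first" ]; then
            echo "mismatch in $f: '${uuid}'" >&2
            return 1
        fi
    done
    echo "all files use $first"
}

# Intended use on a node that has both files:
#   check_secret_uuids /etc/cinder/cinder.conf /etc/nova/nova.conf
```

The printed UUID can then be compared by eye with the output of virsh secret-list on each compute node.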

Create a VM to verify:

[root@node2 ~]# rbd ls vms

d9e8843f-f2de-434d-806f-2856cbc00214_disk
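
The entry listed in the vms pool is the instance UUID followed by _disk, so the listing can be turned back into instance IDs and compared with what OpenStack reports. A minimal sketch, with the hypothetical helper name instance_ids_from_rbd:

```shell
# instance_ids_from_rbd
# Reads `rbd ls vms` output on stdin and prints the part before "_disk"
# for every entry that ends with that suffix.
instance_ids_from_rbd() {
    sed -n 's/_disk$//p'
}

# Demo with the entry shown above; prints d9e8843f-f2de-434d-806f-2856cbc00214
echo "d9e8843f-f2de-434d-806f-2856cbc00214_disk" | instance_ids_from_rbd

# Intended use on a node with Ceph and OpenStack clients:
#   rbd ls vms | instance_ids_from_rbd
#   openstack server list -f value -c ID
```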