Apache Kafka™ is a distributed streaming platform. What exactly does that mean?

We think of a streaming platform as having three key capabilities:

- It lets you publish and subscribe to streams of records. In this respect it is similar to a message queue or enterprise messaging system.

- It lets you store streams of records in a fault-tolerant way.

- It lets you process streams of records as they occur.

What is Kafka good for?

It is used for two broad classes of application:

1. Building real-time streaming data pipelines that reliably move data between systems or applications.

2. Building real-time streaming applications that transform or react to streams of data.

To understand how Kafka does these things, let's dive in and explore Kafka's capabilities from the bottom up.

First, a few concepts:

- Kafka is run as a cluster on one or more servers.

- The Kafka cluster stores streams of records in categories called topics.

- Each record consists of a key, a value, and a timestamp.

Kafka has four core APIs:

1. The Producer API allows an application to publish a stream of records to one or more Kafka topics.

2. The Consumer API allows an application to subscribe to one or more topics and process the stream of records produced to them.

3. The Streams API allows an application to act as a stream processor, consuming an input stream from one or more topics and producing an output stream to one or more output topics, effectively transforming the input streams to output streams.

4. The Connector API allows building and running reusable producers or consumers that connect Kafka topics to existing applications or data systems. For example, a connector to a relational database might capture every change to a table.

In Kafka, communication between clients and servers uses a simple, high-performance, language-agnostic TCP protocol. This protocol is versioned and maintains backwards compatibility with older versions. We provide a Java client for Kafka, but clients are available in many languages.

Topics and Logs

Let's first dive into the core abstraction Kafka provides for a stream of records: the topic.

A topic is a category or feed name to which records are published. Topics in Kafka are always multi-subscriber; that is, a topic can have zero, one, or many consumers that subscribe to the data written to it.

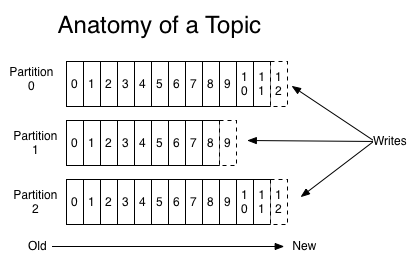

For each topic, the Kafka cluster maintains a partitioned log that looks like this: 对于每个主题,Kafka集群维护一个分区日志,如下所示:

Each partition is an ordered, immutable sequence of records that is continually appended to, forming a structured commit log. Each record in a partition is assigned a sequential id number called the offset, which uniquely identifies the record within that partition.
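As a rough illustration of the offset idea, here is a toy in-memory partition in Python. It is only a sketch: the `Record` and `PartitionLog` names are made up for this example and are not part of any Kafka client, and a real partition lives on disk and is replicated.

```python
from dataclasses import dataclass, field
import time


@dataclass(frozen=True)
class Record:
    """A record as described above: key, value, and timestamp."""
    key: str
    value: str
    timestamp: float


@dataclass
class PartitionLog:
    """Toy append-only log: a record's offset is its position in the list."""
    records: list = field(default_factory=list)

    def append(self, key, value):
        record = Record(key, value, time.time())
        self.records.append(record)
        return len(self.records) - 1  # offset assigned to the new record

    def read(self, offset):
        return self.records[offset]


log = PartitionLog()
first = log.append("user-1", "login")
second = log.append("user-2", "click")
```

Because records are only ever appended, an offset, once handed out, keeps pointing at the same record.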

The Kafka cluster retains all published records, whether or not they have been consumed, for a configurable retention period. For example, if the retention policy is set to two days, then a record is available for consumption for two days after it is published, after which it is discarded to free up space. Kafka's performance is effectively constant with respect to data size, so storing data for a long time is not a problem.
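The retention rule above can be sketched as a simple time filter. This is a toy model, not how a broker is implemented: the function name `apply_retention` and the `(publish_time, value)` tuples are assumptions for illustration, and the sketch ignores how a real broker organizes data on disk.

```python
def apply_retention(records, now, retention_seconds):
    """Keep only records published within the retention window.

    `records` is a list of (publish_time, value) pairs, oldest first.
    Whether a record was ever consumed plays no part in the decision.
    """
    cutoff = now - retention_seconds
    return [(ts, value) for ts, value in records if ts >= cutoff]


TWO_DAYS = 2 * 24 * 60 * 60  # 172800 seconds
records = [(0, "a"), (100_000, "b"), (200_000, "c")]
kept = apply_retention(records, now=250_000, retention_seconds=TWO_DAYS)
```

Here the record published at time 0 is older than two days at `now=250_000` and is dropped; the other two remain.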

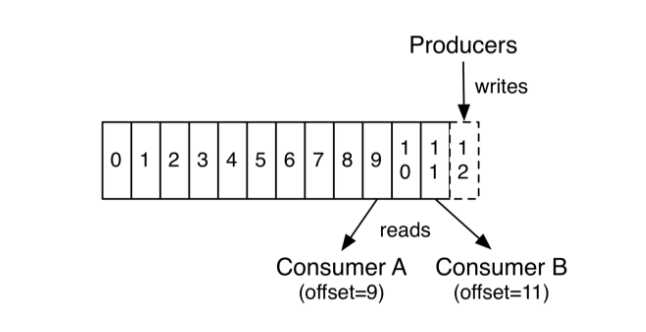

In fact, the only metadata retained on a per-consumer basis is the offset, or position, of that consumer in the log. This offset is controlled by the consumer: normally a consumer advances its offset linearly as it reads records, but because the consumer controls the position, it can consume records in any order it likes. For example, a consumer can reset to an older offset to reprocess data from the past, or skip ahead to the most recent record and start consuming from "now".
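A minimal sketch of a consumer-controlled position, assuming a plain Python list stands in for one partition. The class and method names (`ToyConsumer`, `poll`, `seek`, `seek_to_end`) are invented for this example and should not be read as a real client API.

```python
class ToyConsumer:
    """Tracks a read position in a list that stands in for one partition."""

    def __init__(self, log):
        self.log = log
        self.offset = 0

    def poll(self):
        """Return the next record, or None when caught up."""
        if self.offset >= len(self.log):
            return None
        record = self.log[self.offset]
        self.offset += 1
        return record

    def seek(self, offset):
        self.offset = offset

    def seek_to_end(self):
        self.offset = len(self.log)


log = ["r0", "r1", "r2"]
consumer = ToyConsumer(log)
seen = [consumer.poll(), consumer.poll()]  # normal linear reads
consumer.seek(0)                           # rewind to reprocess old data
replayed = consumer.poll()
consumer.seek_to_end()                     # skip ahead to "now"
log.append("r3")
latest = consumer.poll()
```

Note that nothing in the log changes when the consumer moves; the position is the consumer's own state.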

This combination of features means that Kafka consumers are very cheap: they can come and go without much impact on the cluster or on other consumers. For example, you can use our command line tools to "tail" the contents of any topic without changing what is consumed by any existing consumers.

The partitions in the log serve several purposes. First, they allow the log to scale beyond a size that will fit on a single server. Each individual partition must fit on the servers that host it, but a topic may have many partitions, so it can handle an arbitrary amount of data. Second, they act as the unit of parallelism; more on that in a bit.

Distribution

The partitions of the log are distributed over the servers in the Kafka cluster with each server handling data and requests for a share of the partitions. Each partition is replicated across a configurable number of servers for fault tolerance.

Each partition has one server which acts as the "leader" and zero or more servers which act as "followers". The leader handles all read and write requests for the partition while the followers passively replicate the leader. If the leader fails, one of the followers will automatically become the new leader. Each server acts as a leader for some of its partitions and a follower for others, so load is well balanced within the cluster.
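The leader and follower roles can be pictured with the toy model below. It is a sketch under loud assumptions: brokers are plain integers, and promoting the first remaining follower is a choice made only for this example; the text above does not say how the new leader is picked.

```python
class ToyPartition:
    """One partition with a leader and followers, identified by broker id."""

    def __init__(self, replicas):
        self.leader = replicas[0]
        self.followers = list(replicas[1:])

    def fail(self, broker):
        """Remove a failed broker; promote a follower if it was the leader."""
        if broker == self.leader:
            if not self.followers:
                raise RuntimeError("no replica left to lead the partition")
            # Assumption for illustration: the first follower takes over.
            self.leader = self.followers.pop(0)
        elif broker in self.followers:
            self.followers.remove(broker)


partition = ToyPartition(replicas=[1, 2, 3])
partition.fail(1)  # the leader fails, so a follower is promoted
```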

Producers

Producers publish data to the topics of their choice. The producer is responsible for choosing which partition within the topic each record is assigned to. This can be done in a round-robin fashion simply to balance load, or it can be done according to some semantic partition function (say, based on some key in the record). More on the use of partitioning in a second!
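The two partitioning strategies just described might look like this in miniature. This is a sketch, not the partitioner any real client ships: `make_partitioner` is a hypothetical helper, and CRC32 is used only as a convenient, deterministic stand-in hash.

```python
import itertools
import zlib


def make_partitioner(num_partitions):
    """Return a function mapping an optional record key to a partition."""
    round_robin = itertools.cycle(range(num_partitions))

    def choose(key=None):
        if key is None:
            return next(round_robin)  # no key: just spread the load
        # Same key -> same partition, so records for a key stay together.
        return zlib.crc32(key.encode("utf-8")) % num_partitions

    return choose


choose = make_partitioner(4)
unkeyed = [choose() for _ in range(5)]
```

Keyed partitioning is what lets per-key ordering survive: all records with the same key land in one partition, and each partition is ordered.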

Consumers 消费者

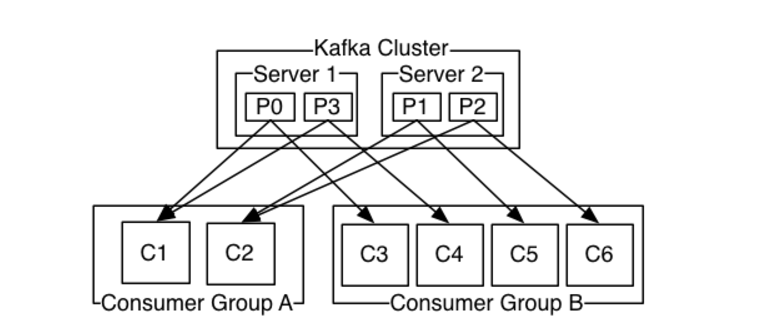

Consumers label themselves with a consumer group name, and each record published to a topic is delivered to one consumer instance within each subscribing consumer group. Consumer instances can be in separate processes or on separate machines.

消费者使用消费者组名称标注自己,并将发布到主题的每条记录传递到每个订阅消费者组中的一个消费者实例。 消费者实例可以在单独的进程中或在单独的机器上。

If all the consumer instances have the same consumer group, then the records will effectively be load balanced over the consumer instances

如果所有消费者实例具有相同的消费者组,则记录将在消费者实例上有效地负载平衡。

If all the consumer instances have different consumer groups, then each record will be broadcast to all the consumer processes.

如果所有消费者实例具有不同的消费者群体,则每个记录将被广播给所有消费者进程。

A two server Kafka cluster hosting four partitions (P0-P3) with two consumer groups. Consumer group A has two consumer instances and group B has four.
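The delivery rule (one consumer instance per subscribing group receives each record) can be sketched as follows. The `deliver` function is hypothetical, and picking group members in rotation per record is an assumption made to keep the example short; it only illustrates the load-balancing versus broadcast outcome.

```python
from collections import defaultdict


def deliver(records, groups):
    """Deliver each record to exactly one member of every group.

    `groups` maps a group name to its list of consumer names. Members
    are picked in rotation here purely for illustration.
    """
    inbox = defaultdict(list)
    for i, record in enumerate(records):
        for members in groups.values():
            inbox[members[i % len(members)]].append(record)
    return dict(inbox)


records = ["m0", "m1", "m2", "m3"]
shared = deliver(records, {"A": ["c1", "c2"]})           # one group
separate = deliver(records, {"A": ["c1"], "B": ["c2"]})  # two groups
```

With one shared group the records are split between `c1` and `c2`; with two single-member groups both consumers receive every record.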

Guarantees

At a high level, Kafka gives the following guarantees:

1. Messages sent by a producer to a particular topic partition will be appended in the order they are sent. That is, if a record M1 is sent by the same producer as a record M2, and M1 is sent first, then M1 will have a lower offset than M2 and appear earlier in the log.

2. A consumer instance sees records in the order they are stored in the log.

3. For a topic with replication factor N, we will tolerate up to N-1 server failures without losing any records committed to the log.

More details on these guarantees are given in the design section of the documentation.

Kafka as a Messaging System

How does Kafka's notion of streams compare to a traditional enterprise messaging system?

Messaging traditionally has two models: queuing and publish-subscribe. In a queue, a pool of consumers may read from a server and each record goes to one of them; in publish-subscribe the record is broadcast to all consumers. Each of these two models has a strength and a weakness. The strength of queuing is that it allows you to divide up the processing of data over multiple consumer instances, which lets you scale your processing. Unfortunately, queues aren't multi-subscriber: once one process reads the data, it's gone. Publish-subscribe allows you to broadcast data to multiple processes, but has no way of scaling processing since every message goes to every subscriber.

The consumer group concept in Kafka generalizes these two concepts. As with a queue, the consumer group allows you to divide up processing over a collection of processes (the members of the consumer group). As with publish-subscribe, Kafka allows you to broadcast messages to multiple consumer groups.

The advantage of Kafka's model is that every topic has both these properties: it can scale processing and is also multi-subscriber, so there is no need to choose one or the other.

Kafka has stronger ordering guarantees than a traditional messaging system, too.

A traditional queue retains records in order on the server, and if multiple consumers consume from the queue then the server hands out records in the order they are stored. However, although the server hands out records in order, the records are delivered asynchronously to consumers, so they may arrive out of order on different consumers. This effectively means the ordering of the records is lost in the presence of parallel consumption. Messaging systems often work around this by having a notion of "exclusive consumer" that allows only one process to consume from a queue, but of course this means that there is no parallelism in processing.

Kafka does it better. By having a notion of parallelism (the partition) within the topics, Kafka is able to provide both ordering guarantees and load balancing over a pool of consumer processes. This is achieved by assigning the partitions in the topic to the consumers in the consumer group so that each partition is consumed by exactly one consumer in the group. By doing this we ensure that the consumer is the only reader of that partition and consumes the data in order. Since there are many partitions, this still balances the load over many consumer instances. Note, however, that a consumer group cannot usefully have more consumer instances than partitions: any extra instances would have no partition to read from.
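The assignment idea can be sketched in a few lines. The round-robin strategy and the name `assign_partitions` are assumptions for this example; the text only requires that each partition ends up with exactly one consumer in the group.

```python
def assign_partitions(partitions, consumers):
    """Give every partition to exactly one consumer in the group."""
    assignment = {consumer: [] for consumer in consumers}
    for i, partition in enumerate(partitions):
        assignment[consumers[i % len(consumers)]].append(partition)
    return assignment


partitions = ["P0", "P1", "P2", "P3"]
two = assign_partitions(partitions, ["c1", "c2"])
five = assign_partitions(partitions, ["c1", "c2", "c3", "c4", "c5"])
```

With two consumers each one reads two partitions in order. With five consumers and four partitions, `c5` ends up with an empty list, which is the "more consumers than partitions" case mentioned above.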

Kafka as a Storage System

Any message queue that allows publishing messages decoupled from consuming them is effectively acting as a storage system for the in-flight messages. What is different about Kafka is that it is a very good storage system.

Data written to Kafka is written to disk and replicated for fault tolerance. Kafka allows producers to wait on acknowledgement so that a write isn't considered complete until it is fully replicated and guaranteed to persist even if the server written to fails.

The disk structures Kafka uses scale well: Kafka will perform the same whether you have 50 KB or 50 TB of persistent data on the server.

As a result of taking storage seriously and allowing the clients to control their read position, you can think of Kafka as a kind of special-purpose distributed filesystem dedicated to high-performance, low-latency commit log storage, replication, and propagation.

For details about Kafka's commit log storage and replication design, please read this page.

Kafka for Stream Processing

It isn't enough to just read, write, and store streams of data; the purpose is to enable real-time processing of streams.