I. Installing ZooKeeper

Installation reference: https://www.cnblogs.com/tonglin0325/p/7039747.html

- Download ZooKeeper

Version 3.4 is recommended: https://www.apache.org/dyn/closer.lua/zookeeper/zookeeper-3.4.14/zookeeper-3.4.14.tar.gz

- Extract the archive

tar -xzf zookeeper-3.4.14.tar.gz

- Edit the ZooKeeper configuration

    # Copy zoo_sample.cfg to zoo.cfg, then edit it
    cp zookeeper-3.4.14/conf/zoo_sample.cfg zookeeper-3.4.14/conf/zoo.cfg
    vim zookeeper-3.4.14/conf/zoo.cfg

    # The number of milliseconds of each tick
    tickTime=2000
    # The number of ticks that the initial
    # synchronization phase can take
    initLimit=10
    # The number of ticks that can pass between
    # sending a request and getting an acknowledgement
    syncLimit=5
    # the directory where the snapshot is stored.
    # do not use /tmp for storage, /tmp here is just
    # example sakes.
    dataDir=/home/up/thirdApplications/zookeeperData/data
    dataLogDir=/home/up/thirdApplications/zookeeperData/logs
    # the port at which the clients will connect
    clientPort=2181
    # the maximum number of client connections.
    # increase this if you need to handle more clients
    #maxClientCnxns=60
    #
    # Be sure to read the maintenance section of the
    # administrator guide before turning on autopurge.
    #
    # http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
    #
    # The number of snapshots to retain in dataDir
    #autopurge.snapRetainCount=3
    # Purge task interval in hours
    # Set to "0" to disable auto purge feature
    #autopurge.purgeInterval=1
    server.1=0.0.0.0:2888:3888
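Note that initLimit and syncLimit are counted in ticks rather than milliseconds, so their effective durations depend on tickTime. As a rough sketch, the helper below (zk_timeouts is a hypothetical name, not part of ZooKeeper) reads a zoo.cfg-style file and prints both limits in milliseconds. It assumes plain key=value lines with no surrounding whitespace.

```shell
# Hypothetical helper, not shipped with ZooKeeper: converts the tick-based
# limits in a zoo.cfg-style file into milliseconds.
# Assumes plain key=value lines without surrounding whitespace.
zk_timeouts() {
  awk -F= '
    $1 == "tickTime"  { tick = $2 }
    $1 == "initLimit" { init_ticks = $2 }
    $1 == "syncLimit" { sync_ticks = $2 }
    END {
      printf "initLimit_ms=%d\n", tick * init_ticks
      printf "syncLimit_ms=%d\n", tick * sync_ticks
    }
  ' "$1"
}

# Usage: zk_timeouts zookeeper-3.4.14/conf/zoo.cfg
```

With tickTime=2000, initLimit=10 and syncLimit=5, this works out to 20000 ms for the initial synchronization phase and 10000 ms for request acknowledgement.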

- Start ZooKeeper

cd zookeeper-3.4.14

bin/zkServer.sh start

- Check the status

bin/zkServer.sh status
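The status command reports the server's role on a line beginning with "Mode:" (standalone for a single node, leader or follower in a cluster). A small sketch for pulling that value out in a script follows; zk_mode is a hypothetical helper name, not part of ZooKeeper.

```shell
# Hypothetical helper: reads `zkServer.sh status` output on stdin and prints
# the value of the "Mode:" line. Returns non-zero if no such line is present
# (for example, when the server is not running).
zk_mode() {
  awk -F': ' '$1 == "Mode" { print $2; found = 1 } END { exit !found }'
}

# Usage: bin/zkServer.sh status 2>&1 | zk_mode
```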

- Stop ZooKeeper

bin/zkServer.sh stop

II. Installing Kafka

Kafka documentation (Chinese): https://kafka.apachecn.org/quickstart.html

- Download and extract

Kafka releases (all versions): https://mirrors.tuna.tsinghua.edu.cn/apache/kafka/

tar -xzf kafka.tar.gz

- Check whether the ZooKeeper service is running

  - ZooKeeper is already installed

ps -aux | grep 'zookeeper'

  - ZooKeeper is not installed

If ZooKeeper is not installed, you can use the script bundled with Kafka to start a simple single-node ZooKeeper instance.

    # Enter the Kafka directory
    cd kafka
    bin/zookeeper-server-start.sh config/zookeeper.properties
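Whichever way ZooKeeper was started, a plain ps | grep also matches the grep process itself, which can mislead a script. One possible refinement is sketched below. It assumes the server process runs the org.apache.zookeeper.server.quorum.QuorumPeerMain class, which to my knowledge is the main class launched by both zkServer.sh and Kafka's bundled script. zk_running is a hypothetical helper name.

```shell
# Hypothetical helper: reads a process listing on stdin and succeeds if a
# ZooKeeper server process appears in it.
# The bracket in the pattern keeps grep from matching its own command line.
zk_running() {
  grep -q '[Q]uorumPeerMain'
}

# Usage: ps aux | zk_running && echo "ZooKeeper is running"
```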

- Start Kafka

bin/kafka-server-start.sh config/server.properties

- Stop Kafka

bin/kafka-server-stop.sh

III. Using Kafka

1. Create a topic

cd kafka

bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic test_topic

2. List the created topics

bin/kafka-topics.sh --list --zookeeper localhost:2181

3. Produce data with the console producer

bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test_topic

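Kafka ships with a command-line client that reads input from a file or from standard input and sends it to the Kafka cluster as messages. By default, each line is sent as a separate message.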
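Because the producer reads standard input line by line, messages can also be piped in rather than typed interactively. A minimal sketch follows: gen_messages is a hypothetical helper that prints numbered test lines, and the usage comment reuses the producer command from this step.

```shell
# Hypothetical helper: prints N numbered lines, one message per line.
gen_messages() {
  n=$1
  i=1
  while [ "$i" -le "$n" ]; do
    echo "message-$i"
    i=$((i + 1))
  done
}

# Usage (run from the Kafka directory; sends three separate messages):
# gen_messages 3 | bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test_topic
```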

4. Check the number of messages in Kafka

bin/kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list localhost:9092 --topic test_topic

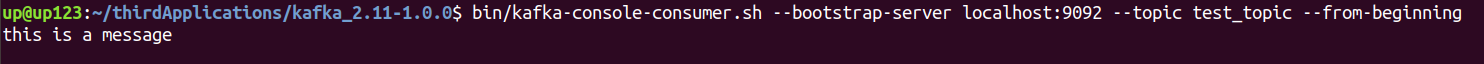
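GetOffsetShell prints one line per partition in the form topic:partition:offset, so for a topic with several partitions the total is the sum of the third field. A small sketch for that follows (sum_offsets is a hypothetical helper name). Keep in mind that this is the latest offset, which matches the message count only as long as nothing has been removed by retention.

```shell
# Hypothetical helper: sums the per-partition offsets from GetOffsetShell
# output (lines of the form topic:partition:offset) read on stdin.
sum_offsets() {
  awk -F: '{ total += $3 } END { print total + 0 }'
}

# Usage (run from the Kafka directory):
# bin/kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list localhost:9092 --topic test_topic | sum_offsets
```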

5. Consume data with the console consumer

bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test_topic --from-beginning

6. Check the number of messages in Kafka again

Kafka does not delete data once it has been consumed, so the messages written by the producer are still there; only the consumer's offset changes.

bin/kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list localhost:9092 --topic test_topic