Server environment:

192.168.1.105

192.168.1.160

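Each server runs 3 nodes, giving a Redis cluster of 3 masters and 3 replicas.

Note: the firewall must allow every port Redis listens on, otherwise cluster creation fails. That includes each node's cluster bus port (client port + 10000, for example 17000 for port 7000), which the nodes use to talk to each other.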
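As a rough sketch of what opening the ports involves, the helper below prints the commands for a range of nodes. It assumes firewalld (firewall-cmd) is the firewall in use, which the setup above does not state, and print_firewall_cmds is a hypothetical name. The commands are only printed, so they can be reviewed before being run as root.

```shell
#!/bin/sh
# Print (do not run) firewalld commands for each Redis client port
# and its cluster bus port.
# Assumption: firewalld is the active firewall; adapt for iptables or others.
print_firewall_cmds() {
    first=$1
    last=$2
    port=$first
    while [ "$port" -le "$last" ]; do
        bus=$((port + 10000))   # cluster bus port = client port + 10000
        echo "firewall-cmd --permanent --add-port=${port}/tcp"
        echo "firewall-cmd --permanent --add-port=${bus}/tcp"
        port=$((port + 1))
    done
    echo "firewall-cmd --reload"
}

# Ports for 192.168.1.105; use 7003 7005 on 192.168.1.160.
print_firewall_cmds 7000 7002
```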

I. Installing Redis Cluster

1. Download, compile, and install

    cd /home/xm6f/dev
    wget http://download.redis.io/releases/redis-3.2.4.tar.gz
    tar -zxvf redis-3.2.4.tar.gz
    cd redis-3.2.4/
    make && make install

2. Create the Redis nodes

The two servers are 192.168.1.105 and 192.168.1.160. Each runs 3 nodes, giving a Redis cluster of 3 masters and 3 replicas.

a. First, create 3 nodes on 192.168.1.105:

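    cd /home/xm6f/dev
    mkdir redis_cluster      # create the cluster directory
    cd redis_cluster/
    mkdir 7000 7001 7002     # one directory per node, for ports 7000, 7001, and 7002
    cd ..                    # back to /home/xm6f/dev so the relative paths below resolve
    # copy redis.conf into the 7000 directory
    cp redis-3.2.4/redis.conf redis_cluster/7000/
    # copy redis.conf into the 7001 directory
    cp redis-3.2.4/redis.conf redis_cluster/7001/
    # copy redis.conf into the 7002 directory
    cp redis-3.2.4/redis.conf redis_cluster/7002/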

b. In each of the 3 redis.conf files (in the 7000, 7001, and 7002 directories), change the following settings:

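    # run Redis in the background
    daemonize yes
    # pid file; use 7000, 7001, or 7002 to match the node
    pidfile /var/run/redis_7000.pid
    # port: 7000, 7001, or 7002
    port 7000
    # enable cluster mode (uncomment this line)
    cluster-enabled yes
    # cluster state file (nodes_7000.conf, nodes_7001.conf, nodes_7002.conf);
    # generated automatically on first start, in /home/xm6f/dev/redis-3.2.4/src
    cluster-config-file nodes_7000.conf
    # node timeout in milliseconds; 5 seconds is enough
    cluster-node-timeout 5000
    # enable the AOF log if you need it; it records every write operation
    appendonly yes
    # the logs directory must already exist
    logfile "/home/xm6f/dev/redis_cluster/7000/logs/redis.log"
    # bind to this server's IP; otherwise cluster communication fails with:
    # [ERR] Sorry, can't connect to node 192.168.200.140:7001
    bind 192.168.1.105
    # stored in /home/xm6f/dev/redis-3.2.4/src
    dbfilename dump_7000.rdb
    # stored in /home/xm6f/dev/redis-3.2.4/src
    appendfilename "appendonly_7000.aof"
    # password; must be identical on every node
    requirepass 123456
    # password replicas use to authenticate to their master; same value as requirepass
    masterauth 123456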

On 192.168.1.160, create 3 nodes the same way, using ports 7003, 7004, and 7005, and adjust the settings (including bind) to match.
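Editing six files by hand is easy to get wrong, so the per-node settings can also be generated from the port and IP. The following is a minimal sketch, not the author's method: node_settings is a hypothetical helper, and the values simply mirror the settings listed above.

```shell
#!/bin/sh
# Print the cluster-related settings for one node.
# node_settings is a hypothetical helper; values mirror the settings above.
node_settings() {
    port=$1
    ip=$2
    cat <<EOF
daemonize yes
pidfile /var/run/redis_${port}.pid
port ${port}
bind ${ip}
cluster-enabled yes
cluster-config-file nodes_${port}.conf
cluster-node-timeout 5000
appendonly yes
appendfilename "appendonly_${port}.aof"
dbfilename dump_${port}.rdb
logfile "/home/xm6f/dev/redis_cluster/${port}/logs/redis.log"
requirepass 123456
masterauth 123456
EOF
}

# Example: print the settings for the three nodes on 192.168.1.160.
for p in 7003 7004 7005; do
    node_settings "$p" 192.168.1.160
done
```

One way to use it is to append the output to the copied file, for example node_settings 7003 192.168.1.160 >> redis_cluster/7003/redis.conf. Redis uses the last occurrence of a directive, so the appended values take effect over the stock defaults.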

3. Start the nodes on both machines (the procedure is the same on each server)

    cd /home/xm6f/dev/redis-3.2.4/src

    # on 192.168.1.105
    ./redis-server ../../redis_cluster/7000/redis.conf &
    ./redis-server ../../redis_cluster/7001/redis.conf &
    ./redis-server ../../redis_cluster/7002/redis.conf &

    # on 192.168.1.160
    ./redis-server ../../redis_cluster/7003/redis.conf &
    ./redis-server ../../redis_cluster/7004/redis.conf &
    ./redis-server ../../redis_cluster/7005/redis.conf &

4. Check the services

    ps -ef | grep redis           # check that the processes started
    netstat -tnlp | grep redis    # shows the ports Redis is listening on
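Beyond checking processes and ports, each node can be asked to answer a PING. This is a small sketch under a few assumptions: redis-cli is on the PATH (make install normally puts it there), the password is the 123456 set above, and check_node is a hypothetical helper name.

```shell
#!/bin/sh
# check_node HOST PORT: report whether a node answers PING.
# REDIS_CLI can be overridden if redis-cli is not on the PATH.
REDIS_CLI=${REDIS_CLI:-redis-cli}

check_node() {
    host=$1
    port=$2
    # -a supplies the requirepass password from redis.conf
    reply=$("$REDIS_CLI" -h "$host" -p "$port" -a 123456 ping 2>/dev/null)
    if [ "$reply" = "PONG" ]; then
        echo "$host:$port up"
    else
        echo "$host:$port DOWN"
        return 1
    fi
}

# Usage:
#   for port in 7000 7001 7002; do check_node 192.168.1.105 "$port"; done
#   for port in 7003 7004 7005; do check_node 192.168.1.160 "$port"; done
```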

5. Kill all Redis processes

    pkill -9 redis

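II. Creating the cluster

The Redis nodes are now ready; next they need to be joined into a cluster. Redis ships a tool for this, redis-trib.rb (/home/xm6f/dev/redis-3.2.4/src/redis-trib.rb). As the .rb suffix indicates, it is a Ruby script, so Ruby must be installed before it can run.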

    yum -y install ruby ruby-devel rubygems rpm-build

Then use gem, Ruby's package manager, to install the redis client library:

    gem install redis    # takes a moment

For convenience, install these on both servers.

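Note: gem install redis may fail with "ERROR: Error installing redis: redis requires Ruby version >= 2.2.2". This means the installed Ruby is older than 2.2.2; upgrade Ruby to 2.2.2 or later, then run gem install redis again.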
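To find out in advance whether the installed Ruby meets the 2.2.2 requirement from the error message, the versions can be compared before running gem. This is a minimal sketch; version_ge is a hypothetical helper, and it relies on sort -V from GNU coreutils.

```shell
#!/bin/sh
# version_ge A B: succeed when version A >= version B.
# Relies on `sort -V` (version sort), available in GNU coreutils.
version_ge() {
    lowest=$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
}

required=2.2.2
# RUBY_VERSION is a constant built into Ruby; fall back to 0 if ruby is missing.
current=$(ruby -e 'print RUBY_VERSION' 2>/dev/null || echo 0)

if version_ge "$current" "$required"; then
    echo "Ruby $current is new enough for the redis gem"
else
    echo "Ruby $current is older than $required; upgrade before running gem install redis"
fi
```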

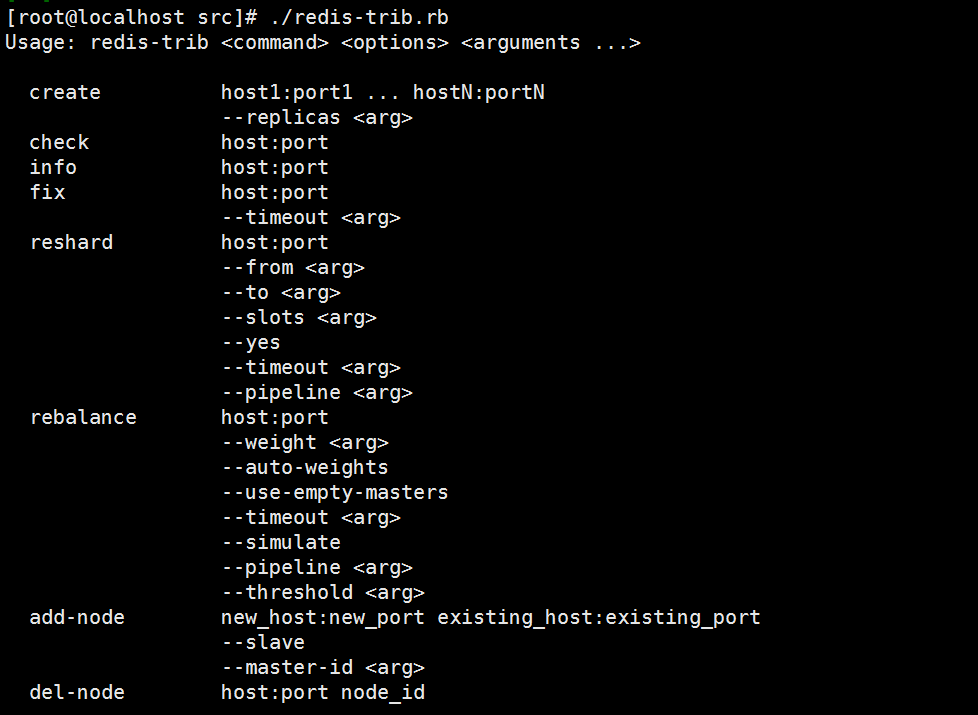

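Once the steps above are done, run redis-trib.rb:

    cd /home/xm6f/dev/redis-3.2.4/src
    ./redis-trib.rb

Run without arguments, it prints its usage. The subcommands listed there are what we use to build the Redis cluster.

Make sure all nodes are running, then create the cluster with the create subcommand (run this on 192.168.1.160):

    ./redis-trib.rb create --replicas 1 192.168.1.105:7000 192.168.1.105:7001 192.168.1.105:7002 192.168.1.160:7003 192.168.1.160:7004 192.168.1.160:7005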

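Notes:

a. --replicas 1 means one replica is created for each master; the remaining arguments are the addresses of the instances.

b. The firewall must allow the listening ports, otherwise cluster creation fails.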
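To make the effect of --replicas concrete: with 6 nodes and 1 replica per master there are 6 / (1 + 1) = 3 masters, and the 16384 hash slots are divided among them. The sketch below works through that arithmetic. slot_ranges is a hypothetical helper, and its rounding is an approximation chosen so that it reproduces the ranges in the sample output that follows; it is not taken from the redis-trib.rb source.

```shell
#!/bin/sh
# slot_ranges NODES REPLICAS: print the slot range each master would receive.
# Hypothetical helper; the rounding is an approximation that matches
# the ranges shown in the sample output.
TOTAL_SLOTS=16384

slot_ranges() {
    nodes=$1
    replicas=$2
    masters=$((nodes / (replicas + 1)))   # each master needs `replicas` replicas
    if [ "$masters" -lt 3 ]; then
        echo "need at least 3 masters, got $masters" >&2
        return 1
    fi
    first=0
    i=1
    while [ "$i" -le "$masters" ]; do
        if [ "$i" -eq "$masters" ]; then
            last=$((TOTAL_SLOTS - 1))     # last master takes the remainder
        else
            # round(i * TOTAL_SLOTS / masters - 1) using integer arithmetic
            last=$(( (2 * i * TOTAL_SLOTS - masters) / (2 * masters) ))
        fi
        echo "master $i: slots ${first}-${last} ($((last - first + 1)) slots)"
        first=$((last + 1))
        i=$((i + 1))
    done
}

slot_ranges 6 1
```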

    [root@localhost redis-cluster]# ./redis-trib.rb create --replicas 1 192.168.1.105:7000 192.168.1.105:7001 192.168.1.105:7002 192.168.1.160:7003 192.168.1.160:7004 192.168.1.160:7005
    >>> Creating cluster
    >>> Performing hash slots allocation on 6 nodes...
    Using 3 masters:
    127.0.0.1:7001
    127.0.0.1:7002
    127.0.0.1:7003
    Adding replica 127.0.0.1:7004 to 127.0.0.1:7001
    Adding replica 127.0.0.1:7005 to 127.0.0.1:7002
    Adding replica 127.0.0.1:7006 to 127.0.0.1:7003
    M: dfd510594da614469a93a0a70767ec9145aefb1a 127.0.0.1:7001
       slots:0-5460 (5461 slots) master
    M: e02eac35110bbf44c61ff90175e04d55cca097ff 127.0.0.1:7002
       slots:5461-10922 (5462 slots) master
    M: 4385809e6f4952ecb122dbfedbee29109d6bb234 127.0.0.1:7003
       slots:10923-16383 (5461 slots) master
    S: ec02c9ef3acee069e8849f143a492db18d4bb06c 127.0.0.1:7004
       replicates dfd510594da614469a93a0a70767ec9145aefb1a
    S: 83e5a8bb94fb5aaa892cd2f6216604e03e4a6c75 127.0.0.1:7005
       replicates e02eac35110bbf44c61ff90175e04d55cca097ff
    S: 10c097c429ca24f8720986c6b66f0688bfb901ee 127.0.0.1:7006
       replicates 4385809e6f4952ecb122dbfedbee29109d6bb234
    Can I set the above configuration? (type 'yes' to accept): yes
    >>> Nodes configuration updated
    >>> Assign a different config epoch to each node
    >>> Sending CLUSTER MEET messages to join the cluster
    Waiting for the cluster to join......
    >>> Performing Cluster Check (using node 127.0.0.1:7001)
    M: dfd510594da614469a93a0a70767ec9145aefb1a 127.0.0.1:7001
       slots:0-5460 (5461 slots) master
    M: e02eac35110bbf44c61ff90175e04d55cca097ff 127.0.0.1:7002
       slots:5461-10922 (5462 slots) master
    M: 4385809e6f4952ecb122dbfedbee29109d6bb234 127.0.0.1:7003
       slots:10923-16383 (5461 slots) master
    M: ec02c9ef3acee069e8849f143a492db18d4bb06c 127.0.0.1:7004
       slots: (0 slots) master
       replicates dfd510594da614469a93a0a70767ec9145aefb1a
    M: 83e5a8bb94fb5aaa892cd2f6216604e03e4a6c75 127.0.0.1:7005
       slots: (0 slots) master
       replicates e02eac35110bbf44c61ff90175e04d55cca097ff
    M: 10c097c429ca24f8720986c6b66f0688bfb901ee 127.0.0.1:7006
       slots: (0 slots) master
       replicates 4385809e6f4952ecb122dbfedbee29109d6bb234
    [OK] All nodes agree about slots configuration.
    >>> Check for open slots...
    >>> Check slots coverage...
    [OK] All 16384 slots covered.

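The output above shows that the cluster was created successfully, with 3 masters and 3 replicas, and that every node is connected. (The addresses in this sample output are 127.0.0.1:7001 through 127.0.0.1:7006 rather than the two servers used here; the format is the same.)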

To view the cluster node information, run check against any one node:

    ./redis-trib.rb check 192.168.1.105:7002

    [root@localhost src]# ./redis-trib.rb check 192.168.1.105:7002
    >>> Performing Cluster Check (using node 192.168.1.105:7002)
    S: 910e14d9655a1e6e7fc007e799006d3f0d1cebe5 192.168.1.105:7002
       slots: (0 slots) slave
       replicates 94f51658302cb5f1d178f14caaa79f27a9ac3703
    M: 94f51658302cb5f1d178f14caaa79f27a9ac3703 192.168.1.160:7003
       slots:5461-10922 (5462 slots) master
       1 additional replica(s)
    M: 28a51a8e34920e2d48fc1650a9c9753ff73dad5d 192.168.1.105:7000
       slots:0-5460 (5461 slots) master
       1 additional replica(s)
    M: 6befec567ca7090eb3731e48fd5275a9853fb394 192.168.1.105:7001
       slots:10923-16383 (5461 slots) master
       1 additional replica(s)
    S: 54ca4fbc71257fd1be5b58d0f545b95d65f8f6b8 192.168.1.160:7005
       slots: (0 slots) slave
       replicates 6befec567ca7090eb3731e48fd5275a9853fb394
    S: cf02aa3d58d48215d9d61121eedd194dc5c50eeb 192.168.1.160:7004
       slots: (0 slots) slave
       replicates 28a51a8e34920e2d48fc1650a9c9753ff73dad5d
    [OK] All nodes agree about slots configuration.
    >>> Check for open slots...
    >>> Check slots coverage...
    [OK] All 16384 slots covered.

The cluster is now installed successfully.
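The same health check can be scripted through redis-cli instead of redis-trib.rb, since the CLUSTER INFO command reports a cluster_state field. The following is a small sketch; cluster_state is a hypothetical helper name, and the password is the 123456 configured earlier.

```shell
#!/bin/sh
# cluster_state: read CLUSTER INFO output on stdin and print the
# value of the cluster_state field ("ok" or "fail").
cluster_state() {
    # Lines may end in \r\n; strip the \r before matching.
    tr -d '\r' | sed -n 's/^cluster_state://p'
}

# Demo with canned input; prints "ok".
printf 'cluster_state:ok\r\ncluster_slots_assigned:16384\r\n' | cluster_state

# Against a live node:
#   redis-cli -h 192.168.1.105 -p 7000 -a 123456 cluster info | cluster_state
```

When reading or writing keys by hand, start redis-cli with -c so that it follows the redirections between nodes.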

    ./redis-trib.rb create --replicas 1 10.104.111.174:7000 10.104.111.174:7001 10.104.111.174:7002 10.135.172.233:7003 10.135.172.233:7004 10.135.172.233:7005

Running this command hangs indefinitely at "Waiting for the cluster to join".

Checking with redis-trib.rb check 192.168.18.111:6379 reports: [ERR] Not all 16384 slots are covered by nodes.

Fix: swap the order of the two servers' nodes in the create command:

    ./redis-trib.rb create --replicas 1 10.135.172.233:7003 10.135.172.233:7004 10.135.172.233:7005 10.104.111.174:7000 10.104.111.174:7001 10.104.111.174:7002

This run succeeds.