I. Lab architecture and environment

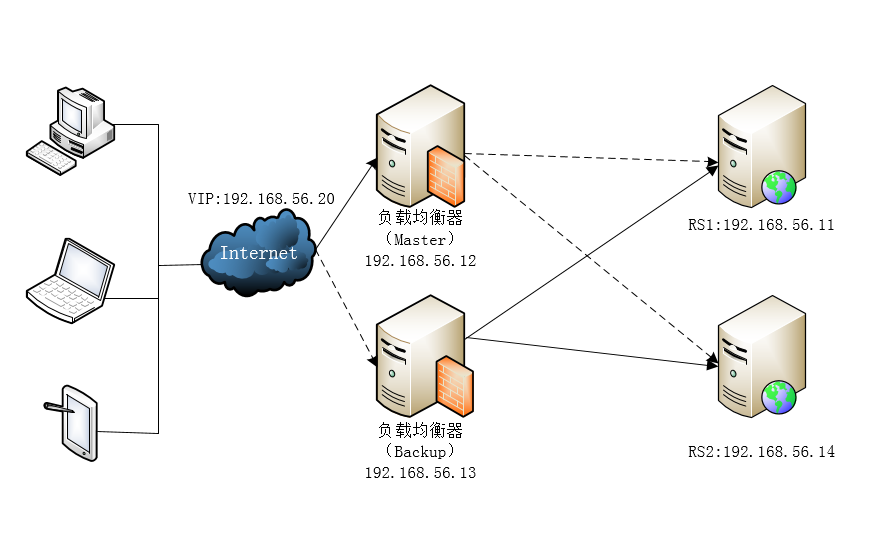

(1) This lab uses VMware Workstation to build a server cluster of four Linux (CentOS 7.4) machines: two load balancers (one master, one backup) and two real Web servers.

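(2) The lab uses the LVS DR (direct routing) load-balancing mode with a VIP (Virtual IP) of 192.168.56.20; users only need to access this address to reach the web service. The master load balancer (lb01) is 192.168.56.12 and the backup (lb02) is 192.168.56.13. Web server RS1 is 192.168.56.11 and Web server RS2 is 192.168.56.14.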

II. Configure the two Web servers

(1) Deploy Nginx on the real servers and set up the home page

    [root@rs1 ~]# yum install -y nginx
    [root@rs2 ~]# yum install -y nginx
    [root@rs1 ~]# echo "<h1>welcome to use RS1 192.168.56.11</h1>" > /usr/share/nginx/html/index.html
    [root@rs2 ~]# echo "<h1>welcome to use RS2 192.168.56.14</h1>" > /usr/share/nginx/html/index.html
    [root@rs1 ~]# curl 192.168.56.11
    <h1>welcome to use RS1 192.168.56.11</h1>
    [root@rs2 ~]# curl 192.168.56.14
    <h1>welcome to use RS2 192.168.56.14</h1>

(2) Create and run the realserver script on rs1 and rs2; the rs1 script is shown here

    [root@rs1 ~]# vim /etc/init.d/realserver
    #!/bin/bash
    # Bind the VIP to lo:0 and suppress ARP for it (LVS DR real server).
    SNS_VIP=192.168.56.20
    # Source the init helper functions
    . /etc/init.d/functions
    case "$1" in
    start)
        # /32 mask so the VIP is accepted locally without adding a subnet route
        ifconfig lo:0 $SNS_VIP netmask 255.255.255.255 broadcast $SNS_VIP
        /sbin/route add -host $SNS_VIP dev lo:0
        # arp_ignore=1: reply only if the target IP is configured on the receiving interface
        # arp_announce=2: always use the best local source address in ARP requests
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        sysctl -p >/dev/null 2>&1
        echo "RealServer Start OK"
        ;;
    stop)
        ifconfig lo:0 down
        route del $SNS_VIP >/dev/null 2>&1
        # Restore the default ARP behaviour
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "RealServer Stopped"
        ;;
    *)
        echo "Usage: $0 {start|stop}"
        exit 1
    esac
    exit 0
    [root@rs1 ~]# chmod +x /etc/init.d/realserver
    [root@rs1 ~]# /etc/init.d/realserver start
    RealServer Start OK
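After running the script, it can be worth confirming that the four ARP parameters really hold the values the script writes. The following is a minimal sketch, not part of the original procedure: the function name `check_arp_params` and its optional root-directory argument are assumptions introduced here for illustration.

```shell
#!/bin/bash
# check_arp_params [PROC_ROOT]   (hypothetical helper, not from the original article)
# Prints one line per parameter and returns non-zero if any value differs
# from what the realserver script sets (arp_ignore=1, arp_announce=2).
# PROC_ROOT defaults to /proc/sys; it is a parameter only so the function
# can also be tried against a copy of the tree.
check_arp_params() {
    local root="${1:-/proc/sys}"
    local rc=0 iface name want file got
    for iface in lo all; do
        for name in arp_ignore arp_announce; do
            case "$name" in
                arp_ignore)   want=1 ;;
                arp_announce) want=2 ;;
            esac
            file="$root/net/ipv4/conf/$iface/$name"
            got=$(cat "$file" 2>/dev/null)
            if [ "$got" = "$want" ]; then
                echo "OK   $iface/$name = $got"
            else
                echo "FAIL $iface/$name = ${got:-unreadable} (expected $want)"
                rc=1
            fi
        done
    done
    return $rc
}
```

On rs1 or rs2, calling `check_arp_params` with no argument reads the live values under /proc/sys.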

III. Configure the master and backup load balancers

(1) Install Keepalived on lb01 and lb02

    [root@lb01 ~]# yum install -y keepalived
    [root@lb02 ~]# yum install -y keepalived

(2) Edit the keepalived.conf configuration file on lb01 and lb02

    [root@lb01 ~]# cp /etc/keepalived/keepalived.conf{,.bak}    # back up the original file
    [root@lb01 ~]# > /etc/keepalived/keepalived.conf            # empty the original file
    [root@lb01 ~]# vim /etc/keepalived/keepalived.conf          # edit keepalived.conf
    ! Configuration File for keepalived
    global_defs {
        notification_email {
            123456@qq.com
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id lb01
    }
    vrrp_instance VI_1 {
        state MASTER              # Keepalived role: MASTER for the master, BACKUP for the backup
        interface eth0
        virtual_router_id 55      # virtual router ID, must be identical on master and backup
        priority 150              # priority, a larger value means higher priority
        advert_int 1              # VRRP advertisement interval in seconds, default 1
        authentication {
            auth_type PASS        # authentication type
            auth_pass 1111        # authentication password
        }
        virtual_ipaddress {
            192.168.56.20/24      # the DR virtual IP; several may be set, one per line
        }
    }
    virtual_server 192.168.56.20 80 {    # LVS serves the VIP 192.168.56.20 on port 80
        delay_loop 6              # health check interval in seconds
        lb_algo wrr               # scheduling algorithm: weighted round robin
        lb_kind DR                # LVS forwarding method: DR mode
        nat_mask 255.255.255.0
        persistence_timeout 20    # session persistence timeout in seconds
        protocol TCP              # the virtual service uses TCP
        real_server 192.168.56.11 80 {   # real server address and port
            weight 100            # weight
            TCP_CHECK {
                connect_timeout 10    # connection timeout in seconds
                nb_get_retry 3        # number of connection retries
                connect_port 80       # port to connect to
            }
        }
        real_server 192.168.56.14 80 {
            weight 100
            TCP_CHECK {
                connect_timeout 10
                nb_get_retry 3
                connect_port 80
            }
        }
    }

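The backup load balancer is configured almost identically to the master; only two things in the keepalived configuration file need to change:

(1) Change state from MASTER to BACKUP

(2) Change priority from 150 to 99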
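The two changes can also be applied mechanically rather than by hand. The sketch below assumes the master file contains the literal settings `state MASTER` and `priority 150` shown in the lb01 configuration; the function name `make_backup_conf` is hypothetical, and it leaves every other line (including `router_id`) untouched.

```shell
#!/bin/bash
# make_backup_conf   (hypothetical helper, not from the original article)
# Reads the master keepalived.conf on stdin and writes a backup variant to
# stdout, applying only the two changes listed above. Trailing comments on
# the matched lines are preserved.
make_backup_conf() {
    sed -e 's/state[[:space:]]\{1,\}MASTER/state BACKUP/' \
        -e 's/priority[[:space:]]\{1,\}150/priority 99/'
}

# Example (run on lb01, then copy the result to lb02 by whatever means you use):
#   make_backup_conf < /etc/keepalived/keepalived.conf > /tmp/keepalived.conf.lb02
```

Writing to a separate file first makes it easy to diff the result against the master configuration before installing it on lb02.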

Once configuration is complete, start Keepalived

    [root@lb01 ~]# systemctl start keepalived
    [root@lb02 ~]# systemctl start keepalived
    [root@lb01 ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.56.20:80 wrr persistent 20
      -> 192.168.56.11:80             Route   100    0          0
      -> 192.168.56.14:80             Route   100    0          0
    [root@lb02 ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.56.20:80 wrr persistent 20
      -> 192.168.56.11:80             Route   100    0          0
      -> 192.168.56.14:80             Route   100    0          0
    [root@lb01 ~]# ip addr |grep 192.168.56.20    # check whether the VIP is present on lb01
        inet 192.168.56.20/24 scope global secondary eth0
    [root@lb02 ~]# ip addr |grep 192.168.56.20    # check whether the VIP is present on lb02; if it is, there is a split brain
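The split-brain check above can be scripted by comparing the `ip addr` output of both directors. This is only a sketch: the helper names `has_vip` and `report_vip_state` are hypothetical, and it assumes you have saved each director's `ip addr` output to a file yourself (for example over SSH, which the original setup does not describe).

```shell
#!/bin/bash
# has_vip VIP   (hypothetical helper)
# Reads `ip addr` output on stdin; succeeds if an "inet" line carries VIP.
has_vip() {
    local vip="$1"
    awk -v vip="$vip" '
        $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END { exit (found ? 0 : 1) }
    '
}

# report_vip_state VIP FILE_LB01 FILE_LB02   (hypothetical helper)
# Each file holds the saved `ip addr` output of one director.
report_vip_state() {
    local vip="$1" n=0 f
    for f in "$2" "$3"; do
        if has_vip "$vip" < "$f"; then
            n=$((n + 1))
        fi
    done
    case "$n" in
        0) echo "no director holds $vip" ;;
        1) echo "exactly one director holds $vip" ;;
        *) echo "both directors hold $vip: possible split brain" ;;
    esac
}

# Example, assuming the two outputs were saved beforehand:
#   report_vip_state 192.168.56.20 lb01.txt lb02.txt
```

Matching on the address field rather than using a plain `grep` avoids false positives from longer addresses that merely contain the VIP as a prefix.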

IV. Verification: access http://192.168.56.20

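(1) Balanced forwarding of requests: because the two Web servers have the same weight, the wrr scheduler assigns new clients to them in turn. Note that with persistence_timeout 20 set, requests from the same client stay on the same Web server while the persistence entry is valid.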
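One way to observe the distribution is to send a batch of requests to the VIP and count which home page comes back. The sketch below is an illustration only; `tally_responses` is a hypothetical helper name, and it relies on the two home pages having different text, as configured earlier. Because the configuration sets persistence_timeout 20, a batch sent from a single client may all be counted under one server.

```shell
#!/bin/bash
# tally_responses   (hypothetical helper, not from the original article)
# Reads one response body per line on stdin and prints "<count><TAB><body>",
# most frequent first.
tally_responses() {
    awk '{ count[$0]++ } END { for (body in count) print count[body] "\t" body }' |
        sort -rn
}

# Example, run from a client machine on the 192.168.56.0/24 network:
#   for i in $(seq 1 10); do curl -s http://192.168.56.20/; done | tally_responses
```

Running the same loop from a second client, or after the persistence entry has expired, gives a better picture of how wrr spreads clients across the two servers.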

(2) When a Web server fails

Simulate a failure of 192.168.56.14 by stopping its Nginx service, then access 192.168.56.20 again: pages are now served only by 192.168.56.11.

    [root@rs2 html]# /etc/init.d/nginx stop
    Stopping nginx: [ OK ]

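The load balancer's status output also shows that 192.168.56.14 has been removed from the cluster. Once the fault is repaired, checking the scheduler status again shows that rs2 has rejoined the cluster.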

    [root@lb01 ~]# ipvsadm -L -n    # check the scheduling table on lb01: rs2 has been removed from the cluster
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.56.20:80 wrr persistent 20
      -> 192.168.56.11:80             Route   100    0          2

    [root@rs2 html]# /etc/init.d/nginx start    # start the nginx service on rs2 again
    Starting nginx: [ OK ]
    [root@lb01 ~]# ipvsadm -L -n    # rs2 has rejoined the cluster and is serving again
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.56.20:80 wrr persistent 20
      -> 192.168.56.11:80             Route   100    2          1
      -> 192.168.56.14:80             Route   100    0          0
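Rather than reading the table by eye, the real-server rows can be extracted from the `ipvsadm -L -n` output. This is a small sketch under the assumption that the output has the column layout shown above; `list_real_servers` and `in_pool` are hypothetical helper names.

```shell
#!/bin/bash
# list_real_servers   (hypothetical helper, not from the original article)
# Reads `ipvsadm -L -n` output on stdin and prints "<address:port> <weight>"
# for every real server row. The header row also starts with "->", so rows
# are recognised by an address that begins with a digit.
list_real_servers() {
    awk '$1 == "->" && $2 ~ /^[0-9]/ { print $2, $4 }'
}

# in_pool ADDRESS:PORT   (hypothetical helper)
# Succeeds if the given real server appears in the table read from stdin.
in_pool() {
    list_real_servers | awk -v a="$1" '$1 == a { f = 1 } END { exit (f ? 0 : 1) }'
}

# Example on lb01:
#   ipvsadm -L -n | list_real_servers
#   ipvsadm -L -n | in_pool 192.168.56.14:80 && echo "rs2 is in the pool"
```

Run before and after stopping Nginx on rs2, `in_pool 192.168.56.14:80` should fail and then succeed again once the health check has re-added the server.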

(3) When the master load balancer fails, the backup takes over the master role and continues forwarding requests

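Simulate a failure by stopping the keepalived service on lb01: the VIP moves from lb01 to lb02, and access to the VIP continues unaffected. When the service on the master load balancer (lb01) is restored, the VIP moves back to lb01.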

    [root@lb01 ~]# ip addr |grep 192.168.56.20
        inet 192.168.56.20/24 scope global secondary eth0
    [root@lb01 ~]# systemctl stop keepalived
    [root@lb01 ~]# ip addr |grep 192.168.56.20
    [root@lb02 ~]# ip addr |grep 192.168.56.20
        inet 192.168.56.20/24 scope global secondary eth0