1. Configure ceph.repo and install the deployment tool ceph-deploy

```
[root@ceph-node1 ~]# vim /etc/yum.repos.d/ceph.repo
[ceph]
name=Ceph packages for $basearch
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/$basearch
enabled=1
gpgcheck=1
priority=1
type=rpm-md
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

[ceph-noarch]
name=Ceph noarch packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/noarch
enabled=1
gpgcheck=1
priority=1
type=rpm-md
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

[ceph-source]
name=Ceph source packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/SRPMS
enabled=0
gpgcheck=1
type=rpm-md
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
priority=1
[root@ceph-node1 ~]# yum install -y https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm
[root@ceph-node1 ~]# yum makecache
[root@ceph-node1 ~]# yum update -y
[root@ceph-node1 ~]# yum install -y ceph-deploy
```
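You can also write the repo file from a script instead of editing it in vim. Below is a minimal bash sketch. It assumes the Aliyun mirror layout used above (rpm-<release>/el7/...). The function name write_ceph_repo and its two arguments are hypothetical. The release and target path are parameters only so the function can be reused or tested against a scratch file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes the same three repo sections shown above.
# Assumption: the mirror keeps the rpm-<release>/el7/{$basearch,noarch,SRPMS} layout.
write_ceph_repo() {
  local release="${1:-luminous}"
  local target="${2:-/etc/yum.repos.d/ceph.repo}"
  local base="http://mirrors.aliyun.com/ceph/rpm-${release}/el7"
  local key="https://mirrors.aliyun.com/ceph/keys/release.asc"

  # Unquoted heredoc: ${base}/${key} expand, \$basearch stays literal for yum.
  cat > "$target" <<EOF
[ceph]
name=Ceph packages for \$basearch
baseurl=${base}/\$basearch
enabled=1
gpgcheck=1
priority=1
type=rpm-md
gpgkey=${key}

[ceph-noarch]
name=Ceph noarch packages
baseurl=${base}/noarch
enabled=1
gpgcheck=1
priority=1
type=rpm-md
gpgkey=${key}

[ceph-source]
name=Ceph source packages
baseurl=${base}/SRPMS
enabled=0
gpgcheck=1
type=rpm-md
gpgkey=${key}
priority=1
EOF
}
```

For example, running write_ceph_repo luminous as root recreates /etc/yum.repos.d/ceph.repo. Then run yum makecache as above.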

2. Prepare the Ceph nodes

(1) Install NTP. Install the NTP service on all Ceph nodes (especially the Ceph Monitor nodes) to avoid failures caused by clock drift.

```
[root@ceph-node1 ~]# yum install -y ntp ntpdate ntp-doc
[root@ceph-node2 ~]# yum install -y ntp ntpdate ntp-doc
[root@ceph-node3 ~]# yum install -y ntp ntpdate ntp-doc
[root@ceph-node1 ~]# ntpdate ntp1.aliyun.com
31 Jul 03:43:04 ntpdate[973]: adjust time server 120.25.115.20 offset 0.001528 sec
[root@ceph-node1 ~]# hwclock
Tue 31 Jul 2018 03:44:55 AM EDT -0.302897 seconds
[root@ceph-node1 ~]# crontab -e
*/5 * * * * /usr/sbin/ntpdate ntp1.aliyun.com
```

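Make sure time synchronization is running on every Ceph node and that all nodes use the same NTP server. The cron entry above has to be added on ceph-node2 and ceph-node3 as well.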
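crontab -e is interactive, which makes it awkward to repeat on every node. The sketch below adds the same entry from a script and skips it when the entry is already present, so re-running it does not create duplicates. ensure_cron_entry is a hypothetical helper: it reads an existing crontab on stdin and prints the result.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the crontab read from stdin, appending ENTRY
# only if an identical line is not already there.
ensure_cron_entry() {
  local entry="$1" current
  current="$(cat)"
  if [ -n "$current" ]; then
    printf '%s\n' "$current"
  fi
  # -x: whole-line match, -F: treat the cron line (with its '*') literally
  if ! printf '%s\n' "$current" | grep -qxF -- "$entry"; then
    printf '%s\n' "$entry"
  fi
}

# Example, run as root on each node:
#   crontab -l 2>/dev/null \
#     | ensure_cron_entry '*/5 * * * * /usr/sbin/ntpdate ntp1.aliyun.com' \
#     | crontab -
```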

(2) Install the SSH server and add hosts entries

openssh-server is installed by default, so this step can usually be skipped:

```
[root@ceph-node1 ~]# yum install openssh-server
[root@ceph-node2 ~]# yum install openssh-server
[root@ceph-node3 ~]# yum install openssh-server
```

Make sure the SSH server is running on all Ceph nodes. The hosts entries used in this guide (shown on ceph-node1):

```
[root@ceph-node1 ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.56.11 ceph-node1
192.168.56.12 ceph-node2
192.168.56.13 ceph-node3
```
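The same three entries are needed on every node. Below is a minimal sketch that appends them only when the hostname is not already mapped, so it is safe to run more than once. add_hosts_entries and its ip=name argument format are hypothetical. The addresses in the usage line are the ones from this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" lines to HOSTS_FILE for each ip=name
# argument, skipping names that already appear as a whole word.
add_hosts_entries() {
  local hosts_file="$1"; shift
  local pair ip name
  for pair in "$@"; do
    ip="${pair%%=*}"
    name="${pair#*=}"
    # Name must be followed by whitespace or end of line, so ceph-node1
    # does not count as present just because ceph-node10 is.
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}

# Usage, as root on every node:
#   add_hosts_entries /etc/hosts 192.168.56.11=ceph-node1 \
#     192.168.56.12=ceph-node2 192.168.56.13=ceph-node3
```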

(3) Allow passwordless SSH login

```
[root@ceph-node1 ~]# ssh-keygen
[root@ceph-node1 ~]# ssh-copy-id root@ceph-node1
[root@ceph-node1 ~]# ssh-copy-id root@ceph-node2
[root@ceph-node1 ~]# ssh-copy-id root@ceph-node3
```
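The key generation and copy steps can be wrapped in one loop. This is a sketch under stated assumptions: an RSA key at ~/.ssh/id_rsa with an empty passphrase, and the three node names from this guide. NODES, run and distribute_ssh_key are hypothetical. With DRY_RUN=1, run only prints each command, which is useful for checking the node list first.

```shell
#!/usr/bin/env bash
# Hypothetical node list: the hostnames used throughout this guide.
NODES=(ceph-node1 ceph-node2 ceph-node3)

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

distribute_ssh_key() {
  # Generate a passphrase-less RSA key only if none exists yet.
  if [ ! -f "$HOME/.ssh/id_rsa" ]; then
    run ssh-keygen -t rsa -N '' -f "$HOME/.ssh/id_rsa"
  fi
  local node
  for node in "${NODES[@]}"; do
    run ssh-copy-id "root@${node}"   # prompts once for each node's root password
  done
}
```

Run DRY_RUN=1 distribute_ssh_key to preview the commands, then distribute_ssh_key to execute them.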

Recommended approach:

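Edit ~/.ssh/config on the ceph-deploy admin node. ceph-deploy can then log in to the Ceph nodes as the user you created, without passing --username {username} on every ceph-deploy run. This also simplifies ssh and scp usage. Replace {username} with the user name you created (this guide uses root).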

```
[root@ceph-node1 ~]# cat .ssh/config
Host node1
    Hostname ceph-node1
    User root
Host node2
    Hostname ceph-node2
    User root
Host node3
    Hostname ceph-node3
    User root
[root@ceph-node1 ~]# chmod 600 .ssh/config
[root@ceph-node1 ~]# systemctl restart sshd   # optional: ~/.ssh/config is read by the ssh client, not by sshd
```
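The stanzas above follow a fixed pattern, so they can be generated. This is a minimal sketch. gen_ssh_config is a hypothetical helper that takes the login user followed by alias=hostname pairs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one Host stanza per alias=hostname pair.
gen_ssh_config() {
  local user="$1"; shift
  local pair
  for pair in "$@"; do
    printf 'Host %s\n    Hostname %s\n    User %s\n' \
      "${pair%%=*}" "${pair#*=}" "$user"
  done
}

# Usage:
#   gen_ssh_config root node1=ceph-node1 node2=ceph-node2 node3=ceph-node3 >> ~/.ssh/config
#   chmod 600 ~/.ssh/config
```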

(4) Disable SELinux

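On CentOS and RHEL, SELinux runs in Enforcing mode by default. To simplify the installation, we recommend setting SELinux to Permissive or disabling it entirely. In other words, make sure the cluster installs and is configured correctly before you harden the system. Set SELinux to Permissive with: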

```
[root@ceph-node1 ~]# setenforce 0
[root@ceph-node2 ~]# setenforce 0
[root@ceph-node3 ~]# setenforce 0
```

To make the SELinux setting permanent (if SELinux is indeed the source of the problem), edit its configuration file /etc/selinux/config.
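setenforce 0 only affects the running system. The config file controls the mode at the next boot. Below is a minimal sketch that rewrites the SELINUX= line. set_selinux_mode is hypothetical, and it assumes GNU sed (the CentOS 7 default) for in-place editing. The anchored pattern ^SELINUX= leaves the SELINUXTYPE= line untouched.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set the boot-time SELinux mode in /etc/selinux/config.
set_selinux_mode() {
  local mode="$1" conf="${2:-/etc/selinux/config}"
  case "$mode" in
    enforcing|permissive|disabled) ;;
    *) echo "invalid SELinux mode: $mode" >&2; return 1 ;;
  esac
  # Only the SELINUX= line matches; SELINUXTYPE= does not.
  sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$conf"
}

# Usage, as root on each node:
#   setenforce 0 && set_selinux_mode permissive
```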

(5) Disable the firewall

```
[root@ceph-node1 ~]# systemctl stop firewalld.service
[root@ceph-node2 ~]# systemctl stop firewalld.service
[root@ceph-node3 ~]# systemctl stop firewalld.service
[root@ceph-node1 ~]# systemctl disable firewalld.service
[root@ceph-node2 ~]# systemctl disable firewalld.service
[root@ceph-node3 ~]# systemctl disable firewalld.service
```
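Once passwordless root SSH from step (3) works, the admin node can run per-node commands like these in one loop. This is a sketch. NODES, run and for_each_node are hypothetical helpers, and DRY_RUN=1 prints the ssh commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical node list: the hostnames used in this guide.
NODES=(ceph-node1 ceph-node2 ceph-node3)

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Run one shell command string on every node as root over SSH.
for_each_node() {
  local node
  for node in "${NODES[@]}"; do
    run ssh "root@${node}" "$1"
  done
}

# Usage:
#   for_each_node 'systemctl stop firewalld.service && systemctl disable firewalld.service'
```

The same loop also covers the repeated per-node yum installs in steps (1) and (6).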

(6) Install the EPEL repository and enable repository priorities

```
[root@ceph-node1 ~]# yum install -y epel-release
[root@ceph-node2 ~]# yum install -y epel-release
[root@ceph-node3 ~]# yum install -y epel-release
[root@ceph-node1 ~]# yum install -y yum-plugin-priorities
[root@ceph-node2 ~]# yum install -y yum-plugin-priorities
[root@ceph-node3 ~]# yum install -y yum-plugin-priorities
```
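The priority=1 lines in ceph.repo only take effect while the priorities plugin is enabled. Packages from the Ceph repository then take precedence over same-named packages from EPEL. Below is a small check sketch. priorities_enabled is hypothetical. The default path is where the plugin's config is normally installed on CentOS 7, which is an assumption worth verifying on your system.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed only if the plugin config says "enabled = 1".
# Assumed default path for yum-plugin-priorities on CentOS 7.
priorities_enabled() {
  local conf="${1:-/etc/yum/pluginconf.d/priorities.conf}"
  [ -r "$conf" ] &&
    grep -qE '^[[:space:]]*enabled[[:space:]]*=[[:space:]]*1[[:space:]]*$' "$conf"
}

# Usage: priorities_enabled && echo "yum priorities: on"
```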

3. Create the cluster

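We create a Ceph storage cluster with one Monitor and two OSD daemons. Once the cluster reaches the active + clean state, expand it by adding a third OSD, a metadata server and two more Ceph Monitors. On the admin node, create a directory to hold the configuration files and keyrings that ceph-deploy generates.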

(1) Create the Ceph working directory and configure ceph.conf

```
[root@ceph-node1 ~]# mkdir /etc/ceph && cd /etc/ceph
[root@ceph-node1 ceph]# ceph-deploy new ceph-node1   # define the monitor node
```

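The new subcommand of ceph-deploy creates a new cluster with the default name ceph and generates the cluster configuration file and the monitor keyring. List the current working directory and you will see ceph.conf and ceph.mon.keyring.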

Add the public network to the [global] section of ceph.conf:

```
[root@ceph-node1 ceph]# vim ceph.conf
public network = 192.168.56.0/24
[root@ceph-node1 ceph]# ll
total 20
-rw-r--r-- 1 root root   253 Jul 31 21:36 ceph.conf             # Ceph configuration file
-rw-r--r-- 1 root root 12261 Jul 31 21:36 ceph-deploy-ceph.log  # ceph-deploy log file
-rw------- 1 root root    73 Jul 31 21:36 ceph.mon.keyring      # monitor keyring
```

Problem encountered:

```
[root@ceph-node1 ceph]# ceph-deploy new ceph-node1
Traceback (most recent call last):
  File "/usr/bin/ceph-deploy", line 18, in <module>
    from ceph_deploy.cli import main
  File "/usr/lib/python2.7/site-packages/ceph_deploy/cli.py", line 1, in <module>
    import pkg_resources
ImportError: No module named pkg_resources
```

Solution: install python-setuptools, which provides pkg_resources:

```
[root@ceph-node1 ceph]# yum install -y python-setuptools
```
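The vim edit can also be scripted. This sketch inserts public network directly under [global], or replaces an existing value, so re-running it does not duplicate the line. set_public_network is hypothetical. It assumes GNU sed (for sed -i and the one-line a command) and the [global] section that ceph-deploy new writes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "public network = CIDR" in the [global] section.
set_public_network() {
  local conf="$1" cidr="$2"
  if ! grep -q '^\[global\]' "$conf"; then
    echo "no [global] section in $conf" >&2
    return 1
  fi
  if grep -qE '^[[:space:]]*public[ _]network[[:space:]]*=' "$conf"; then
    # Replace an existing setting (accepts "public network" or "public_network").
    sed -i -E "s|^[[:space:]]*public[ _]network[[:space:]]*=.*|public network = ${cidr}|" "$conf"
  else
    # GNU sed: append the line right after the [global] header.
    sed -i "/^\[global\]/a public network = ${cidr}" "$conf"
  fi
}

# Usage, in /etc/ceph:
#   set_public_network ceph.conf 192.168.56.0/24
```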

(2) Install Ceph on the admin node and the OSD nodes

```
[root@ceph-node1 ceph]# ceph-deploy install ceph-node1 ceph-node2 ceph-node3
```

ceph-deploy first installs all packages required by the Ceph luminous release. After the command completes successfully, check the Ceph version on every node:

```
[root@ceph-node1 ceph]# ceph --version
ceph version 12.2.7 (3ec878d1e53e1aeb47a9f619c49d9e7c0aa384d5) luminous (stable)
[root@ceph-node2 ~]# ceph --version
ceph version 12.2.7 (3ec878d1e53e1aeb47a9f619c49d9e7c0aa384d5) luminous (stable)
[root@ceph-node3 ~]# ceph --version
ceph version 12.2.7 (3ec878d1e53e1aeb47a9f619c49d9e7c0aa384d5) luminous (stable)
```
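With several nodes, it is easier to compare versions mechanically. This is a minimal sketch that relies only on the ceph --version line format shown above. parse_ceph_version and all_versions_match are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helper: "ceph version 12.2.7 (<sha1>) luminous (stable)"
# becomes "12.2.7 luminous" (fields 3 and 5).
parse_ceph_version() {
  awk '/^ceph version/ { print $3, $5 }'
}

# Succeed only if every `ceph --version` line on stdin reports the same
# version and release name.
all_versions_match() {
  [ "$(parse_ceph_version | sort -u | wc -l)" -eq 1 ]
}

# Usage, from the admin node:
#   for n in ceph-node1 ceph-node2 ceph-node3; do ssh "root@$n" ceph --version; done \
#     | all_versions_match && echo "all nodes run the same Ceph version"
```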

(3) Initialize the monitor

Create the first Ceph monitor on ceph-node1:

```
[root@ceph-node1 ceph]# ceph-deploy mon create-initial   # deploy the initial monitor(s) and gather all keys
[root@ceph-node1 ceph]# ll   # after this step, these keyrings should appear in the current directory
total 92
-rw------- 1 root root 113 Jul 31 21:48 ceph.bootstrap-mds.keyring
-rw------- 1 root root 113 Jul 31 21:48 ceph.bootstrap-mgr.keyring
-rw------- 1 root root 113 Jul 31 21:48 ceph.bootstrap-osd.keyring
-rw------- 1 root root 113 Jul 31 21:48 ceph.bootstrap-rgw.keyring
-rw------- 1 root root 151 Jul 31 21:48 ceph.client.admin.keyring
```

Note: the bootstrap-rgw keyring is created only when installing Hammer or later.
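A quick way to confirm the step succeeded is to check for the keyrings listed above. This is a minimal sketch. missing_keyrings is a hypothetical helper that prints the name of each expected keyring missing from the directory.

```shell
#!/usr/bin/env bash
# Hypothetical check: print each expected keyring that is missing from DIR.
missing_keyrings() {
  local dir="${1:-.}" k
  for k in ceph.client.admin.keyring \
           ceph.bootstrap-mds.keyring ceph.bootstrap-mgr.keyring \
           ceph.bootstrap-osd.keyring ceph.bootstrap-rgw.keyring; do
    [ -f "${dir}/${k}" ] || printf '%s\n' "$k"
  done
}

# Usage: [ -z "$(missing_keyrings /etc/ceph)" ] && echo "all keyrings present"
```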

Note: if this step fails with a message similar to "Unable to find /etc/ceph/ceph.client.admin.keyring", make sure the IP specified for the monitor in ceph.conf is the public IP, not a private IP.

Check the cluster status:

```
[root@ceph-node1 ceph]# ceph -s
  cluster:
    id:     c6165f5b-ada0-4035-9bab-1916b28ec92a
    health: HEALTH_OK

  services:
    mon: 1 daemons, quorum ceph-node1
    mgr: no daemons active
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 bytes
    usage:   0 kB used, 0 kB / 0 kB avail
    pgs:
```

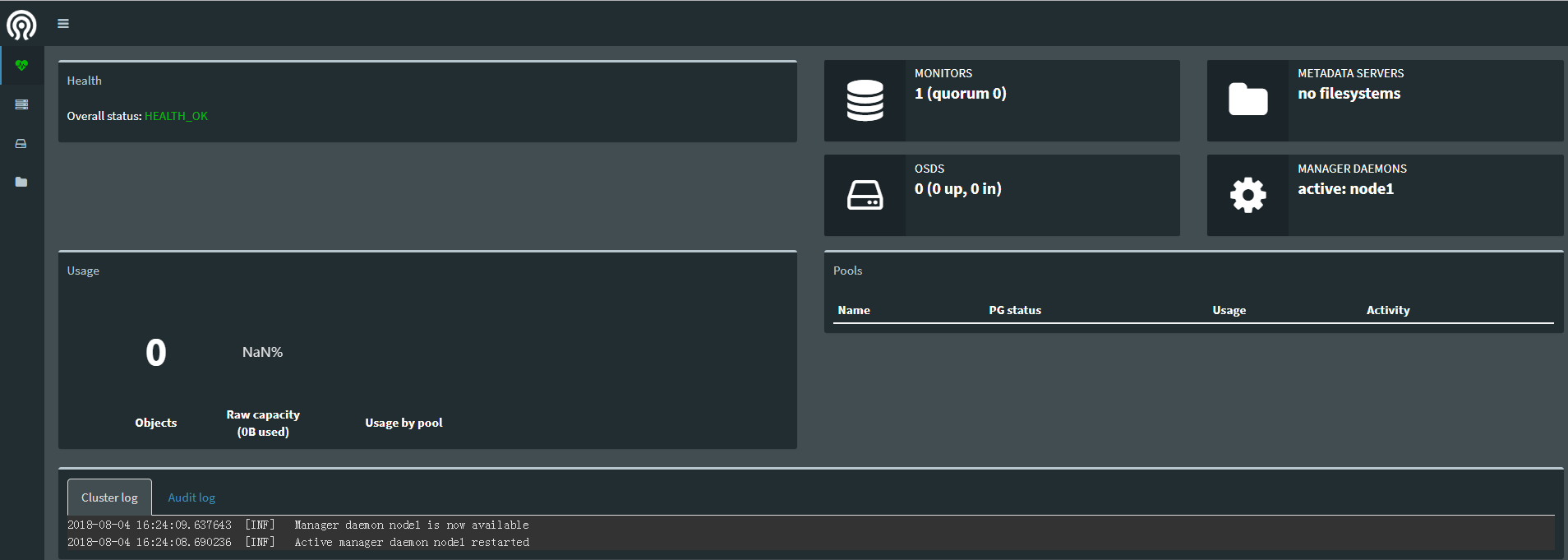
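In scripted setups it can help to wait until the cluster reports healthy before moving on. This sketch polls ceph health until it prints HEALTH_OK or a timeout expires. It checks health only; it does not verify that all PGs are active + clean. wait_for_health_ok and the HEALTH_CMD override are hypothetical. The override exists only so the loop can be exercised without a cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll until the health command prints HEALTH_OK.
# Args: timeout seconds (default 300), poll interval seconds (default 5).
wait_for_health_ok() {
  local timeout="${1:-300}" interval="${2:-5}" waited=0
  local cmd="${HEALTH_CMD:-ceph health}"
  while [ "$waited" -lt "$timeout" ]; do
    # $cmd is deliberately unquoted so "ceph health" splits into command + arg.
    if $cmd 2>/dev/null | grep -q '^HEALTH_OK'; then
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  return 1
}

# Usage: wait_for_health_ok 600 10 || echo "cluster not healthy yet" >&2
```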

(4) Enable the dashboard module

List the modules supported by the cluster:

```
[root@ceph-node1 ceph]# ceph mgr dump
```

```
[root@ceph-node1 ceph]# ceph mgr module enable dashboard   # enable the dashboard module
```

Add the following to /etc/ceph/ceph.conf:

```
[mgr]
mgr modules = dashboard
```

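Set the dashboard IP address and port. The dashboard is served by the ceph-mgr daemon, so port 7000 only starts listening once a mgr has been deployed (ceph-deploy mgr create, shown in the next section):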

```
[root@ceph-node1 ceph]# ceph config-key put mgr/dashboard/server_addr 192.168.56.11
set mgr/dashboard/server_addr
[root@ceph-node1 ceph]# ceph config-key put mgr/dashboard/server_port 7000
set mgr/dashboard/server_port
[root@ceph-node1 ceph]# netstat -tulnp |grep 7000
tcp6 0 0 :::7000 :::* LISTEN 13353/ceph-mgr
```
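grep 7000 also matches 17000, PIDs and other numbers. Below is a slightly stricter sketch that reads netstat -tulnp output and checks for a LISTEN socket whose local address ends in :PORT. port_listening is hypothetical. It relies on the column layout shown above: local address in column 4, state in column 6.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed if some line of `netstat -tulnp` output on stdin
# is in LISTEN state with a local address ending in ":PORT".
port_listening() {
  local port="$1"
  awk -v p=":${port}" '
    $6 == "LISTEN" && substr($4, length($4) - length(p) + 1) == p { found = 1 }
    END { exit !found }'
}

# Usage: netstat -tulnp | port_listening 7000 && echo "dashboard is listening"
```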

(5) Create OSDs on ceph-node1

```
[root@ceph-node1 ceph]# ceph-deploy disk zap ceph-node1 /dev/sdb
[root@ceph-node1 ceph]# ceph-deploy disk list ceph-node1   # list all available disks on ceph-node1
......
[ceph-node1][INFO ] Running command: fdisk -l
[ceph-node1][INFO ] Disk /dev/sdb: 1073 MB, 1073741824 bytes, 2097152 sectors
[ceph-node1][INFO ] Disk /dev/sdc: 1073 MB, 1073741824 bytes, 2097152 sectors
[ceph-node1][INFO ] Disk /dev/sdd: 1073 MB, 1073741824 bytes, 2097152 sectors
[ceph-node1][INFO ] Disk /dev/sda: 21.5 GB, 21474836480 bytes, 41943040 sectors
```

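From the output, carefully choose the disks to use for Ceph OSDs, leaving out the operating-system disk; here they are sdb, sdc and sdd. The disk zap subcommand deletes the existing partition table and all data on the disk, so make sure you have the right disk name before running it.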
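The candidate devices can be pulled out of the disk list output so the OS disk is never passed along by accident. This is a sketch that relies only on the "Disk /dev/XXX:" lines shown above. candidate_disks is hypothetical. You must name the OS disk yourself (/dev/sda here), and the result is a starting point for review, not a safe list to zap blindly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list devices from `ceph-deploy disk list` output on
# stdin, excluding the OS disk given as $1. Device-mapper paths such as
# /dev/mapper/... do not match the pattern and are skipped.
candidate_disks() {
  local os_disk="$1"
  grep -oE 'Disk /dev/[a-z0-9]+:' \
    | sed -E 's/^Disk (.*):$/\1/' \
    | grep -vxF -- "$os_disk"
}

# Usage (ceph-deploy logs to stderr, hence 2>&1):
#   ceph-deploy disk list ceph-node1 2>&1 | candidate_disks /dev/sda
```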

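In ceph-deploy 1.5.x, the osd create subcommand first prepares the disk, formatting it with XFS by default, and then activates its first and second partitions as the data and journal partitions. The ceph-deploy used here is 2.0.1 (see the log below). Its osd create --data hands the device to ceph-volume and by default creates a BlueStore OSD on LVM instead. Before creating the OSDs, deploy the manager daemon (ceph-mgr) on node1: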

```
[root@ceph-node1 ceph]# ceph-deploy mgr create node1   # deploy the manager daemon (required for luminous and later)
[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mgr create node1
[ceph_deploy.cli][INFO ] ceph-deploy options:
[ceph_deploy.cli][INFO ] username : None
[ceph_deploy.cli][INFO ] verbose : False
[ceph_deploy.cli][INFO ] mgr : [('node1', 'node1')]
[ceph_deploy.cli][INFO ] overwrite_conf : False
[ceph_deploy.cli][INFO ] subcommand : create
[ceph_deploy.cli][INFO ] quiet : False
[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0xe1e5a8>
[ceph_deploy.cli][INFO ] cluster : ceph
[ceph_deploy.cli][INFO ] func : <function mgr at 0xda5f50>
[ceph_deploy.cli][INFO ] ceph_conf : None
[ceph_deploy.cli][INFO ] default_release : False
[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts node1:node1
[node1][DEBUG ] connected to host: node1
[node1][DEBUG ] detect platform information from remote host
[node1][DEBUG ] detect machine type
[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.4.1708 Core
[ceph_deploy.mgr][DEBUG ] remote host will use systemd
[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to node1
[node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
[node1][WARNIN] mgr keyring does not exist yet, creating one
[node1][DEBUG ] create a keyring file
[node1][DEBUG ] create path recursively if it doesn't exist
[node1][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.node1 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-node1/keyring
[node1][INFO ] Running command: systemctl enable ceph-mgr@node1
[node1][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@node1.service to /usr/lib/systemd/system/ceph-mgr@.service.
[node1][INFO ] Running command: systemctl start ceph-mgr@node1
[node1][INFO ] Running command: systemctl enable ceph.target
```
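Before the mgr was deployed, ceph -s showed "mgr: no daemons active". This sketch reads ceph -s output and succeeds only once that message is gone. mgr_active is hypothetical. It deliberately checks only for the absence of the "no daemons active" text, not for the exact wording of the active line, which this guide does not show.

```shell
#!/usr/bin/env bash
# Hypothetical check on `ceph -s` output (stdin): a "mgr:" line must exist
# and must not say "no daemons active".
mgr_active() {
  awk '$1 == "mgr:" { seen = 1; if ($0 ~ /no daemons active/) bad = 1 }
       END { exit !(seen && !bad) }'
}

# Usage: ceph -s | mgr_active && echo "mgr is running"
```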

(6) Create the OSDs

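Add three OSDs. These instructions assume each node has an unused disk /dev/sdb; the commands below instead create all three OSDs on node1 from /dev/sdb, /dev/sdc and /dev/sdd. Make sure the devices are not currently in use and do not contain any important data. Syntax: ceph-deploy osd create --data {device} {ceph-node}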

```
[root@ceph-node1 ceph]# ceph-deploy osd create --data /dev/sdb node1
[root@ceph-node1 ceph]# ceph-deploy osd create --data /dev/sdc node1
[root@ceph-node1 ceph]# ceph-deploy osd create --data /dev/sdd node1
```
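The three calls above can be expressed as one loop that stops at the first failure. This is a sketch. OSD_HOST, OSD_DEVICES, run and create_osds are hypothetical names, with values taken from this guide. DRY_RUN=1 prints the commands so the device list can be reviewed before any disk is consumed.

```shell
#!/usr/bin/env bash
# Hypothetical settings: host alias and devices used in this guide.
OSD_HOST=node1
OSD_DEVICES=(/dev/sdb /dev/sdc /dev/sdd)

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Create one OSD per device; stop at the first failure.
create_osds() {
  local dev
  for dev in "${OSD_DEVICES[@]}"; do
    run ceph-deploy osd create --data "$dev" "$OSD_HOST" || return 1
  done
}
```

Run it from /etc/ceph: DRY_RUN=1 create_osds to preview, then create_osds. Afterwards, ceph -s should report three OSDs up and in.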