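I. First up is scraping the 360 Mobile Assistant app market. This script collects app information from the 360 app market. At this stage the code only scrapes download counts, so if you need other fields, add the code yourself.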

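Usage:

1. Create a .txt file named App_name in the root of drive D and paste the app names into it, one name per line (the script splits the file on newlines).

2. Then just run the script.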
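As a rough illustration of the input format, here is a minimal sketch of reading such a file into a list. The helper name load_app_names and the UTF-8 encoding are my assumptions for the example; the script itself simply reads the file and splits it on newlines.

```python
from pathlib import Path


def load_app_names(path):
    """Return the non-empty lines of a text file, stripped of whitespace.

    Hypothetical helper: one app name per line, blank lines ignored.
    """
    text = Path(path).read_text(encoding="utf-8")  # encoding is an assumption
    return [line.strip() for line in text.splitlines() if line.strip()]
```

With the file from step 1 this would be called as load_app_names(r"D:\App_name.txt"). Skipping blank lines also avoids sending an empty search for a trailing newline at the end of the file.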

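I've been busy lately and haven't updated the blog in a while. Why so busy? I was recently assigned to the "App Special Governance Group" to work on app governance. In the early days of the task force, enthusiastic users filed a lot of reports, so every day I was handling reports and tallying the download counts of the reported apps, and that means the combined downloads across 5 app markets. With just a handful of apps that would be fine, but at several hundred apps a day my eyes and fingers started to protest. That's when I thought of Python and decided to write 5 crawlers, one for each of the 5 app markets. Enough talk, here is my code: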

1. The first approach, done with a dictionary.

    #!/usr/bin/env python
    # -*- coding: UTF-8 -*-
    __author__ = 'Mr.Li'

    import time

    import requests
    import xlsxwriter
    from bs4 import BeautifulSoup


    def write_excel(name, download, type_name=0, url=0):
        # The global variable row is the row number; 0-2 are the column indexes
        global row
        sheet.write(row, 0, row)
        sheet.write(row, 1, name)
        sheet.write(row, 2, download)
        row += 1


    headers = {'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36'}


    def App_download(url, app_name):
        # Fetch the search page for app_name
        i = 1  # retry counter; note that it is reset to 1 on every call
        try:
            time.sleep(0.5)
            req = requests.get(url=url, headers=headers)
            req.encoding = 'utf-8'
            # Keep the response body in a variable
            html_all = req.text
            div_bf = BeautifulSoup(html_all, 'html.parser')  # parse with BeautifulSoup
            div = div_bf.find_all('div', class_='SeaCon')  # find the SeaCon element
            a_bf = BeautifulSoup(str(div), 'html.parser')  # parse again
            info = a_bf.find_all('li')  # the 'li' elements hold the app name and download count
            name = info[0].dl.dd.h3.a.text.strip()
            all_list = []
            if name == app_name:
                download_num = BeautifulSoup(str(info[0]), 'html.parser')  # parse again
                texts = download_num.find_all('p', class_='downNum')  # find the download count
                find_download_num = texts[0].text.replace('\xa0' * 8, '\n\n')[:-3]  # strip the unwanted parts
                print(name, find_download_num)
                write_excel(name, find_download_num)  # write to the xlsx file
            else:
                find_download_num1 = 'None'
                print(app_name, find_download_num1)
                write_excel(app_name, find_download_num1)
        except Exception as e:
            # print('error: %s, retrying (attempt %s)' % (e, i))
            # print(url)
            if i != 3:
                App_download(url, app_name)
                i += 1


    row = 1
    # Create a new Excel file
    file = xlsxwriter.Workbook('360_applist.xlsx')
    # Create a new sheet
    sheet = file.add_worksheet()

    if __name__ == '__main__':
        path_file = "D:\\"
        Old_AppFlie = open(path_file + "App_name.txt").read()
        app_list = Old_AppFlie.split('\n')  # turn the string into a list
        # app_list = ['微信','1113123','支付宝','荔枝']
        for app_name in app_list:
            yyb_url = 'http://zhushou.360.cn/search/index/?kw={app_name}'.format(app_name=app_name)
            App_download(yyb_url, app_name)
        file.close()

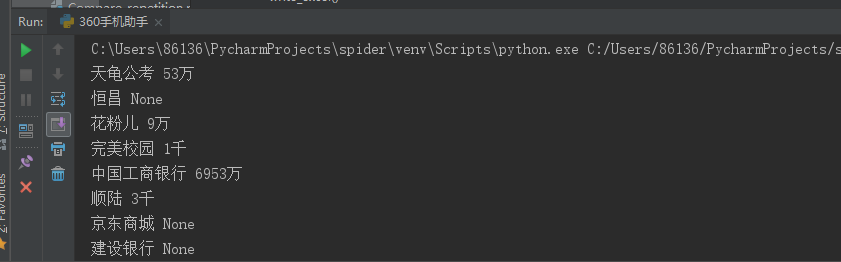

Run result:

Apps that can be found show their download count; apps that cannot be found in the market show None. The results are written to 360_applist.xlsx.

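After using the program above for a while, I found a problem: if the app being looked up does not exist, meaning a search in the 360 app market returns a page with no results at all, the program hangs.

It hangs and eventually errors out because the code indexes into the search results (info[0]). When the result list is empty, the index is out of range and raises an IndexError. The except branch then calls App_download again, and since i is reset to 1 on every call, the retry check never stops it, so the calls keep nesting until Python gives up. The fix is to check whether the search came back empty, that is, whether the result list has length 0. I added that check to the program: if the length is not 0, scrape the download count and write the data; if it is 0, write the app name with None to show that nothing was found.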
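The check described above can be sketched in isolation, without any network or parsing code. This is only an illustration under my own assumptions: pick_download is a hypothetical helper, and the search results are represented as a plain list of (name, downloads) tuples rather than BeautifulSoup elements.

```python
def pick_download(results, wanted_name):
    """Return the download count of the first result if its name matches.

    results: list of (name, downloads) tuples, best match first (assumed shape).
    Returns the string 'None' when there is no result or the name differs.
    """
    if len(results) == 0:  # empty search page: do not index into the list
        return 'None'
    name, downloads = results[0]
    return downloads if name == wanted_name else 'None'
```

The point is simply that the length check has to come before the first results[0] access; once it does, an empty page becomes an ordinary "not found" row instead of an exception.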

Modified code:

    #!/usr/bin/env python
    # -*- coding: UTF-8 -*-
    __author__ = 'Mr.Li'

    import time

    import requests
    import xlsxwriter
    from bs4 import BeautifulSoup


    def write_excel(name, download, type_name=0, url=0):
        # The global variable row is the row number; 0-2 are the column indexes
        global row
        sheet.write(row, 0, row)
        sheet.write(row, 1, name)
        sheet.write(row, 2, download)
        row += 1


    headers = {'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36'}


    def App_download(url, app_name, i=1):
        # Fetch the search page for app_name; i counts the attempts made so far
        try:
            time.sleep(0.5)
            req = requests.get(url=url, headers=headers)
            req.encoding = 'utf-8'
            # Keep the response body in a variable
            html_all = req.text
            div_bf = BeautifulSoup(html_all, 'html.parser')  # parse with BeautifulSoup
            div = div_bf.find_all('div', class_='SeaCon')  # find the SeaCon element
            a_bf = BeautifulSoup(str(div), 'html.parser')  # parse again
            info = a_bf.find_all('li')  # the 'li' elements hold the app name and download count
            if len(info) != 0:  # only index into the results when the search returned something
                name = info[0].dl.dd.h3.a.text.strip()
                if name == app_name:
                    download_num = BeautifulSoup(str(info[0]), 'html.parser')  # parse again
                    texts = download_num.find_all('p', class_='downNum')  # find the download count
                    find_download_num = texts[0].text.replace('\xa0' * 8, '\n\n')[:-3]  # strip the unwanted parts
                    print(name, find_download_num)
                    write_excel(name, find_download_num)  # write to the xlsx file
                else:
                    find_download_num1 = 'None'
                    print(app_name, find_download_num1)
                    write_excel(app_name, find_download_num1)
            else:
                # Empty results page: record the app name with None
                print(app_name, 'None')
                write_excel(app_name, 'None')
        except Exception as e:
            # print('error: %s, retrying (attempt %s)' % (e, i))
            # print(url)
            if i != 3:
                # Pass the counter along so the retries stop after the third attempt
                App_download(url, app_name, i + 1)


    row = 1
    # Create a new Excel file
    file = xlsxwriter.Workbook('360_applist.xlsx')
    # Create a new sheet
    sheet = file.add_worksheet()

    if __name__ == '__main__':
        path_file = "D:\\"
        Old_AppFlie = open(path_file + "App_name.txt").read()
        app_list = Old_AppFlie.split('\n')  # turn the string into a list
        # app_list = ['微信','1113123','支付宝','荔枝']
        for app_name in app_list:
            yyb_url = 'http://zhushou.360.cn/search/index/?kw={app_name}'.format(app_name=app_name)
            App_download(yyb_url, app_name)
        file.close()

II. Scraping app information from the Baidu app market

    #!/usr/bin/env python
    # -*- coding: UTF-8 -*-
    __author__ = 'Mr.Li'

    import time

    import requests
    import xlsxwriter
    from bs4 import BeautifulSoup


    def write_excel(name, download, type_name=0, url=0):
        # The global variable row is the row number; 0-2 are the column indexes
        global row
        sheet.write(row, 0, row)
        sheet.write(row, 1, name)
        sheet.write(row, 2, download)
        row += 1


    headers = {'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36'}


    def App_download(url, app_name, i=1):
        # Fetch the search page for app_name; i counts the attempts made so far
        try:
            time.sleep(0.5)
            req = requests.get(url=url, headers=headers)
            req.encoding = 'utf-8'
            # Keep the response body in a variable
            html_all = req.text
            div_bf = BeautifulSoup(html_all, 'html.parser')  # parse with BeautifulSoup
            div = div_bf.find_all('div', class_='SeaCon')  # find the SeaCon element
            a_bf = BeautifulSoup(str(div), 'html.parser')  # parse again
            info = a_bf.find_all('li')  # the 'li' elements hold the app name and download count
            if len(info) != 0:  # check for results before indexing into them
                name = info[0].dl.dd.h3.a.text.strip()
                if name == app_name:
                    download_num = BeautifulSoup(str(info[0]), 'html.parser')  # parse again
                    texts = download_num.find_all('p', class_='downNum')  # find the download count
                    find_download_num = texts[0].text.replace('\xa0' * 8, '\n\n')[:-3]  # strip the unwanted parts
                    print(name, find_download_num)
                    write_excel(name, find_download_num)  # write to the xlsx file
                else:
                    find_download_num1 = 'None'
                    print(app_name, find_download_num1)
                    write_excel(app_name, find_download_num1)
            else:
                print(app_name, 'None')
                write_excel(app_name, 'None')
        except Exception as e:
            # print('error: %s, retrying (attempt %s)' % (e, i))
            # print(url)
            if i != 3:
                # Pass the counter along so the retries stop after the third attempt
                App_download(url, app_name, i + 1)


    row = 1
    # Create a new Excel file
    file = xlsxwriter.Workbook('360_applist.xlsx')
    # Create a new sheet
    sheet = file.add_worksheet()

    if __name__ == '__main__':
        path_file = "D:\\"
        Old_AppFlie = open(path_file + "App_name.txt").read()
        app_list = Old_AppFlie.split('\n')  # turn the string into a list
        # app_list = ['微信','1113123','支付宝','荔枝']
        for app_name in app_list:
            # Note: as posted, the URL, the SeaCon selector and the output file name are still the 360 ones
            yyb_url = 'http://zhushou.360.cn/search/index/?kw={app_name}'.format(app_name=app_name)
            App_download(yyb_url, app_name)
        file.close()

III. Scraping app information from the Huawei app market

    #!/usr/bin/env python
    # -*- coding: UTF-8 -*-
    __author__ = 'Mr.Li'

    import requests
    import xlsxwriter
    from bs4 import BeautifulSoup


    def write_excel(name, download, type_name=0, url=0):
        # The global variable row is the row number; 0-2 are the column indexes
        global row
        sheet.write(row, 0, row)
        sheet.write(row, 1, name)
        sheet.write(row, 2, download)
        row += 1


    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.62 Safari/537.36'}


    def App_download(url, app_name, i=1):
        # Fetch the search page for app_name; i counts the attempts made so far
        try:
            req = requests.get(url=url, headers=headers)
            req.encoding = 'utf-8'
            # Keep the response body in a variable
            html_all = req.text
            div_bf = BeautifulSoup(html_all, 'html.parser')  # parse with BeautifulSoup
            div = div_bf.find_all('div', class_='unit-main')  # find the unit-main element
            a_bf = BeautifulSoup(str(div), 'html.parser')  # parse again
            # These entries hold the app name and download count
            info = a_bf.find_all('div', class_='list-game-app dotline-btn nofloat')
            if len(info) != 0:
                name = info[0].h4.a.text.strip()  # app name with surrounding whitespace removed
                if name == app_name:
                    download_num = BeautifulSoup(str(info[0]), 'html.parser')  # parse again
                    texts = download_num.find_all('span')  # find the download count
                    find_download_num = texts[3].text.replace('\xa0' * 8, '\n\n')[2:]  # strip the unwanted parts
                    print(name, find_download_num)
                    write_excel(name, find_download_num)  # write to the xlsx file
                else:
                    find_download_num1 = 'None'
                    print(app_name, find_download_num1)
                    write_excel(app_name, find_download_num1)
            else:
                print(app_name, 'None')
                write_excel(app_name, 'None')  # write to the xlsx file
        except Exception as e:
            # print('error: %s, retrying (attempt %s)' % (e, i))
            # print(url)
            if i != 3:
                # Pass the counter along so the retries stop after the third attempt
                App_download(url, app_name, i + 1)


    row = 1
    # Create a new Excel file
    file = xlsxwriter.Workbook('hw_applist.xlsx')
    # Create a new sheet
    sheet = file.add_worksheet()

    if __name__ == '__main__':
        path_file = "D:\\"
        Old_AppFlie = open(path_file + "App_name.txt").read()
        app_list = Old_AppFlie.split('\n')  # turn the string into a list
        # app_list = ['微信','wea11','支付宝','荔枝']
        for app_name in app_list:
            yyb_url = 'https://appstore.huawei.com/search/{app_name}'.format(app_name=app_name)
            App_download(yyb_url, app_name)
        file.close()

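I won't go through the Huawei and Baidu code in detail, since the principle is the same. If you're interested, you can build on it and add features such as scraping the app description or the app version.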

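Updated again on 2019.12.09. The reason: app names differ slightly from one app market to another, so to get fuzzy matching and improve the hit rate I made another update. Fuzzy matching would normally call for regular expressions via re, but given how unpredictable the names are across markets, and that a wrong download count is worse than no download count, I only added a small or condition to the code. Here it is:

Baidu Mobile Assistant app market code: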

    #!/usr/bin/env python
    # -*- coding: UTF-8 -*-
    __author__ = 'Mr.Li'

    import requests
    import xlsxwriter
    from bs4 import BeautifulSoup


    def write_excel(name, download, type_name=0, url=0):
        # The global variable row is the row number; 0-2 are the column indexes
        global row
        sheet.write(row, 0, row)
        sheet.write(row, 1, name)
        sheet.write(row, 2, download)
        row += 1


    name_wu = 'None'
    find_download_num_wu = '无'
    headers = {'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36'}


    def App_download(url, app_name, i=1):
        # Fetch the search page for app_name; i counts the attempts made so far
        try:
            req = requests.get(url=url, headers=headers)
            req.encoding = 'utf-8'
            # Keep the response body in a variable
            html_all = req.text
            div_bf = BeautifulSoup(html_all, 'html.parser')  # parse with BeautifulSoup
            div = div_bf.find_all('ul', class_='app-list')  # find the app-list element
            a_bf = BeautifulSoup(str(div), 'html.parser')  # parse again
            info = a_bf.find_all('div', class_='info')  # the 'info' elements hold the app name and download count
            if len(info) != 0:
                name = info[0].a.text.strip()  # app name with surrounding whitespace removed
                download_num = BeautifulSoup(str(info[0]), 'html.parser')  # parse again
                texts = download_num.find_all('span', class_='download-num')  # find the download count
                find_download_num = texts[0].text.replace('\xa0' * 8, '\n\n')  # strip the unwanted parts
                # Fuzzy match: accept when either name contains the other
                if app_name in name or name in app_name:
                    print(name, find_download_num)
                    write_excel(name, find_download_num)  # write to the xlsx file
                else:
                    find_download_num1 = 'None'
                    print(app_name, find_download_num1)
                    write_excel(app_name, find_download_num1)
            else:
                print(app_name, 'None')
                write_excel(app_name, 'None')
        except Exception as e:
            # print('error: %s, retrying (attempt %s)' % (e, i))
            # print(url)
            if i != 3:
                # Pass the counter along so the retries stop after the third attempt
                App_download(url, app_name, i + 1)


    row = 1
    # Create a new Excel file
    file = xlsxwriter.Workbook('baidu_applist.xlsx')
    # Create a new sheet
    sheet = file.add_worksheet()

    if __name__ == '__main__':
        path_file = "D:\\"
        Old_AppFlie = open(path_file + "App_name.txt").read()
        app_list = Old_AppFlie.split('\n')  # turn the string into a list
        # app_list = ['支付宝','as','荔枝']
        for app_name in app_list:
            yyb_url = 'https://shouji.baidu.com/s?wd={app_name}&data_type=app&f=header_all%40input'.format(app_name=app_name)
            App_download(yyb_url, app_name)
        file.close()
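The or condition in the listing above can be pulled out into a small helper to see how it behaves on its own. This is a sketch rather than the author's code: names_match is a hypothetical name, and the empty-string guard is my addition, since in Python '' in 'anything' is True and a blank line in App_name.txt would otherwise match whatever the first search result happens to be.

```python
def names_match(app_name, found_name):
    """Two-way containment check: True when either name contains the other.

    Hypothetical helper mirroring `app_name in name or name in app_name`,
    with an extra guard so that empty strings never match.
    """
    a = app_name.strip()
    b = found_name.strip()
    if not a or not b:
        return False  # '' is contained in every string, so reject it explicitly
    return a in b or b in a
```

For example, names_match('微信', '微信-WeChat') accepts the result, while names_match('支付宝', '微信') rejects it.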

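After this update the accuracy still isn't very high, but it improves by about half, and most importantly it won't scrape the wrong app. Accurate data is what we're after, haha!! If you need higher precision, consider regex matching and adapt the code yourself.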
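For anyone who wants to try the regex route, here is one possible sketch. It is an assumption on my part, not something taken from the scripts above: it accepts a market listing only when it is exactly the requested name, or the requested name followed by a separator and a subtitle. The function names and the separator set (-, —, :, :, |) are made up for the example and would need tuning against real listings.

```python
import re


def build_name_pattern(app_name):
    """Compile a pattern for 'name' or 'name<separator>subtitle' (hypothetical rule)."""
    core = re.escape(app_name.strip())  # treat the app name as literal text
    return re.compile(rf"^\s*{core}(?:\s*[-—::|]\s*.+)?\s*$")


def regex_match(app_name, found_name):
    """Return True when found_name is app_name, optionally followed by a subtitle."""
    if not app_name.strip():
        return False  # never match on an empty name
    return build_name_pattern(app_name).match(found_name) is not None
```

This is stricter than the two-way containment check: regex_match('荔枝', '荔枝-声音互动平台') is accepted, but '荔枝FM' and '企业微信' (when looking for '微信') are rejected, which fits the goal of preferring no download count over a wrong one.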