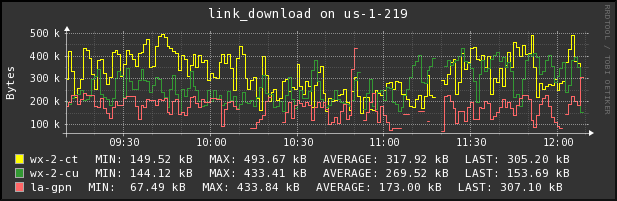

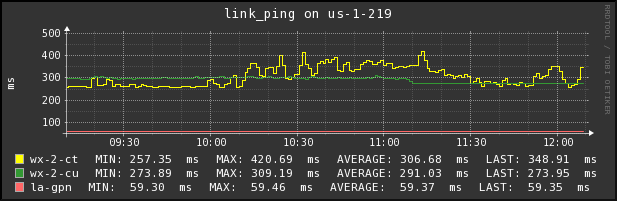

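The script measures download speed, packet loss, and ping latency separately. Downloads run sequentially, one link after another; packet loss and ping latency are measured with mtr, with one thread per link. Note that mtr runs as root, so if the check is executed as the nrpe user, sudo has to be configured for it.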
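As a rough illustration of the mtr step, the sketch below builds a sudo-prefixed command line and pulls the loss percentage and average latency out of the last hop of a report. It is written for Python 3 and is not the author's code: `build_mtr_command`, `parse_last_hop`, and the sample report are hypothetical, and `sudo -n` assumes a passwordless sudoers rule exists for the nrpe user.

```python
import re

# Matches one hop line of `mtr -n -r` output, e.g.
# "  2.|-- 10.0.0.1   0.0%    10    1.2   1.3   1.1   1.9   0.2"
HOP_RE = re.compile(r'\s*\S+\s+\S+\s+(\S+)%\s+\S+\s+\S+\s+(\S+)')

def build_mtr_command(ip, count, use_sudo=True):
    """Return the argv list for a report-mode mtr run (assumed path /usr/sbin/mtr)."""
    cmd = ['/usr/sbin/mtr', '-n', '-c', str(count), '-r', ip]
    # `sudo -n` fails instead of prompting when no passwordless rule exists.
    return ['sudo', '-n'] + cmd if use_sudo else cmd

def parse_last_hop(report):
    """Return (loss_percent, avg_ms) strings for the final hop, or ('U', 'U')."""
    lines = [l for l in report.splitlines() if l.strip()]
    if not lines:
        return ('U', 'U')
    m = HOP_RE.match(lines[-1])
    return (m.group(1), m.group(2)) if m else ('U', 'U')

# Made-up sample report, for illustration only.
SAMPLE = """HOST: probe                       Loss%   Snt   Last   Avg  Best  Wrst StDev
  1.|-- 192.0.2.1                  0.0%    10    0.4   0.5   0.3   0.9   0.2
  2.|-- 198.51.100.7              10.0%    10   35.1  36.2  34.8  40.3   1.7
"""

print(build_mtr_command('198.51.100.7', 10))
print(parse_last_hop(SAMPLE))
```

The `'U'` fallback mirrors what rrdtool accepts as an unknown value, so a failed probe still yields a well-formed update.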

The RRD files can then be rendered to PNG images.
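To make the PNG step a little more concrete, here is a small Python 3 sketch of the kind of argument list that `rrdtool graph` takes for one data source. It only builds and prints the arguments; the helper name `graph_args`, the colour, and the file and data source names are illustrative assumptions, and the list would still have to be handed to rrdtool to produce an image.

```python
def graph_args(rrdfile, ds, color='#339933', unit='ms'):
    """Build DEF/VDEF/LINE/GPRINT arguments for a single data source."""
    return [
        # Read the AVERAGE series of data source `ds` from the RRD file.
        'DEF:%s=%s:%s:AVERAGE' % (ds, rrdfile, ds),
        # Reduce the series to single values for the legend.
        'VDEF:%s_max=%s,MAXIMUM' % (ds, ds),
        'VDEF:%s_avg=%s,AVERAGE' % (ds, ds),
        # Draw the series as a thin line with a legend entry.
        'LINE1:%s%s:%s' % (ds, color, ds),
        # '%%' in the Python template yields the single '%' that starts
        # rrdtool's own number format.
        'GPRINT:%s_max:max %%7.2lf %s' % (ds, unit),
        'GPRINT:%s_avg:avg %%7.2lf %s' % (ds, unit),
    ]

for arg in graph_args('ping.rrd', 'wx_2_ct'):
    print(arg)
```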

#!/usr/bin/env python
# -*- coding: utf-8 -*-

import urllib, urllib2
import os, sys, socket
import datetime, time
import json, re, subprocess
import pycurl, cStringIO
import threading
import rrdtool
from optparse import OptionParser

# Results collected during a run: (name, loss, ping) and (name, speed) tuples.
MTR = []
SPEED = []

def get_m3u8_info(type, ip):
    # Fetch the live playlist and return the first .ts segment URL it lists.
    params = urllib.urlencode({'unit': 'newusa', 'channel': 'CCTV1', 'type': type})
    req = urllib2.urlopen('http://' + ip + '/approve/live?%s' % params)
    headers = req.info()
    rawdata = req.read()
    # Any Content-Encoding header is treated as gzip.
    if ('Content-Encoding' in headers and headers['Content-Encoding']) or ('content-encoding' in headers and headers['content-encoding']):
        import gzip
        import StringIO
        data = StringIO.StringIO(rawdata)
        gz = gzip.GzipFile(fileobj=data)
        rawdata = gz.read()
        gz.close()
    urls = []
    for line in rawdata.split('\n'):
        m = re.match('^http.*ts$', line)
        if m:
            urls.append(m.group(0))
    return urls[0]

def get_ts_file(name, url):
    # Download one segment and record curl's average download speed (bytes/s).
    buffer = cStringIO.StringIO()
    curl = pycurl.Curl()
    curl.setopt(pycurl.CONNECTTIMEOUT, 3)
    curl.setopt(pycurl.TIMEOUT, 15)
    curl.setopt(pycurl.NOSIGNAL, 1)
    curl.setopt(pycurl.URL, url)
    curl.setopt(pycurl.WRITEFUNCTION, buffer.write)
    try:
        curl.perform()
        download_speed = curl.getinfo(pycurl.SPEED_DOWNLOAD)
        SPEED.append((name, str(download_speed)))
        buffer.close()
        curl.close()
    except Exception, e:
        # 'U' is rrdtool's marker for an unknown value.
        SPEED.append((name, 'U'))
        buffer.close()
        curl.close()

class Quality(threading.Thread):
    # One thread per link: run mtr in report mode and parse the last hop.
    def __init__(self, name, ip, count):
        threading.Thread.__init__(self)
        self.name = name
        self.ip = ip
        self.count = count

    def run(self):
        try:
            p = subprocess.Popen(['/usr/sbin/mtr', '-n', '-c', self.count, '-r', self.ip], stdout=subprocess.PIPE, stderr=subprocess.PIPE)
            output, error = p.communicate()
            if output:
                # The output ends with a newline, so [-2] is the final hop line.
                info = output.split('\n')[-2]
                # Group 1 is the loss percentage, group 2 the average latency.
                m = re.match(r'\s+\S+\s+\S+\s+(\S+)%\s+\S+\s+\S+\s+(\S+).*', info)
                if m:
                    MTR.append((self.name, m.group(1), m.group(2)))
                else:
                    MTR.append((self.name, 'U', 'U'))
            if error:
                print "ret> ", p.returncode
                print "Error> error ", error.strip()
        except OSError as e:
            print "OSError > ", e.errno
            print "OSError > ", e.strerror
            print "OSError > ", e.filename
        except:
            print "Error > ", sys.exc_info()

def create_rrd(rrdfile, datasource):
    # One GAUGE data source per link, with a 120-second heartbeat.
    dss = [ 'DS:%s:GAUGE:120:0:U' % ds for ds in datasource.split(',') ]
    rra = [
        'RRA:AVERAGE:0.5:1:1440',
        'RRA:AVERAGE:0.5:15:672',
        'RRA:AVERAGE:0.5:60:744',
        'RRA:AVERAGE:0.5:1440:375',
        'RRA:MIN:0.5:1:1440',
        'RRA:MIN:0.5:15:672',
        'RRA:MIN:0.5:60:744',
        'RRA:MIN:0.5:1440:375',
        'RRA:MAX:0.5:1:1440',
        'RRA:MAX:0.5:15:672',
        'RRA:MAX:0.5:60:744',
        'RRA:MAX:0.5:1440:375',
        'RRA:LAST:0.5:1:1440',
        'RRA:LAST:0.5:15:672',
        'RRA:LAST:0.5:60:744',
        'RRA:LAST:0.5:1440:375',
    ]
    rrdtool.create(rrdfile, '--step', '60', '--start', '-1y', dss, rra)

def update_rrd(mode, datasource, timestamp):
    # Create the RRD files on first use, then store the collected values.
    if mode == 'download':
        rrd_file = 'download.rrd'
        if not os.path.exists(rrd_file):
            create_rrd(rrd_file, datasource)
        labels = []
        downloads = []
        for label, download in SPEED:
            labels.append(label)
            downloads.append(download)
        rrdtool.update(rrd_file, '--template', ':'.join(labels), 'N:'+':'.join(downloads))
    if mode == 'mtr':
        rrd_file_loss = 'loss.rrd'
        rrd_file_ping = 'ping.rrd'
        if not os.path.exists(rrd_file_loss):
            create_rrd(rrd_file_loss, datasource)
        if not os.path.exists(rrd_file_ping):
            create_rrd(rrd_file_ping, datasource)
        labels = []
        losses = []
        pings = []
        for label, loss, ping in MTR:
            labels.append(label)
            losses.append(loss)
            pings.append(ping)
        rrdtool.update(rrd_file_loss, '--template', ':'.join(labels), 'N:'+':'.join(losses))
        rrdtool.update(rrd_file_ping, '--template', ':'.join(labels), 'N:'+':'.join(pings))

def generate_graph_element(rrdfile, dss, unit):
    # Build the DEF, VDEF, LINE and GPRINT arguments for every data source.
    # Only five line colours are defined, so at most five data sources fit.
    colors = ['#FFFF00', '#339933', '#FF6666', '#0066CC', '#FFFFFF']
    comment = 'COMMENT: \n'
    defs = [ 'DEF:%s=%s:%s:AVERAGE' % (ds, rrdfile, ds) for ds in dss.split(',') ]
    vdefs_min = [ 'VDEF:%s_min=%s,MINIMUM' % (ds, ds) for ds in dss.split(',') ]
    vdefs_max = [ 'VDEF:%s_max=%s,MAXIMUM' % (ds, ds) for ds in dss.split(',') ]
    vdefs_avg = [ 'VDEF:%s_avg=%s,AVERAGE' % (ds, ds) for ds in dss.split(',') ]
    vdefs_last = [ 'VDEF:%s_last=%s,LAST' % (ds, ds) for ds in dss.split(',') ]
    vdefs = []
    for vdef in zip(vdefs_min, vdefs_max, vdefs_avg, vdefs_last):
        vdefs.extend(vdef)
    lines = [ 'LINE1:%s%s:%s' % (ds, colors[idx], ds) for idx, ds in enumerate(dss.split(',')) ]
    gprints_min = [ 'GPRINT:%s_min:MIN:%%7.2lf %%s%s' % (ds, unit) for ds in dss.split(',') ]
    gprints_max = [ 'GPRINT:%s_max:MAX:%%7.2lf %%s%s' % (ds, unit) for ds in dss.split(',') ]
    gprints_avg = [ 'GPRINT:%s_avg:AVERAGE:%%7.2lf %%s%s' % (ds, unit) for ds in dss.split(',') ]
    gprints_last = [ 'GPRINT:%s_last:LAST:%%7.2lf %%s%s' % (ds, unit) for ds in dss.split(',') ]
    gprints = []
    for gprint in zip(lines, gprints_min, gprints_max, gprints_avg, gprints_last):
        gprints.extend(gprint)
        # End the legend row of each data source with a line break.
        gprints.append(comment)
    return defs, vdefs, gprints

def graph_rrd(hostname, names, dss):
    # Render the last three hours of each requested RRD file to a PNG image.
    colors = [
        '-c', 'SHADEA#000000',
        '-c', 'SHADEB#111111',
        '-c', 'FRAME#AAAAAA',
        '-c', 'FONT#FFFFFF',
        '-c', 'ARROW#FFFFFF',
        '-c', 'AXIS#FFFFFF',
        '-c', 'BACK#333333',
        '-c', 'CANVAS#333333',
        '-c', 'MGRID#CCCCCC',
    ]
    xgrid = 'MINUTE:10:MINUTE:30:MINUTE:30:0:%H:%M'
    for name in names.split(','):
        if name == 'download':
            label = 'Bytes'
            title = 'link_%s on %s' % (name, hostname)
            rrdfile = name + '.rrd'
            imgfile = name + '.png'
            defs, vdefs, gprints = generate_graph_element(rrdfile, dss, 'B')
            rrdtool.graph(imgfile, '-t', title, '-v', label, '-w', '520', '--start', '-3h', '--step', '60', '--x-grid', xgrid, colors, defs, vdefs, gprints)
        if name == 'loss':
            label = '%'
            title = 'link_%s on %s' % (name, hostname)
            rrdfile = name + '.rrd'
            imgfile = name + '.png'
            defs, vdefs, gprints = generate_graph_element(rrdfile, dss, '%%')
            rrdtool.graph(imgfile, '-t', title, '-v', label, '-w', '520', '--start', '-3h', '--step', '60', '--x-grid', xgrid, colors, defs, vdefs, gprints)
        if name == 'ping':
            label = 'ms'
            title = 'link_%s on %s' % (name, hostname)
            rrdfile = name + '.rrd'
            imgfile = name + '.png'
            defs, vdefs, gprints = generate_graph_element(rrdfile, dss, 'ms')
            rrdtool.graph(imgfile, '-t', title, '-v', label, '-w', '520', '--start', '-3h', '--step', '60', '--x-grid', xgrid, colors, defs, vdefs, gprints)

if __name__ == '__main__':
    usage = '''%prog [options] arg1 arg2
    example:
    . %prog --mode=download --name=wx-2-ct,wx-2-cu --ip=111.111.111.111,222.222.222.222 --type=iptv
    . %prog --mode=mtr --name=wx-2-ct,wx-2-cu --ip=111.111.111.111,222.222.222.222 --count=50
    . %prog --mode=graph --name=download,loss,ping --ds=wx-2-ct,wx-2-cu
    '''
    parser = OptionParser(usage)
    parser.add_option('--mode', action='store', type='string', dest='mode', help='check mode')
    parser.add_option('--name', action='store', type='string', dest='name', help='name list of the network link')
    parser.add_option('--ip', action='store', type='string', dest='ip', help='ip address list of the network link')
    parser.add_option('--type', action='store', type='string', dest='type', help='data rate of the ts file')
    parser.add_option('--count', action='store', type='string', dest='count', help='number of pings mtr sends (passed to mtr -c)')
    parser.add_option('--ds', action='store', type='string', dest='ds', help='data source')
    (options, args) = parser.parse_args()
    hostname = socket.gethostname()
    timestamp = int(time.time())
    if options.mode == 'download':
        # Downloads run one after another so they do not compete for bandwidth.
        links = zip(options.name.split(','), options.ip.split(','))
        for link in links:
            url = get_m3u8_info(options.type, link[1])
            get_ts_file(link[0], url)
        update_rrd(options.mode, options.name, timestamp)
    if options.mode == 'mtr':
        # One mtr thread per link; wait for all of them before updating.
        links = zip(options.name.split(','), options.ip.split(','))
        threads = []
        for link in links:
            threads.append(Quality(link[0], link[1], options.count))
        for t in threads:
            t.start()
        for t in threads:
            t.join()
        update_rrd(options.mode, options.name, timestamp)
    if options.mode == 'graph':
        graph_rrd(hostname, options.name, options.ds)
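The RRA definitions in `create_rrd` are easier to read once converted into time spans. The Python 3 sketch below assumes the 60-second step the script passes via `--step` and works out the resolution and retention of each archive; `rra_span` is a hypothetical helper, not part of the script.

```python
STEP = 60  # seconds per primary data point, as passed via --step

def rra_span(rra, step=STEP):
    """Return (seconds per row, days retained) for an 'RRA:CF:xff:steps:rows' string."""
    _, _cf, _xff, steps, rows = rra.split(':')
    resolution = int(steps) * step
    return resolution, resolution * int(rows) / 86400.0

for rra in ('RRA:AVERAGE:0.5:1:1440', 'RRA:AVERAGE:0.5:15:672',
            'RRA:AVERAGE:0.5:60:744', 'RRA:AVERAGE:0.5:1440:375'):
    resolution, days = rra_span(rra)
    print('%-26s one row per %6d s, kept for %5.1f days' % (rra, resolution, days))
```

In other words, each consolidation function keeps one day of 1-minute data, one week of 15-minute data, 31 days of hourly data, and 375 days of daily data.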

Generating the JSON data: link_info.py

#!/usr/bin/env python
# -*- coding: utf-8 -*-

import os, sys
import re, json
import datetime, time
import subprocess, socket

def unit_convert(data):
    # Scale a bits-per-second value to a short human-readable string.
    # Each branch covers its lower boundary, so exact powers of 1000 are handled.
    if data < 10**3:
        return str(round(float(data), 2))
    elif data < 10**6:
        return str(round(float(data) / 10**3, 2)) + 'Kb'
    elif data < 10**9:
        return str(round(float(data) / 10**6, 2)) + 'Mb'
    elif data < 10**12:
        return str(round(float(data) / 10**9, 2)) + 'Gb'
    else:
        return str(round(float(data) / 10**12, 2)) + 'Tb'

def get_traffic_info():
    # Return (interface, received bytes, transmitted bytes) for every interface.
    proc_stat = '/proc/net/dev'
    traffic = []
    # Interface name, then the 16 numeric counters of a /proc/net/dev line.
    pattern = r'\s*(\S+):\s*' + r'(\d+)\s+' * 16
    with open(proc_stat, 'r') as f:
        for line in f:
            m = re.match(pattern, line)
            if m:
                # Group 2 is received bytes, group 10 is transmitted bytes.
                traffic.append((m.group(1), int(m.group(2)), int(m.group(10))))
    return traffic

def get_connection_info():
    # Count the TCP connections reported by netstat.
    command = 'netstat -n |grep ^tcp |wc -l'
    p = subprocess.Popen(command, shell=True, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    output, error = p.communicate()
    return int(output)

def get_current_link_info():
    # Look up the IP address that /etc/hosts currently maps the service name to.
    hostfile = '/etc/hosts'
    with open(hostfile, 'r') as f:
        for line in f:
            m = re.match(r'(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})\s+(\S+)', line)
            if m and m.group(2) == 'wx.service.live.tvmining.com':
                return m.group(1)
    return 'unknown'

def main():
    # Sample the interface counters twice, `counter` seconds apart.
    counter = 2
    first = get_traffic_info()
    time.sleep(counter)
    second = get_traffic_info()
    # Byte deltas are converted to bits per second.
    traffic = map(lambda x, y: (x[0], unit_convert(abs(x[1]-y[1])*8.0/counter), unit_convert(abs(x[2]-y[2])*8.0/counter)), first, second)
    # Fall back to 'unknown' if the p2p1 interface is not present.
    traffic_in = traffic_out = 'unknown'
    for name, receive, transmit in traffic:
        if name == 'p2p1':
            traffic_in, traffic_out = receive, transmit
    connection = get_connection_info()
    current_link = get_current_link_info()
    message = {}
    hostname = socket.gethostname()
    message[hostname] = {
        'in': traffic_in,
        'out': traffic_out,
        'connection': connection,
        'current_link': current_link,
    }
    with open('link_info.json', 'w') as f:
        json.dump(message, f)

if __name__ == '__main__':
    main()

# --data--

{"la-1-219": {"connection": 10068, "out": "2.56Mb", "current_link": "221.228.74.36", "in": "147.18Mb"}}
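The "in" and "out" figures in the sample above come from two readings of `/proc/net/dev` taken a fixed interval apart. The arithmetic can be sketched in Python 3 as follows; `parse_dev_line` and `bit_rate` are hypothetical names, and the counter lines are invented for illustration.

```python
def parse_dev_line(line):
    """Return (interface, rx_bytes, tx_bytes) from one /proc/net/dev data line."""
    name, _, counters = line.partition(':')
    fields = counters.split()
    # Field 0 is received bytes; field 8 is transmitted bytes.
    return name.strip(), int(fields[0]), int(fields[8])

def bit_rate(before, after, interval):
    """Average bits per second between two byte-counter readings."""
    return (after - before) * 8.0 / interval

# Invented sample lines, taken two seconds apart.
first = '  p2p1: 1000 10 0 0 0 0 0 0 5000 20 0 0 0 0 0 0'
second = '  p2p1: 3500 12 0 0 0 0 0 0 5250 21 0 0 0 0 0 0'

_, rx1, tx1 = parse_dev_line(first)
_, rx2, tx2 = parse_dev_line(second)
print(bit_rate(rx1, rx2, 2), bit_rate(tx1, tx2, 2))
```

Splitting on the colon and on whitespace avoids the long 16-group regular expression, at the cost of not validating that every field is numeric.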