For the non-high-availability installation (without ZooKeeper), see:

https://www.cnblogs.com/live41/p/15467263.html

* All commands in this article are run while logged in as root.

I. Hardware environment

Assume four machines with the following IP addresses and hostnames:

192.168.100.105 c1
192.168.100.110 c2
192.168.100.115 c3
192.168.100.120 c4

II. Software environment

Operating system: Ubuntu Server 18.04

JDK: 1.8.0

Hadoop: 3.3.0/3.3.1

* The ZooKeeper and Hadoop root directories are both placed under /home/

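III. Deployment plan

1. Component layout

* The layout below is the more sensible one, but the hands-on steps in this article were written earlier and do not follow it. Build a test cluster as described first, then adjust the layout once you are familiar with it.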

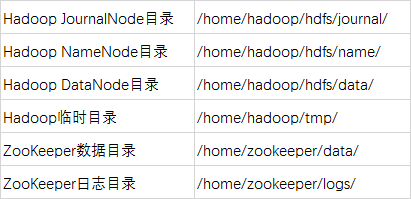

2. Directory layout

* The following directories must be created manually on every machine
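The directory list itself did not survive in this section. As a minimal sketch, the function below creates the four paths that the configuration files later in this article refer to (hadoop.tmp.dir, dfs.namenode.name.dir, dfs.datanode.data.dir and dfs.journalnode.edits.dir). The function name make_hadoop_dirs is hypothetical, and the path list is an assumption derived from those configuration values.

```shell
#!/usr/bin/env bash
# Create the data directories referenced by core-site.xml and hdfs-site.xml.
# Assumption: the path list is taken from the configuration values used later
# in this article, not from the original (missing) directory table.
make_hadoop_dirs() {
    local base="${1:-/home/hadoop}"   # Hadoop root used throughout this article
    mkdir -p "$base/tmp" \
             "$base/hdfs/name" \
             "$base/hdfs/data" \
             "$base/hdfs/journal"
}
```

Calling make_hadoop_dirs with no argument on each machine uses /home/hadoop; a different root can be passed as the first argument.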

IV. System runtime environment configuration

https://www.cnblogs.com/live41/p/15525826.html

V. Install and configure ZooKeeper

https://www.cnblogs.com/live41/p/15522363.html

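VI. Download the package and configure system environment variables

* Perform the following steps once on every machine

1. Download and extract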

https://downloads.apache.org/hadoop/common/

Extract it under /home/ so that the Hadoop root directory is /home/hadoop.
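The extraction step can be sketched as follows, assuming the release tarball has already been downloaded from the URL above. The file name hadoop-3.3.1.tar.gz is an assumption based on the version listed in section II, and extract_hadoop is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Unpack a Hadoop release tarball so that its contents land directly in the
# target directory (bin/, etc/, sbin/, ...).
# Assumption: the tarball contains one top-level directory such as hadoop-3.3.1/.
extract_hadoop() {
    local archive="$1"                 # e.g. /home/hadoop-3.3.1.tar.gz (assumed name)
    local target="${2:-/home/hadoop}"  # root directory expected by the rest of this article
    mkdir -p "$target"
    # --strip-components=1 drops the versioned top-level directory
    tar -xzf "$archive" -C "$target" --strip-components=1
}
```

For example, extract_hadoop /home/hadoop-3.3.1.tar.gz leaves the launcher at /home/hadoop/bin/hadoop, which matches the HADOOP_HOME value used in the next step.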

2. Configure environment variables

vim ~/.bashrc

Append the following at the end of the file:

export HADOOP_HOME=/home/hadoop
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
export HADOOP_COMMON_HOME=/home/hadoop
export HADOOP_HDFS_HOME=/home/hadoop
export HADOOP_MAPRED_HOME=/home/hadoop
export HADOOP_CONF_DIR=/home/hadoop/etc/hadoop
export HDFS_DATANODE_USER=root
export HDFS_NAMENODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
export YARN_HOME=/home/hadoop
export YARN_RESOURCEMANAGER_USER=root
export YARN_NODEMANAGER_USER=root

Reload the environment variables:

source ~/.bashrc
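To confirm that the variables took effect in the current shell, a small check like the following can help. It is only a sketch: missing_hadoop_vars is a hypothetical helper, and the list of names it checks is a subset of the exports above chosen for illustration.

```shell
#!/usr/bin/env bash
# Print the name of every expected variable that is unset or empty.
# Prints nothing when all of them are set.
missing_hadoop_vars() {
    local v val
    for v in HADOOP_HOME HADOOP_CONF_DIR HDFS_NAMENODE_USER HDFS_DATANODE_USER \
             YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER; do
        # Look up the variable whose name is stored in $v
        eval "val=\${$v:-}"
        [ -n "$val" ] || echo "$v"
    done
}
```

Running missing_hadoop_vars after source ~/.bashrc should print nothing; any name it prints is still missing from the environment.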

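VII. Install and configure Hadoop

* These steps do not need to be run on every machine. Run them on c1 only, then copy the configuration files to the other machines with scp.

1. Enter the configuration directory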

cd $HADOOP_HOME/etc/hadoop

2. Edit hadoop-env.sh

vim hadoop-env.sh

Add the following lines (leave any that already exist unchanged):

export JAVA_HOME=/usr/bin/jdk1.8.0
export HADOOP_OS_TYPE=${HADOOP_OS_TYPE:-$(uname -s)}

* Change JAVA_HOME to match the path where your JDK is installed.
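If the JDK location is not known, one way to find a candidate is to resolve the java binary on the PATH and strip the trailing bin/java. The helper name java_home_from_bin is hypothetical, and the result is only a starting point: on some JDK 8 layouts the resolved binary sits under jre/bin, in which case the parent directory of jre is the value to use.

```shell
#!/usr/bin/env bash
# Derive a JAVA_HOME candidate from the full path of the java executable.
java_home_from_bin() {
    # $1: resolved path of the java binary, e.g. /usr/lib/jvm/jdk1.8.0/bin/java
    dirname "$(dirname "$1")"
}
```

A typical call is java_home_from_bin "$(readlink -f "$(command -v java)")", which follows any symlinks before taking the two parent directories.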

3. Edit core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://ns6/</value>
    <!-- This value corresponds to the dfs.nameservices property in hdfs-site.xml -->
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/hadoop/tmp</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>c1:2181,c2:2181,c3:2181,c4:2181</value>
  </property>
  <property>
    <name>ha.zookeeper.session-timeout.ms</name>
    <value>1000</value>
  </property>
</configuration>

4. Edit hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///home/hadoop/hdfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///home/hadoop/hdfs/data</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
  <!-- core-site.xml refers to the value configured here -->
  <property>
    <name>dfs.nameservices</name>
    <value>ns6</value>
  </property>
  <property>
    <name>dfs.ha.namenodes.ns6</name>
    <value>nn1,nn2</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.ns6.nn1</name>
    <value>c1:9000</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.ns6.nn1</name>
    <value>c1:50070</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.ns6.nn2</name>
    <value>c2:9000</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.ns6.nn2</name>
    <value>c2:50070</value>
  </property>
  <!-- The JournalNode list. URL format:
       qjournal://host1:port1;host2:port2;host3:port3/journalId
       Using the nameservice as the journalId is recommended; the default port is 8485. -->
  <property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://c1:8485;c2:8485;c3:8485;c4:8485/ns6</value>
  </property>
  <property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/home/hadoop/hdfs/journal</value>
  </property>
  <property>
    <name>dfs.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.client.failover.proxy.provider.ns6</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>
      sshfence
      shell(/bin/true)
    </value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>~/.ssh/id_rsa</value>
    <!-- or /root/.ssh/id_rsa -->
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.connect-timeout</name>
    <value>30000</value>
  </property>
  <property>
    <name>ha.failover-controller.cli-check.rpc-timeout.ms</name>
    <value>60000</value>
  </property>
</configuration>
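Because fs.defaultFS in core-site.xml has to name the same nameservice as dfs.nameservices in hdfs-site.xml, a quick consistency check can catch a typo before the cluster is started. The sketch below uses plain text matching rather than a real XML parser, so it assumes each value directly follows its name element, as in the files above. The helper names get_prop and check_nameservice are hypothetical.

```shell
#!/usr/bin/env bash
# Read one property value from a Hadoop *-site.xml file.
# Assumption: <value> follows <name> directly (whitespace only in between).
get_prop() {
    # $1: XML file, $2: property name
    tr -d '\n' < "$1" \
        | grep -o "<name>$2</name>[[:space:]]*<value>[^<]*</value>" \
        | sed -e 's/.*<value>//' -e 's/<\/value>.*//'
}

# Compare fs.defaultFS (core-site.xml) with dfs.nameservices (hdfs-site.xml).
check_nameservice() {
    local conf_dir="$1" fs ns
    fs=$(get_prop "$conf_dir/core-site.xml" fs.defaultFS)
    ns=$(get_prop "$conf_dir/hdfs-site.xml" dfs.nameservices)
    # Strip the hdfs:// scheme and a trailing slash before comparing
    fs=${fs#hdfs://}
    fs=${fs%/}
    if [ "$fs" = "$ns" ]; then
        echo "OK: $ns"
    else
        echo "MISMATCH: fs.defaultFS=$fs dfs.nameservices=$ns"
        return 1
    fi
}
```

With the files from this article, check_nameservice /home/hadoop/etc/hadoop is expected to report ns6. Once Hadoop is on the PATH, hdfs getconf -confKey dfs.nameservices shows the value that Hadoop itself resolves.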

5. Edit mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobtracker.http.address</name>
    <value>c1:50030</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>c1:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>c1:19888</value>
  </property>
  <property>
    <name>mapred.job.tracker</name>
    <value>http://c1:9001</value>
  </property>
</configuration>

6. Edit yarn-site.xml

<configuration>
  <property>
    <name>yarn.resourcemanager.ha.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.cluster-id</name>
    <value>yn6</value>
    <!-- The cluster-id can be chosen freely -->
  </property>
  <property>
    <name>yarn.resourcemanager.ha.rm-ids</name>
    <value>rm1,rm2</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname.rm1</name>
    <value>c1</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname.rm2</name>
    <value>c2</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address.rm1</name>
    <value>c1:8088</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address.rm2</name>
    <value>c2:8088</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>86400</value>
  </property>
  <property>
    <name>yarn.resourcemanager.zk-address</name>
    <value>c1:2181,c2:2181,c3:2181,c4:2181</value>
  </property>
  <property>
    <name>yarn.resourcemanager.recovery.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.store.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
  </property>
  <property>
    <name>yarn.application.classpath</name>
    <value>/home/hadoop/etc/hadoop:/home/hadoop/share/hadoop/common/lib/*:/home/hadoop/share/hadoop/common/*:/home/hadoop/share/hadoop/hdfs:/home/hadoop/share/hadoop/hdfs/lib/*:/home/hadoop/share/hadoop/hdfs/*:/home/hadoop/share/hadoop/mapreduce/*:/home/hadoop/share/hadoop/yarn:/home/hadoop/share/hadoop/yarn/lib/*:/home/hadoop/share/hadoop/yarn/*</value>
  </property>
</configuration>

7. Configure workers

vim workers

Add the following content, one hostname per line:

c1
c2
c3
c4

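8. Sync the configuration files

Passwordless SSH login was configured earlier, so the files can be edited on c1 and then copied to the other machines with scp.

cd /home/hadoop/etc/hadoop
scp -r * c2:/home/hadoop/etc/hadoop
scp -r * c3:/home/hadoop/etc/hadoop
scp -r * c4:/home/hadoop/etc/hadoop

* The pattern is * rather than *.* because *.* only matches names that contain a dot and would skip the workers file.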
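The same copy can be written as a loop over the target hosts. This is a sketch under the assumptions of this article (hosts c2 to c4, passwordless SSH as root, identical paths on every machine); sync_conf is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Copy every file in the local Hadoop configuration directory to the same
# path on the other cluster machines.
sync_conf() {
    local conf_dir="${1:-/home/hadoop/etc/hadoop}" host
    for host in c2 c3 c4; do
        # The glob * also matches files without an extension, such as workers
        scp -r "$conf_dir"/* "$host:$conf_dir/" || return 1
    done
}
```

Calling sync_conf on c1 after each configuration change keeps the four machines identical; the loop stops at the first host that fails so the error is not buried in later output.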

VIII. Start and stop Hadoop

* Run on c1 only

1. Start the JournalNodes

hdfs --workers --daemon start journalnode

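The older form of the command is:

hadoop-daemons.sh start journalnode

The older command still works but prints a warning.

* There are two similarly named scripts: hadoop-daemon.sh and hadoop-daemons.sh

* The former (without the s) starts the JournalNode on the local machine only; the latter (with the s) starts it on all machines.

* Be careful not to mistype it!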
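Before formatting the NameNode, it is worth confirming that a JournalNode process is actually running on every machine. The sketch below assumes the four hosts used in this article and that jps is on the PATH of a non-interactive SSH session on each of them; check_journalnodes is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Report, per host, whether jps lists a JournalNode process.
check_journalnodes() {
    local host
    for host in c1 c2 c3 c4; do
        if ssh "$host" jps | grep -q JournalNode; then
            echo "$host: JournalNode running"
        else
            echo "$host: JournalNode NOT running"
        fi
    done
}
```

If a host is reported as not running, the JournalNode log under the Hadoop logs directory on that host is the first place to look.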

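2. Format the NameNode

hdfs namenode -format

The older form, hadoop namenode -format, is deprecated.

After formatting, copy the NameNode metadata to c2, because there are two NameNodes (c1 and c2):

scp -r /home/hadoop/hdfs/name/current/ c2:/home/hadoop/hdfs/name/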

3. Format zkfc

hdfs zkfc -formatZK

zkfc = ZKFailoverController = ZooKeeper Failover Controller

zkfc monitors the state of the NameNodes and performs automatic failover between them.

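4. Start HDFS and YARN

start-dfs.sh
start-yarn.sh

* This is the step that fails most often; some of the errors and their fixes are listed in the appendix below.

5. Check the processes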

jps
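Reading jps output by eye is error-prone on a machine that hosts many daemons. As a sketch, the helper below reads jps output on standard input and prints the expected daemon names that are missing. The helper name missing_daemons is hypothetical, and the list of expected names passed to it is an assumption based on the configuration in this article (c1 is a NameNode, ResourceManager, worker, JournalNode and ZooKeeper host).

```shell
#!/usr/bin/env bash
# Print each expected daemon name that does not appear in the jps output.
# stdin: output of jps; arguments: daemon names expected on this machine.
missing_daemons() {
    local out name
    out=$(cat)
    for name in "$@"; do
        # -w matches whole words, so NameNode does not match SecondaryNameNode
        echo "$out" | grep -qw "$name" || echo "$name"
    done
}
```

On c1 this could be used as jps | missing_daemons NameNode DataNode JournalNode DFSZKFailoverController ResourceManager NodeManager QuorumPeerMain, where empty output means everything expected is up.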

6. Check the node states

hdfs haadmin -getServiceState nn1
hdfs haadmin -getServiceState nn2
yarn rmadmin -getServiceState rm1
yarn rmadmin -getServiceState rm2

7. Stop Hadoop

stop-yarn.sh
stop-dfs.sh
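In a healthy HA pair exactly one NameNode reports active and the other standby. The sketch below wraps the haadmin calls above and returns a non-zero status when that is not the case; ha_summary is a hypothetical helper name, and the ids nn1 and nn2 come from hdfs-site.xml in this article.

```shell
#!/usr/bin/env bash
# Print the HA state of both NameNodes and succeed only if exactly one is active.
ha_summary() {
    local id state active=0
    for id in nn1 nn2; do
        state=$(hdfs haadmin -getServiceState "$id" 2>/dev/null)
        echo "$id: ${state:-unreachable}"
        if [ "$state" = "active" ]; then
            active=$((active + 1))
        fi
    done
    [ "$active" -eq 1 ]
}
```

The same pattern applies to the ResourceManagers by swapping in yarn rmadmin -getServiceState with rm1 and rm2.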

IX. Using Hadoop

* Run on c1 only

1. Web pages

2. Commands

https://www.cnblogs.com/xiaojianblogs/p/14281445.html
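As a quick end-to-end check that HDFS accepts reads and writes, a small round trip with hdfs dfs can be sketched as below. The helper name hdfs_smoke_test and the scratch path under /tmp in HDFS are hypothetical choices for illustration.

```shell
#!/usr/bin/env bash
# Write a small file to HDFS, read it back, then remove the scratch directory.
hdfs_smoke_test() {
    local dir="/tmp/smoke-$$" file status
    file=$(mktemp)
    echo "hello hadoop" > "$file"
    hdfs dfs -mkdir -p "$dir" &&
        hdfs dfs -put -f "$file" "$dir/hello.txt" &&
        hdfs dfs -cat "$dir/hello.txt"
    status=$?
    # Clean up in HDFS and locally regardless of the outcome above
    hdfs dfs -rm -r -skipTrash "$dir"
    rm -f "$file"
    return "$status"
}
```

Based on the addresses configured in hdfs-site.xml and yarn-site.xml above, the NameNode web pages should be reachable at http://c1:50070 and http://c2:50070, and the ResourceManager pages at http://c1:8088 and http://c2:8088.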

Appendix

1. Hadoop is not configured to start as root

Error messages:

Starting namenodes on [master]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [slave1]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

Solution:

(1) Edit start-dfs.sh and stop-dfs.sh and add the following at the top of each file:

#!/usr/bin/env bash
HDFS_DATANODE_USER=root
HADOOP_SECURE_DN_USER=root
HDFS_NAMENODE_USER=root
HDFS_SECONDARYNAMENODE_USER=root

(2) Edit start-yarn.sh and stop-yarn.sh and add the following at the top of each file:

#!/usr/bin/env bash
HADOOP_SECURE_DN_USER=root
YARN_RESOURCEMANAGER_USER=root
YARN_NODEMANAGER_USER=root

* You can make the changes on c1 first and then copy the files to the other machines with scp

2. I/O errors after reformatting

Error messages (several variants):

Incompatible clusterIDs in /home/hadoop/hdfs/data

Failed to add storage directory [DISK]file

Directory /home/hadoop/hdfs/journal/ns6 is in an inconsistent state: Can't format the storage directory because the current directory is not empty.

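Solution:

Before formatting, clear out the files inside the name, data, journal, logs and similar directories. Delete only the contents, not the directories themselves.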
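The cleanup can be sketched as a function that empties each directory while leaving the directory itself in place. The directory list is an assumption based on the paths configured in this article, and clear_hadoop_dirs is a hypothetical helper name. Running it permanently deletes all HDFS data on that machine, so it is only appropriate on a test cluster that is about to be reformatted.

```shell
#!/usr/bin/env bash
# Remove everything inside the Hadoop data directories but keep the directories.
# WARNING: this destroys all HDFS data stored on this machine.
clear_hadoop_dirs() {
    local base="${1:-/home/hadoop}" d
    for d in "$base/hdfs/name" "$base/hdfs/data" "$base/hdfs/journal" \
             "$base/tmp" "$base/logs"; do
        if [ -d "$d" ]; then
            # -mindepth 1 skips the directory itself, so only its contents go
            find "$d" -mindepth 1 -delete
        fi
    done
}
```

Stop all Hadoop daemons first, then run clear_hadoop_dirs on every machine before repeating the format steps in section VIII.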