Official Python documentation of the built-in functions: http://docs.python.org/library/functions.html

Original article: http://wiki.python.org/moin/PythonSpeed/PerformanceTips

A few functions:

sorted(array,key=lambda item:item[0],reverse=True)
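The call above returns a new list sorted by the first field of each element, largest first. Here is a small Python 3 sketch with a made-up list `pairs`:

```python
pairs = [(2, 'b'), (3, 'c'), (1, 'a')]

# key picks the value to compare (the first field); reverse=True sorts descending.
# sorted() builds a new list and leaves the input unchanged.
by_first_desc = sorted(pairs, key=lambda item: item[0], reverse=True)

print(by_first_desc)  # [(3, 'c'), (2, 'b'), (1, 'a')]
```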

Anonymous functions: lambda.

The syntax of lambda is: lambda [arg1[, arg2, arg3, ..., argn]]: expression

For example:

>>> add = lambda x,y : x + y

>>> add(1,2)

3

Next come filter, map, and reduce.

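1. filter(bool_func, seq): a variant of map() that works as a filter. It calls the Boolean function bool_func on each element of seq in turn and returns a sequence of the elements for which bool_func returns true.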

For example:

>>> filter(lambda x : x%2 == 0,[1,2,3,4,5])

[2, 4]

A pure-Python implementation of the built-in filter:

def filter(bool_func, seq):
    filtered_seq = []
    for eachItem in seq:
        if bool_func(eachItem):
            filtered_seq.append(eachItem)
    return filtered_seq
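In Python 3, filter() returns a lazy iterator rather than a list, so wrap it in list() to see the elements. A list comprehension with an if clause is the usual equivalent. Here is a small sketch. Passing None as the function keeps only the truthy items:

```python
numbers = [1, 2, 3, 4, 5]

# filter() yields only the elements for which the predicate returns true
evens = list(filter(lambda x: x % 2 == 0, numbers))

# The equivalent list comprehension
evens_lc = [x for x in numbers if x % 2 == 0]

# With None as the function, falsy items (0, '', None, ...) are dropped
truthy = list(filter(None, [0, 1, '', 'a', None]))

print(evens, evens_lc, truthy)  # [2, 4] [2, 4] [1, 'a']
```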

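2. map(func, seq1[, seq2...]): applies func to each element of the given sequence(s) and returns the results in a list. If func is None, it acts as the identity function. With several sequences, it returns a list of n-tuples, each holding the corresponding elements of every sequence.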

map(function, sequence[, sequence, ...]) -> list

Return a list of the results of applying the function to the items of the argument sequence(s). If more than one sequence is given, the function is called with an argument list consisting of the corresponding item of each sequence, substituting None for missing values when not all sequences have the same length. If the function is None, return a list of the items of the sequence (or a list of tuples if more than one sequence).

For example:

>>> map(lambda x : None,[1,2,3,4])

[None, None, None, None]

>>> map(lambda x : x * 2,[1,2,3,4])

[2, 4, 6, 8]

>>> map(lambda x : x * 2,[1,2,3,4,[5,6,7]])

[2, 4, 6, 8, [5, 6, 7, 5, 6, 7]]

A pure-Python implementation of the built-in map (single-sequence case only):

def map(func, seq):
    mapped_seq = []
    for eachItem in seq:
        mapped_seq.append(func(eachItem))
    return mapped_seq
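Python 3 changes two things here. First, map() returns an iterator. Second, with several sequences it stops at the shortest one instead of padding with None; itertools.zip_longest provides the padding behavior. Here is a small sketch:

```python
from itertools import zip_longest

# One sequence: apply the function to each item
doubled = list(map(lambda x: x * 2, [1, 2, 3, 4]))

# Two sequences: the function receives one item from each;
# in Python 3 iteration stops when the shorter sequence runs out
sums = list(map(lambda x, y: x + y, [1, 2, 3], [10, 20]))

# Emulating the Python 2 padding behavior: missing values become None
padded = list(zip_longest([1, 2, 3], [10, 20]))

print(doubled)  # [2, 4, 6, 8]
print(sums)     # [11, 22]
print(padded)   # [(1, 10), (2, 20), (3, None)]
```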

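3. reduce(func, seq[, init]): func is a binary function. reduce applies it to the elements of seq one pair at a time (the previous result and the next element), feeding each result into the next call, until the sequence is reduced to a single return value. If an initial value init is given, the first call combines init with the first element rather than combining the first two elements.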

reduce(function, sequence[, initial]) -> value

Apply a function of two arguments cumulatively to the items of a sequence, from left to right, so as to reduce the sequence to a single value. For example, reduce(lambda x, y: x+y, [1, 2, 3, 4, 5]) calculates ((((1+2)+3)+4)+5). If initial is present, it is placed before the items of the sequence in the calculation, and serves as a default when the sequence is empty.

For example:

>>> reduce(lambda x,y : x + y,[1,2,3,4])

10

>>> reduce(lambda x,y : x + y,[1,2,3,4],10)

20

A pure-Python implementation of reduce:

def reduce(bin_func, seq, initial=None):
    lseq = list(seq)
    if initial is None:
        res = lseq.pop(0)  # pop the first element
    else:
        res = initial
    for eachItem in lseq:
        res = bin_func(res, eachItem)
    return res
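In Python 3, reduce() is no longer a built-in; it lives in the functools module. The sketch below repeats the two calls shown above. It also covers the empty-sequence case, where the initial value serves as the default:

```python
from functools import reduce
import operator

total = reduce(lambda x, y: x + y, [1, 2, 3, 4])                # ((1+2)+3)+4
total_with_init = reduce(lambda x, y: x + y, [1, 2, 3, 4], 10)  # (((10+1)+2)+3)+4

# With an initial value, an empty sequence simply returns that value
empty_default = reduce(operator.add, [], 0)

print(total, total_with_init, empty_default)  # 10 20 0
```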

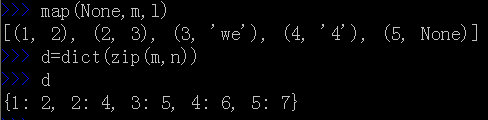

4. The zip function:

zip merges two lists into a list of tuples. Passing that list to dict() builds a dictionary:

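# When the lengths differ, the extra elements are ignored.

# map(None, a, b), by contrast, does not ignore them; it pads the shorter sequence with None (Python 2 only).

# Use zip to build a dictionary.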
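The comments above describe behavior along these lines. Here is a Python 3 sketch; the lists `keys` and `values` are made up for illustration:

```python
from itertools import zip_longest

keys = ['name', 'age', 'city']
values = ['chenzhe', 22]

# zip pairs items up and silently drops the extra item of the longer list
pairs = list(zip(keys, values))

# zip_longest keeps the extra item and fills the gap with None
# (what map(None, keys, values) did in Python 2)
padded = list(zip_longest(keys, values))

# zip is a convenient way to build a dictionary from two parallel lists
person = dict(zip(keys, values))

print(pairs)   # [('name', 'chenzhe'), ('age', 22)]
print(padded)  # [('name', 'chenzhe'), ('age', 22), ('city', None)]
print(person)  # {'name': 'chenzhe', 'age': 22}
```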

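5. When defining a function, *args gives access to the positional arguments as a tuple, and **args (conventionally named **kwargs) gives access to the keyword arguments as a dictionary: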

def showArgs(*args):
    print args

showArgs(1,2,3,4)

def showArgsDict(**args):
    print args

showArgsDict(name = 'chenzhe',age=22)

Output:

(1, 2, 3, 4)

{'age': 22, 'name': 'chenzhe'}

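6. Argument unpacking is the counterpart of argument collecting. The function is defined with ordinary parameters, but the caller passes a list or dictionary that is unpacked into them: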

def showArgsUnpacking(a,b,c,d):
    print a,b,c,d

args = [1,2,3,4]
showArgsUnpacking(*args)       # positional: a=1, b=2, c=3, d=4

argsDict = {'a':1,'b':2,'c':3,'d':4}
showArgsUnpacking(**argsDict)  # keyword: matched to the parameters by name
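Here is a Python 3 version of sections 5 and 6 side by side. The function names are illustrative, and the results are returned instead of printed so they can be inspected:

```python
def show_args(*args, **kwargs):
    # args collects extra positional arguments into a tuple,
    # kwargs collects extra keyword arguments into a dict
    return args, kwargs

def show_args_unpacking(a, b, c, d):
    return a, b, c, d

collected = show_args(1, 2, 3, 4, name='chenzhe', age=22)

# * unpacks a sequence into positional arguments,
# ** unpacks a mapping into keyword arguments matched by name
from_list = show_args_unpacking(*[1, 2, 3, 4])
from_dict = show_args_unpacking(**{'a': 1, 'b': 2, 'c': 3, 'd': 4})

print(collected)             # ((1, 2, 3, 4), {'name': 'chenzhe', 'age': 22})
print(from_list, from_dict)  # (1, 2, 3, 4) (1, 2, 3, 4)
```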

7. The C ternary operator A = X ? Y : Z is written in Python as A = Y if X else Z.

If the condition X is true, A = Y; otherwise, A = Z.
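Here is a small illustration; sign_label is a made-up function:

```python
def sign_label(n):
    # Equivalent to C's:  label = (n >= 0) ? "non-negative" : "negative";
    return "non-negative" if n >= 0 else "negative"

print(sign_label(5), sign_label(-3))  # non-negative negative
```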

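8. Python has augmented assignments such as +=, but no ++ or -- operators. The loop below, for example, walks a list by repeatedly splitting off its first element: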

L = [1, 2, 3, 4]

while L:
    front, L = L[0], L[1:]
    print front, L

>>>

1 [2, 3, 4]

2 [3, 4]

3 [4]

4 []

8. Redirecting output to a file. Opening a file with mode 'a' (append) keeps its existing contents, and anything newly written is added at the end.

import sys

temp = sys.stdout                  # keep the original stream for later use
outputFile = open('out.txt','a')
sys.stdout = outputFile            # redirect: ordinary output now goes to out.txt
sys.stdout.write('just a test')
sys.stdout = temp                  # restore the output stream
print >> outputFile,'\nchanged for a little while\tmake'
outputFile.close()

print >> sys.stderr,'use!\n'
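In Python 3, print is a function, and print(..., file=f) replaces print >> f, .... In addition, contextlib.redirect_stdout temporarily rebinds sys.stdout and restores it automatically. The sketch below assumes it is fine to write to a temporary file; the file name is made up:

```python
import contextlib
import os
import sys
import tempfile

path = os.path.join(tempfile.mkdtemp(), 'out.txt')

# Mode 'a' appends: existing contents are kept, new text goes at the end
with open(path, 'a') as output_file:
    # Everything printed inside this block goes to the file, not the console
    with contextlib.redirect_stdout(output_file):
        print('just a test')
    # sys.stdout is restored here; file= targets one stream explicitly
    print('changed for a little while', file=output_file)

print('use!', file=sys.stderr)

with open(path) as f:
    contents = f.read()
print(contents)
```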

Opening a file with mode 'r' reads it:

testFile = open('cainiao.txt','r')

testStr = testFile.readline()

print testStr

testStr = testFile.read()

print testStr

testFile.close()

9. The pickle module stores Python objects directly in a file and restores them into memory later, when they are needed.

testFile = open('pickle.txt','w')

#and import pickle

import pickle

testDict = {'name':'Chen Zhe','gender':'male'}

pickle.dump(testDict,testFile)

testFile.close()

testFile = open('pickle.txt','r')

print pickle.load(testFile)

testFile.close()
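In Python 3, pickle produces bytes, so the file must be opened in binary mode ('wb' and 'rb'). The text modes 'w' and 'r' used above work only in Python 2. Here is a sketch using a temporary file:

```python
import os
import pickle
import tempfile

path = os.path.join(tempfile.mkdtemp(), 'pickle.bin')
test_dict = {'name': 'Chen Zhe', 'gender': 'male'}

# Serialize the dictionary into the file
with open(path, 'wb') as f:
    pickle.dump(test_dict, f)

# Restore it later: the result is an equal, but distinct, object
with open(path, 'rb') as f:
    restored = pickle.load(f)

print(restored == test_dict, restored is test_dict)  # True False
```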

Binary mode:

testFile = open('cainiao.txt','wb')

#where wb means write and in binary

import struct

bytes = struct.pack('>i4sh',100,'string',250)

testFile.write(bytes)

testFile.close()

Reading the binary file back:

testFile = open('cainiao.txt','rb')

data = testFile.read()

values = struct.unpack('>i4sh',data)

print values

testFile.close()
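The format '>i4sh' means big-endian with no padding: a 4-byte int, a 4-byte string, and a 2-byte short. As a result, 'string' is truncated to its first four bytes. Here is a Python 3 sketch without a file; in Python 3 the 's' field requires bytes:

```python
import struct

# '>' big-endian; 'i' = 4-byte int, '4s' = 4-byte string, 'h' = 2-byte short
packed = struct.pack('>i4sh', 100, b'string', 250)
values = struct.unpack('>i4sh', packed)

print(len(packed), struct.calcsize('>i4sh'))  # 10 10
print(values)                                 # (100, b'stri', 250)
```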

10. Dictionary operations

I. Creating dictionaries

Method ①:

>>> dict1 = {}

>>> dict2 = {'name': 'earth', 'port': 80}

>>> dict1, dict2

({}, {'port': 80, 'name': 'earth'})

Method ②: the dict() factory function, available since Python 2.2:

>>> fdict = dict((['x', 1], ['y', 2]))

>>> fdict

{'y': 2, 'x': 1}

Method ③:

Since Python 2.3, the convenient built-in method fromkeys() creates a "default" dictionary whose elements all have the same value (None if no value is given):

>>> ddict = {}.fromkeys(('x', 'y'), -1)

>>> ddict

{'y': -1, 'x': -1}

>>>

>>> edict = {}.fromkeys(('foo', 'bar'))

>>> edict

{'foo': None, 'bar': None}

II. Accessing values in a dictionary

① To traverse a dictionary (usually by key), simply loop over its keys, like this:

>>> dict2 = {'name': 'earth', 'port': 80}

>>>

>>> for key in dict2.keys():
...     print 'key=%s, value=%s' % (key, dict2[key])

...

key=name, value=earth

key=port, value=80

② Since Python 2.2, you can iterate over the dictionary itself in a for loop:

>>> dict2 = {'name': 'earth', 'port': 80}

>>>

>>> for key in dict2:
...     print 'key=%s, value=%s' % (key, dict2[key])

...

key=name, value=earth

key=port, value=80

To get the value of one element, use the familiar syntax of the key in square brackets:

>>> dict2['name']

'earth'

>>>

>>> print 'host %s is running on port %d' % \

... (dict2['name'], dict2['port'])

host earth is running on port 80

③ Membership tests: the has_key() method and the in and not in operators all return Boolean values:

>>> 'server' in dict2 # or dict2.has_key('server')

False

>>> 'name' in dict2 # or dict2.has_key('name')

True

>>> dict2['name']

'earth'

An example that mixes numbers and strings as keys in one dictionary:

>>> dict3 = {}

>>> dict3[1] = 'abc'

>>> dict3['1'] = 3.14159

>>> dict3[3.2] = 'xyz'

>>> dict3

{3.2: 'xyz', 1: 'abc', '1': 3.14159}

III. Updating a dictionary

Assigning to an existing key overwrites its value. In the example above, dict2['name'] is 'earth'; after dict2['name'] = 'abc', it becomes 'abc'.

IV. Deleting dictionary entries and dictionaries

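# Each line below is a separate example:
del dict2['name']  # delete the entry whose key is 'name'
dict2.clear()      # delete all entries in dict2
del dict2          # delete the whole dict2 dictionary (the name no longer exists afterwards)
dict2.pop('name')  # delete the entry whose key is 'name' and return its value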

>>> dict2 = {'name': 'earth', 'port': 80}

>>> dict2.keys()

['port', 'name']

>>>

>>> dict2.values()

[80, 'earth']

>>>

>>> dict2.items()

[('port', 80), ('name', 'earth')]

>>>

>>> for eachKey in dict2.keys():

... print 'dict2 key', eachKey, 'has value', dict2[eachKey]

...

dict2 key port has value 80

dict2 key name has value earth

The update() method adds the contents of one dictionary to another:

{'server': 'http', 'port': 80, 'host': 'venus'}

>>> dict3.clear()

>>> dict3

{}
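Here is a minimal illustration of update() with made-up dictionaries. Keys from the argument are added, and keys present in both take the argument's value:

```python
dict_a = {'host': 'earth', 'port': 80}
dict_b = {'host': 'venus', 'server': 'http'}

dict_a.update(dict_b)  # add dict_b's pairs to dict_a; 'host' is overwritten
print(dict_a)          # {'host': 'venus', 'port': 80, 'server': 'http'}

dict_b.clear()         # remove every entry from dict_b
print(dict_b)          # {}
```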

V. Functions related to mapping types

>>> dict(x=1, y=2)

{'y': 2, 'x': 1}

>>> dict8 = dict(x=1, y=2)

>>> dict8

{'y': 2, 'x': 1}

>>> dict9 = dict(**dict8)

>>> dict9

{'y': 2, 'x': 1}

>>> dict9 = dict8.copy() # a simpler way to make the same shallow copy

Built-in dictionary methods:

Keys: dict9.keys()

Values: dict9.values()

All items: dict9.items()

Value for a given key: dict9.get('y')

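Table 7.2 Dictionary type methods

Method name: Operation

dict.clear(): removes all elements from the dictionary.

dict.copy(): returns a (shallow) copy of the dictionary.

dict.fromkeys(seq, val=None): creates and returns a new dictionary that uses the elements of seq as keys and val as the initial value of every key (None if val is not given).

dict.get(key, default=None): returns the value for key in dict. If the key is not in the dictionary, it returns default (note that default itself defaults to None).

dict.has_key(key): returns True if key is in the dictionary, otherwise False. Since Python 2.2 introduced the in and not in operators, this method is largely obsolete, but it still provides a working interface (it was removed in Python 3).

dict.items(): returns a list of the dictionary's (key, value) tuples.

dict.keys(): returns a list of the dictionary's keys.

dict.iteritems(), dict.iterkeys(), dict.itervalues(): like their non-iterator counterparts, except that they return an iterator instead of a list.

dict.pop(key[, default]): similar to get(). If key is in the dictionary, it removes the entry and returns dict[key]; if key is absent and no default is given, it raises KeyError.

dict.setdefault(key, default=None): similar to get(), but if key is not in the dictionary, it assigns dict[key] = default.

dict.update(dict2): adds the key-value pairs of dict2 to dict.

dict.values(): returns a list of all the values in the dictionary.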
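A short Python 3 sketch of get(), setdefault(), and pop() from the table, using a made-up dictionary:

```python
d = {'x': 1}

missing = d.get('y')                  # None: key absent, default is None
with_default = d.get('y', 0)          # 0: explicit default
first_set = d.setdefault('y', 5)      # 5: 'y' was absent, so d['y'] = 5 is stored
second_set = d.setdefault('y', 9)     # 5: 'y' already exists, value unchanged
popped = d.pop('x')                   # 1: removed and returned
popped_default = d.pop('x', 'gone')   # 'gone': key absent, default returned

print(d)  # {'y': 5}
```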


VI. Set types

① Create sets with the factory functions set() and frozenset():

>>> s = set('cheeseshop')

>>> s

set(['c', 'e', 'h', 'o', 'p', 's'])

>>> t = frozenset('bookshop')

>>> t

frozenset(['b', 'h', 'k', 'o', 'p', 's'])

>>> type(s)

<type 'set'>

>>> type(t)

<type 'frozenset'>

② Updating a set

Add and remove members with the built-in set methods and operators:

>>> s.add('z')

>>> s

set(['c', 'e', 'h', 'o', 'p', 's', 'z'])

>>> s.update('pypi')

>>> s

set(['c', 'e', 'i', 'h', 'o', 'p', 's', 'y', 'z'])

>>> s.remove('z')

>>> s

set(['c', 'e', 'i', 'h', 'o', 'p', 's', 'y'])

>>> s -= set('pypi')

>>> s

set(['c', 'e', 'h', 'o', 's'])

③ Deleting a set

del s

④ Membership (in, not in)

>>> s = set('cheeseshop')

>>> t = frozenset('bookshop')

>>> 'k' in s

False

>>> 'k' in t

True

>>> 'c' not in t

True

⑤ Set equality / inequality

>>> s == t

False

>>> s != t

True

>>> u = frozenset(s)

>>> s == u

True

>>> set('posh') == set('shop')

True

print testDict['name']

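print testDict.get('name') # same as the above

# check whether the dictionary contains a given key
print testDict.has_key('gender') # returns True if it does

# all the keys
print testDict.keys()

# all the values
print testDict.values()

# all the (key, value) tuples
print testDict.items()

# add (key, value) pairs to the dictionary
updateDict = {'skill':'JavaScript'}
testDict.update(updateDict)
print testDict

# build dictionaries with the dict constructor
# note that the keys are not quoted
print dict(name='gaoshou')
print dict([('name', 'Chen Zhe'), ('gender', 'male')])

# set every value to 0 for now
# useful when the keys are known but the values are decided later
print dict.fromkeys(['a', 'b'], 0)

Assigning to a key that does not yet exist extends the dictionary. Keys need not be strings; any immutable type can be used.

Dictionaries are implemented internally as hash tables.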
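Because dictionaries are hash tables, a key must be hashable. The quick Python 3 sketch below shows that tuples and floats work as keys, while a list raises TypeError:

```python
d = {}
d[(1, 2)] = 'tuple key'   # a tuple of immutable values is hashable
d[3.2] = 'float key'

error = None
try:
    d[[1, 2]] = 'list key'  # lists are mutable, hence unhashable
except TypeError as exc:
    error = exc

print(d)                 # {(1, 2): 'tuple key', 3.2: 'float key'}
print(type(error).__name__)  # TypeError
```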

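11. List operations

testList = [1,2,3,4,5]
print testList
print testList * 2

The code above multiplies a list. Note that this does not multiply each element by two; the whole list is repeated twice and joined into a new list.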

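# the number of deleted and inserted elements need not match
testList[0:2] = [1,0,0,0]
print testList
# append an element at the end of the list
testList.append(u'菜鸟')
print testList
# sort the list
testList.sort()
# extend the list
testList.extend([7,8,9,10])
print testList
# reverse the list
testList.reverse()
print testList
# pop the last item
testList.pop()
print testList
# pop the first item
popedItem = testList.pop(0)
print testList
print popedItem
# delete part of the list
del testList[0:4]
print testList

12. Operators

Besides ordinary division, numbers support floor division "//" (division that discards the fractional part by rounding down), the remainder operator "%", and the power operator "**". Bitwise operators: left shift "<<", right shift ">>", bitwise OR "|", and bitwise AND "&".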

print 50.0/3
print 50.0//3 # floor division
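Here is a Python 3 sketch of these operators. In Python 3, / always performs true division; in Python 2, / between two ints floors. One caveat: // rounds toward negative infinity, so for negative operands it does not simply drop the fractional part:

```python
quotient = 50.0 / 3
floored = 50.0 // 3   # 16.0
neg_floor = -7 // 2   # -4: rounds down, not toward zero
neg_mod = -7 % 2      # 1: the remainder takes the sign of the divisor
power = 2 ** 10       # 1024
bits = (1 << 3, 16 >> 2, 0b1100 | 0b0011, 0b1100 & 0b0110)  # (8, 4, 15, 4)

print(quotient, floored, neg_floor, neg_mod, power, bits)
```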

Python has built-in support for complex numbers; the imaginary part is written with the suffix j.

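# complex numbers
print 2+3j
print 2+3j*3

Use Decimal for exact decimal arithmetic with a controlled number of decimal places:

# decimal
from decimal import Decimal
print Decimal('1.0') + Decimal('1.2')

Other number-related modules include math (mathematical functions) and random (random numbers).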
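The motivation for Decimal is easiest to see next to binary floats. Here is a small Python 3 sketch, with a complex multiplication added for completeness. Decimals are built from strings so that the exact decimal value is kept:

```python
from decimal import Decimal

float_sum = 0.1 + 0.2
decimal_sum = Decimal('0.1') + Decimal('0.2')

print(float_sum == 0.3)                 # False: 0.1 has no exact binary form
print(decimal_sum == Decimal('0.3'))    # True
print(Decimal('1.0') + Decimal('1.2'))  # 2.2
print((2 + 3j) * (1 - 1j))              # (5+1j)
```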

"Equal" versus "is" (== and is)

# == tests if the two variables refer to equal objects
L = [1,2,3]
M = [1,2,3]
print L == M
print L is M # whether they are the same object, i.e. share the same memory
# is tests if two variables refer to the same object

>>>

True

False
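One more step makes the difference concrete. When two names refer to the same object, a change made through one name is visible through the other. Here is a Python 3 sketch:

```python
L = [1, 2, 3]
M = [1, 2, 3]
N = L  # N is a second name for the same list object

print(L == M, L is M)  # True False: equal contents, different objects
print(L is N)          # True: the same object
N.append(4)
print(L, M)            # [1, 2, 3, 4] [1, 2, 3]: only the shared object changed
```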

Suppose, for example, you have a list of tuples that you want to sort by the n-th field of each tuple. The following function will do that.

def sortby(somelist, n):
    nlist = [(x[n], x) for x in somelist]
    nlist.sort()
    return [val for (key, val) in nlist]

Matching the behavior of the current list sort method (sorting in place) is easily achieved as well:

def sortby_inplace(somelist, n):
    somelist[:] = [(x[n], x) for x in somelist]
    somelist.sort()
    somelist[:] = [val for (key, val) in somelist]
    return

>>> somelist = [(1, 2, 'def'), (2, -4, 'ghi'), (3, 6, 'abc')]

>>> somelist.sort()

>>> somelist

[(1, 2, 'def'), (2, -4, 'ghi'), (3, 6, 'abc')]

>>> nlist = sortby(somelist, 2)

>>> sortby_inplace(somelist, 2)

>>> nlist == somelist

True

>>> nlist = sortby(somelist, 1)

>>> sortby_inplace(somelist, 1)

>>> nlist == somelist

True

String concatenation. Avoid this:

s = ""
for substring in list:
    s += substring

Use s = "".join(list) instead. The former is a very common and catastrophic mistake when building large strings. Similarly, if you are generating bits of a string sequentially instead of:

s = ""
for x in list:
    s += some_function(x)

use

slist = [some_function(elt) for elt in list]

s = "".join(slist)

Avoid:

out = "<html>" + head + prologue + query + tail + "</html>"

Instead, use

out = "<html>%s%s%s%s</html>" % (head, prologue, query, tail)

Even better, for readability (this has nothing to do with efficiency other than yours as a programmer), use dictionary substitution:

out = "<html>%(head)s%(prologue)s%(query)s%(tail)s</html>" % locals()

These last two are going to be much faster, especially when piled up over many CGI script executions, and easier to modify to boot. In addition, the slow way of doing things got slower in Python 2.0 with the addition of rich comparisons to the language. It now takes the Python virtual machine a lot longer to figure out how to concatenate two strings. (Don't forget that Python does all method lookup at runtime.)
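Here is a Python 3 sketch of both recommendations. some_function is a hypothetical helper standing in for whatever produces each piece. str.format is used as the modern counterpart of the % formatting shown above:

```python
def some_function(x):
    # Hypothetical helper: produces one piece of the final string
    return str(x * x)

items = range(5)

# Build all the pieces first, then join them in a single pass
s = "".join([some_function(elt) for elt in items])

head, prologue, query, tail = "H", "P", "Q", "T"
# Named fields keep the template readable
out = "<html>{head}{prologue}{query}{tail}</html>".format(
    head=head, prologue=prologue, query=query, tail=tail)

print(s)    # 014916
print(out)  # <html>HPQT</html>
```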

Loops

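Speaking of efficiency, here is a word on the difference between range() and xrange() (Python 2). Both are commonly used in loops and seem to behave the same, but they differ. range() returns a list, while xrange() returns an xrange object, which uses far less memory. If you only need the numbers for a loop, xrange() is the better choice. Small programs will not notice the difference, but it is a good habit to form.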

Python supports a couple of looping constructs. The for statement is most commonly used. It loops over the elements of a sequence, assigning each to the loop variable. If the body of your loop is simple, the interpreter overhead of the for loop itself can be a substantial amount of the overhead. This is where the map function is handy. You can think of map as a for moved into C code. The only restriction is that the "loop body" of map must be a function call. Besides the syntactic benefit of list comprehensions, they are often as fast or faster than equivalent use of map.

Here's a straightforward example. Instead of looping over a list of words and converting them to upper case:

newlist = []
for word in oldlist:
    newlist.append(word.upper()) # word.upper() returns an upper-case copy; word itself is unchanged

you can use map to push the loop from the interpreter into compiled C code:

newlist = map(str.upper, oldlist) # the function comes first, then the sequence whose items it is applied to

List comprehensions were added to Python in version 2.0 as well. They provide a syntactically more compact and more efficient way of writing the above for loop:

newlist = [s.upper() for s in oldlist]

Generator expressions were added to Python in version 2.4. They function more-or-less like list comprehensions or map but avoid the overhead of generating the entire list at once. Instead, they return a generator object which can be iterated over bit-by-bit:

newlist = (s.upper() for s in oldlist)

Which method is appropriate will depend on what version of Python you're using and the characteristics of the data you are manipulating.
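The four variants side by side in Python 3, with a made-up word list. They all produce the same result, but the generator does no work until something iterates over it:

```python
oldlist = ['spam', 'eggs', 'ham']

# 1. Explicit for loop
newlist_loop = []
for word in oldlist:
    newlist_loop.append(word.upper())

# 2. map (an iterator in Python 3, so wrap it in list())
newlist_map = list(map(str.upper, oldlist))

# 3. List comprehension
newlist_lc = [s.upper() for s in oldlist]

# 4. Generator expression: items are produced lazily, one at a time
newlist_gen = (s.upper() for s in oldlist)

print(newlist_loop == newlist_map == newlist_lc == list(newlist_gen))  # True
```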

Guido van Rossum wrote a much more detailed (and succinct) examination of loop optimization that is definitely worth reading.

Avoiding dots...

Suppose you can't use map or a list comprehension? You may be stuck with the for loop. The for loop example has another inefficiency. Both newlist.append and word.upper are function references that are reevaluated each time through the loop. The original loop can be replaced with:

upper = str.upper
newlist = [] # cut down on the dots
append = newlist.append
for word in oldlist:
    append(upper(word))

This technique should be used with caution. It gets more difficult to maintain if the loop is large. Unless you are intimately familiar with that piece of code you will find yourself scanning up to check the definitions of append and upper.

Local Variables

The final speedup available to us for the non-map version of the for loop is to use local variables wherever possible. If the above loop is cast as a function, append and upper become local variables. Python accesses local variables much more efficiently than global variables.

def func():
    upper = str.upper # inside a function, all of these names are local variables
    newlist = []
    append = newlist.append
    for word in oldlist:
        append(upper(word))
    return newlist

At the time I originally wrote this I was using a 100MHz Pentium running BSDI. I got the following times for converting the list of words in /usr/share/dict/words (38,470 words at that time) to upper case:

Version                     Time (seconds)
Basic loop                  3.47
Eliminate dots              2.45
Local variable & no dots    1.79
Using map function          0.54
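To repeat a comparison like this on a current machine, timeit can time each variant. Here is a Python 3 sketch. The word list is synthetic and the function names are hypothetical. Absolute numbers will differ greatly from the 100 MHz figures above, and the relative ordering may also shift between interpreter versions:

```python
import timeit

oldlist = ['word%d' % i for i in range(10000)]  # synthetic data

def basic_loop():
    newlist = []
    for word in oldlist:
        newlist.append(word.upper())
    return newlist

def local_no_dots():
    upper = str.upper          # hoist the attribute lookups out of the loop
    newlist = []
    append = newlist.append
    for word in oldlist:
        append(upper(word))
    return newlist

def using_map():
    return list(map(str.upper, oldlist))

# The variants must agree before their timings mean anything
assert basic_loop() == local_no_dots() == using_map()

for fn in (basic_loop, local_no_dots, using_map):
    seconds = timeit.timeit(fn, number=20)
    print('%-15s %.4f s' % (fn.__name__, seconds))
```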

Initializing Dictionary Elements

Suppose you are building a dictionary of word frequencies and you've already broken your text up into a list of words. You might execute something like:

wdict = {}
for word in words:
    if word not in wdict:
        wdict[word] = 0
    wdict[word] += 1

Except for the first time, each time a word is seen the if statement's test fails. If you are counting a large number of words, many will probably occur multiple times. In a situation where the initialization of a value is only going to occur once and the augmentation of that value will occur many times it is cheaper to use a try statement:

wdict = {}
for word in words:
    try:
        wdict[word] += 1
    except KeyError:
        wdict[word] = 1

It's important to catch the expected KeyError exception, and not have a default except clause to avoid trying to recover from an exception you really can't handle by the statement(s) in the try clause.

A third alternative became available with the release of Python 2.x. Dictionaries now have a get() method which will return a default value if the desired key isn't found in the dictionary. This simplifies the loop:

wdict = {}

get = wdict.get

for word in words:
    wdict[word] = get(word, 0) + 1

When I originally wrote this section, there were clear situations where one of the first two approaches was faster. It seems that all three approaches now exhibit similar performance (within about 10% of each other), more or less independent of the properties of the list of words.
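The sketch below puts the three approaches in Python 3 side by side on a made-up sentence. For comparison, it adds collections.Counter (available since Python 2.7/3.1), a standard-library class that packages the same counting idea:

```python
from collections import Counter

words = "the cat and the hat and the bat".split()

def count_with_if(words):
    wdict = {}
    for word in words:
        if word not in wdict:
            wdict[word] = 0
        wdict[word] += 1
    return wdict

def count_with_try(words):
    wdict = {}
    for word in words:
        try:
            wdict[word] += 1
        except KeyError:          # catch only the expected exception
            wdict[word] = 1
    return wdict

def count_with_get(words):
    wdict = {}
    get = wdict.get
    for word in words:
        wdict[word] = get(word, 0) + 1
    return wdict

counted = Counter(words)

assert count_with_if(words) == count_with_try(words) == count_with_get(words) == counted
print(counted.most_common(2))  # [('the', 3), ('and', 2)]
```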

Also, if the value stored in the dictionary is an object or a (mutable) list, you could also use the dict.setdefault method, e.g.
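The text omits the example itself. Here is a minimal sketch of the usual idiom, grouping made-up words by their first letter into lists:

```python
words = ['apple', 'avocado', 'banana', 'blueberry', 'cherry']

groups = {}
for word in words:
    # setdefault returns the existing list for the key, or inserts
    # and returns a new empty list the first time the key is seen
    groups.setdefault(word[0], []).append(word)

print(groups)  # {'a': ['apple', 'avocado'], 'b': ['banana', 'blueberry'], 'c': ['cherry']}
```

Note that the empty list argument is built on every call, even when the key already exists. collections.defaultdict(list) is a common alternative that avoids this.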

You might think that this avoids having to look up the key twice. It actually doesn't (even in Python 3.0), but at least the double lookup is performed in C.

Import Statement Overhead

import statements can be executed just about anywhere. It's often useful to place them inside functions to restrict their visibility and/or reduce initial startup time. Although Python's interpreter is optimized to not import the same module multiple times, repeatedly executing an import statement can seriously affect performance in some circumstances.

Consider the following two snippets of code (originally from Greg McFarlane, I believe - I found it unattributed in a comp.lang.python python-list@python.org posting and later attributed to him in another source):

def doit1():
    import string ###### import statement inside function
    string.lower('Python')

for num in range(100000):
    doit1()

or:

import string ###### import statement outside function
def doit2():
    string.lower('Python')

for num in range(100000):
    doit2()

doit2 will run much faster than doit1, even though the reference to the string module is global in doit2. Here's a Python interpreter session run using Python 2.3 and the new timeit module, which shows how much faster the second is than the first:

>>> def doit1():
...     import string
...     string.lower('Python')
...
>>> import string
>>> def doit2():
...     string.lower('Python')
...

>>> import timeit

>>> t = timeit.Timer(setup='from __main__ import doit1', stmt='doit1()')

>>> t.timeit()

11.479144930839539

>>> t = timeit.Timer(setup='from __main__ import doit2', stmt='doit2()')

>>> t.timeit()

4.6661689281463623

String methods were introduced to the language in Python 2.0. These provide a version that avoids the import completely and runs even faster:

def doit3():
    'Python'.lower()

for num in range(100000):
    doit3()

Here's the proof from timeit:

>>> def doit3():
...     'Python'.lower()
...

>>> t = timeit.Timer(setup='from __main__ import doit3', stmt='doit3()')

>>> t.timeit()

2.5606080293655396

The above example is obviously a bit contrived, but the general principle holds.

Note that putting an import in a function can speed up the initial loading of the module, especially if the imported module might not be required. This is generally a case of a "lazy" optimization -- avoiding work (importing a module, which can be very expensive) until you are sure it is required.

This is only a significant saving in cases where the module wouldn't have been imported at all (from any module) -- if the module is already loaded (as will be the case for many standard modules, like string or re), avoiding an import doesn't save you anything. To see what modules are loaded in the system look in sys.modules.

A good way to do lazy imports is:

email = None

def parse_email():
    global email
    if email is None:
        import email
    ...

This way the email module will only be imported once, on the first invocation of parse_email().
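The same pattern in Python 3, with the standard json module standing in for email and a hypothetical function name parse_payload:

```python
json = None  # placeholder until the module is actually needed

def parse_payload(text):
    global json
    if json is None:
        import json  # binds the global name because of the global statement
    return json.loads(text)

print(parse_payload('{"port": 80}'))  # {'port': 80}
```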

Data Aggregation

Function call overhead in Python is relatively high, especially compared with the execution speed of a builtin function. This strongly suggests that where appropriate, functions should handle data aggregates. Here's a contrived example written in Python.

import time
x = 0
def doit1(i):
    global x
    x = x + i

list = range(100000)
t = time.time()
for i in list:
    doit1(i)

print "%.3f" % (time.time()-t)

vs.

import time
x = 0
def doit2(list):
    global x
    for i in list:
        x = x + i

list = range(100000)
t = time.time()
doit2(list)
print "%.3f" % (time.time()-t)

Here's the proof in the pudding using an interactive session:

>>> t = time.time()

>>> for i in list:
...     doit1(i)

...

>>> print "%.3f" % (time.time()-t)

0.758

>>> t = time.time()

>>> doit2(list)

>>> print "%.3f" % (time.time()-t)

0.204

Even written in Python, the second example runs about four times faster than the first. Had doit been written in C the difference would likely have been even greater (exchanging a Python for loop for a C for loop as well as removing most of the function calls).
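Here is a Python 3 sketch of the same comparison using timeit. The function names are hypothetical, and the built-in sum() is included as the fully C-level version. Expect the aggregate version to win, though the exact ratio depends on the interpreter:

```python
import timeit

data = list(range(100000))
total = 0

def add_one(i):
    # Called once per item: pays the function-call overhead 100000 times
    global total
    total = total + i

def add_all(items):
    # Called once for the whole list: the loop runs inside a single call
    global total
    for i in items:
        total = total + i

def run_per_item():
    global total
    total = 0
    for i in data:
        add_one(i)
    return total

def run_aggregate():
    global total
    total = 0
    add_all(data)
    return total

assert run_per_item() == run_aggregate() == sum(data)
print('per item : %.3f s' % timeit.timeit(run_per_item, number=10))
print('aggregate: %.3f s' % timeit.timeit(run_aggregate, number=10))
print('sum()    : %.3f s' % timeit.timeit(lambda: sum(data), number=10))
```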

Doing Stuff Less Often

The Python interpreter performs some periodic checks. In particular, it decides whether or not to let another thread run and whether or not to run a pending call (typically a call established by a signal handler). Most of the time there's nothing to do, so performing these checks each pass around the interpreter loop can slow things down. There is a function in the sys module, setcheckinterval, which you can call to tell the interpreter how often to perform these periodic checks. Prior to the release of Python 2.3 it defaulted to 10. In 2.3 this was raised to 100. If you aren't running with threads and you don't expect to be catching many signals, setting this to a larger value can improve the interpreter's performance, sometimes substantially. (In Python 3.2 and later, sys.setswitchinterval, which takes a time interval in seconds, replaces this mechanism; setcheckinterval was removed in Python 3.9.)

Python is not C

It is also not Perl, Java, C++ or Haskell. Be careful when transferring your knowledge of how other languages perform to Python. A simple example serves to demonstrate:

% timeit.py -s 'x = 47' 'x * 2'

1000000 loops, best of 3: 0.574 usec per loop

% timeit.py -s 'x = 47' 'x << 1'

1000000 loops, best of 3: 0.524 usec per loop

% timeit.py -s 'x = 47' 'x + x'

1000000 loops, best of 3: 0.382 usec per loop

Now consider the similar C programs (only the add version is shown):

#include <stdio.h>

int main (int argc, char *argv[]) {
    int i = 47;
    int loop;
    for (loop=0; loop<500000000; loop++)
        i + i;
    return 0;
}

and the execution times:

% for prog in mult add shift ; do

< for i in 1 2 3 ; do

< echo -n "$prog: "

< /usr/bin/time ./$prog

< done

< echo

< done

mult: 6.12 real 5.64 user 0.01 sys

mult: 6.08 real 5.50 user 0.04 sys

mult: 6.10 real 5.45 user 0.03 sys

add: 6.07 real 5.54 user 0.00 sys

add: 6.08 real 5.60 user 0.00 sys

add: 6.07 real 5.58 user 0.01 sys

shift: 6.09 real 5.55 user 0.01 sys

shift: 6.10 real 5.62 user 0.01 sys

shift: 6.06 real 5.50 user 0.01 sys

Note that there is a significant advantage in Python to adding a number to itself instead of multiplying it by two or shifting it left by one bit. In C on all modern computer architectures, each of the three arithmetic operations is translated into a single machine instruction which executes in one cycle, so it doesn't really matter which one you choose.
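The timeit.py script shown above is run as python -m timeit in current versions. The same measurement can also be made from code. The Python 3 sketch below prints timings without predicting them; the ordering can differ from the old figures, so measure rather than assume:

```python
import timeit

# With number=1000000, the total time in seconds equals microseconds per loop
for stmt in ('x * 2', 'x << 1', 'x + x'):
    t = timeit.timeit(stmt, setup='x = 47', number=1000000)
    print('%-7s %.3f usec per loop' % (stmt, t))
```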

A common "test" new Python programmers often perform is to translate the common Perl idiom

while (<>) {
    print;
}

into Python code that looks something like

import fileinput
for line in fileinput.input():
    print line,

and use it to conclude that Python must be much slower than Perl. As others have pointed out numerous times, Python is slower than Perl for some things and faster for others. Relative performance also often depends on your experience with the two languages.

Use xrange instead of range

This section no longer applies if you're using Python 3, where range now provides an iterator over ranges of arbitrary size, and where xrange no longer exists.

Python has two ways to get a range of numbers: range and xrange. Most people know about range, because of its obvious name. xrange, being way down near the end of the alphabet, is much less well-known.

xrange returns a lazy object that behaves much like a generator, basically equivalent to the following Python 2.3 code:

def xrange(start, stop=None, step=1):
    if stop is None:
        stop = start
        start = 0
    else:
        stop = int(stop)
    start = int(start)
    step = int(step)
    while start < stop:
        yield start
        start += step

Except that it is implemented in pure C.

xrange does have limitations. Specifically, it only works with ints; you cannot use longs or floats (they will be converted to ints, as shown above).

It does, however, save gobs of memory, and unless you store the yielded objects somewhere, only one yielded object will exist at a time. The difference is thus: When you call range, it creates a list containing that many number (int, long, or float) objects. All of those objects are created at once, and all of them exist at the same time. This can be a pain when the number of numbers is large.

xrange, on the other hand, creates no numbers immediately - only the range object itself. Number objects are created only when you pull on the generator, e.g. by looping through it. For example:

xrange(sys.maxint) # No loop, and no call to .next, so no numbers are instantiated

And for this reason, the code runs instantly. If you substitute range there, Python will lock up; it will be too busy allocating sys.maxint number objects (about 2.1 billion on the typical PC) to do anything else. Eventually, it will run out of memory and exit.

In Python versions before 2.2, xrange objects also supported optimizations such as fast membership testing (i in xrange(n)). These features were removed in 2.2 due to lack of use.
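In Python 3, range itself is lazy. It also supports constant-time membership tests for integers again, because it computes the answer arithmetically rather than scanning. Here is a short sketch:

```python
import sys

big = range(10 ** 9)              # no billion int objects are created
print(sys.getsizeof(big) < 1000)  # True: only start, stop and step are stored
print(10 ** 9 - 1 in big)         # True, answered without iterating
print(big[5], len(big))           # 5 1000000000
```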

Re-map Functions at runtime

Say you have a function

class Test:
    def check(self,a,b,c):
        if a == 0:
            self.str = b*100
        else:
            self.str = c*100

a = Test()
def example():
    for i in xrange(0,100000):
        a.check(i,"b","c")

import profile
profile.run("example()")

And suppose this function gets called from somewhere else many times.

Well, your check will have an if statement slowing you down all the time except the first time, so you can do this:

class Test2:
    def check(self,a,b,c):
        self.str = b*100
        self.check = self.check_post
    def check_post(self,a,b,c):
        self.str = c*100

a = Test2()
def example2():
    for i in xrange(0,100000):
        a.check(i,"b","c")

import profile
profile.run("example2()")

Well, this example is fairly inadequate, but if the 'if' statement is a pretty complicated expression (or something with lots of dots), you can save yourself evaluating it, if you know it will only be true the first time.
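Here is a Python 3 sketch that makes the rebinding visible. The assignment self.check = self.check_post stores a bound method in the instance's own dictionary. That instance attribute shadows the class method from then on:

```python
class Test2:
    def check(self, a, b, c):
        # First call: do the one-time work, then replace this method on
        # the instance so later calls skip straight to check_post
        self.str = b * 100
        self.check = self.check_post

    def check_post(self, a, b, c):
        self.str = c * 100

obj = Test2()
obj.check(0, "b", "c")
first = obj.str
obj.check(1, "b", "c")  # now dispatches to check_post via the instance attribute
second = obj.str

print(first == "b" * 100, second == "c" * 100)  # True True
```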

Profiling Code

The first step to speeding up your program is learning where the bottlenecks lie. It hardly makes sense to optimize code that is never executed or that already runs fast. I use two modules to help locate the hotspots in my code, profile and trace. In later examples I also use the timeit module, which is new in Python 2.3.

See the separate profiling document for alternatives to the approaches given below.


Profile Module

The profile module is included as a standard module in the Python distribution. Using it to profile the execution of a set of functions is quite easy. Suppose your main function is called main, takes no arguments and you want to execute it under the control of the profile module. In its simplest form you just execute

import profile

profile.run('main()')

When main() returns, the profile module will print a table of function calls and execution times. The output can be tweaked using the Stats class included with the module. In Python 2.4 profile will allow the time consumed by Python builtins and functions in extension modules to be profiled as well.
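In Python 3 the C-accelerated cProfile module is usually preferred, and pstats sorts and trims its report. The sketch below uses a hypothetical main() workload. It sends the report to a string so that it can be inspected:

```python
import cProfile
import io
import pstats

def main():
    # Hypothetical workload to profile
    return sum(i * i for i in range(200000))

profiler = cProfile.Profile()
profiler.enable()
main()
profiler.disable()

stream = io.StringIO()
stats = pstats.Stats(profiler, stream=stream)
stats.sort_stats('cumulative').print_stats(5)  # top 5 entries by cumulative time
report = stream.getvalue()
print(report)
```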

A slightly longer description of profiling using the profile and pstats modules can be found here (archived version):

Hotshot Module

New in Python 2.2, the hotshot package is intended as a replacement for the profile module. The underlying module is written in C, so using hotshot should result in a much smaller performance hit, and thus a more accurate idea of how your application is performing. There is also a hotshotmain.py program in the distribution's Tools/scripts directory which makes it easy to run your program under hotshot control from the command line.

Trace Module

The trace module is a spin-off of the profile module I wrote originally to perform some crude statement level test coverage. It's been heavily modified by several other people since I released my initial crude effort. As of Python 2.0 you should find trace.py in the Tools/scripts directory of the Python distribution. Starting with Python 2.3 it's in the standard library (the Lib directory). You can copy it to your local bin directory and set the execute permission, then execute it directly. It's easy to run from the command line to trace execution of whole scripts:

% trace.py -t spam.py eggs

In Python 2.4 it's even easier to run. Just execute python -m trace.

There's no separate documentation, but you can execute "pydoc trace" to view the inline documentation.

Visualizing Profiling Result

RunSnakeRun is a GUI tool by Mike Fletcher which visualizes profile dumps from cProfile using square maps. Function/method calls may be sorted according to various criteria, and source code may be displayed alongside the visualization and call statistics.

An example usage:

runsnake some_profile_dump.prof

Gprof2Dot is a python based tool that can transform profiling results output into a graph that can be converted into a PNG image or SVG.

A typical profiling session with Python 2.5 looks like this (on older platforms you will need to use the actual script instead of the -m option):

python -m cProfile -o stat.prof MYSCRIPT.PY [ARGS...]

python -m pbp.scripts.gprof2dot -f pstats -o stat.dot stat.prof

dot -ostat.png -Tpng stat.dot

PyCallGraph: pycallgraph is a Python module that creates call graphs for Python programs. It generates a PNG file showing a module's function calls and their links to other function calls, the number of times a function was called, and the time spent in that function.

Typical usage:

pycallgraph scriptname.