https://hub.docker.com/r/sequenceiq/hadoop-docker/tags

overview

# Apache Hadoop 2.7.0 Docker image

_Note: this is the master branch. For a particular Hadoop version, always check the related branch._

A few weeks ago we released an Apache Hadoop 2.3 Docker image, and it quickly became the most popular Hadoop image in the Docker registry.

Following the success of our previous Hadoop Docker images, and because the feedback and feature requests we received aligned with the Hadoop release cycle, we have released an Apache Hadoop 2.7.0 Docker image. Like the previous version, it is available as a trusted, automated build on the official Docker registry.

_FYI: all the former Hadoop releases (2.3, 2.4.0, 2.4.1, 2.5.0, 2.5.1, 2.5.2, 2.6.0) are available in the GitHub branches or in our Docker Registry; check the tags._

## Build the image

If you'd like to try it directly from the Dockerfile, you can build the image with:

```
docker build -t sequenceiq/hadoop-docker:2.7.0 .
```

## Pull the image

The image is also released as an official Docker image from Docker's automated build repository, so you can always pull it or refer to it when launching containers:

```
docker pull sequenceiq/hadoop-docker:2.7.0
```

## Start a container

To use the Docker image you have just built or pulled, start a container with the command below.

**Make sure that SELinux is disabled on the host. If you are using boot2docker, you don't need to do anything.**

```
docker run -it sequenceiq/hadoop-docker:2.7.0 /etc/bootstrap.sh -bash
```

## Testing

You can run one of the stock examples:

```
cd $HADOOP_PREFIX
# run the mapreduce
bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.0.jar grep input output 'dfs[a-z.]+'

# check the output
bin/hdfs dfs -cat output/*
```

## Hadoop native libraries, build, Bintray, etc.

Building Hadoop is no easy task: it requires lots of libraries in the right versions (protobuf, etc.) and takes some time. We have simplified all of this, made the build, and released a 64-bit version of the Hadoop native libraries in our `sequenceiq-bin` Bintray repository. Enjoy.
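The stock `grep` job in the Testing step counts how often each match of the regular expression `dfs[a-z.]+` occurs across the files in `input`, then writes `count<TAB>match` lines sorted by descending count. As a rough local cross-check, the bash sketch below computes the same kind of summary with plain `grep`, `sort`, `uniq`, and `awk` instead of MapReduce. The function name `local_grep_count` is hypothetical, and ties are ordered alphabetically here, which may differ from Hadoop's output.

```bash
#!/usr/bin/env bash
# Hypothetical helper: a local, non-MapReduce approximation of the stock
# "grep" example. It counts each regex match across all files under DIR and
# prints "<count><TAB><match>" lines, most frequent first.
set -euo pipefail

local_grep_count() {
  local dir=$1 regex=$2
  # -r: recurse into DIR, -o: print only the matched text,
  # -h: omit file names, -E: extended regular expressions.
  # "|| true" stops grep's non-zero exit status (1 when nothing matches)
  # from failing the whole pipeline under pipefail.
  { grep -rohE "$regex" "$dir" || true; } \
    | LC_ALL=C sort \
    | uniq -c \
    | awk '{ n = $1; sub(/^ *[0-9]+ /, ""); printf "%s\t%s\n", n, $0 }' \
    | LC_ALL=C sort -t $'\t' -k1,1nr -k2,2
}
```

Inside the container, `local_grep_count "$HADOOP_PREFIX/etc/hadoop" 'dfs[a-z.]+'` should give a summary comparable to `bin/hdfs dfs -cat output/*`, since the Dockerfile copies that directory into HDFS as `input`; counts may differ if those files have changed since they were copied.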
## Automate everything

As we have mentioned previously, a Dockerfile was created and released in the official Docker repository.

Owner: sequenceiq. Source repository: sequenceiq/hadoop-docker on GitHub.

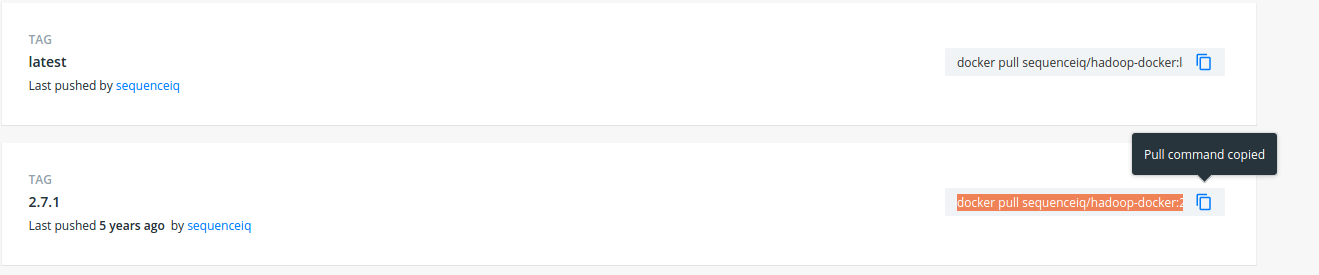

tags:

```
docker pull sequenceiq/hadoop-docker:2.7.1
```

Dockerfile

```dockerfile
# Creates pseudo distributed hadoop 2.7.0
#
# docker build -t sequenceiq/hadoop .

FROM sequenceiq/pam:centos-6.5
MAINTAINER SequenceIQ

USER root

# install dev tools
RUN yum clean all; \
    rpm --rebuilddb; \
    yum install -y curl which tar sudo openssh-server openssh-clients rsync
# update libselinux. see https://github.com/sequenceiq/hadoop-docker/issues/14
RUN yum update -y libselinux

# passwordless ssh
RUN ssh-keygen -q -N "" -t dsa -f /etc/ssh/ssh_host_dsa_key
RUN ssh-keygen -q -N "" -t rsa -f /etc/ssh/ssh_host_rsa_key
RUN ssh-keygen -q -N "" -t rsa -f /root/.ssh/id_rsa
RUN cp /root/.ssh/id_rsa.pub /root/.ssh/authorized_keys

# java
RUN curl -LO 'http://download.oracle.com/otn-pub/java/jdk/7u71-b14/jdk-7u71-linux-x64.rpm' -H 'Cookie: oraclelicense=accept-securebackup-cookie'
RUN rpm -i jdk-7u71-linux-x64.rpm
RUN rm jdk-7u71-linux-x64.rpm

ENV JAVA_HOME /usr/java/default
ENV PATH $PATH:$JAVA_HOME/bin

# download native support
RUN mkdir -p /tmp/native
RUN curl -Ls http://dl.bintray.com/sequenceiq/sequenceiq-bin/hadoop-native-64-2.7.0.tar | tar -x -C /tmp/native

# hadoop
RUN curl -s http://www.eu.apache.org/dist/hadoop/common/hadoop-2.7.0/hadoop-2.7.0.tar.gz | tar -xz -C /usr/local/
RUN cd /usr/local && ln -s ./hadoop-2.7.0 hadoop

ENV HADOOP_PREFIX /usr/local/hadoop
ENV HADOOP_COMMON_HOME /usr/local/hadoop
ENV HADOOP_HDFS_HOME /usr/local/hadoop
ENV HADOOP_MAPRED_HOME /usr/local/hadoop
ENV HADOOP_YARN_HOME /usr/local/hadoop
ENV HADOOP_CONF_DIR /usr/local/hadoop/etc/hadoop
ENV YARN_CONF_DIR $HADOOP_PREFIX/etc/hadoop

RUN sed -i '/^export JAVA_HOME/ s:.*:export JAVA_HOME=/usr/java/default\nexport HADOOP_PREFIX=/usr/local/hadoop\nexport HADOOP_HOME=/usr/local/hadoop\n:' $HADOOP_PREFIX/etc/hadoop/hadoop-env.sh
RUN sed -i '/^export HADOOP_CONF_DIR/ s:.*:export HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop/:' $HADOOP_PREFIX/etc/hadoop/hadoop-env.sh
#RUN . $HADOOP_PREFIX/etc/hadoop/hadoop-env.sh

RUN mkdir $HADOOP_PREFIX/input
RUN cp $HADOOP_PREFIX/etc/hadoop/*.xml $HADOOP_PREFIX/input

# pseudo distributed
ADD core-site.xml.template $HADOOP_PREFIX/etc/hadoop/core-site.xml.template
RUN sed s/HOSTNAME/localhost/ /usr/local/hadoop/etc/hadoop/core-site.xml.template > /usr/local/hadoop/etc/hadoop/core-site.xml
ADD hdfs-site.xml $HADOOP_PREFIX/etc/hadoop/hdfs-site.xml
ADD mapred-site.xml $HADOOP_PREFIX/etc/hadoop/mapred-site.xml
ADD yarn-site.xml $HADOOP_PREFIX/etc/hadoop/yarn-site.xml

RUN $HADOOP_PREFIX/bin/hdfs namenode -format

# fixing the libhadoop.so like a boss
RUN rm -rf /usr/local/hadoop/lib/native
RUN mv /tmp/native /usr/local/hadoop/lib

ADD ssh_config /root/.ssh/config
RUN chmod 600 /root/.ssh/config
RUN chown root:root /root/.ssh/config

# # installing supervisord
# RUN yum install -y python-setuptools
# RUN easy_install pip
# RUN curl https://bitbucket.org/pypa/setuptools/raw/bootstrap/ez_setup.py -o - | python
# RUN pip install supervisor
#
# ADD supervisord.conf /etc/supervisord.conf

ADD bootstrap.sh /etc/bootstrap.sh
RUN chown root:root /etc/bootstrap.sh
RUN chmod 700 /etc/bootstrap.sh

ENV BOOTSTRAP /etc/bootstrap.sh

# working around docker.io build error
RUN ls -la /usr/local/hadoop/etc/hadoop/*-env.sh
RUN chmod +x /usr/local/hadoop/etc/hadoop/*-env.sh
RUN ls -la /usr/local/hadoop/etc/hadoop/*-env.sh

# fix the 254 error code
RUN sed -i "/^[^#]*UsePAM/ s/.*/#&/" /etc/ssh/sshd_config
RUN echo "UsePAM no" >> /etc/ssh/sshd_config
RUN echo "Port 2122" >> /etc/ssh/sshd_config

RUN service sshd start && $HADOOP_PREFIX/etc/hadoop/hadoop-env.sh && $HADOOP_PREFIX/sbin/start-dfs.sh && $HADOOP_PREFIX/bin/hdfs dfs -mkdir -p /user/root
RUN service sshd start && $HADOOP_PREFIX/etc/hadoop/hadoop-env.sh && $HADOOP_PREFIX/sbin/start-dfs.sh && $HADOOP_PREFIX/bin/hdfs dfs -put $HADOOP_PREFIX/etc/hadoop/ input

CMD ["/etc/bootstrap.sh", "-d"]

# Hdfs ports
EXPOSE 50010 50020 50070 50075 50090
# Mapred ports
EXPOSE 19888
#Yarn ports
EXPOSE 8030 8031 8032 8033 8040 8042 8088
#Other ports
EXPOSE 49707 2122
```
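Two steps in the Dockerfile rewrite configuration files with `sed`: the "pseudo distributed" step fills in the `core-site.xml` template, and the "fix the 254 error code" step disables PAM in `sshd_config` and moves sshd to port 2122. The bash sketch below replays those same sed expressions on local sample files, so their effect can be checked without building the image. The function names `render_core_site` and `patch_sshd_config` are hypothetical, and so is the sample template content (the real `core-site.xml.template` is not shown here).

```bash
#!/usr/bin/env bash
# Sketch: replay two sed-based edits from the Dockerfile on local files.
# Function names are hypothetical; the sed expressions are copied from
# the "pseudo distributed" and "fix the 254 error code" steps.
set -euo pipefail

# Like the core-site.xml step: replace the first "HOSTNAME" on each line
# of the template with HOST (the Dockerfile passes localhost).
render_core_site() {
  local template=$1 host=$2
  sed "s/HOSTNAME/${host}/" "$template"
}

# Like the "fix the 254 error code" step: comment out every line where
# "UsePAM" appears before any '#', then append "UsePAM no" and "Port 2122".
patch_sshd_config() {
  local cfg=$1 tmp
  tmp=$(mktemp)
  # Write to a temp file instead of using "sed -i": the in-place flag's
  # syntax differs between GNU sed (used in the image) and BSD sed.
  sed "/^[^#]*UsePAM/ s/.*/#&/" "$cfg" > "$tmp"
  cat "$tmp" > "$cfg"
  rm -f "$tmp"
  echo "UsePAM no" >> "$cfg"
  echo "Port 2122" >> "$cfg"
}
```

For example, given a hypothetical template line `<value>hdfs://HOSTNAME:9000</value>`, `render_core_site core-site.xml.template localhost` prints `<value>hdfs://localhost:9000</value>`. Already-commented lines such as `#UsePAM no` are left untouched by `patch_sshd_config`, because `[^#]*` cannot skip past a leading `#`.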