昨晚,有了试试爬虫项目的想法。

总感觉光学基础语法知识不实战,有点纸上谈兵。既然想到了,那么就说干就干,在网上找了一些爬虫的资料,仔细阅读一番,算是做了初步了解。现在及时把这些想法记录下来,以备日后完善。

所谓爬虫,简单的说,就是利用计算机程序代替人,自动获取想要的互联网信息,并保存下来。简言之,就是网络机器人。

今天整理的是一个简易爬取拉钩网职位信息的程序。也就模仿书上的程序,自己做了一点总结,估计连入门都还不算,任重道远啊。

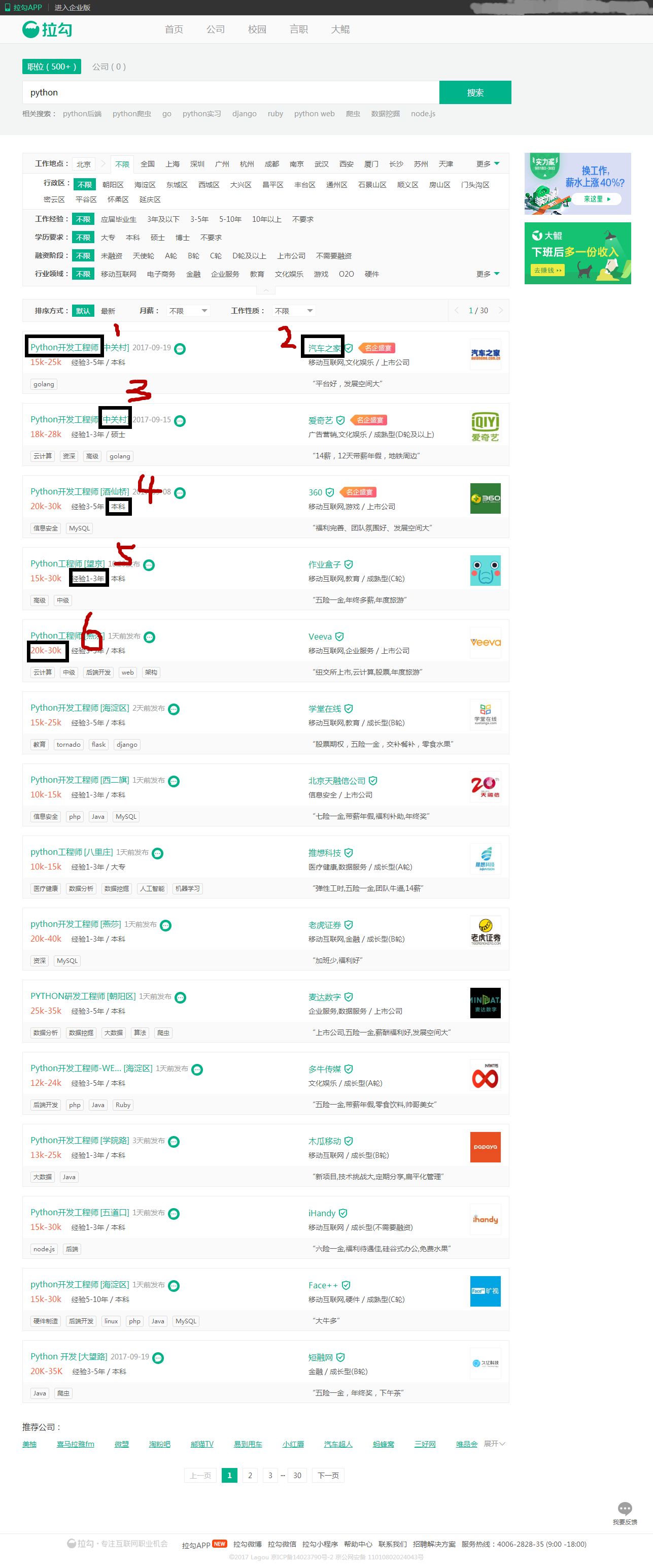

以下是拉钩网Python职位信息截图:

从拉钩网网站截图可以看出,Python职位信息一共有30页,那么我们就将这30页中标注了的数据将其保存至EXCEL中,以便我们对比,进行数据分析。

以下是程序:

# Author: Lucas w #Python3.4 import requests import xlwt headers = { "User-Agent":"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36",#访问终端信息 "Referer":"https://www.lagou.com/jobs/list_python?city=%E5%8C%97%E4%BA%AC&cl=false&fromSearch=true&labelWords=&suginput=",#从哪个页面跳转过去 "Cookie":"_gat=1; user_trace_token=20170923145813-910b5327-a02c-11e7-9257-5254005c3644; PRE_UTM=m_cf_cpt_baidu_pc; PRE_HOST=bzclk.baidu.com; PRE_SITE=http%3A%2F%2Fbzclk.baidu.com%2Fadrc.php%3Ft%3D06KL00c00f7Ghk60t8km0FNkUs0KK_uu00000aFBTNb00000LJ63sM.THL0oUh11x60UWdBmy-bIfK15yRsuyn1P1F9nj0sPW6vPWR0IHY4wHD3wbRkfYc3nHDYPWNjPYm1PRR4PRfLnHnYnH-jf6K95gTqFhdWpyfqn10YP1c3rjT4PiusThqbpyfqnHm0uHdCIZwsT1CEQLILIz4_myIEIi4WUvYE5LNYUNq1ULNzmvRqUNqWu-qWTZwxmh7GuZNxTAn0mLFW5HmYPjDz%26tpl%3Dtpl_10085_15730_11224%26l%3D1500602914%26attach%3Dlocation%253D%2526linkName%253D%2525E6%2525A0%252587%2525E9%2525A2%252598%2526linkText%253D%2525E3%252580%252590%2525E6%25258B%252589%2525E5%25258B%2525BE%2525E7%2525BD%252591%2525E3%252580%252591%2525E5%2525AE%252598%2525E7%2525BD%252591-%2525E4%2525B8%252593%2525E6%2525B3%2525A8%2525E4%2525BA%252592%2525E8%252581%252594%2525E7%2525BD%252591%2525E8%252581%25258C%2525E4%2525B8%25259A%2525E6%25259C%2525BA%2526xp%253Did%28%252522m26faf71c%252522%29%25252FDIV%25255B1%25255D%25252FDIV%25255B1%25255D%25252FDIV%25255B1%25255D%25252FDIV%25255B1%25255D%25252FH2%25255B1%25255D%25252FA%25255B1%25255D%2526linkType%253D%2526checksum%253D139%26ie%3Dutf-8%26f%3D3%26tn%3Dbaidu%26wd%3D%25E6%258B%2589%25E9%2592%25A9%25E7%25BD%2591%26rqlang%3Dcn%26inputT%3D8920%26prefixsug%3D%2525E6%25258B%252589%2525E9%252592%2525A9%2525E7%2525BD%252591%26rsp%3D0; PRE_LAND=https%3A%2F%2Fwww.lagou.com%2F%3Futm_source%3Dm_cf_cpt_baidu_pc; LGUID=20170923145813-910b5b3d-a02c-11e7-9257-5254005c3644; JSESSIONID=ABAAABAACDBAAIA11C89460F812ACB58846CB390ADC7396; _putrc=AAD00F92B2209827; login=true; unick=%E7%8E%8B%E7%90%9B; showExpriedIndex=1; showExpriedCompanyHome=1; showExpriedMyPublish=1; hasDeliver=0; TG-TRACK-CODE=index_search; _ga=GA1.2.1599245716.1506149896; _gid=GA1.2.950954961.1506149896; Hm_lvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1506149897; Hm_lpvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1506150019; LGSID=20170923145813-910b586a-a02c-11e7-9257-5254005c3644; LGRID=20170923150015-da0be6de-a02c-11e7-9257-5254005c3644; SEARCH_ID=8e1052bf383f45b5a37cc5c103f4b78c; index_location_city=%E5%8C%97%E4%BA%AC"#cookie } def getjoblist(page): data={ "first": "false", "pn": page, "kd": "python" }#数据信息 page代表页数 res = requests.post("https://www.lagou.com/jobs/positionAjax.json?city=%E5%8C%97%E4%BA%AC&needAddtionalResult=false&isSchoolJob=0",headers=headers,data=data) result = res.json() jobs=result["content"]["positionResult"]["result"] return jobs w=xlwt.Workbook() sheet1=w.add_sheet("sheet1",cell_overwrite_ok=True) n=0 for page in range(1,31): for job in getjoblist(page=page): sheet1.write(n, 0, job["positionName"]) sheet1.write(n, 1, job["companyFullName"]) sheet1.write(n, 2, job["businessZones"]) sheet1.write(n, 3, job["education"]) sheet1.write(n, 4, job["workYear"]) sheet1.write(n, 5, job["salary"]) n+=1 w.save("job.xls")

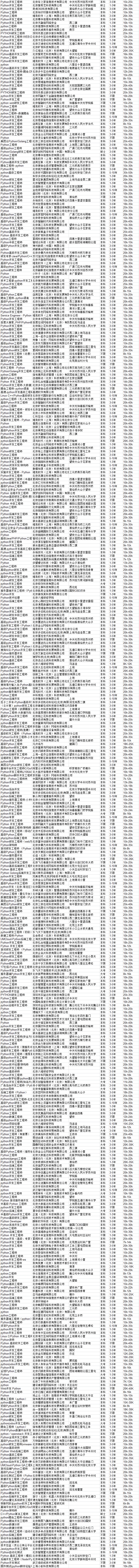

程序运行正常,生成的excel表如下:

我们成功获取到了我们想要的数据,从这些数据中可以进行一个直观的分析。瞬间感觉高大上,这也算我第一个爬虫程序。

说了这么多,总结一下:小有成就感,但是。。。。。。。。

需要学习的东西太多了,全靠自己慢慢琢磨。还是那句话,每天进步一点点。

好了,滚去学习了。