I. Preparation

1. File layout and components

```
[root@master heapster-influxdb]# ll
total 20
-rw-r--r-- 1 root root  414 May 13 16:35 grafana-service.yaml
-rw-r--r-- 1 root root  694 May 21 12:14 heapster-controller.yaml
-rw-r--r-- 1 root root  249 May 13 16:36 heapster-service.yaml
-rw-r--r-- 1 root root 1627 May 13 17:19 influxdb-grafana-controller.yaml
-rw-r--r-- 1 root root  259 May 13 16:37 influxdb-service.yaml
```

2. File contents

grafana-service.yaml

```
[root@master heapster-influxdb]# cat grafana-service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: kube-system
spec:
  # In a production setup, we recommend accessing Grafana through an external Loadbalancer
  # or through a public IP.
  # type: LoadBalancer
  ports:
  - port: 80
    targetPort: 3000
  selector:
    name: influxGrafana
```

heapster-controller.yaml

```
[root@master heapster-influxdb]# cat heapster-controller.yaml
apiVersion: v1
kind: ReplicationController
metadata:
  labels:
    k8s-app: heapster
    name: heapster
    version: v6
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  selector:
    k8s-app: heapster
    version: v6
  template:
    metadata:
      labels:
        k8s-app: heapster
        version: v6
    spec:
      nodeSelector:
        kubernetes.io/hostname: master
      containers:
      - name: heapster
        image: 192.168.118.18:5000/heapster:canary
        imagePullPolicy: IfNotPresent
        command:
        - /heapster
        - --source=kubernetes:http://192.168.118.18:8080?inClusterConfig=false
        - --sink=influxdb:http://monitoring-influxdb:8086
```
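Both Heapster flags follow a `<type>:<url>?<options>` pattern. The source is the Kubernetes API server, reached over plain HTTP at 192.168.118.18:8080. Its option `inClusterConfig=false` tells Heapster's Kubernetes source to use that URL instead of the pod's in-cluster service-account configuration, which is the default. The sink is the `monitoring-influxdb` Service. Its short name resolves through cluster DNS because Heapster runs in the same `kube-system` namespace. The minimal Python sketch below splits such a value into its parts. `parse_heapster_flag` is a hypothetical helper for illustration and is not part of Heapster.

```python
from urllib.parse import parse_qs, urlsplit


def parse_heapster_flag(value):
    """Split a '<type>:<url>?<options>' value into (type, endpoint, options).

    Hypothetical helper for illustration; Heapster does its own parsing.
    """
    # Only the first ':' separates the type from the URL ("kubernetes:http://...").
    kind, _, url = value.partition(":")
    parts = urlsplit(url)
    # parse_qs returns lists; keep the first value of each option.
    options = {key: values[0] for key, values in parse_qs(parts.query).items()}
    endpoint = f"{parts.scheme}://{parts.netloc}"
    return kind, endpoint, options


source = "kubernetes:http://192.168.118.18:8080?inClusterConfig=false"
sink = "influxdb:http://monitoring-influxdb:8086"

print(parse_heapster_flag(source))
print(parse_heapster_flag(sink))
```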

heapster-service.yaml

```
[root@master heapster-influxdb]# cat heapster-service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster
```

influxdb-grafana-controller.yaml

```
[root@master heapster-influxdb]# cat influxdb-grafana-controller.yaml
apiVersion: v1
kind: ReplicationController
metadata:
  labels:
    name: influxGrafana
  name: influxdb-grafana
  namespace: kube-system
spec:
  replicas: 1
  selector:
    name: influxGrafana
  template:
    metadata:
      labels:
        name: influxGrafana
    spec:
      containers:
      - name: influxdb
        image: 192.168.118.18:5000/heapster_influxdb:v0.5
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - mountPath: /data
          name: influxdb-storage
      - name: grafana
        imagePullPolicy: IfNotPresent
        image: 192.168.118.18:5000/heapster_grafana:v2.6.0
        env:
        - name: INFLUXDB_SERVICE_URL
          value: http://monitoring-influxdb:8086
          # The following env variables are required to make Grafana accessible via
          # the kubernetes api-server proxy. On production clusters, we recommend
          # removing these env variables, setup auth for grafana, and expose the grafana
          # service using a LoadBalancer or a public IP.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_SERVER_ROOT_URL
          value: /api/v1/proxy/namespaces/kube-system/services/monitoring-grafana/
        volumeMounts:
        - mountPath: /var
          name: grafana-storage
      nodeSelector:
        kubernetes.io/hostname: 192.168.118.18
      volumes:
      - name: influxdb-storage
        emptyDir: {}
      - name: grafana-storage
        emptyDir: {}
```
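`GF_SERVER_ROOT_URL` tells Grafana that it is served under the API server's proxy path for the `monitoring-grafana` Service. The sketch below assumes that the insecure API server endpoint `http://192.168.118.18:8080` from Heapster's `--source` flag is reachable from your browser. Under that assumption, Grafana's full URL is that endpoint followed by this path. `apiserver_proxy_url` is a hypothetical helper that assembles the URL. This `/api/v1/proxy/...` form belongs to older Kubernetes releases; later releases use `/api/v1/namespaces/<ns>/services/<svc>/proxy/` instead.

```python
# Path given to Grafana in influxdb-grafana-controller.yaml.
ROOT_URL = "/api/v1/proxy/namespaces/kube-system/services/monitoring-grafana/"


def apiserver_proxy_url(apiserver, namespace, service):
    """Build the legacy API-server proxy URL for a Service (hypothetical helper)."""
    return f"{apiserver.rstrip('/')}/api/v1/proxy/namespaces/{namespace}/services/{service}/"


# Assumed API server address, taken from Heapster's --source flag.
url = apiserver_proxy_url("http://192.168.118.18:8080", "kube-system", "monitoring-grafana")
print(url)  # http://192.168.118.18:8080/api/v1/proxy/namespaces/kube-system/services/monitoring-grafana/
```

If the built URL does not end with `ROOT_URL`, Grafana generates links under the wrong prefix. That mismatch is the usual symptom of a root URL that does not match the proxy path.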

influxdb-service.yaml

```
[root@master heapster-influxdb]# cat influxdb-service.yaml
apiVersion: v1
kind: Service
metadata:
  labels: null
  name: monitoring-influxdb
  namespace: kube-system
spec:
  ports:
  - name: http
    port: 8083
    targetPort: 8083
  - name: api
    port: 8086
    targetPort: 8086
  selector:
    name: influxGrafana
```

Images in the registry

```
[root@master dashboard]# docker images
REPOSITORY                              TAG      IMAGE ID       CREATED       SIZE
192.168.118.18:5000/heapster_influxdb   v0.5     a47993810aac   4 years ago   251 MB
192.168.118.18:5000/heapster_grafana    v2.6.0   4fe73eb13e50   4 years ago   267 MB
192.168.118.18:5000/heapster            canary   0a56f7040da5   3 years ago   971 MB
```

II. Walkthrough

1. Deploy and verify

Create the resources (the Services and ReplicationControllers for Heapster, InfluxDB and Grafana):

```
[root@master pod]# kubectl create -f heapster-influxdb/
service "monitoring-grafana" created
replicationcontroller "heapster" created
service "heapster" created
replicationcontroller "influxdb-grafana" created
service "monitoring-influxdb" created
```

Check:

```
[root@master ~]# kubectl get pod -o wide --all-namespaces | grep kube-system
kube-system   heapster-hrfmb                                 1/1   Running   0   5s   172.16.56.3   192.168.118.18
kube-system   influxdb-grafana-0lj0n                         2/2   Running   0   4s   172.16.56.4   192.168.118.18
kube-system   kube-dns-1835838994-jm5bk                      4/4   Running   0   6h   172.16.99.3   192.168.118.20
kube-system   kubernetes-dashboard-latest-2728556226-fc2pc   1/1   Running   0   5h   172.16.60.6   192.168.118.19
```
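Reading the READY and STATUS columns by eye works for a handful of pods. The minimal Python sketch below assumes the column layouts shown in this post. The first is NAMESPACE NAME READY STATUS ... from `--all-namespaces`, and the second is NAME READY STATUS ... from `-n kube-system`. The sketch flags any pod that is not `Running` or whose ready count is below its container count. `not_ready` is a hypothetical helper and is not part of kubectl.

```python
def not_ready(output, with_namespace=True):
    """Return names of pods that are not Running or not fully ready.

    with_namespace=True expects NAMESPACE NAME READY STATUS ... columns
    (kubectl get pod --all-namespaces); False expects NAME READY STATUS ...
    """
    offset = 1 if with_namespace else 0
    problems = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) < offset + 3:
            continue  # blank or truncated line
        name, ready, status = fields[offset:offset + 3]
        if "/" not in ready:
            continue  # header line ("READY")
        ready_count, total = ready.split("/")
        if status != "Running" or ready_count != total:
            problems.append(name)
    return problems


healthy = """\
kube-system   heapster-hrfmb                                 1/1   Running   0   5s   172.16.56.3   192.168.118.18
kube-system   influxdb-grafana-0lj0n                         2/2   Running   0   4s   172.16.56.4   192.168.118.18
kube-system   kube-dns-1835838994-jm5bk                      4/4   Running   0   6h   172.16.99.3   192.168.118.20
kube-system   kubernetes-dashboard-latest-2728556226-fc2pc   1/1   Running   0   5h   172.16.60.6   192.168.118.19
"""

# Output of `kubectl get pod -n kube-system` from the failed attempt (no NAMESPACE column).
pending = """\
NAME                                           READY   STATUS    RESTARTS   AGE
heapster-2l264                                 0/1     Pending   0          1m
influxdb-grafana-j602n                         0/2     Pending   0          1m
kube-dns-1835838994-jm5bk                      4/4     Running   0          4h
kubernetes-dashboard-latest-2728556226-fc2pc   1/1     Running   0          2h
"""

print(not_ready(healthy))                        # []
print(not_ready(pending, with_namespace=False))  # ['heapster-2l264', 'influxdb-grafana-j602n']
```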

2. Pitfalls

Checking status: the Services exist, but the new pods stay in `Pending`:

```
[root@master pod]# kubectl get svc --namespace=kube-system
NAME                   CLUSTER-IP       EXTERNAL-IP   PORT(S)             AGE
heapster               10.254.31.250    <none>        80/TCP              1m
kube-dns               10.254.230.254   <none>        53/UDP,53/TCP       4h
kubernetes-dashboard   10.254.245.38    <none>        80/TCP              3h
monitoring-grafana     10.254.153.72    <none>        80/TCP              1m
monitoring-influxdb    10.254.137.174   <none>        8083/TCP,8086/TCP   1m
[root@master pod]# kubectl get pod -n kube-system
NAME                                           READY   STATUS    RESTARTS   AGE
heapster-2l264                                 0/1     Pending   0          1m
influxdb-grafana-j602n                         0/2     Pending   0          1m
kube-dns-1835838994-jm5bk                      4/4     Running   0          4h
kubernetes-dashboard-latest-2728556226-fc2pc   1/1     Running   0          2h
```

Error message

```
[root@master pod]# kubectl describe pods heapster-lq553 --namespace=kube-system
Name:           heapster-lq553
......
Events:
  FirstSeen  LastSeen  Count  From                  SubObjectPath  Type     Reason            Message
  ---------  --------  -----  ----                  -------------  -------- ------            -------
  26m        23s       92     {default-scheduler }                 Warning  FailedScheduling  pod (heapster-lq553) failed to fit in any node
fit failure summary on nodes : MatchNodeSelector (2)
```

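Fix

`MatchNodeSelector` means that no node has a `kubernetes.io/hostname` label equal to the value in the pod's `nodeSelector`. Set the value to the hostname label of one of your own nodes:

```
spec:
  nodeSelector:
    kubernetes.io/hostname: master  # must exactly match your own node's hostname label
```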

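3. nodeSelector

Labels are a central concept in Kubernetes. They act as identifiers: Services and Deployments are associated with their Pods through labels. Every node carries labels as well, and label-based scheduling rules let you place pods on the nodes whose labels match.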
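The label association can be checked against the manifests in this post. The minimal Python sketch below copies the pod-template labels and selectors from those YAML files. It applies equality-based matching, under which an object is selected when its labels contain every `key: value` pair of the selector. It shows that each ReplicationController's selector matches its own template, as Kubernetes requires, and which pods each Service routes to. `monitoring-grafana` and `monitoring-influxdb` select the same two-container pod. Both containers share the pod's network namespace, so each Service's `targetPort` decides which container receives the traffic: 3000 for Grafana, 8083/8086 for InfluxDB. The helper names are hypothetical.

```python
# Pod template labels, copied from the two ReplicationController manifests.
pod_templates = {
    "heapster": {"k8s-app": "heapster", "version": "v6"},
    "influxdb-grafana": {"name": "influxGrafana"},
}

# spec.selector of each ReplicationController.
rc_selectors = {
    "heapster": {"k8s-app": "heapster", "version": "v6"},
    "influxdb-grafana": {"name": "influxGrafana"},
}

# spec.selector of each Service.
service_selectors = {
    "heapster": {"k8s-app": "heapster"},
    "monitoring-grafana": {"name": "influxGrafana"},
    "monitoring-influxdb": {"name": "influxGrafana"},
}


def matches(selector, labels):
    """Equality-based selector: every key=value pair must be present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def backends(selector):
    """Names of the pod templates a selector picks out."""
    return sorted(name for name, labels in pod_templates.items() if matches(selector, labels))


for rc, selector in rc_selectors.items():
    print(rc, "selector matches its template:", matches(selector, pod_templates[rc]))

for service, selector in service_selectors.items():
    print(service, "->", backends(selector))
# heapster -> ['heapster']
# monitoring-grafana -> ['influxdb-grafana']
# monitoring-influxdb -> ['influxdb-grafana']
```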

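nodeSelector is the simplest form of scheduling constraint; it is a field of PodSpec. The `--show-labels` flag shows the labels currently set on the nodes (`kubectl get nodes --show-labels`).

```
ctl get all
......
NAME             READY   STATUS    RESTARTS   AGE
po/mysql-3qkf1   1/1     Running   0          15h
po/myweb-z2g3m   1/1     Running   0          15h
```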
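The check that the scheduler reported as `MatchNodeSelector` is a subset test. A node passes if its labels contain every `key: value` pair of the pod's `nodeSelector`. The Python sketch below models that one predicate only; the real scheduler also applies others, such as resource fit. The node names come from the NODE column of the pod listing above. Their `kubernetes.io/hostname` values are a hypothetical assumption, so read the real ones with `kubectl get nodes --show-labels`. With these assumed labels, `hostname: master` matches no node, which reproduces the failure above, while `192.168.118.18` matches exactly one node.

```python
# Hypothetical node labels; check the real ones with
# `kubectl get nodes --show-labels`.
nodes = {
    "192.168.118.18": {"kubernetes.io/hostname": "192.168.118.18"},
    "192.168.118.19": {"kubernetes.io/hostname": "192.168.118.19"},
    "192.168.118.20": {"kubernetes.io/hostname": "192.168.118.20"},
}


def fits(node_selector, node_labels):
    """A node fits when it carries every key=value pair of the nodeSelector."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


def candidate_nodes(node_selector):
    """Names of nodes that pass the nodeSelector check."""
    return sorted(name for name, labels in nodes.items() if fits(node_selector, labels))


print(candidate_nodes({"kubernetes.io/hostname": "master"}))          # []
print(candidate_nodes({"kubernetes.io/hostname": "192.168.118.18"}))  # ['192.168.118.18']
```

An empty `nodeSelector` matches every node, so removing the field entirely also lets the pod schedule anywhere.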

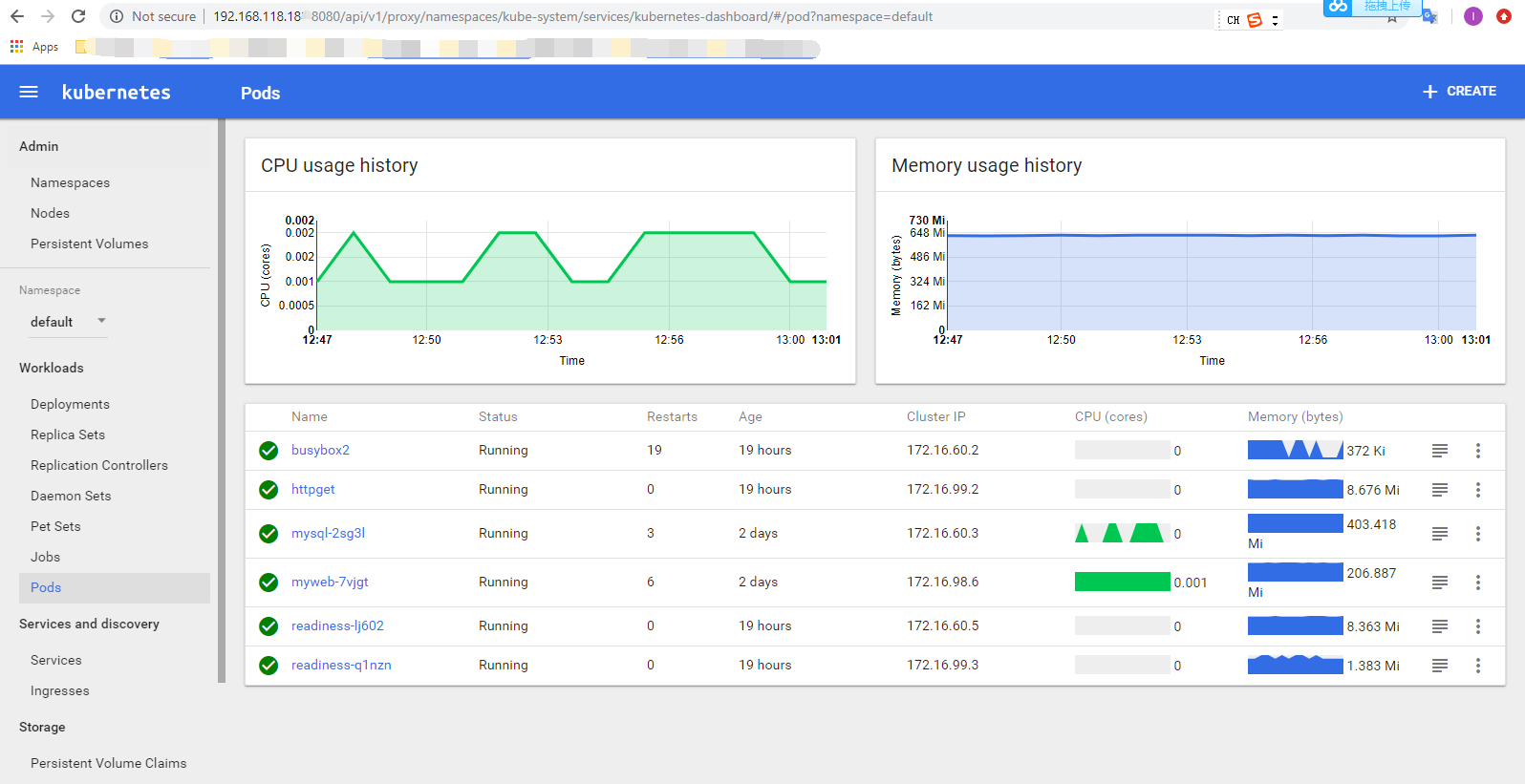

III. Testing

IV. Where does the monitoring data come from?

1. The source components, as shown in the architecture diagram

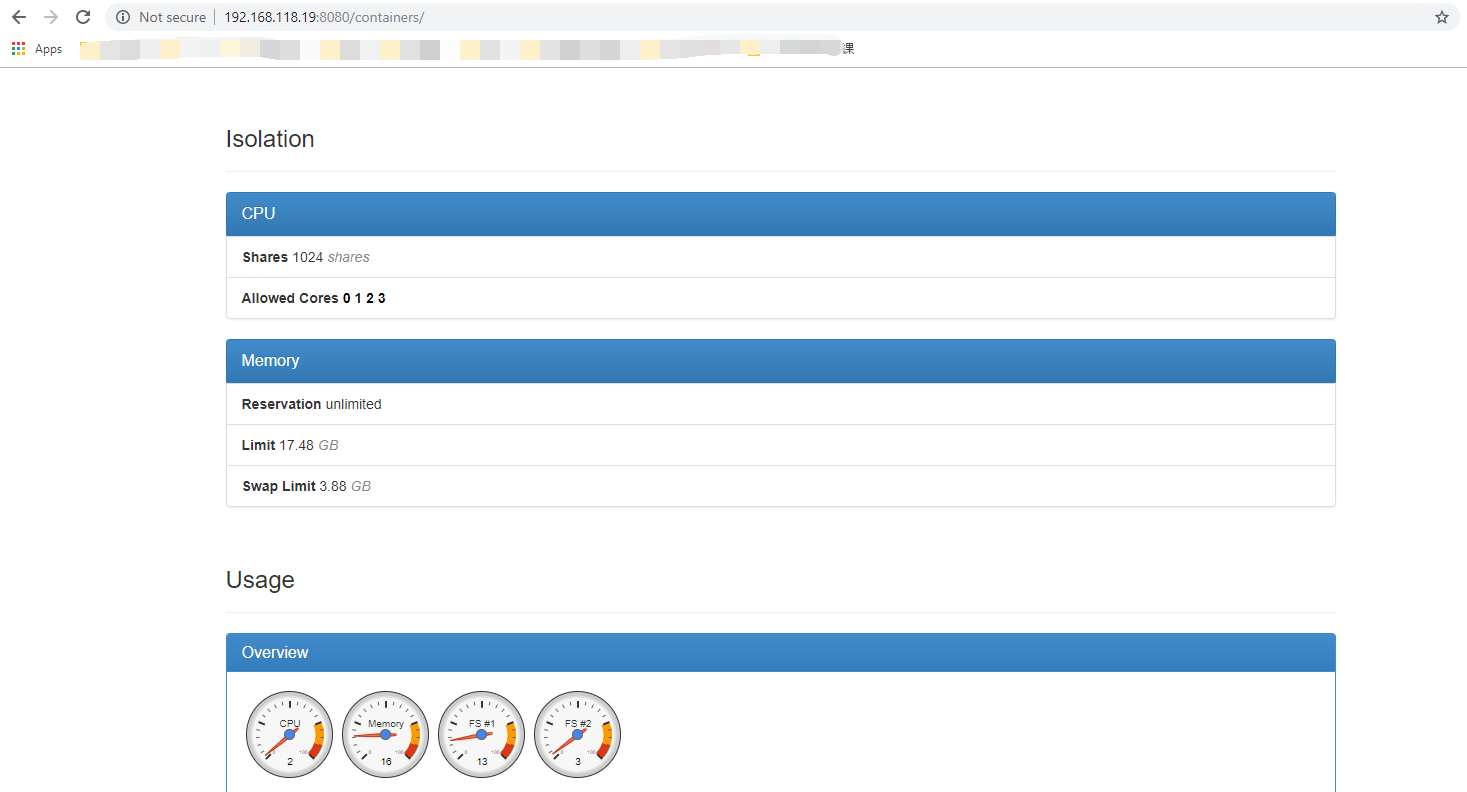

2. Enable cAdvisor in /etc/kubernetes/kubelet

Edit the file with `vim /etc/kubernetes/kubelet` and change line 21 as follows, adding `--cadvisor-port=8080`:

```
KUBELET_ARGS="--cluster_dns=10.254.230.254 --cluster_domain=cluster.local --cadvisor-port=8080"
```

Restart the services:

```
systemctl restart kubelet.service
systemctl restart kube-proxy.service
```
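The edit to line 21 can also be scripted. The minimal Python sketch below assumes the file holds a single-line `KUBELET_ARGS="..."` entry with space-separated `--flag=value` arguments, as shown above. `set_kubelet_flag` is a hypothetical helper that appends `--cadvisor-port=8080` or replaces an existing value, so running it twice is harmless. The "before" line in the example is also hypothetical, because the post shows only the edited result.

```python
import re


def set_kubelet_flag(line, flag, value):
    """Add or replace --<flag>=<value> in a KUBELET_ARGS="..." line.

    Hypothetical helper; only the --flag=value spelling is handled,
    not "--flag value".
    """
    match = re.fullmatch(r'KUBELET_ARGS="(.*)"', line.strip())
    if match is None:
        raise ValueError("not a KUBELET_ARGS line: " + line)
    # Drop any existing occurrence of the flag, then append the new value.
    args = [arg for arg in match.group(1).split() if not arg.startswith(f"--{flag}=")]
    args.append(f"--{flag}={value}")
    return 'KUBELET_ARGS="' + " ".join(args) + '"'


# Hypothetical "before" line: the DNS flags without the cAdvisor port.
before = 'KUBELET_ARGS="--cluster_dns=10.254.230.254 --cluster_domain=cluster.local"'
after = set_kubelet_flag(before, "cadvisor-port", "8080")
print(after)
```

After the file is rewritten, kubelet still has to be restarted with the `systemctl` commands above for the new flag to take effect.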

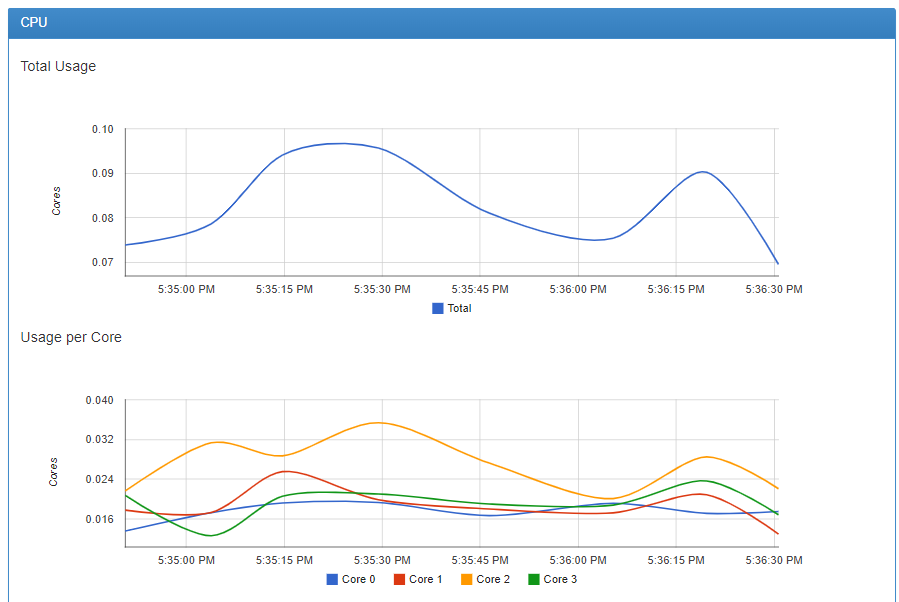

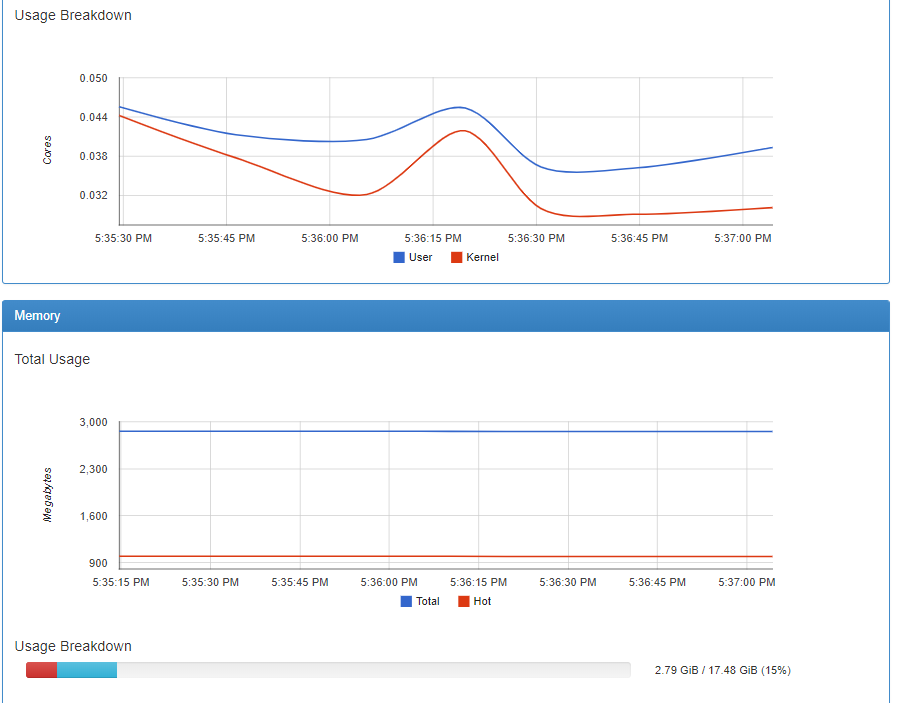

3. Web UI screenshots

Enter the following address in the browser:

http://192.168.118.19:8080/containers/

Overview

CPU

Memory

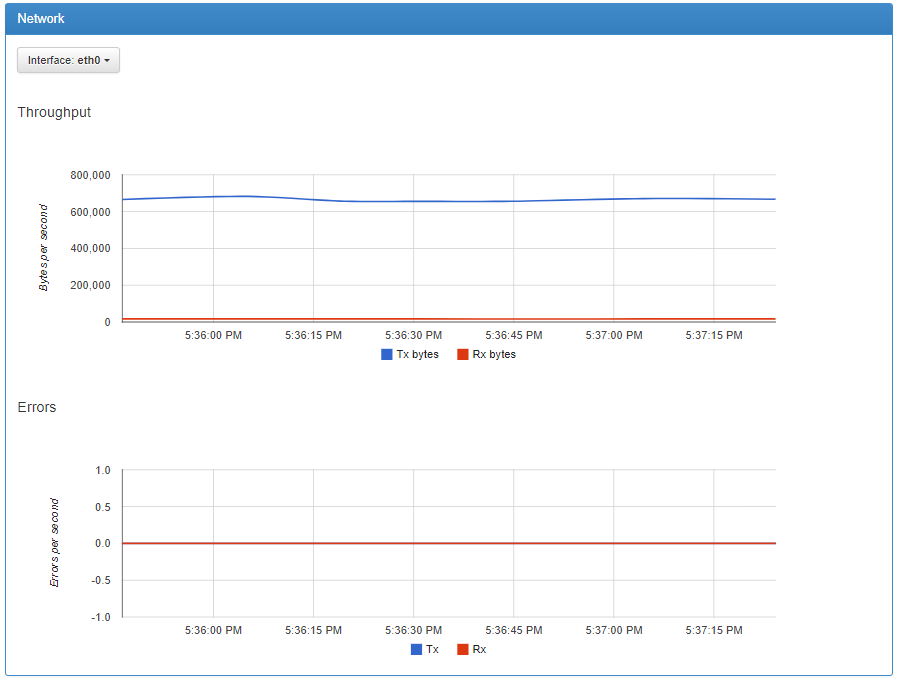

Network

Disk