PAXOS Implementation: libevent_paxos

This article is part of a project. It mainly covers the implementation of the PAXOS algorithm.

Qiushan, Shandong University

Part I Start

Part II PAXOS

Part III Architecture

Part IV Performance

Part V Skills

Part I Generated on November 15, 2014 (Start)

libevent_paxos ensures that all replicas of an application (e.g., Apache) receive a consistent total order of requests (i.e., socket operations) even if a small number of replicas fail. That is why we use Paxos.

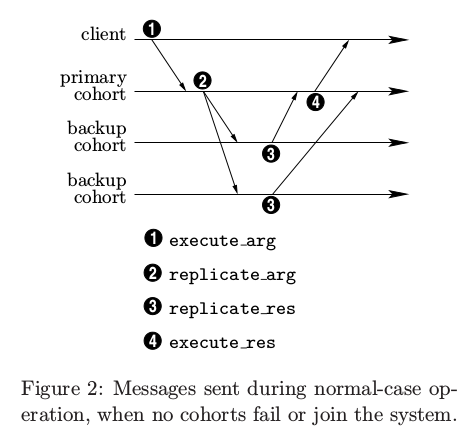

To begin with, let's read Paxos Made Practical carefully. Below is a figure in it:

1) In libevent_paxos, a client connects to a PROXY over a socket and then sends a message such as ‘clientx:send’ to the proxy. Establishing the connection triggers the listener callback proxy_on_accept. When the message arrives at the proxy, it triggers a second callback, client_side_on_read, which handles the message by calling client_process_data. Inside that function, build_req_sub_msg builds the formal message (REQUEST_SUBMIT), which is then sent to &proxy->sys_addr.c_addr, in other words to the corresponding consensus component at 127.0.0.1:8000. The consensus component handles the incoming message with handle_request_submit, which invokes consensus_submit_request. If the current node is the leader, consensus_submit_request calls leader_handle_submit_req to combine the client's request with a view_stamp generated by get_next_view_stamp. Still inside leader_handle_submit_req, build_accept_req builds a new message of type ACCEPT_REQ, which is broadcast to the other replicas via the function uc (i.e., send_for_consensus_comp). This process corresponds to phases 1-2 of the following figure, which is taken from the paper Paxos Made Practical.

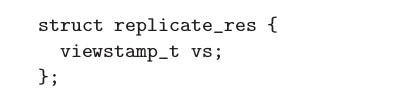
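To illustrate why stamping each request on the leader yields a total order, here is a minimal sketch. The struct layout (a view number plus a per-view request counter) and all `sketch_*` names are assumptions made for illustration; they are not taken from the libevent_paxos sources, whose get_next_view_stamp may work differently.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical layout: a view number plus a per-view request counter. */
typedef struct {
    uint32_t view_id;
    uint32_t req_id;
} sketch_view_stamp;

/* Hypothetical leader state: current view and the last counter handed out. */
typedef struct {
    uint32_t cur_view;
    uint32_t last_req_id;
} sketch_leader;

/* Hand out the next stamp in the current view; stamps strictly increase. */
static sketch_view_stamp sketch_next_view_stamp(sketch_leader *l) {
    sketch_view_stamp vs;
    l->last_req_id += 1;
    vs.view_id = l->cur_view;
    vs.req_id = l->last_req_id;
    return vs;
}

/* Order stamps by view first, then by request counter.
 * Returns -1, 0, or 1 like a typical comparison function. */
static int sketch_vs_cmp(sketch_view_stamp a, sketch_view_stamp b) {
    if (a.view_id != b.view_id) return a.view_id < b.view_id ? -1 : 1;
    if (a.req_id != b.req_id) return a.req_id < b.req_id ? -1 : 1;
    return 0;
}
```

Because only the leader hands out stamps and each stamp is larger than the previous one, every replica that applies requests in stamp order applies them in the same order.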

2) In the figure, step 3 is replicate_res, the acknowledgement that backups send after receiving the request information from the primary. In libevent_paxos, it corresponds to

handle_accept_req (src/consensus/consensus.c)

    // build the reply to the leader
    accept_ack* reply = build_accept_ack(comp,&msg->msg_vs);

Looking at build_accept_ack, we can see that what the backups deliver to the primary is:

    msg->node_id = comp->node_id;
    msg->msg_vs = *vs;
    msg->header.msg_type = ACCEPT_ACK;

which is similar to replicate_res in the paper.

So libevent_paxos also follows what Paxos Made Practical describes.
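The three assignments above can be put into a small self-contained sketch of an acknowledgement builder. The type definitions, enum values, and `sketch_*` names are hypothetical stand-ins; only the three field names (node_id, msg_vs, header.msg_type) come from the quoted code.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical types; the real definitions in libevent_paxos may differ. */
typedef struct { uint32_t view_id; uint32_t req_id; } sketch_view_stamp;
typedef enum { SKETCH_ACCEPT_REQ = 1, SKETCH_ACCEPT_ACK = 2 } sketch_msg_type;
typedef struct { sketch_msg_type msg_type; } sketch_msg_header;

typedef struct {
    sketch_msg_header header;   /* what kind of message this is */
    uint32_t node_id;           /* which backup is acknowledging */
    sketch_view_stamp msg_vs;   /* which request is being acknowledged */
} sketch_accept_ack;

/* Allocate and fill an acknowledgement; the caller frees it.
 * Returns NULL if allocation fails. */
static sketch_accept_ack *sketch_build_accept_ack(uint32_t node_id,
                                                  const sketch_view_stamp *vs) {
    sketch_accept_ack *msg = malloc(sizeof *msg);
    if (msg == NULL) {
        return NULL;
    }
    msg->header.msg_type = SKETCH_ACCEPT_ACK;
    msg->node_id = node_id;
    msg->msg_vs = *vs;
    return msg;
}
```

The acknowledgement carries no payload: the sender's id and the view stamp are enough for the primary to know who accepted which request.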

Next, libevent_paxos should execute the request after receiving acknowledgements from a majority of replicas. The execution path is

handle_accept_ack

try_to_execute

leader_try_to_execute

As for how the ack is sent to the primary, that is the job of the function uc (a.k.a. send_for_consensus_comp). Let's look at it.

    // consensus part
    static void send_for_consensus_comp(node* my_node,size_t data_size,void* data,int target){
        consensus_msg* msg = build_consensus_msg(data_size,data);
        if(NULL==msg){
            goto send_for_consensus_comp_exit;
        }
        // means send to every node except me
        if(target<0){
            for(uint32_t i=0;i<my_node->group_size;i++){
                if(i!=my_node->node_id && my_node->peer_pool[i].active){
                    struct bufferevent* buff = my_node->peer_pool[i].my_buff_event;
                    bufferevent_write(buff,msg,CONSENSUS_MSG_SIZE(msg));
                    SYS_LOG(my_node,"Send Consensus Msg To Node %u\n",i);
                }
            }
        }else{
            if(target!=(int)my_node->node_id&&my_node->peer_pool[target].active){
                struct bufferevent* buff = my_node->peer_pool[target].my_buff_event;
                bufferevent_write(buff,msg,CONSENSUS_MSG_SIZE(msg));
                SYS_LOG(my_node,"Send Consensus Msg To Node %u.\n",target);
            }
        }
    send_for_consensus_comp_exit:
        if(msg!=NULL){
            free(msg);
        }
        return;
    }

So, uc sees to delivering messages between the primary and the backups. For the same reason, when a client request arrives at the primary, the primary also uses uc (a.k.a. send_for_consensus_comp) to broadcast the request information to the backups.
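The recipient-selection rule in send_for_consensus_comp (a negative target means "everyone active except me", otherwise a single active peer) can be isolated from libevent as in the sketch below. The function name, the flat `active` array, and the extra bounds check on `target` are assumptions added for illustration; they are not part of the original function.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Fill out[] with the ids of the nodes that would receive the message and
 * return how many there are. out[] must have room for group_size entries.
 * active[i] is non-zero when peer i is currently reachable. */
static size_t sketch_pick_targets(uint32_t my_id, const int *active,
                                  uint32_t group_size, int target,
                                  uint32_t *out) {
    size_t n = 0;
    if (target < 0) {
        /* Broadcast: every active node except the sender. */
        for (uint32_t i = 0; i < group_size; i++) {
            if (i != my_id && active[i]) {
                out[n++] = i;
            }
        }
    } else if ((uint32_t)target < group_size   /* bounds check: an addition */
               && (uint32_t)target != my_id
               && active[target]) {
        /* Unicast: one active peer that is not the sender. */
        out[n++] = (uint32_t)target;
    }
    return n;
}
```

For example, with three nodes where node 2 is inactive, a broadcast from node 0 reaches only node 1, and a unicast to node 2 reaches nobody.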

3) Step 4 in the figure remains to be explained.

    group_size = 3;
    leader_try_to_execute
    {
        if(reached_quorum(record_data,comp->group_size))
        {
            SYS_LOG(comp,"Node %d : View Stamp%u : %u Has Reached Quorum.\n",…)
            SYS_LOG(comp,"Before Node %d IncExecute %u : %u.\n",…)
            SYS_LOG(comp,"After Node %d Inc Execute %u : %u.\n",…)
        }
    }

    static int reached_quorum(request_record*record,int group_size){
        // this may be compatibility issue
        if(__builtin_popcountl(record->bit_map)>=((group_size/2)+1)){
            return 1;
        }else{
            return 0;
        }
    }

__builtin_popcountl, a GCC builtin, returns the number of 1 bits in its unsigned long argument.
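The "compatibility issue" comment may refer to the fact that unsigned long is not 64 bits wide on every platform, while bit_map is a uint64_t. A minimal sketch of the same majority test using __builtin_popcountll (the unsigned long long variant, available in GCC and Clang) is shown below; the function name is hypothetical, and this is a suggested variant, not the project's code.

```c
#include <assert.h>
#include <stdint.h>

/* Return 1 when the number of set bits in bit_map is a strict majority
 * of group_size, i.e. at least (group_size / 2) + 1, and 0 otherwise. */
static int sketch_reached_quorum(uint64_t bit_map, int group_size) {
    /* unsigned long long is at least 64 bits, so no bits are dropped. */
    int acks = __builtin_popcountll(bit_map);
    return acks >= (group_size / 2) + 1;
}
```

With group_size = 3 the threshold is 2, so a bitmap with two bits set reaches quorum and a bitmap with one bit set does not.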

So next we should pay attention to the data structure record->bit_map.

In consensus.c

    typedef struct request_record_t{
        struct timeval created_time; // data created timestamp
        char is_closed;
        uint64_t bit_map; // now we assume the maximal replica group size is 64;
        size_t data_size; // data size
        char data[0]; // real data
    }__attribute__((packed))request_record;

Before exploring further, let us suppose that the leader uses bit_map to record how many replicas have accepted the request it proposed, and that the leader can execute the request if and only if that number reaches the quorum (i.e., a majority, (group_size/2)+1; for group_size = 3 the quorum is 2). Under this assumption, there must be a place where the ACCEPT_ACK messages are recorded, and it must come after the leader has received the ACCEPT_ACK messages from the other replicas. Let's find that place to verify our hypothesis.

    consensus_handle_msg
    {
        case ACCEPT_ACK:
            handle_accept_ack(comp,data)
            {
                update_record(record_data,msg->node_id)
                {
                    record->bit_map= (record->bit_map | (1<<node_id));
                    //debug_log("the record bit map is updated to %x\n",record->bit_map);
                }
            }
    }

So our assumption is correct. Moreover, the expression

    record->bit_map | (1<<node_id)

shows that the number of 1 bits in record->bit_map is the number of replicas that have sent ACCEPT_ACK.
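One consequence of using a bitmap rather than a counter is that a duplicate ACCEPT_ACK from the same node cannot be counted twice. The sketch below demonstrates this with hypothetical helper names. It also uses a 64-bit constant for the shift: in the quoted expression the literal 1 is an int, so the shift is not well-defined for large node ids on platforms with a 32-bit int. The range check is likewise an addition.

```c
#include <assert.h>
#include <stdint.h>

/* Mark node_id as having acknowledged. Setting an already-set bit is a
 * no-op, so repeated acks from one node do not inflate the count. */
static uint64_t sketch_record_ack(uint64_t bit_map, uint32_t node_id) {
    if (node_id >= 64) {
        return bit_map;              /* out of range: ignore (assumption) */
    }
    return bit_map | (UINT64_C(1) << node_id);
}

/* Number of distinct nodes that have acknowledged so far. */
static int sketch_ack_count(uint64_t bit_map) {
    return __builtin_popcountll(bit_map);
}
```

Recording node 1 twice leaves exactly one bit set, and recording node 40 afterwards raises the count to two.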

Evaluation Framework

- Python script

./eval.py apache_ab.cfg

- DEBUG Logs: proxy-req.log | proxy-sys.log | consensus-sys.log

node-0-proxy-req.log:

1418002395 : 1418002395.415361,1418002395.415362,1418002395.416476,1418002395.416476

Operation: Connects.

1418002395 : 1418002395.415617,1418002395.415619,1418002395.416576,1418002395.416576

Operation: Sends data: (START):client0:send:(END)

1418002395 : 1418002395.416275,1418002395.416276,1418002395.417113,1418002395.417113

Operation: Closes.

In proxy-req.log, what does a line such as 1418002395 : 1418002395.415361,1418002395.415362,1418002395.416476,1418002395.416476 mean? It is written by:

    fprintf(output,"%lu : %lu.%06lu,%lu.%06lu,%lu.%06lu,%lu.%06lu\n",header->connection_id,
            header->received_time.tv_sec,header->received_time.tv_usec,
            header->created_time.tv_sec,header->created_time.tv_usec,
            endtime.tv_sec,endtime.tv_usec,endtime.tv_sec,endtime.tv_usec);

Each line shows the connection ID followed by the received time, the created time, and the end time of the operation; the end time is printed twice.
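To turn a pair of these timestamps into a latency, subtract them as struct timeval values. The helper below is a minimal sketch with a hypothetical name; it is not part of the evaluation scripts.

```c
#include <assert.h>
#include <stdint.h>
#include <sys/time.h>

/* Elapsed time from start to end in microseconds.
 * Works across second boundaries because seconds and microseconds are
 * combined into a single signed 64-bit value. */
static int64_t sketch_elapsed_us(struct timeval start, struct timeval end) {
    int64_t sec = (int64_t)end.tv_sec - (int64_t)start.tv_sec;
    int64_t usec = (int64_t)end.tv_usec - (int64_t)start.tv_usec;
    return sec * 1000000 + usec;
}
```

For the "Connects" line above, the received time is 1418002395.415361 and the end time is 1418002395.416476, so the elapsed time is 416476 - 415361 = 1115 us.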

- Google Spreadsheets

| suites | benchmark | workload per client | Server # | Concurrency # | Client # | Requests # | w/ proxy consensus mean (us) | w/ proxy consensus std. dev. | w/ proxy response mean (us) | w/ proxy response std. dev. | w/ proxy throughput (Req/s) | w/ proxy server mean (us) | w/ proxy server throughput (Req/s) | w/o proxy server mean (us) | w/o proxy server throughput (Req/s) | overhead mean (us) | notes |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Mongoose | Ab | 10 | 3 | 10 | 100 | 5434 | 11784.4 | 12637 | 12137.4 | 12671 | 1125.57 | 6024 | 1660.03 | 1865 | 5361.93 | 4159 | |
| Mongoose | Ab | 100 | 3 | 10 | 100 | 58384 | 9650.152 | 15138.11 | 9992.337 | 15145.30 | 2485.936 | 16216 | 616.69 | 1315 | 7605.72 | 14901 | |
| Mongoose | Ab | 100 | 3 | 50 | 100 | 57812 | 17456.41 | 17694.93 | 18459.16 | 17518.62 | 5127.989 | 70611 | 708.1 | 5744 | 8704.74 | 64867 | |
| Apache | Ab | 10 | 3 | 10 | 100 |
