Scraping goal

1. The code in this post was tested and runs on Python 3, with:

- Selenium 3 + Firefox 59.0.1 (the latest version at the time of writing)

- BeautifulSoup

- requests
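Before running the script, you can confirm that your environment matches the packages listed above with a small check like the one below. It is a sketch assuming Python 3.8+ (for `importlib.metadata`) and the usual PyPI distribution names `selenium`, `beautifulsoup4` and `requests`; the helper name `installed_versions` is hypothetical, and it does not check the Firefox version.

```python
# Sketch: report installed versions of the packages this post relies on.
# Assumes Python 3.8+ and the usual PyPI distribution names (an assumption).
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages=("selenium", "beautifulsoup4", "requests")):
    """Return {distribution name: version string, or None if not installed}."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None  # package is missing from this environment
    return result


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print("%s: %s" % (name, ver or "not installed"))
```

If `selenium` reports a 4.x version, note that the script in this post uses the Selenium 3 API.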

2. Target site: my blog, https://home.cnblogs.com/u/lxs1314
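The follower list lives under this address at `/relation/followers`, and later pages add a `?page=N` query string (this is the pattern the script below uses). A minimal sketch that lists those page URLs; `follower_page_urls` is a hypothetical helper, not part of the original script:

```python
# Hypothetical helper (not part of the original script): list the follower-page
# URLs for pages 1..pages, following the same URL pattern as save_name().
def follower_page_urls(base_url, pages):
    """Return one follower-list URL per page, from page 1 to `pages`."""
    urls = []
    for n in range(1, pages + 1):
        if n == 1:
            # Page 1 is requested without a ?page= query string
            urls.append(base_url + "/relation/followers")
        else:
            urls.append(base_url + "/relation/followers?page=%d" % n)
    return urls


if __name__ == "__main__":
    for u in follower_page_urls("https://home.cnblogs.com/u/lxs1314", 3):
        print(u)
```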

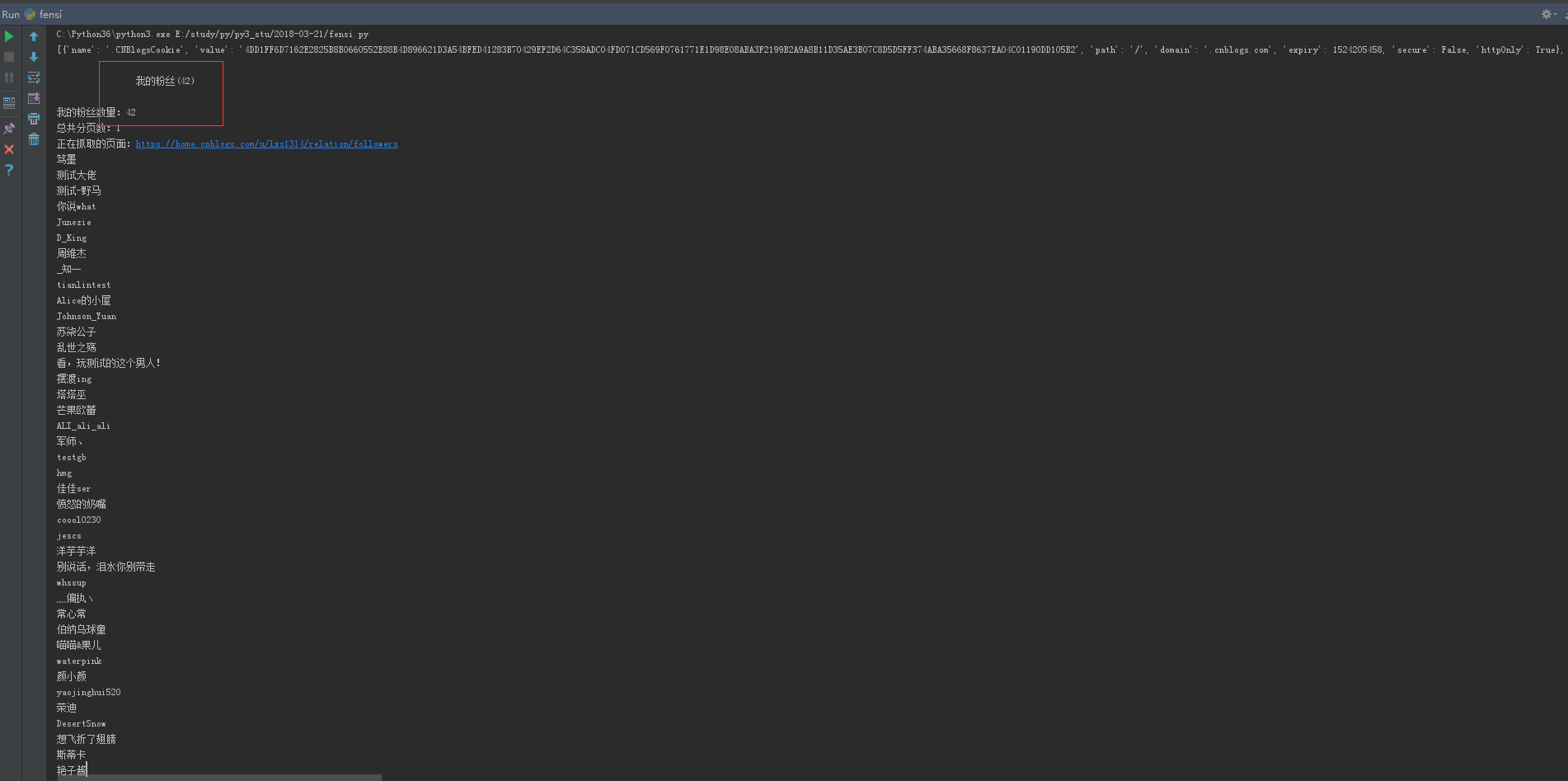

What to scrape: the names of all followers of my blog, saved to a .txt file.
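The extraction step boils down to "collect the text of every element with class `avatar_name`, then append it to a text file, one name per line". The script below does this with BeautifulSoup; the sketch here swaps in the standard library's `html.parser` so it runs without extra packages. The class name comes from the script; the sample HTML, `AvatarNameParser` and `save_names` are hypothetical.

```python
# Sketch using the stdlib html.parser instead of BeautifulSoup (a swap-in, not
# the original method). Collects the text of elements with class "avatar_name".
from html.parser import HTMLParser

# Void elements never get an end tag, so they must not affect nesting depth
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class AvatarNameParser(HTMLParser):
    """Hypothetical parser: gathers stripped text of .avatar_name elements."""

    def __init__(self):
        super().__init__()
        self.names = []
        self._depth = 0   # > 0 while inside an avatar_name element
        self._parts = []  # text fragments of the current element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._depth:
            self._depth += 1  # nested tag inside the element we are collecting
            return
        classes = (dict(attrs).get("class") or "").split()
        if "avatar_name" in classes:
            self._depth = 1
            self._parts = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:
            name = "".join(self._parts).strip()
            if name:
                self.names.append(name)

    def handle_data(self, data):
        if self._depth:
            self._parts.append(data)


def save_names(names, path):
    """Append names to a UTF-8 text file, one per line."""
    with open(path, "a", encoding="utf-8") as f:
        for name in names:
            f.write(name + "\n")
```

Usage: `p = AvatarNameParser(); p.feed(html); save_names(p.names, "name.txt")`. Opening the file with `encoding="utf-8"` avoids depending on the platform default encoding when names contain Chinese characters.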

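3. Logging in to cnblogs requires human verification (a CAPTCHA), so you cannot log in directly with a username and password. Instead, the login is handled through Selenium.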
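In the script below, Selenium starts Firefox with an existing profile (one that is already logged in), and `driver.get_cookies()` returns the cookies as a list of dicts with at least `"name"` and `"value"` keys. Handing the login over to `requests` only needs those name/value pairs. A minimal sketch of that conversion; the helper names and sample cookies are hypothetical:

```python
# Sketch: convert Selenium-style cookie dicts (as returned by
# driver.get_cookies()) into forms requests can use. Helper names are hypothetical.
def cookies_to_dict(selenium_cookies):
    """Flatten [{'name': ..., 'value': ..., ...}, ...] into {name: value}."""
    return {c["name"]: c["value"] for c in selenium_cookies}


def cookie_header(selenium_cookies):
    """Build a Cookie request-header value such as 'a=1; b=2'."""
    return "; ".join("%s=%s" % (c["name"], c["value"]) for c in selenium_cookies)


if __name__ == "__main__":
    # Hypothetical sample data; real cookies come from driver.get_cookies()
    sample = [{"name": "a", "value": "1", "domain": ".cnblogs.com"},
              {"name": "b", "value": "2"}]
    print(cookies_to_dict(sample))
    print(cookie_header(sample))
```

With a `requests` session `s`, `s.cookies.update(cookies_to_dict(cookies))` should behave like `add_cookies`, since `RequestsCookieJar.update` also accepts a plain dict. Note that only the name and value are copied; domain and path information is dropped.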

Here is the code:

```python
# coding:utf-8
# __author__ = 'Carry'
import re
import time

import requests
from bs4 import BeautifulSoup
from selenium import webdriver

# Path to the Firefox profile directory (a profile already logged in to cnblogs)
profile_directory = r'C:\Users\Administrator\AppData\Roaming\Mozilla\Firefox\Profiles\pxp74n2x.default'

s = requests.session()  # create a new session
url = "https://home.cnblogs.com/u/lxs1314"


def get_cookies(url):
    '''Start Selenium and read the logged-in cookies.'''
    # Load the Firefox profile
    profile = webdriver.FirefoxProfile(profile_directory)
    # Launch Firefox with that profile (Selenium 3 API)
    driver = webdriver.Firefox(firefox_profile=profile)
    driver.get(url + "/followers")
    time.sleep(3)
    cookies = driver.get_cookies()  # get the browser's cookies
    print(cookies)
    driver.quit()
    return cookies


def add_cookies(cookies):
    '''Add the cookies to the requests session.'''
    # Copy the cookies into a CookieJar
    c = requests.cookies.RequestsCookieJar()
    for i in cookies:
        c.set(i["name"], i["value"])
    s.cookies.update(c)  # update the session's cookies


def get_ye_nub(url):
    '''Return the number of follower pages.'''
    r1 = s.get(url + "/relation/followers")
    soup = BeautifulSoup(r1.content, "html.parser")
    # Read the follower count; the page text looks like "我的粉丝(N)" ("My followers (N)")
    fensinub = soup.find_all(class_="current_nav")
    print(fensinub[0].string)
    num = re.findall(r"我的粉丝\((.+?)\)", fensinub[0].string)
    print("Number of followers: %s" % num[0])
    # 45 followers per page; round up so an exact multiple of 45 adds no empty page
    ye = (int(num[0]) + 44) // 45
    print("Total pages: %s" % ye)
    return ye


def save_name(nub):
    '''Scrape the follower names on page `nub` and append them to name.txt.'''
    # Page 1 has no ?page= parameter
    if nub <= 1:
        url_page = url + "/relation/followers"
    else:
        url_page = url + "/relation/followers?page=%s" % nub
    print("Scraping page: %s" % url_page)
    r2 = s.get(url_page)
    soup = BeautifulSoup(r2.content, "html.parser")
    fensi = soup.find_all(class_="avatar_name")
    # Append mode; UTF-8 so Chinese names are written correctly on any platform
    with open("name.txt", "a", encoding="utf-8") as f:
        for i in fensi:
            # Drop surrounding newlines/whitespace and any inner spaces
            name = i.get_text(strip=True).replace(" ", "")
            print(name)
            f.write(name + "\n")


if __name__ == "__main__":
    cookies = get_cookies(url)
    add_cookies(cookies)
    n = get_ye_nub(url)
    for i in range(1, n + 1):
        save_name(i)
```
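The page count comes from two small steps: pull the number out of the "我的粉丝(N)" text, then divide by the 45 followers per page, rounding up. The sketch below isolates that logic so it can be checked without a browser. Whether the page uses ASCII or full-width parentheses is an assumption, so the pattern accepts both; the function names are hypothetical.

```python
# Sketch: follower-count parsing and page-count arithmetic, isolated for testing.
# The 45-per-page figure comes from the script; function names are hypothetical.
import re

PER_PAGE = 45


def parse_follower_count(text):
    """Extract N from text like '我的粉丝(123)' (ASCII or full-width parens)."""
    m = re.search(r"我的粉丝[((](\d+)[))]", text)
    return int(m.group(1)) if m else None


def page_count(total, per_page=PER_PAGE):
    """Ceiling division: pages needed to list `total` followers."""
    return (total + per_page - 1) // per_page


if __name__ == "__main__":
    n = parse_follower_count("我的粉丝(90)")
    print(n, page_count(n))  # 90 followers fit on exactly 2 pages
```

Rounding up matters at exact multiples: a formula of the form `int(n/45)+1` gives 3 pages for 90 followers, so the last request would fetch an empty page.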

Original post: http://www.cnblogs.com/yoyoketang/p/8610779.html