I have a large file (~4 GB) to process in Python, and I wondered whether it is OK to "read" a file that large. So I tried the following approaches:

The file I actually need to process is not "./CentOS-6.5-i386.iso"; I am only using that file as an example here.

1: Normal Method. (ignore try/except/finally)

    def main():
        f = open(r"./CentOS-6.5-i386.iso", "rb")
        for line in f:
            print(line, end="")
        f.close()

    if __name__ == "__main__":
        main()

2: "With" Method.

    def main():
        with open(r"./CentOS-6.5-i386.iso", "rb") as f:
            for line in f:
                print(line, end="")

    if __name__ == "__main__":
        main()

3: "readlines" Method. [Bad Idea]

    # NO. readlines() is really bad for large files.
    # Memory Error.
    def main():
        for line in open(r"./CentOS-6.5-i386.iso", "rb").readlines():
            print(line, end="")

    if __name__ == "__main__":
        main()

4: "fileinput" Method.

    import fileinput

    def main():
        for line in fileinput.input(files=r"./CentOS-6.5-i386.iso", mode="rb"):
            print(line, end="")

    if __name__ == "__main__":
        main()

5: "Generator" Method.

    def readFile():
        with open(r"./CentOS-6.5-i386.iso", "rb") as f:
            for line in f:
                yield line

    def main():
        for line in readFile():
            print(line, end="")

    if __name__ == "__main__":
        main()

All of the methods above work well for small files, but not all of them work for large files: the readlines method fails. readlines() loads the entire file into memory as a list of lines before the loop even starts.

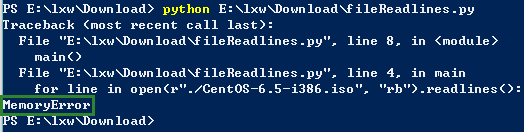

When I ran the readlines method, I got the following error message:

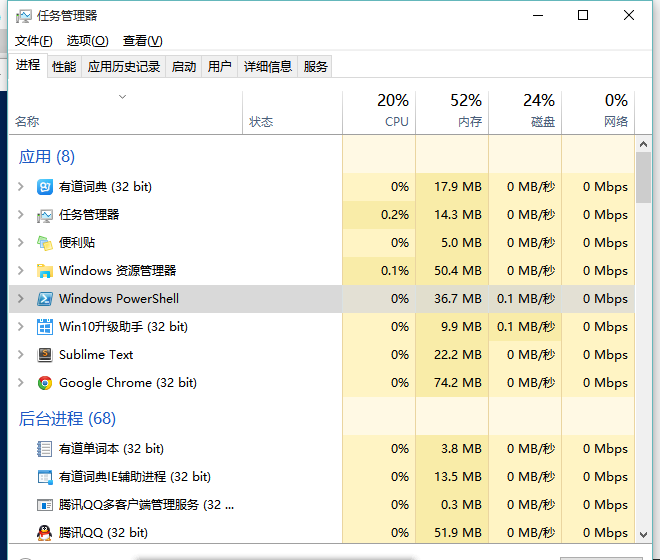

With the readlines method, CPU usage and memory usage rise rapidly (see the following figure). Once memory usage went above 50%, I got a "MemoryError" in Python.

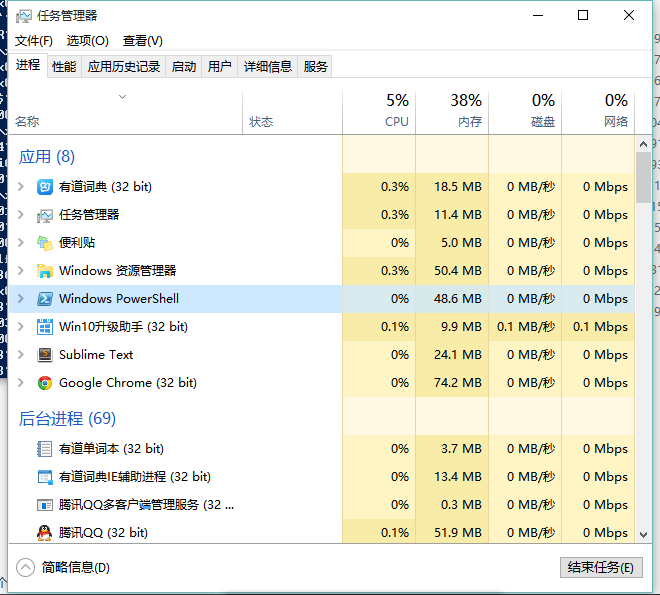
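The same difference in memory use can be reproduced on a much smaller file. The following is only a minimal sketch, not the measurement behind the figures in this post: it uses the standard `tracemalloc` module to compare the peak memory allocated by `readlines()` with the peak for plain iteration. The helper names (`peak_memory`, `count_with_readlines`, `count_with_iteration`, `make_sample_file`) and the sample file size are assumptions I made up for illustration.

```python
import os
import tempfile
import tracemalloc


def peak_memory(func, path):
    """Return the peak bytes allocated by Python while func(path) runs."""
    tracemalloc.start()
    try:
        func(path)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return peak


def count_with_readlines(path):
    with open(path, "rb") as f:
        return len(f.readlines())  # builds a list holding every line at once


def count_with_iteration(path):
    with open(path, "rb") as f:
        return sum(1 for _ in f)  # only one line is alive at a time


def make_sample_file(num_lines=20000):
    """Create a temporary file of 100-byte lines (hypothetical test data)."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        for _ in range(num_lines):
            f.write(b"x" * 99 + b"\n")
    return path


if __name__ == "__main__":
    path = make_sample_file()
    try:
        print("readlines peak bytes:", peak_memory(count_with_readlines, path))
        print("iteration peak bytes:", peak_memory(count_with_iteration, path))
    finally:
        os.remove(path)
```

The readlines peak grows with the file size, while the iteration peak stays roughly constant, which matches what the figures show for the 4 GB file.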

The other methods (Normal Method, With Method, fileinput Method, Generator Method) work well for large files. With these methods, the CPU and memory load, which is shown in the following figure, does not rise noticeably.

By the way, I recommend the generator method, because it shows clearly that you have taken the file size into account.
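One caveat with iterating "by line" over a binary file such as an ISO image: the data has no real line structure, so a single "line" can be very long if no newline byte happens to occur for a while. A possible variation on the generator method, sketched here under my own assumptions, is to yield fixed-size chunks instead. The names `read_in_chunks` and `file_size_by_chunks` and the 1 MiB default chunk size are hypothetical choices, not something taken from the tests above.

```python
import os
import tempfile


def read_in_chunks(path, chunk_size=1024 * 1024):
    """Yield successive blocks of at most chunk_size bytes from a file."""
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:  # an empty bytes object means end of file
                break
            yield chunk


def file_size_by_chunks(path, chunk_size=1024 * 1024):
    """Example consumer: add up chunk lengths without holding the whole file."""
    return sum(len(chunk) for chunk in read_in_chunks(path, chunk_size))


if __name__ == "__main__":
    # Small throwaway file so the sketch can run anywhere.
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.write(b"\x00" * 2500)
    try:
        print([len(c) for c in read_in_chunks(path, chunk_size=1000)])
        print(file_size_by_chunks(path, chunk_size=1000))
    finally:
        os.remove(path)
```

Memory use is then bounded by the chunk size rather than by where newline bytes happen to fall in the data.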

Reference: