- 深入解析:Shared Variables

- 深入解析:RDD Persistence

- 深入解析:RDD Key Value Pairs API

- 额外知识点:Implicit Conversion

Shared Variables

一般来说,Spark中的变量都是local变量,每个executor都有一份自己的copy,本地的变化不会反应到driver上。除此之外,Spark也提供了两种方法,实现全局变量。

Broadcast Variables

Broadcast variables are created from a variable v by calling SparkContext.broadcast(v).

scala> val broadcastVar = sc.broadcast(Array(1, 2, 3))

broadcastVar: org.apache.spark.broadcast.Broadcast[Array[Int]] = Broadcast(0)

scala> broadcastVar.value

res0: Array[Int] = Array(1, 2, 3)

创建了broadcast value之后,原始变量v就不要再用了,更不要再去修改它的值,以免发生错误。

Accumulators

A numeric accumulator can be created by calling SparkContext.longAccumulator() or SparkContext.doubleAccumulator() to accumulate values of type Long or Double, respectively.

scala> val accum = sc.longAccumulator("My Accumulator")

accum: org.apache.spark.util.LongAccumulator = LongAccumulator(id: 0, name: Some(My Accumulator), value: 0)

scala> sc.parallelize(Array(1, 2, 3, 4)).foreach(x => accum.add(x))

...

10/09/29 18:41:08 INFO SparkContext: Tasks finished in 0.317106 s

scala> accum.value

res2: Long = 10

Programmers can also create their own types by subclassing AccumulatorV2.

RDD Persistence

RDD主要是存储在

- 内存(Memory)

- 硬盘(Disk)

存在内存中,当然运算起来快,但是受到内存容量的限制。存在硬盘中,可以更加廉价地存储大量数据,但是读写上有速度限制。选择时需要综合考虑。

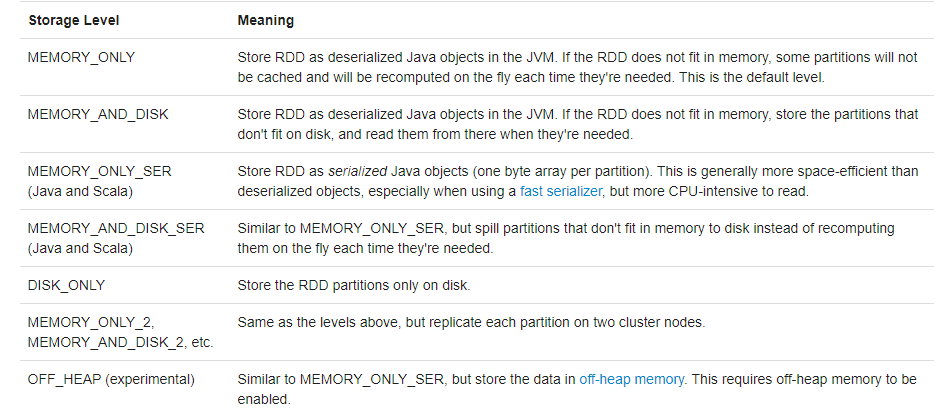

由此,引出 storage levels 如下图:

在上图中,除了上面提到的Memory,Disk,还有第三个变量Serialization,即序列化后的对象存储空间更小,但是需要额外计算(反序列化)消耗。

最终的存储级别,基本上就是这三个变量的一些组合。

在代码层面,可以使用两个方法实现persistence:

persist():可以选择StorageLevelcache():使用默认存储级别StorageLevel.MEMORY_ONLY

Which Storage Level to Choose?

- 根据数据量,从小到大,依次从上图选择。

- 如果需要fast fault recovery (e.g. if using Spark to serve requests from a web application),使用replicated storage levels,即

MEMORY_ONLY_2,MEMORY_AND_DISK_2, etc.

RDD Key Value Pairs API

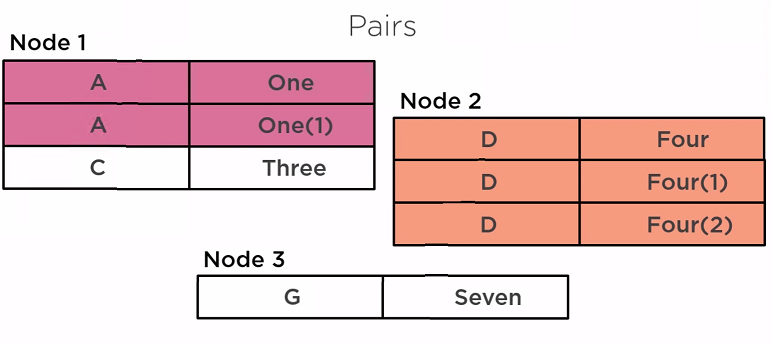

Spark中Key Value Pair如果有多个值,在partition的时候,会尽量把同一个key的所有pairs分到一个node上。这样的好处是可以在单个node上完成所有关于这个key的操作。

下面列举一些常用API,都是在Class PairRDDFunctions<K,V>下的:

1

collectAsMap()

Return the key-value pairs in this RDD to the master as a Map.

mapValues(scala.Function1<V,U> f)

Pass each value in the key-value pair RDD through a map function without changing the keys;

flatMapValues(scala.Function1<V,scala.collection.TraversableOnce<U>> f)

Pass each value in the key-value pair RDD through a flatMap function without changing the keys;

reduceByKey(scala.Function2<V,V,V> func)

Merge the values for each key using an associative reduce function.

groupByKey()

Group the values for each key in the RDD into a single sequence.

countByKey()

Count the number of elements for each key, and return the result to the master as a Map.

2

join(RDD<scala.Tuple2<K,W>> other)

Return an RDD containing all pairs of elements with matching keys in this and other.

leftOuterJoin(RDD<scala.Tuple2<K,W>> other)

Perform a left outer join of this and other.

rightOuterJoin(RDD<scala.Tuple2<K,W>> other)

Perform a right outer join of this and other.

3

public void saveAsHadoopFile(String path,

Class<?> keyClass,

Class<?> valueClass,

Class<? extends org.apache.hadoop.mapred.OutputFormat<?,?>> outputFormatClass,

Class<? extends org.apache.hadoop.io.compress.CompressionCodec> codec)

Output the RDD to any Hadoop-supported file system, using a Hadoop OutputFormat class supporting the key and value types K and V in this RDD.

Implicit Conversion

隐式转换,即将typeS转换为typeT。

举例1,when calling a Java method that expects a java.lang.Integer, you are free to pass it a scala.Int instead by using 'Implicit Conversion'.

import scala.language.implicitConversions

implicit def int2Integer(x: Int) =

java.lang.Integer.valueOf(x)

举例2,通过隐式转换实现1.plus(1)。

// 1

case class IntExtensions(value: Int) {

def plus(operand: Int) = value + operand

}

// 2

import scala.language.implicitConversions

implicit def intToIntExtensions(value: Int) = {

IntExtensions(value)

}