CUDA

Compute Unified Device Architecture

CUDA C/C++

A programming approach based on C/C++

Extensions that support heterogeneous programming

A simple, straightforward API

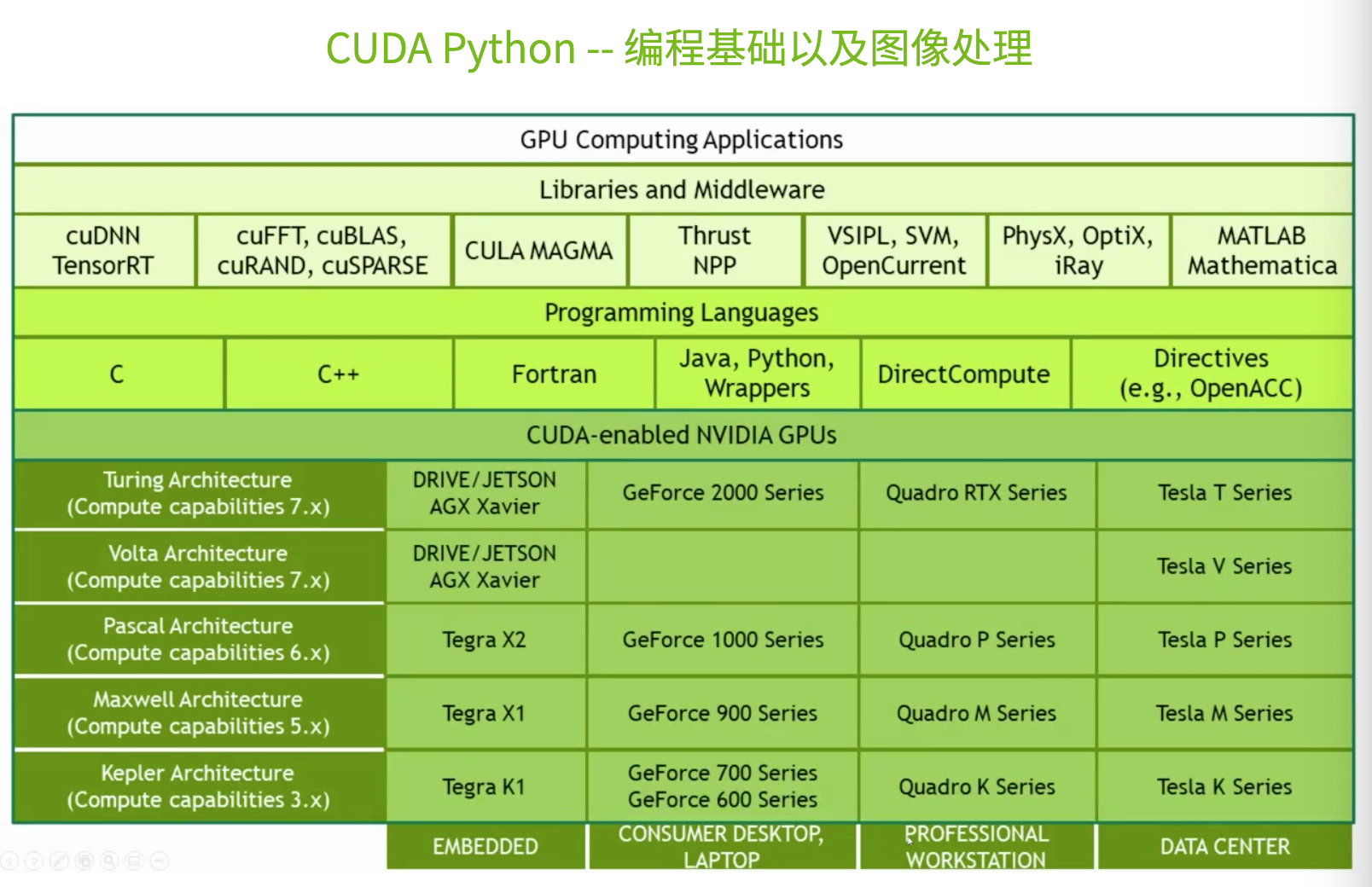

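Programming languages supported by CUDA:

C/C++/Python/Fortran/Java/...

CUDA parallel computing model

Parallel computing applies multiple computing resources at the same time to solve a single computational problem

(trading space for time: more hardware is used so that the work finishes sooner)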
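The idea of splitting one problem across several workers can be sketched in plain Python. This is only an illustration of the decomposition, not CUDA code: the helper names `partial_sum` and `parallel_sum` are made up for this example, and because of the CPython GIL the threads here show the structure of the approach rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum(chunk):
    """Sum one slice of the data; each worker handles one slice."""
    return sum(chunk)


def parallel_sum(data, workers=4):
    """Split data into roughly equal chunks and sum the chunks concurrently."""
    size = max(1, (len(data) + workers - 1) // workers)  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each chunk is reduced independently, then the partial results are combined.
        return sum(pool.map(partial_sum, chunks))


data = list(range(1_000))
total = parallel_sum(data)
```

On a GPU the same pattern applies at a much finer grain: thousands of threads each handle a small piece of the input.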

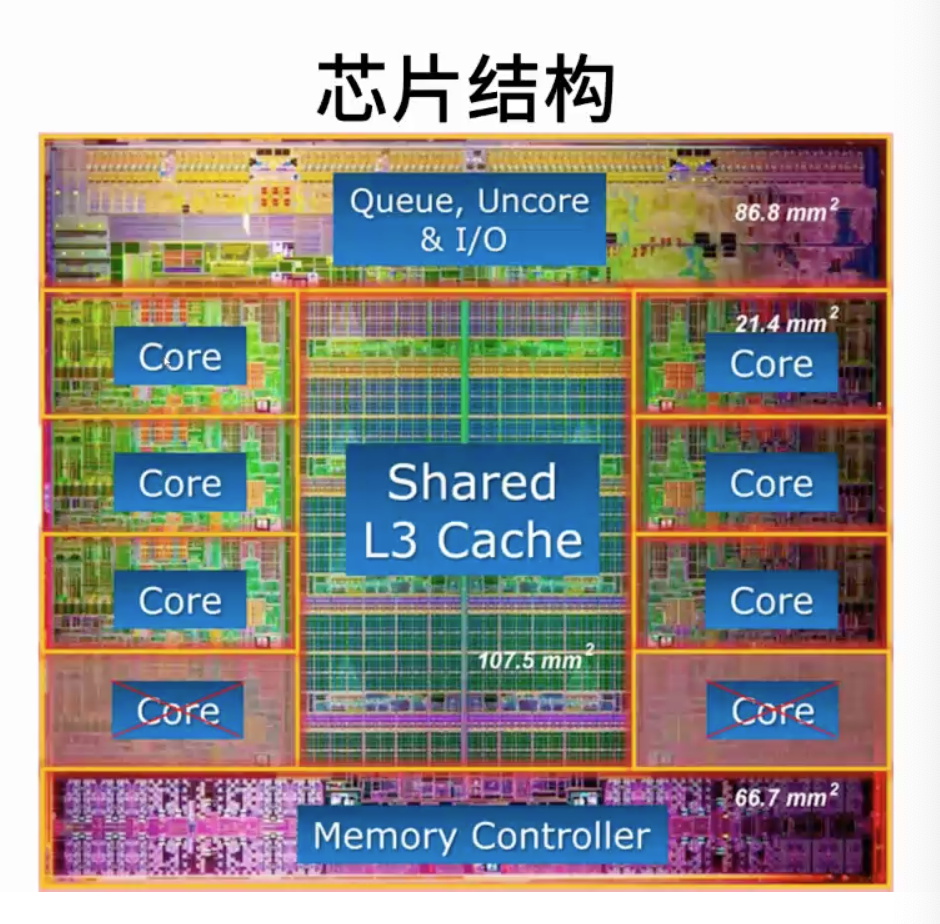

Heterogeneous computing

Host: the CPU and its memory (host memory)

Device: the GPU and its video memory (device memory)

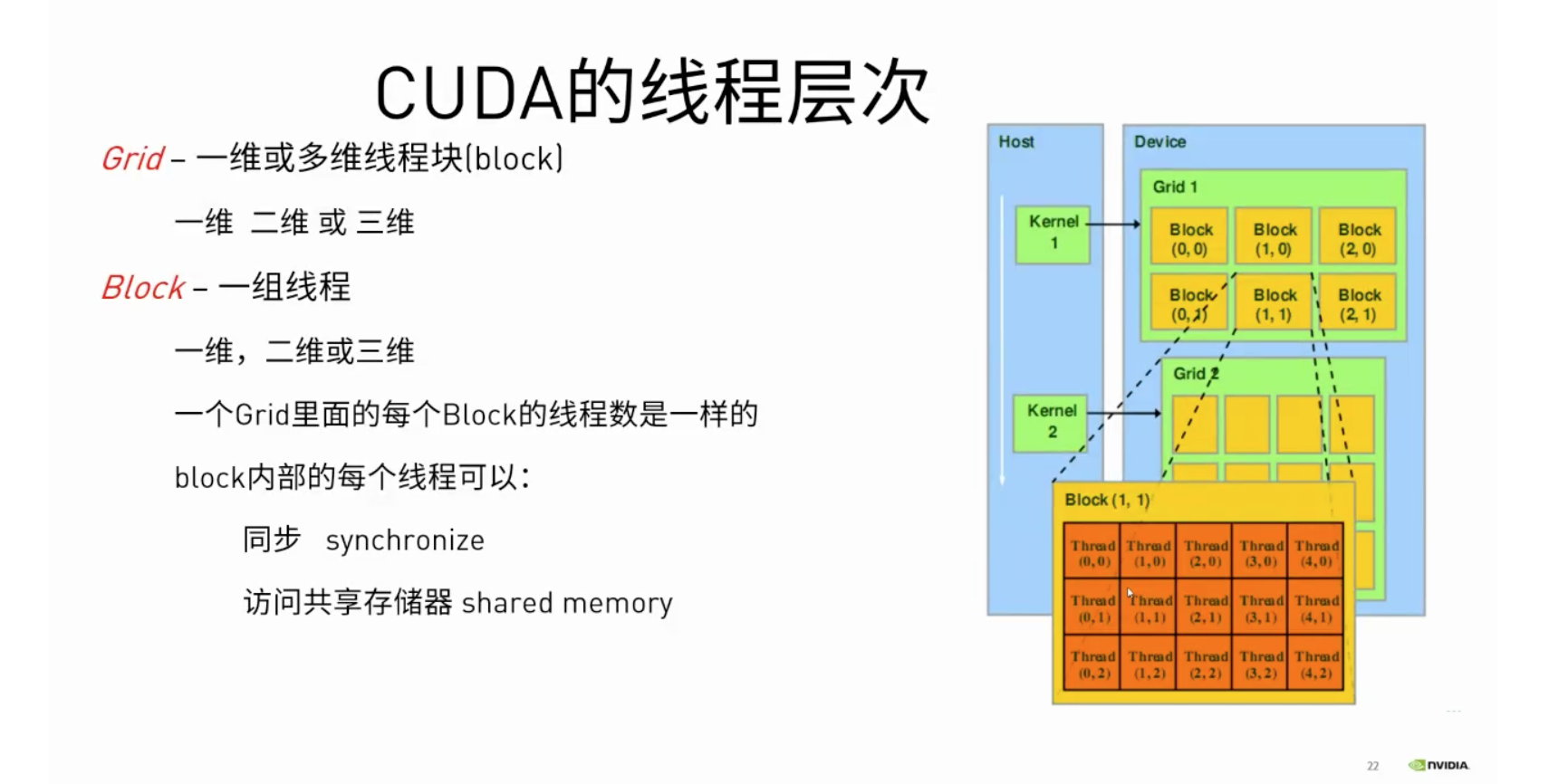

32 CUDA cores (running one warp of 32 threads) share one execution context
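To make the grouping into warps concrete, the following plain-Python sketch maps a thread's linear index within a block to its warp and its position (lane) inside that warp. The function names are hypothetical and exist only for this illustration; the warp size of 32 is the value stated above.

```python
WARP_SIZE = 32  # threads per warp, as stated in the notes


def warp_and_lane(thread_index):
    """Return (warp id, lane id) for a linear thread index within a block."""
    return divmod(thread_index, WARP_SIZE)


def warps_needed(block_size):
    """Number of warps required to hold block_size threads (rounded up)."""
    return (block_size + WARP_SIZE - 1) // WARP_SIZE


first = warp_and_lane(0)       # first thread of the first warp
second = warp_and_lane(33)     # second thread of the second warp
small_block = warps_needed(12) # a 12-thread block still occupies a whole warp
```

One consequence worth noting is that a block whose size is not a multiple of 32 leaves some lanes of its last warp idle.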

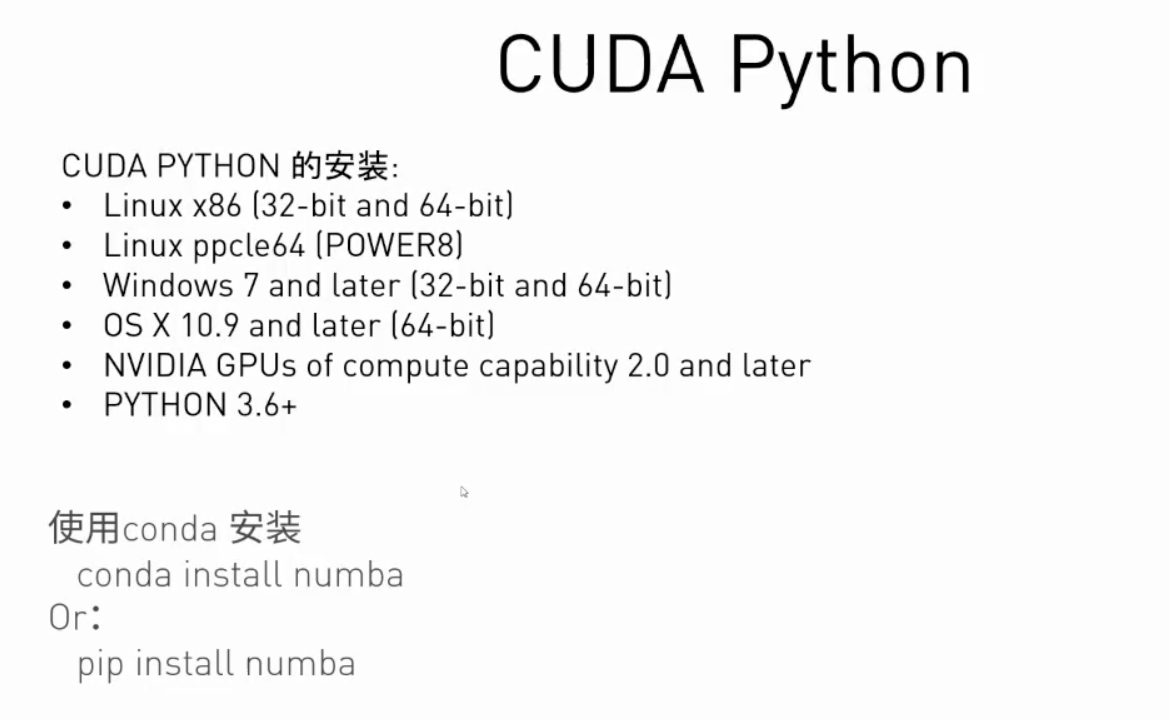

CUDA Python

host: The CPU

device: The GPU

host memory: The system main memory

device memory: Onboard memory on a GPU card

kernel: a GPU function launched by the host and executed on the device

device function: a GPU function executed on the device which can only be called from the device (i.e. from a kernel or another device function)
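The terms above can be tied together in one small Numba sketch. It assumes Numba and NumPy are installed and only runs the GPU part when a CUDA device is available; the names `square`, `square_all` and `run_demo` are invented for this example and are not part of the notes.

```python
try:
    import numpy as np
    from numba import cuda
    HAVE_GPU = cuda.is_available()
except ImportError:  # Numba or NumPy is not installed
    HAVE_GPU = False


def run_demo():
    @cuda.jit(device=True)
    def square(x):
        # Device function: callable only from kernels or other device functions.
        return x * x

    @cuda.jit
    def square_all(src, dst):
        # Kernel: launched by the host, executed by many GPU threads.
        i = cuda.grid(1)      # global index of this thread
        if i < src.size:      # guard threads beyond the end of the array
            dst[i] = square(src[i])

    host_in = np.arange(8, dtype=np.int32)            # lives in host memory
    dev_in = cuda.to_device(host_in)                  # copy host -> device memory
    dev_out = cuda.device_array(host_in.shape, dtype=host_in.dtype)
    square_all[1, 32](dev_in, dev_out)                # 1 block of 32 threads
    return dev_out.copy_to_host()                     # copy device -> host memory


# Skip the GPU work entirely on machines without a usable CUDA device.
result = run_demo() if HAVE_GPU else None
```

The explicit `to_device` and `copy_to_host` calls make the host/device memory boundary visible; Numba can also transfer NumPy arrays automatically, at the cost of extra copies.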

Defining a kernel function:

@cuda.jit('void(int32[:], int32[:])')

def foo(aryA, aryB):

    ...

Calling a kernel function:

griddim = 1,2

blockdim = 3,4

foo[griddim,blockdim](aryA,aryB)
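To see what this launch configuration means, the sketch below enumerates in plain Python the global (x, y) index that each thread would get for a grid of 1×2 blocks with 3×4 threads per block. The helper `launch_coordinates` is hypothetical; it mirrors the usual formula of block index times block size plus thread index, and does not run anything on the GPU.

```python
from itertools import product


def launch_coordinates(griddim, blockdim):
    """Enumerate the global (x, y) index of every thread in a 2D launch."""
    coords = []
    for bx, by in product(range(griddim[0]), range(griddim[1])):
        for tx, ty in product(range(blockdim[0]), range(blockdim[1])):
            # global index = block index * block size + thread index, per axis
            coords.append((bx * blockdim[0] + tx, by * blockdim[1] + ty))
    return coords


griddim = 1, 2
blockdim = 3, 4
coords = launch_coordinates(griddim, blockdim)
thread_count = len(coords)  # 2 blocks of 12 threads each
```

With these values the launch covers a 3×8 index space, so a kernel processing a larger array would need either a bigger grid or a loop inside the kernel.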

Check the CUDA version

nvcc -V
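The same check can be done from Python, which is convenient inside a script that should degrade gracefully when the CUDA toolkit is missing. This is a minimal sketch; the function name `nvcc_version` is an assumption made for this example.

```python
import shutil
import subprocess


def nvcc_version():
    """Return the text printed by `nvcc -V`, or None if nvcc is not on PATH."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    completed = subprocess.run(
        [nvcc, "-V"], capture_output=True, text=True, check=False
    )
    return completed.stdout.strip()


version_text = nvcc_version()
print(version_text if version_text is not None else "nvcc not found on PATH")
```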

Create a file

touch 20200609-python-cuda-cv.py