Preface

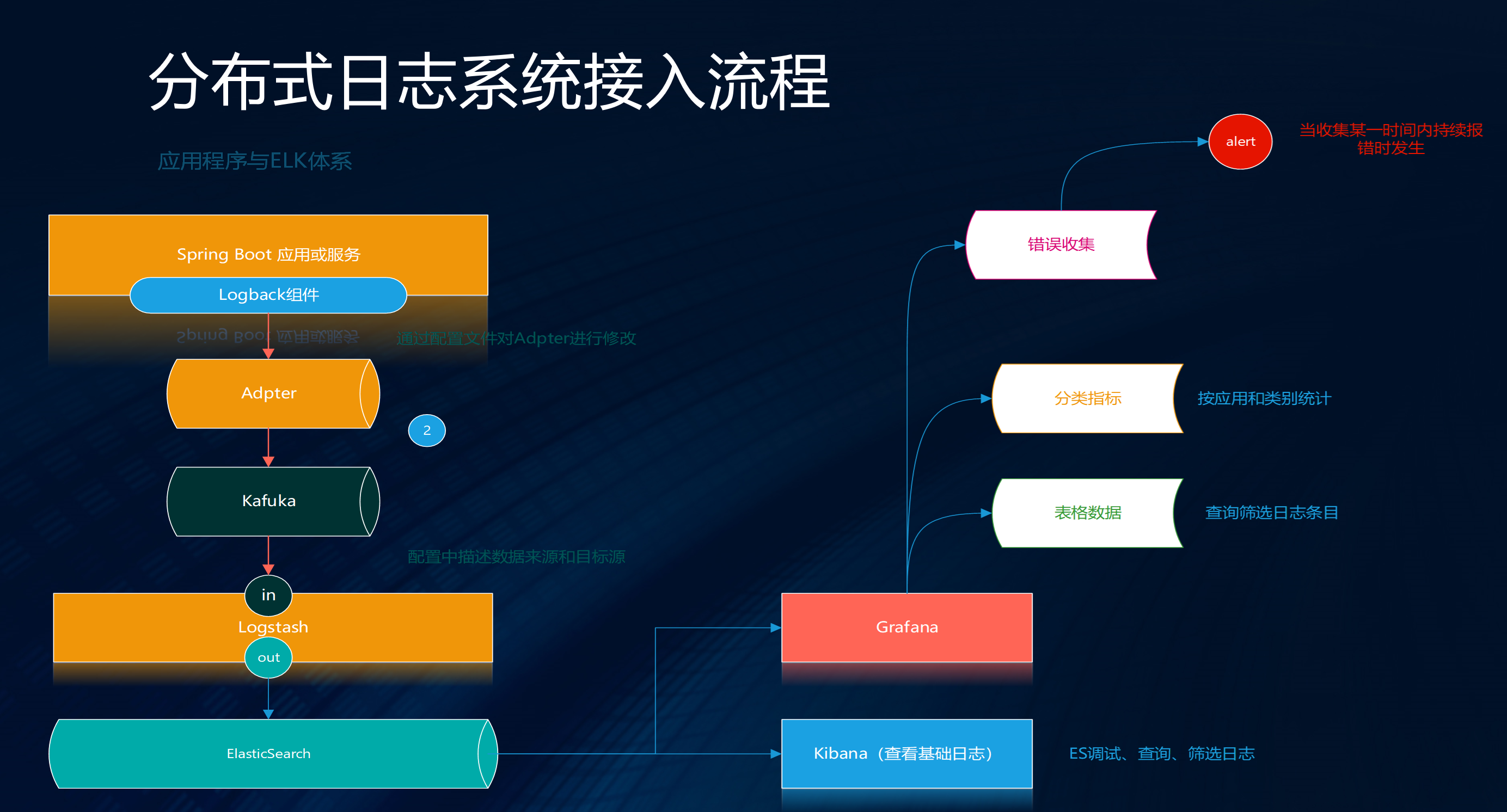

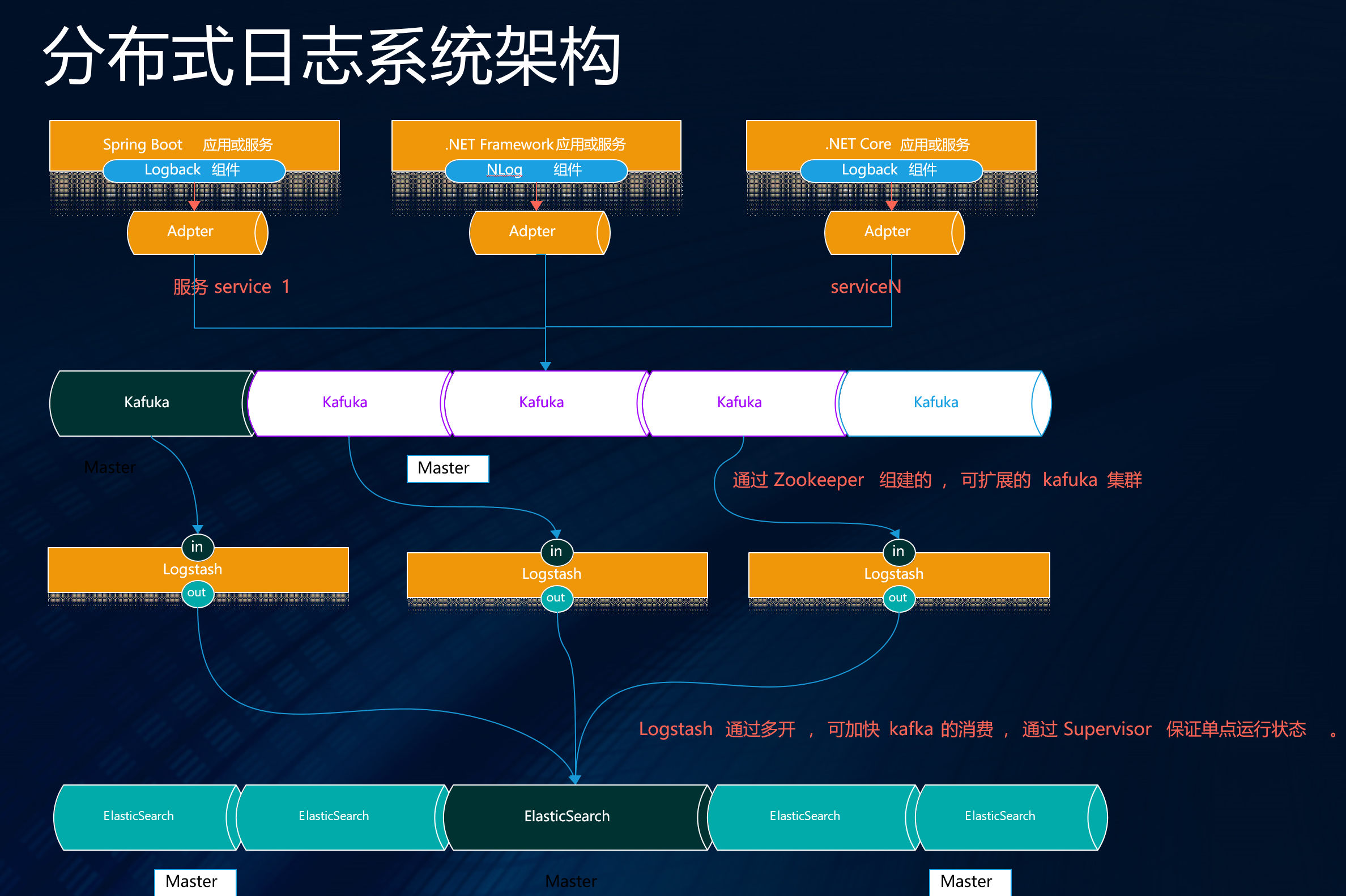

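This post follows on from the previous article, "Microservice Logging: Collecting Logs in .NET Core with NLog via Kafka" (https://www.cnblogs.com/maxzhang1985/p/9522017.html), which covered the .NET/Core implementation. The goal is for the dotnet and Java services in a microservice environment to share a single log collection pipeline.

On the Java side, Spring Boot + Logback can be connected to Kafka for log collection with little effort.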

Spring Boot integration

Maven package management

<dependencyManagement>

<dependencies>

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-core</artifactId>

<version>1.2.3</version>

</dependency>

</dependencies>

</dependencyManagement>

Dependency references:

<dependency>

<groupId>com.github.danielwegener</groupId>

<artifactId>logback-kafka-appender</artifactId>

<version>0.2.0-RC1</version>

</dependency>

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-classic</artifactId>

<version>1.2.3</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>net.logstash.logback</groupId>

<artifactId>logstash-logback-encoder</artifactId>

<version>5.0</version>

</dependency>

logback-spring.xml

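Add a logback-spring.xml configuration file to the resources directory of the Spring Boot project. Note: be sure to change {"appname":"webdemo"}; this value can also be set as a variable in the configuration. STDOUT is the appender used for log output when the Kafka connection fails, so each project should define it to suit its own situation. The appender for regular logs uses the asynchronous delivery strategy to improve performance. The configuration is as follows: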

<appender name="kafkaAppender" class="com.github.danielwegener.logback.kafka.KafkaAppender">

<encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder" >

<customFields>{"appname":"webdemo"}</customFields>

<includeMdc>true</includeMdc>

<includeContext>true</includeContext>

<throwableConverter class="net.logstash.logback.stacktrace.ShortenedThrowableConverter">

<maxDepthPerThrowable>30</maxDepthPerThrowable>

<rootCauseFirst>true</rootCauseFirst>

</throwableConverter>

</encoder>

<topic>loges</topic>

<keyingStrategy class="com.github.danielwegener.logback.kafka.keying.HostNameKeyingStrategy" />

<deliveryStrategy class="com.github.danielwegener.logback.kafka.delivery.AsynchronousDeliveryStrategy" />

<producerConfig>bootstrap.servers=127.0.0.1:9092</producerConfig>

<!-- don't wait for a broker to ack the reception of a batch. -->

<producerConfig>acks=0</producerConfig>

<!-- wait up to 1000ms and collect log messages before sending them as a batch -->

<producerConfig>linger.ms=1000</producerConfig>

<!-- even if the producer buffer runs full, do not block the application but start to drop messages -->

<producerConfig>max.block.ms=0</producerConfig>

<!-- if the Kafka connection fails, fall back to the appender referenced below -->

<appender-ref ref="STDOUT" />

</appender>
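The appender above references a STDOUT appender and a hard-coded appname, neither of which is defined in this post. A minimal sketch of both follows. It assumes the application name is set through spring.application.name, and the variable name appName and the console pattern are illustrative choices, not part of the original configuration. In Logback 1.2.x an appender has to be declared before any appender-ref that points to it, so STDOUT belongs above kafkaAppender in the file.

```xml
<!-- Assumption: the service name comes from spring.application.name; "webdemo" is only the fallback -->
<springProperty name="appName" source="spring.application.name" defaultValue="webdemo" />

<!-- Assumed console appender used as the fallback target; the pattern is illustrative -->
<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
    <encoder>
        <pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n</pattern>
    </encoder>
</appender>

<!-- Inside the Kafka appender, the encoder can then read the variable instead of a literal -->
<encoder class="net.logstash.logback.encoder.LogstashEncoder">
    <customFields>{"appname":"${appName}"}</customFields>
</encoder>
```

The springProperty element is only available in logback-spring.xml, not in a plain logback.xml, because Spring Boot has to process the file itself.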

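Note: again, be sure to change {"appname":"webdemo"}; this value can also be set as a variable in the configuration. If errors and exceptions from third-party frameworks or libraries also need to be written to the log, configure an error appender as follows: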

<appender name="kafkaAppenderERROR" class="com.github.danielwegener.logback.kafka.KafkaAppender">

<encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder" >

<customFields>{"appname":"webdemo"}</customFields>

<includeMdc>true</includeMdc>

<includeContext>true</includeContext>

<throwableConverter class="net.logstash.logback.stacktrace.ShortenedThrowableConverter">

<maxDepthPerThrowable>30</maxDepthPerThrowable>

<rootCauseFirst>true</rootCauseFirst>

</throwableConverter>

</encoder>

<topic>ep_component_log</topic>

<keyingStrategy class="com.github.danielwegener.logback.kafka.keying.HostNameKeyingStrategy" />

<deliveryStrategy class="com.github.danielwegener.logback.kafka.delivery.BlockingDeliveryStrategy">

<!-- wait indefinitely until the kafka producer was able to send the message -->

<timeout>0</timeout>

</deliveryStrategy>

<producerConfig>bootstrap.servers=127.0.0.1:9092</producerConfig>

<!-- don't wait for a broker to ack the reception of a batch. -->

<producerConfig>acks=0</producerConfig>

<!-- wait up to 1000ms and collect log messages before sending them as a batch -->

<producerConfig>linger.ms=1000</producerConfig>

<!-- even if the producer buffer runs full, do not block the application but start to drop messages -->

<producerConfig>max.block.ms=0</producerConfig>

<appender-ref ref="STDOUT" />

<filter class="ch.qos.logback.classic.filter.LevelFilter"><!-- only accept ERROR-level events -->

<level>ERROR</level>

<onMatch>ACCEPT</onMatch>

<onMismatch>DENY</onMismatch>

</filter>

</appender>

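The error appender uses the synchronous (blocking) delivery strategy so that error logs are collected reliably. Adjust this to the needs of your own project.

Logging configuration recommendations:

Attaching the error appender to the root logger is enough to capture the exception logs of third-party frameworks: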

<root level="INFO">

<appender-ref ref="kafkaAppenderERROR" />

</root>

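For regular log levels, it is advisable to send only the logs of your own application. A configuration for reference only:

<logger name="your.project.package" additivity="false">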

<appender-ref ref="STDOUT" />

<appender-ref ref="kafkaAppender" />

</logger>
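To show how the fragments in this post fit together, here is a sketch of the overall layout of logback-spring.xml. The package name com.example.webdemo is a hypothetical placeholder, and the comments stand in for the appender definitions given earlier. The ordering matters in Logback 1.2.x: each appender must appear before the root or logger element that references it.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration>

    <!-- 1. STDOUT appender (fallback target), defined first -->

    <!-- 2. kafkaAppender (asynchronous delivery, regular logs) -->

    <!-- 3. kafkaAppenderERROR (blocking delivery, ERROR only) -->

    <!-- 4. Root logger: third-party output reaches Kafka only at ERROR level,
            because kafkaAppenderERROR filters everything else out -->
    <root level="INFO">
        <appender-ref ref="kafkaAppenderERROR" />
    </root>

    <!-- 5. Application logger; com.example.webdemo is a hypothetical package name.
            additivity="false" keeps these events from also going to the root logger -->
    <logger name="com.example.webdemo" additivity="false">
        <appender-ref ref="STDOUT" />
        <appender-ref ref="kafkaAppender" />
    </logger>

</configuration>
```

One consequence of additivity="false" is that events from the application package never reach kafkaAppenderERROR, so application errors land in the regular topic rather than the error topic.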

Finally

GitHub: https://github.com/maxzhang1985/YOYOFx If you find it useful, please give it a Star. Discussion is welcome.

.NET Core open-source study group: 214741894