Programming Guides

1.1 Programming Model

1.1.1 Kernels

CUDA C++ extends C++ by allowing the programmer to define C++ functions, called kernels, that, when called, are executed N times in parallel by N different CUDA threads, as opposed to only once like regular C++ functions.

A kernel is defined using the __global__ declaration specifier and the number of CUDA threads that execute that kernel for a given kernel call is specified using a new <<<...>>> execution configuration syntax. Each thread that executes the kernel is given a unique thread ID that is accessible within the kernel through built-in variables.

As an illustration, the following sample code, using the built-in variable threadIdx, adds two vectors A and B of size N and stores the result into vector C:

// Kernel definition

__global__ void VecAdd(float* A, float* B, float* C) //kernel 定义在GPU上执行的函数

{

int i = threadIdx.x;

C[i] = A[i] + B[i];

}

int main()

{

...

// Kernel invocation with N threads

VecAdd<<<1, N>>>(A, B, C); //kernel 调用

...

}

1.1.2 Thread Hierarchy

For convenience, threadIdx is a 3-component vector, so that threads can be identified using a one-dimensional, two-dimensional, or three-dimensional thread index, forming a one-dimensional, two-dimensional, or three-dimensional block of threads, called a thread block. This provides a natural way to invoke computation across the elements in a domain such as a vector, matrix, or volume.

The index of a thread and its thread ID relate to each other in a straightforward way: For a one-dimensional block, they are the same; for a two-dimensional block of size (Dx, Dy),the thread ID of a thread of index (x, y) is (x + y Dx); for a three-dimensional block of size (Dx, Dy, Dz), the thread ID of a thread of index (x, y, z) is (x + y Dx + z Dx Dy).

As an example, the following code adds two matrices A and B of size NxN and stores the result into matrix C:

// Kernel definition

__global__ void MatAdd(float A[N][N], float B[N][N],

float C[N][N])

{

int i = threadIdx.x;

int j = threadIdx.y;

C[i][j] = A[i][j] + B[i][j];

}

int main()

{

...

// Kernel invocation with 【one block】 of N * N * 1 threads

int numBlocks = 1;

dim3 threadsPerBlock(N, N);

MatAdd<<<numBlocks, threadsPerBlock>>>(A, B, C);

...

}

There is a limit to the number of threads per block, since all threads of a block are expected to reside on the same processor core and must share the limited memory resources of that core. On current GPUs, a thread block may contain up to 1024 threads.

However, a kernel can be executed by multiple equally-shaped thread blocks, so that the total number of threads is equal to the number of threads per block times the number of blocks.

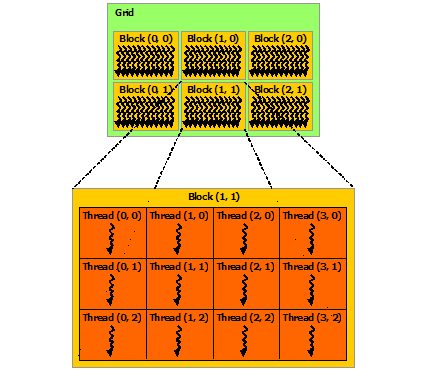

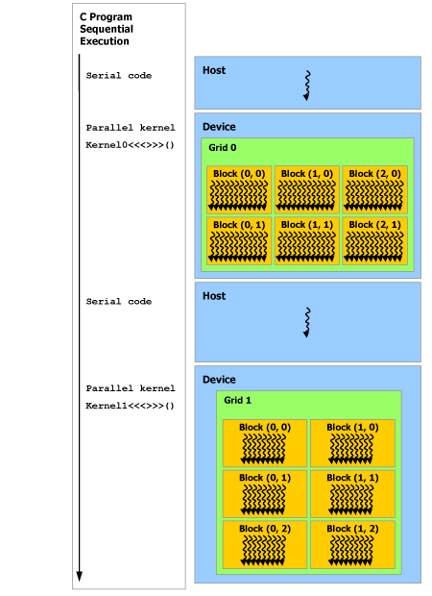

Blocks are organized into a one-dimensional, two-dimensional, or three-dimensional grid of thread blocks as illustrated by Figure. The number of thread blocks in a grid is usually dictated by the size of the data being processed, which typically exceeds the number of processors in the system.

The number of threads per block and the number of blocks per grid specified in the <<<...>>> syntax can be of type int or dim3. Two-dimensional blocks or grids can be specified as in the example above.

Each block within the grid can be identified by a one-dimensional, two-dimensional, or three-dimensional unique index accessible within the kernel through the built-in blockIdx variable. The dimension of the thread block is accessible within the kernel through the built-in blockDim variable.

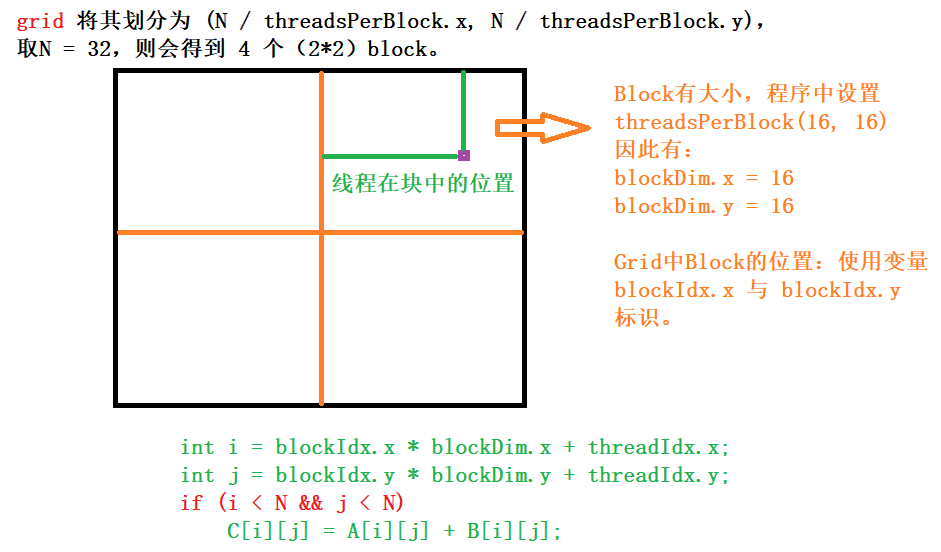

Extending the previous MatAdd() example to handle multiple blocks, the code becomes as follows.

// Kernel definition

__global__ void MatAdd(float A[N][N], float B[N][N],

float C[N][N])

{

int i = blockIdx.x * blockDim.x + threadIdx.x;

int j = blockIdx.y * blockDim.y + threadIdx.y;

if (i < N && j < N)

C[i][j] = A[i][j] + B[i][j];

}

int main()

{

...

// Kernel invocation

dim3 threadsPerBlock(16, 16);

dim3 numBlocks(N / threadsPerBlock.x, N / threadsPerBlock.y);

MatAdd<<<numBlocks, threadsPerBlock>>>(A, B, C);

...

}

执行配置运算符

<<< >>>,用来传递内核函数的执行参数。执行配置有四个参数,第一个参数声明网格的大小,第二个参数声明块的大小,第三个参数声明动态分配的共享存储器大小,默认为 0,最后一个参数声明执行的流,默认为 0。

A thread block size of 16x16 (256 threads), although arbitrary in this case, is a common choice. The grid is created with enough blocks to have one thread per matrix element as before(创建网格时使用了足够多的块,这样每个矩阵元素就有一个线程). For simplicity, this example assumes that the number of threads per grid in each dimension is evenly divisible by the number of threads per block in that dimension, although that need not be the case(为简单起见,本示例假设每个维度中每个网格的线程数可以被该维度中每个块的线程数均匀地整除,但实际情况并非如此).

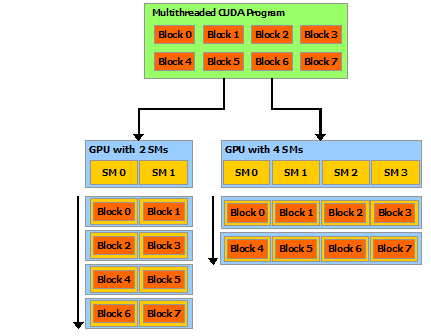

Thread blocks are required to execute independently: It must be possible to execute them in any order, in parallel or in series. This independence requirement allows thread blocks to be scheduled in any order across any number of cores as illustrated by Figure, enabling programmers to write code that scales with the number of cores.

Threads within a block can cooperate by sharing data through some shared memory and by synchronizing their execution to coordinate memory accesses(块中的线程可以通过共享内存共享数据,并通过同步它们的执行来协调内存访问). More precisely, one can specify synchronization points in the kernel by calling the __syncthreads() intrinsic function; __syncthreads() acts as a barrier at which all threads in the block must wait before any is allowed to proceed(充当一个屏障,在允许任何线程继续执行之前,块中的所有线程都必须等待).

For efficient cooperation, the shared memory is expected to be a low-latency memory near each processor core (much like an L1 cache) and __syncthreads() is expected to be lightweight.

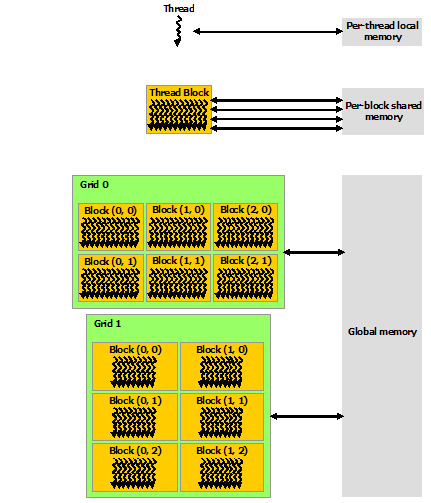

1.1.3 Memory Hierarchy

CUDA threads may access data from multiple memory spaces during their execution as illustrated by Figure. Each thread has private local memory. Each thread block has shared memory visible to all threads of the block and with the same lifetime as the block. All threads have access to the same global memory.

There are also two additional read-only memory spaces accessible by all threads: the constant and texture memory spaces. The global, constant, and texture memory spaces are optimized for different memory usages. Texture memory also offers different addressing modes, as well as data filtering, for some specific data formats.

The global, constant, and texture memory spaces are persistent across kernel launches by the same application(全局内存空间、常量内存空间和纹理内存空间在同一应用程序的内核启动之间是持久的。).

1.1.4 Heterogeneous Programming

As illustrated by Figure, the CUDA programming model assumes that the CUDA threads execute on a physically separate device that operates as a coprocessor to the host running the C++ program. This is the case, for example, when the kernels execute on a GPU and the rest of the C++ program executes on a CPU.

The CUDA programming model also assumes that both the host and the device maintain their own separate memory spaces in DRAM, referred to as host memory and device memory, respectively. Therefore, a program manages the global, constant, and texture memory spaces visible to kernels through calls to the CUDA runtime. This includes device memory allocation and deallocation as well as data transfer between host and device memory.

Unified Memory provides managed memory to bridge the host and device memory spaces. Managed memory is accessible from all CPUs and GPUs in the system as a single, coherent memory image with a common address space. This capability enables oversubscription of device memory and can greatly simplify the task of porting applications by eliminating the need to explicitly mirror data on host and device. See Unified Memory Programming for an introduction to Unified Memory.

v11.0.3