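Before writing this article, I had already set up the Spark cluster and tested it successfully.

Link to the Spark cluster setup article: http://www.cnblogs.com/mmzs/p/8193707.html

I. Starting the environment

Starting everything by hand each time is tedious, so I wrote a few simple startup scripts. The first is run as the root user; the second is run as the hadoop user.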

#!/bin/bash
# Prompt for the current time (format: 09:30:56) and store the input in the variable nowdate
read -t 30 -p "Enter the current time, format '09:30:56': " nowdate
echo
echo "Current time: $nowdate"
# Synchronize the time on every node
echo "Synchronizing time..."
for node in master01 slave01 slave02 slave03; do
  ssh "$node" "date -s '$nowdate'"
done
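Because the script passes whatever was typed straight to `date -s` on every node, it may help to validate the input first. The following is a minimal sketch under that assumption; `is_valid_time` is a hypothetical helper and is not part of the original script.

```bash
#!/bin/bash
# is_valid_time is a hypothetical helper, not part of the original script.
# It accepts HH:MM:SS with hours 00-23 and minutes/seconds 00-59.
is_valid_time() {
  case "$1" in
    [01][0-9]:[0-5][0-9]:[0-5][0-9] | 2[0-3]:[0-5][0-9]:[0-5][0-9]) return 0 ;;
    *) return 1 ;;
  esac
}

# Example: check a value before handing it to `date -s` on each node.
nowdate="09:30:56"
if is_valid_time "$nowdate"; then
  echo "ok: $nowdate"
else
  echo "invalid time: $nowdate" >&2
fi
```

In the sync script, the check would sit between the `read` and the `for` loop, so that a typo does not set a wrong clock on all four nodes.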

#!/bin/bash
# Start the ZooKeeper cluster
# Start the QuorumPeerMain process on slave01
ssh hadoop@slave01 << remotessh
cd /software/zookeeper-3.4.10/bin/
./zkServer.sh start
jps
exit
remotessh
# Start the QuorumPeerMain process on slave02
ssh hadoop@slave02 << remotessh
cd /software/zookeeper-3.4.10/bin/
./zkServer.sh start
jps
exit
remotessh
# Start the QuorumPeerMain process on slave03
ssh hadoop@slave03 << remotessh
cd /software/zookeeper-3.4.10/bin/
./zkServer.sh start
jps
exit
remotessh
# Start the HDFS cluster
cd /software/ && start-dfs.sh && jps
# Start the YARN cluster
#cd /software/ && start-yarn.sh && jps
# Start the Spark cluster
# Start the Master process on master01 and the Worker processes on the slave nodes
cd /software/spark-2.1.1/sbin/ && ./start-master.sh && ./start-slaves.sh && jps
# Start the Master process on master02
ssh hadoop@master02 << remotessh
cd /software/spark-2.1.1/sbin/
./start-master.sh
jps
exit
remotessh
# Spark history server; usually left off because it uses a lot of resources
#cd /software/spark-2.1.1/sbin/ && ./start-history-server.sh && cd - && jps

#!/bin/bash
# Start the ZooKeeper cluster
for node in hadoop@slave01 hadoop@slave02 hadoop@slave03; do
  ssh $node "source /etc/profile; cd /software/zookeeper-3.4.10/bin/; ./zkServer.sh start; jps"
done
# Start the HDFS cluster
cd /software/ && start-dfs.sh && jps
# Start the YARN cluster
#cd /software/ && start-yarn.sh && jps
# Start the Spark cluster
# Start the Master process on master01 and the Worker processes on the slave nodes
cd /software/spark-2.1.1/sbin/ && ./start-master.sh && ./start-slaves.sh && jps
# Start the Master process on master02
ssh hadoop@master02 << remotessh
cd /software/spark-2.1.1/sbin/
./start-master.sh
jps
exit
remotessh
# Spark history server; usually left off because it uses a lot of resources
#cd /software/spark-2.1.1/sbin/ && ./start-history-server.sh && cd - && jps
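The startup scripts print `jps` output after each step, but someone still has to read it to see whether a daemon is missing. The sketch below shows one way that check could be automated. `missing_daemons` is a hypothetical helper, and the PIDs in the example are made up.

```bash
#!/bin/bash
# missing_daemons is a hypothetical helper: it reads `jps` output on stdin
# and prints every expected daemon name (given as arguments) that is absent.
missing_daemons() {
  jps_output=$(cat)
  for name in "$@"; do
    # jps prints "<pid> <MainClass>", so compare the second field exactly
    if ! printf '%s\n' "$jps_output" |
      awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "$name"
    fi
  done
}

# Example with canned jps output (the PIDs are invented for illustration)
printf '2101 QuorumPeerMain\n2250 Worker\n2399 Jps\n' |
  missing_daemons QuorumPeerMain Worker Master
```

Against a real node the input would come from something like `ssh hadoop@slave01 "source /etc/profile; jps"`, piped into `missing_daemons QuorumPeerMain Worker`.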

#!/bin/sh
# Stop the Spark services on the master nodes
for node in master01 master02; do
  ssh $node "cd /software/spark-2.1.1/sbin/; ./stop-slaves.sh; ./stop-master.sh; ./stop-history-server.sh"
done
# Stop the YARN cluster
#cd /software/hadoop-2.7.3/sbin && stop-yarn.sh && cd - && jps
# Stop the HDFS cluster
cd /software/hadoop-2.7.3/sbin && stop-dfs.sh && cd - && jps
# Stop the ZooKeeper cluster
for node in slave01 slave02 slave03; do
  ssh $node "cd /software/zookeeper-3.4.10/bin/; ./zkServer.sh stop"
done

II. Test preparation

1. Create the Spark input path on the HDFS cluster

[hadoop@master01 software]$ hdfs dfs -mkdir -p /spark/input

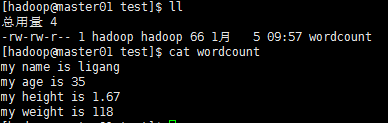

2. Create the data locally and upload it to the input path on the cluster

[hadoop@master01 test]$ hdfs dfs -put wordcount /spark/input
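The post does not show what the local `wordcount` file contains. As a sketch, the file could be created as below; the three sample lines are an assumption chosen for illustration. The upload only runs when an `hdfs` client is found on the PATH.

```bash
#!/bin/bash
# Create a small sample input file named "wordcount" (the contents are made up).
cat > wordcount <<'EOF'
word1 word2 word
word1 word3 word
word1 word4 word
EOF

# Upload only when the hdfs client is available, so the snippet is safe to run anywhere.
# -f overwrites an existing copy in the target directory.
if command -v hdfs >/dev/null 2>&1; then
  hdfs dfs -put -f wordcount /spark/input
fi

# Print the number of lines written locally
wc -l < wordcount
```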


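III. Running the commands to start the computation

spark-submit: submits a Spark job application

spark-shell: Spark's interactive command-line tool

A. Spark standalone mode:

1. Run spark-shell in standalone mode, with the master parameter set to spark://master01:7077

# Run the spark-shell test on node master01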

[hadoop@master01 install]$ cd /software/spark-2.1.1/bin/

[hadoop@master01 bin]$ ./spark-shell --master spark://master01:7077

scala> val mapRdd=sc.textFile("/spark/input").flatMap(_.split(" ")).map(word=>(word,1)).reduceByKey(_+_).map(entry=>(entry._2,entry._1))

mapRdd: org.apache.spark.rdd.RDD[(Int, String)] = MapPartitionsRDD[5] at map at <console>:24

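# In my tests, setting the parallelism of the sort below to 2 exhausted memory: each Executor needed at least about 480 MB, and a parallelism of 2 roughly doubled the memory required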

scala> val sortRdd=mapRdd.sortByKey(false,1)

sortRdd: org.apache.spark.rdd.RDD[(Int, String)] = ShuffledRDD[6] at sortByKey at <console>:26

scala> val mapRdd2=sortRdd.map(entry=>(entry._2,entry._1))

mapRdd2: org.apache.spark.rdd.RDD[(String, Int)] = MapPartitionsRDD[7] at map at <console>:28

# The save step below also consumes a lot of memory

scala> mapRdd2.saveAsTextFile("/spark/output")

scala> :quit

// Or, as a single chained expression:
sc.textFile("/spark/input").flatMap(_.split(" ")).map(word=>(word,1)).reduceByKey(_+_).map(entry=>(entry._2,entry._1)).sortByKey(false,1).map(entry=>(entry._2,entry._1)).saveAsTextFile("/spark/output")

Step by step, the data flows as follows:
sc (Spark context available as 'sc')
textFile (read the file): line1, line2, line3
_.split(" ") (split each line into words): [[word1,word2,word],[word1,word3,word],[word1,word4,word]]
flatMap (flatten): [word1,word2,word,word1,word3,word,word1,word4,word]
map (pair each word with 1): (word1,1),(word2,1),(word,1),(word1,1),(word3,1),(word,1),(word1,1),(word4,1),(word,1)
reduceByKey (sum the counts per key): [(word1,3),(word2,1),(word,3),(word3,1),(word4,1)]
map (swap key and value): [(3,word1),(1,word2),(3,word),(1,word3),(1,word4)]
sortByKey (sort by key; false means descending. In my tests a parallelism of 2 exhausted memory here, because each Executor needed at least about 480 MB and a parallelism of 2 roughly doubled the memory required): [(3,word1),(3,word),(1,word2),(1,word3),(1,word4)]
map (swap key and value back): [(word1,3),(word,3),(word2,1),(word3,1),(word4,1)]
saveAsTextFile (save to the given directory)
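To sanity-check what the Spark job should produce on a small file, the same steps can be approximated locally with standard Unix tools. This is only a sketch: `wordcount_local` is a hypothetical helper, it is not how Spark executes the job, and ties are ordered alphabetically here, whereas Spark makes no promise about the order of equal keys.

```bash
#!/bin/bash
# wordcount_local is a hypothetical local stand-in for the Spark pipeline above.
# It reads text on stdin and prints (word,count) pairs, highest count first.
wordcount_local() {
  tr -s ' ' '\n' |                        # flatMap(_.split(" ")): one word per line
    LC_ALL=C sort |                       # bring identical words together
    uniq -c |                             # map + reduceByKey: count per word
    LC_ALL=C sort -k1,1nr -k2,2 |         # sortByKey(false): descending count, ties by word
    awk '{ printf "(%s,%s)\n", $2, $1 }'  # swap back to (word,count)
}

# Example input matching the three lines used in the walkthrough
printf 'word1 word2 word\nword1 word3 word\nword1 word4 word\n' | wordcount_local
```

For a real comparison, pipe the local input file into the function and compare the result with `hdfs dfs -cat /spark/output/part-00000`.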

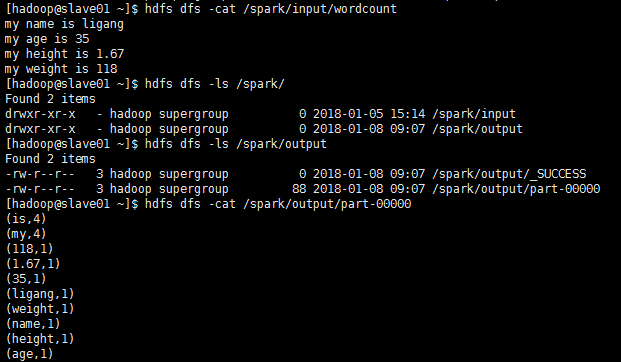

# Check whether the output data now exists on the HDFS cluster

[hadoop@master01 ~]$ hdfs dfs -ls /spark/output

[hadoop@master01 ~]$ hdfs dfs -cat /spark/output/part-00000

2. Run spark-submit in standalone mode, with the master parameter set to spark://master01:7077

[hadoop@master01 bin]$ spark-submit --class org.apache.spark.examples.JavaSparkPi --master spark://master01:7077 ../examples/jars/spark-examples_2.11-2.1.1.jar 1

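Result of the Pi run

Notes:

--class specifies the class that contains the main method to run (in Scala, this is usually a singleton defined with object)

--master specifies the Master node the job is submitted to (the Master is the main process specific to standalone mode and manages the Worker processes in the cluster; in YARN mode the job is submitted to the RM (ResourceManager) node instead)

../examples/jars/spark-examples_2.11-2.1.1.jar is the packaged jar of the job submitted to the Spark cluster, the same way jobs are submitted to Hadoop

The final number 1 is the argument passed to the main method: the number of slices (that is, the parallelism). Each slice is run as one task, and tasks execute as threads inside an executor JVM rather than each in its own JVM process. The detailed log printed by the job submission contains the line "Pi is roughly 3.13976", which is the value of Pi computed by the submitted Spark job.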
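To make the role of the slices argument concrete, the sampling idea behind the Pi example can be reproduced locally. The sketch below assumes the example's approach of drawing 100000 random points per slice in the square from -1 to 1 and counting those inside the unit circle. `estimate_pi` is a hypothetical helper that runs in a single awk process, so it illustrates the arithmetic only, not the distribution across tasks.

```bash
#!/bin/bash
# estimate_pi is a hypothetical helper that mimics the sampling idea locally with awk.
# $1 = number of slices; 100000 points are drawn per slice.
estimate_pi() {
  awk -v slices="$1" 'BEGIN {
    srand(42)                  # fixed seed so repeated runs on one machine agree
    n = 100000 * slices
    for (i = 0; i < n; i++) {
      x = 2 * rand() - 1       # random point in the square [-1, 1) x [-1, 1)
      y = 2 * rand() - 1
      if (x * x + y * y <= 1) inside++
    }
    # The fraction of points inside the unit circle approximates pi / 4
    printf "Pi is roughly %.5f\n", 4 * inside / n
  }'
}

estimate_pi 1
```

More slices mean more samples, so the estimate tends to get closer to 3.14159, at the cost of more tasks to schedule on the cluster.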

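Summary:

spark-shell and spark-submit accept the same parameters. If a default master is configured, --master can be omitted when running either tool, and a --master given on the command line overrides the configured value. My setup used export SPARK_MASTER_IP=master01 for this. Note that in Spark 2.x this variable only sets the address the standalone Master binds to; the default that both tools read is the spark.master property in conf/spark-defaults.conf, and without it they fall back to local[*].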
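The precedence described here can be sketched as a small function. `resolve_master` is a hypothetical illustration and not Spark's own code. It assumes the order is an explicit --master first, then a configured default, then Spark's `local[*]` fallback.

```bash
#!/bin/bash
# resolve_master is a hypothetical illustration of the precedence, not Spark's own code.
# $1 = value passed via --master (may be empty), $2 = configured default (may be empty)
resolve_master() {
  if [ -n "$1" ]; then
    echo "$1"          # an explicit --master always wins
  elif [ -n "$2" ]; then
    echo "$2"          # otherwise use the configured default
  else
    echo "local[*]"    # fallback when nothing is configured
  fi
}

resolve_master "yarn" "spark://master01:7077"   # prints: yarn
resolve_master "" "spark://master01:7077"       # prints: spark://master01:7077
resolve_master "" ""                            # prints: local[*]
```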

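Launching a complex job can produce the error shown below. I suggest either splitting a complex chained operation into separate steps to reduce memory use, or adjusting Spark's memory parameters along the lines of the earlier configuration:

# Spark supports customizable memory management
export SPARK_WORKER_MEMORY=200m
# One Worker node corresponds to one Executor; one Executor runs multiple Tasks
export SPARK_EXECUTOR_MEMORY=100m   # Spark 2.x needs at least 500m
export SPARK_DRIVER_MEMORY=100m     # Spark 2.x needs at least 500m
# Number of CPU cores on a Spark compute node (this setting affects the number of concurrent threads started)
export SPARK_WORKER_CORES=2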

The error reported is:

scala> 17/08/09 10:14:55 WARN scheduler.TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

17/08/09 10:15:11 WARN scheduler.TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

Note: testing showed that this error mainly occurs during the sortByKey sort and the saveAsTextFile save operation
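This warning generally means that no registered worker can satisfy the resources the job asked for, so a quick comparison of the configured sizes can save a debugging round. The sketch below uses two hypothetical helpers, `to_mb` and `check_memory`, which are not part of Spark. The 500 MB minimum is the figure quoted in the configuration comments above and is passed in as a parameter rather than hard-coded.

```bash
#!/bin/bash
# to_mb converts a Spark-style size such as 200m or 1g to megabytes.
# Only the m and g suffixes are handled in this sketch.
to_mb() {
  case "$1" in
    *[mM]) echo "${1%[mM]}" ;;
    *[gG]) echo $(( ${1%[gG]} * 1024 )) ;;
    *) echo "unsupported size: $1" >&2; return 1 ;;
  esac
}

# check_memory is a hypothetical helper.
# $1 = worker memory, $2 = executor memory, $3 = minimum executor memory in MB
check_memory() {
  worker=$(to_mb "$1") || return 1
  executor=$(to_mb "$2") || return 1
  if [ "$executor" -lt "$3" ]; then
    echo "executor memory ${executor}m is below the ${3}m minimum"
  elif [ "$executor" -gt "$worker" ]; then
    echo "executor memory ${executor}m exceeds worker memory ${worker}m"
  else
    echo "ok"
  fi
}

check_memory 200m 100m 500   # the values from the configuration above
check_memory 1g 512m 500     # a combination that passes both checks
```

With the values from the configuration above, the first call reports that 100m is below the minimum, which is consistent with the job never being assigned resources.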

B. Spark on YARN mode:

First stop all Master and Worker processes from standalone mode, because YARN mode does not need them

[hadoop@master01 bin]$ cd /install/

[hadoop@master01 install]$ sh stop-total.sh

Uncomment the YARN startup line (the start-yarn.sh line) in the startup script

[hadoop@master01 install]$ sh start-total.sh

[hadoop@master02 ~]$ cd /software/hadoop-2.7.3/sbin/

[hadoop@master02 sbin]$ yarn-daemon.sh start resourcemanager

starting resourcemanager, logging to /software/hadoop-2.7.3/logs/yarn-hadoop-resourcemanager-master02.out

# Delete the old output directory

[hadoop@master01 install]$ hdfs dfs -rm -r /spark/output

3. Run the spark-shell interactive command line in Spark on YARN mode; note that the --master parameter must be yarn

# Run the spark-shell test on node master01

[hadoop@master01 install]$ cd /software/spark-2.1.1/bin/

[hadoop@master01 bin]$ ./spark-shell --master yarn

scala> sc.textFile("/spark/input").flatMap(_.split(" ")).map(word=>(word,1)).reduceByKey(_+_).map(entry=>(entry._2,entry._1)).sortByKey(false,1).map(entry=>(entry._2,entry._1)).saveAsTextFile("/spark/output")

scala> :q

4. Running spark-submit in YARN mode requires --master yarn (the --deploy-mode parameter defaults to client)

[hadoop@CloudDeskTop bin]$ ./spark-submit --class org.apache.spark.examples.JavaSparkPi --master yarn ../examples/jars/spark-examples_2.11-2.1.1.jar 1

[hadoop@master01 bin]$ ./spark-submit --class org.apache.spark.examples.JavaSparkPi --master yarn ../examples/jars/spark-examples_2.11-2.1.1.jar 1
18/01/08 10:09:23 INFO spark.SparkContext: Running Spark version 2.1.1
18/01/08 10:09:23 WARN spark.SparkContext: Support for Java 7 is deprecated as of Spark 2.0.0
18/01/08 10:09:26 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
18/01/08 10:09:27 INFO spark.SecurityManager: Changing view acls to: hadoop
18/01/08 10:09:27 INFO spark.SecurityManager: Changing modify acls to: hadoop
18/01/08 10:09:27 INFO spark.SecurityManager: Changing view acls groups to:
18/01/08 10:09:27 INFO spark.SecurityManager: Changing modify acls groups to:
18/01/08 10:09:27 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()
18/01/08 10:09:29 INFO util.Utils: Successfully started service 'sparkDriver' on port 55577.
18/01/08 10:09:29 INFO spark.SparkEnv: Registering MapOutputTracker
18/01/08 10:09:29 INFO spark.SparkEnv: Registering BlockManagerMaster
18/01/08 10:09:29 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information
18/01/08 10:09:29 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up
18/01/08 10:09:29 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-0202746a-8adc-4cac-8789-7fe9b8a03242
18/01/08 10:09:29 INFO memory.MemoryStore: MemoryStore started with capacity 366.3 MB
18/01/08 10:09:30 INFO spark.SparkEnv: Registering OutputCommitCoordinator
18/01/08 10:09:30 INFO util.log: Logging initialized @15055ms
18/01/08 10:09:31 INFO server.Server: jetty-9.2.z-SNAPSHOT
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@55a36fe{/jobs,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@65d06070{/jobs/json,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@536b8d48{/jobs/job,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3a089cc1{/jobs/job/json,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@b6b60ab{/stages,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@36978068{/stages/json,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@146f47d8{/stages/stage,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@15d5c063{/stages/stage/json,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@449aec8{/stages/pool,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@171ceab{/stages/pool/json,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@56b73d4a{/storage,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1818f1c0{/storage/json,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@da7809c{/storage/rdd,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6fec88c4{/storage/rdd/json,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@12073544{/environment,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@173a0c9b{/environment/json,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6411a006{/executors,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@41211d3d{/executors/json,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6506b132{/executors/threadDump,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1a5b7d6f{/executors/threadDump/json,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6c880fed{/static,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@58cdc845{/,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2e17578f{/api,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7e7584ec{/jobs/job/kill,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5569e2d0{/stages/stage/kill,null,AVAILABLE,@Spark}
18/01/08 10:09:31 INFO server.ServerConnector: Started Spark@4dd5b173{HTTP/1.1}{0.0.0.0:4040}
18/01/08 10:09:31 INFO server.Server: Started @15508ms
18/01/08 10:09:31 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.
18/01/08 10:09:31 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://192.168.154.130:4040
18/01/08 10:09:31 INFO spark.SparkContext: Added JAR file:/software/spark-2.1.1/bin/../examples/jars/spark-examples_2.11-2.1.1.jar at spark://192.168.154.130:55577/jars/spark-examples_2.11-2.1.1.jar with timestamp 1515377371427
18/01/08 10:09:50 INFO yarn.Client: Requesting a new application from cluster with 3 NodeManagers
18/01/08 10:09:50 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)
18/01/08 10:09:50 INFO yarn.Client: Will allocate AM container, with 896 MB memory including 384 MB overhead
18/01/08 10:09:50 INFO yarn.Client: Setting up container launch context for our AM
18/01/08 10:09:50 INFO yarn.Client: Setting up the launch environment for our AM container
18/01/08 10:09:50 INFO yarn.Client: Preparing resources for our AM container
18/01/08 10:09:55 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.
18/01/08 10:10:14 INFO yarn.Client: Uploading resource file:/tmp/spark-e0070b97-4026-422a-a3be-bb9b17a864f4/__spark_libs__3039298495540143983.zip -> hdfs://ns1/user/hadoop/.sparkStaging/application_1515375319672_0003/__spark_libs__3039298495540143983.zip
18/01/08 10:11:02 INFO yarn.Client: Uploading resource file:/tmp/spark-e0070b97-4026-422a-a3be-bb9b17a864f4/__spark_conf__3013148491659653782.zip -> hdfs://ns1/user/hadoop/.sparkStaging/application_1515375319672_0003/__spark_conf__.zip
18/01/08 10:11:03 INFO spark.SecurityManager: Changing view acls to: hadoop
18/01/08 10:11:03 INFO spark.SecurityManager: Changing modify acls to: hadoop
18/01/08 10:11:03 INFO spark.SecurityManager: Changing view acls groups to:
18/01/08 10:11:03 INFO spark.SecurityManager: Changing modify acls groups to:
18/01/08 10:11:03 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()
18/01/08 10:11:03 INFO yarn.Client: Submitting application application_1515375319672_0003 to ResourceManager
18/01/08 10:11:03 INFO impl.YarnClientImpl: Submitted application application_1515375319672_0003
18/01/08 10:11:03 INFO cluster.SchedulerExtensionServices: Starting Yarn extension services with app application_1515375319672_0003 and attemptId None
18/01/08 10:11:04 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:04 INFO yarn.Client:
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: N/A
     ApplicationMaster RPC port: -1
     queue: default
     start time: 1515377463128
     final status: UNDEFINED
     tracking URL: http://master01:8088/proxy/application_1515375319672_0003/
     user: hadoop
18/01/08 10:11:05 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:06 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:07 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:08 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:09 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:10 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:11 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:12 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:13 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:14 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:15 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:16 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:17 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:18 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:19 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:20 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:21 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:22 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:23 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:24 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:25 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:26 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:27 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:28 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:29 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:30 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:31 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:32 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:33 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:34 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:35 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:36 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:37 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:38 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:39 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:40 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:41 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:42 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:43 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:44 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:45 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:46 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:47 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:48 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:49 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:50 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:51 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:52 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:53 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:54 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:55 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:56 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:57 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:58 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:11:59 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:12:00 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:12:01 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:12:02 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:12:03 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:12:04 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:12:05 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:12:06 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:12:07 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:12:08 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:12:09 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:12:10 INFO cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as NettyRpcEndpointRef(null)
18/01/08 10:12:10 INFO cluster.YarnClientSchedulerBackend: Add WebUI Filter. org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter, Map(PROXY_HOSTS -> slave01, PROXY_URI_BASES -> http://slave01:8088/proxy/application_1515375319672_0003), /proxy/application_1515375319672_0003
18/01/08 10:12:10 INFO ui.JettyUtils: Adding filter: org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter
18/01/08 10:12:10 INFO yarn.Client: Application report for application_1515375319672_0003 (state: ACCEPTED)
18/01/08 10:12:11 INFO yarn.Client: Application report for application_1515375319672_0003 (state: RUNNING)
18/01/08 10:12:11 INFO yarn.Client:
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: 192.168.154.131
     ApplicationMaster RPC port: 0
     queue: default
     start time: 1515377463128
     final status: UNDEFINED
     tracking URL: http://master01:8088/proxy/application_1515375319672_0003/
     user: hadoop
18/01/08 10:12:11 INFO cluster.YarnClientSchedulerBackend: Application application_1515375319672_0003 has started running.
18/01/08 10:12:11 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 56117.
18/01/08 10:12:11 INFO netty.NettyBlockTransferService: Server created on 192.168.154.130:56117
18/01/08 10:12:11 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy
18/01/08 10:12:12 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 192.168.154.130, 56117, None)
18/01/08 10:12:12 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.154.130:56117 with 366.3 MB RAM, BlockManagerId(driver, 192.168.154.130, 56117, None)
18/01/08 10:12:12 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 192.168.154.130, 56117, None)
18/01/08 10:12:12 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, 192.168.154.130, 56117, None)
18/01/08 10:12:12 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@8c1b14b{/metrics/json,null,AVAILABLE,@Spark}
18/01/08 10:12:12 INFO scheduler.EventLoggingListener: Logging events to hdfs://ns1/sparkLog/application_1515375319672_0003
18/01/08 10:12:13 INFO cluster.YarnClientSchedulerBackend: SchedulerBackend is ready for scheduling beginning after waiting maxRegisteredResourcesWaitingTime: 30000(ms)
18/01/08 10:12:13 INFO internal.SharedState: Warehouse path is 'file:/software/spark-2.1.1/bin/spark-warehouse/'.
18/01/08 10:12:13 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5ccc8026{/SQL,null,AVAILABLE,@Spark}
18/01/08 10:12:13 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3d86206c{/SQL/json,null,AVAILABLE,@Spark}
18/01/08 10:12:13 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@24bcd19e{/SQL/execution,null,AVAILABLE,@Spark}
18/01/08 10:12:13 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7c28388a{/SQL/execution/json,null,AVAILABLE,@Spark}
18/01/08 10:12:13 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6dd58736{/static/sql,null,AVAILABLE,@Spark}
18/01/08 10:12:15 INFO spark.SparkContext: Starting job: reduce at JavaSparkPi.java:52
18/01/08 10:12:15 INFO scheduler.DAGScheduler: Got job 0 (reduce at JavaSparkPi.java:52) with 1 output partitions
18/01/08 10:12:15 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (reduce at JavaSparkPi.java:52)
18/01/08 10:12:15 INFO scheduler.DAGScheduler: Parents of final stage: List()
18/01/08 10:12:15 INFO scheduler.DAGScheduler: Missing parents: List()
18/01/08 10:12:15 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at JavaSparkPi.java:52), which has no missing parents
18/01/08 10:12:16 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 2.3 KB, free 366.3 MB)
18/01/08 10:12:16 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1405.0 B, free 366.3 MB)
18/01/08 10:12:16 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.154.130:56117 (size: 1405.0 B, free: 366.3 MB)
18/01/08 10:12:16 INFO spark.SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:996
18/01/08 10:12:16 INFO scheduler.DAGScheduler: Submitting 1 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at JavaSparkPi.java:52)
18/01/08 10:12:16 INFO cluster.YarnScheduler: Adding task set 0.0 with 1 tasks
18/01/08 10:12:21 INFO cluster.YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(null) (192.168.154.131:52870) with ID 2
18/01/08 10:12:21 INFO storage.BlockManagerMasterEndpoint: Registering block manager slave01:35449 with 366.3 MB RAM, BlockManagerId(2, slave01, 35449, None)
18/01/08 10:12:21 WARN scheduler.TaskSetManager: Stage 0 contains a task of very large size (982 KB). The maximum recommended task size is 100 KB.
18/01/08 10:12:21 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, slave01, executor 2, partition 0, PROCESS_LOCAL, 1006035 bytes)
18/01/08 10:12:23 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on slave01:35449 (size: 1405.0 B, free: 366.3 MB)
18/01/08 10:12:24 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 3163 ms on slave01 (executor 2) (1/1)
18/01/08 10:12:24 INFO cluster.YarnScheduler: Removed TaskSet 0.0, whose tasks have all completed, from pool
18/01/08 10:12:24 INFO scheduler.DAGScheduler: ResultStage 0 (reduce at JavaSparkPi.java:52) finished in 8.119 s
18/01/08 10:12:24 INFO scheduler.DAGScheduler: Job 0 finished: reduce at JavaSparkPi.java:52, took 9.424750 s
Pi is roughly 3.14112
18/01/08 10:12:24 INFO server.ServerConnector: Stopped Spark@4dd5b173{HTTP/1.1}{0.0.0.0:4040}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@5569e2d0{/stages/stage/kill,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@7e7584ec{/jobs/job/kill,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@2e17578f{/api,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@58cdc845{/,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@6c880fed{/static,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@1a5b7d6f{/executors/threadDump/json,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@6506b132{/executors/threadDump,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@41211d3d{/executors/json,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@6411a006{/executors,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@173a0c9b{/environment/json,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@12073544{/environment,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@6fec88c4{/storage/rdd/json,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@da7809c{/storage/rdd,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@1818f1c0{/storage/json,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@56b73d4a{/storage,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@171ceab{/stages/pool/json,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@449aec8{/stages/pool,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@15d5c063{/stages/stage/json,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@146f47d8{/stages/stage,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@36978068{/stages/json,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@b6b60ab{/stages,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@3a089cc1{/jobs/job/json,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@536b8d48{/jobs/job,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@65d06070{/jobs/json,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@55a36fe{/jobs,null,UNAVAILABLE,@Spark}
18/01/08 10:12:24 INFO ui.SparkUI: Stopped Spark web UI at http://192.168.154.130:4040
18/01/08 10:12:25 INFO cluster.YarnClientSchedulerBackend: Interrupting monitor thread
18/01/08 10:12:25 INFO cluster.YarnClientSchedulerBackend: Shutting down all executors
18/01/08 10:12:25 INFO cluster.YarnSchedulerBackend$YarnDriverEndpoint: Asking each executor to shut down
18/01/08 10:12:25 INFO cluster.SchedulerExtensionServices: Stopping SchedulerExtensionServices (serviceOption=None, services=List(), started=false)
18/01/08 10:12:25 INFO cluster.YarnClientSchedulerBackend: Stopped
18/01/08 10:12:25 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
18/01/08 10:12:25 INFO memory.MemoryStore: MemoryStore cleared
18/01/08 10:12:25 INFO storage.BlockManager: BlockManager stopped
18/01/08 10:12:25 INFO storage.BlockManagerMaster: BlockManagerMaster stopped
18/01/08 10:12:25 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
18/01/08 10:12:25 INFO spark.SparkContext: Successfully stopped SparkContext
18/01/08 10:12:25 INFO util.ShutdownHookManager: Shutdown hook called
18/01/08 10:12:25 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-e0070b97-4026-422a-a3be-bb9b17a864f4
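The log line "Will allocate AM container, with 896 MB memory including 384 MB overhead" can be reproduced with simple arithmetic. The sketch below assumes the Spark 2.x default overhead rule of max(10% of the requested memory, 384 MB) and the default values of 512m for the client-mode application master and 1g for the driver. `container_mb` is a hypothetical helper, not a Spark command.

```bash
#!/bin/bash
# container_mb is a hypothetical helper: $1 = requested JVM memory in MB.
# Assumes the Spark 2.x default overhead rule: max(10% of the memory, 384 MB).
container_mb() {
  overhead=$(( $1 / 10 ))
  if [ "$overhead" -lt 384 ]; then
    overhead=384
  fi
  echo $(( $1 + overhead ))
}

container_mb 512    # client mode, 512 MB application master: prints 896
container_mb 1024   # cluster mode, 1 GB driver inside the AM: prints 1408
```

The second figure matches the 1408 MB that the cluster-mode run reports, where the driver runs inside the application master container and its memory is what gets requested.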

5. Running Spark-Submit in Yarn mode requires --master yarn (here --deploy-mode is set to cluster, so the Driver runs inside the cluster rather than on the submitting node)

[hadoop@CloudDeskTop bin]$ ./spark-submit --class org.apache.spark.examples.JavaSparkPi --master yarn --deploy-mode cluster ../examples/jars/spark-examples_2.11-2.1.1.jar 1

[hadoop@master01 bin]$ ./spark-submit --class org.apache.spark.examples.JavaSparkPi --master yarn --deploy-mode cluster ../examples/jars/spark-examples_2.11-2.1.1.jar 1
18/01/08 10:16:33 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
18/01/08 10:16:51 INFO yarn.Client: Requesting a new application from cluster with 3 NodeManagers
18/01/08 10:16:52 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (8192 MB per container)
18/01/08 10:16:52 INFO yarn.Client: Will allocate AM container, with 1408 MB memory including 384 MB overhead
18/01/08 10:16:52 INFO yarn.Client: Setting up container launch context for our AM
18/01/08 10:16:52 INFO yarn.Client: Setting up the launch environment for our AM container
18/01/08 10:16:52 INFO yarn.Client: Preparing resources for our AM container
18/01/08 10:16:55 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.
18/01/08 10:17:12 INFO yarn.Client: Uploading resource file:/tmp/spark-9481fd54-6b59-439d-886e-67e0703b3c79/__spark_libs__8567957490879510137.zip -> hdfs://ns1/user/hadoop/.sparkStaging/application_1515375319672_0004/__spark_libs__8567957490879510137.zip
18/01/08 10:18:02 INFO yarn.Client: Uploading resource file:/software/spark-2.1.1/examples/jars/spark-examples_2.11-2.1.1.jar -> hdfs://ns1/user/hadoop/.sparkStaging/application_1515375319672_0004/spark-examples_2.11-2.1.1.jar
18/01/08 10:18:02 INFO yarn.Client: Uploading resource file:/tmp/spark-9481fd54-6b59-439d-886e-67e0703b3c79/__spark_conf__6440770128026002818.zip -> hdfs://ns1/user/hadoop/.sparkStaging/application_1515375319672_0004/__spark_conf__.zip
18/01/08 10:18:03 INFO spark.SecurityManager: Changing view acls to: hadoop
18/01/08 10:18:03 INFO spark.SecurityManager: Changing modify acls to: hadoop
18/01/08 10:18:03 INFO spark.SecurityManager: Changing view acls groups to:
18/01/08 10:18:03 INFO spark.SecurityManager: Changing modify acls groups to:
18/01/08 10:18:03 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()
18/01/08 10:18:03 INFO yarn.Client: Submitting application application_1515375319672_0004 to ResourceManager
18/01/08 10:18:03 INFO impl.YarnClientImpl: Submitted application application_1515375319672_0004
18/01/08 10:18:04 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:04 INFO yarn.Client:
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: N/A
     ApplicationMaster RPC port: -1
     queue: default
     start time: 1515377883251
     final status: UNDEFINED
     tracking URL: http://master01:8088/proxy/application_1515375319672_0004/
     user: hadoop
18/01/08 10:18:05 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:06 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:07 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:08 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:09 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:10 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:11 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:12 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:13 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:14 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:15 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:16 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:17 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:18 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:19 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:20 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:21 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:22 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:23 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:24 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:25 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:26 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:27 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:28 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:29 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:30 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:31 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:32 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:33 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:34 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:35 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:36 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:37 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:38 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:39 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:40 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:41 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:42 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:43 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:44 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:46 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:47 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:48 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:49 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:50 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:51 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:52 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:53 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:54 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:55 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:56 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:57 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:58 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:18:59 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:00 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:01 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:02 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:03 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:04 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:05 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:06 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:07 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:08 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:09 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:10 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:11 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:12 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:13 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:14 INFO yarn.Client: Application report for application_1515375319672_0004 (state: ACCEPTED)
18/01/08 10:19:15 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:15 INFO yarn.Client:
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: 192.168.154.132
     ApplicationMaster RPC port: 0
     queue: default
     start time: 1515377883251
     final status: UNDEFINED
     tracking URL: http://master01:8088/proxy/application_1515375319672_0004/
     user: hadoop
18/01/08 10:19:16 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:17 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:18 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:19 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:20 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:21 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:22 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:23 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:24 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:25 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:26 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:27 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:28 INFO yarn.Client: Application report for application_1515375319672_0004 (state: RUNNING)
18/01/08 10:19:29 INFO yarn.Client: Application report for application_1515375319672_0004 (state: FINISHED)
18/01/08 10:19:29 INFO yarn.Client:
     client token: N/A
     diagnostics: N/A
     ApplicationMaster host: 192.168.154.132
     ApplicationMaster RPC port: 0
     queue: default
     start time: 1515377883251
     final status: FAILED
     tracking URL: http://master01:8088/proxy/application_1515375319672_0004/
     user: hadoop
Exception in thread "main" org.apache.spark.SparkException: Application application_1515375319672_0004 finished with failed status
    at org.apache.spark.deploy.yarn.Client.run(Client.scala:1180)
    at org.apache.spark.deploy.yarn.Client$.main(Client.scala:1226)
    at org.apache.spark.deploy.yarn.Client.main(Client.scala)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:743)
    at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:187)
    at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:212)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:126)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
18/01/08 10:19:29 INFO util.ShutdownHookManager: Shutdown hook called
18/01/08 10:19:29 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-9481fd54-6b59-439d-886e-67e0703b3c79
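The client output above ends with final status: FAILED, but in cluster mode the Driver's own output stays inside the cluster, so the client log alone does not show the cause. As a rough sketch, the application ID and final status can be pulled out of saved client output and turned into a follow-up yarn logs command. The helper names extract_app_id and extract_final_status are hypothetical, the sample text is a shortened stand-in for the real output, and yarn logs generally only returns the logs of a finished application when log aggregation is enabled on the cluster.

```shell
#!/bin/sh
# Sketch: read the application ID and final status from spark-submit client
# output. extract_app_id and extract_final_status are hypothetical helpers,
# not tools shipped with Spark or Hadoop.

# Print the first YARN application ID found on stdin.
extract_app_id() {
  grep -o 'application_[0-9]*_[0-9]*' | head -n 1
}

# Print the last "final status" value found on stdin.
extract_final_status() {
  grep -o 'final status: [A-Z]*' | tail -n 1 | sed 's/^final status: //'
}

# A few lines in the shape of the client output shown above (shortened).
sample='18/01/08 10:18:03 INFO impl.YarnClientImpl: Submitted application application_1515375319672_0004
     final status: UNDEFINED
     final status: FAILED'

app_id=$(printf '%s\n' "$sample" | extract_app_id)
status=$(printf '%s\n' "$sample" | extract_final_status)
echo "application: $app_id, final status: $status"

# Print (do not run) the command that would fetch the container logs.
if [ "$status" = "FAILED" ]; then
  echo "yarn logs -applicationId $app_id"
fi
```

In practice the sample variable would be replaced by the real output, for example by piping a saved log file into the two helpers.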

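6. Summary:

The --deploy-mode parameter sets where the Driver (the class whose main method launches the Job) is deployed. It takes two values, client (the default) and cluster. With client, the Driver runs on the client, that is, the node that submits the Job (master01 or CloudDeskTop here). With cluster, the Driver runs on a node inside the cluster that the cluster manager chooses: a Slave node picked by the Spark Master when Spark manages its own cluster, or the ApplicationMaster container allocated by Yarn when running on Yarn.

In client mode the client must sit on a network that can reach the Spark cluster (the Slave nodes), because the Driver connects to the Slave nodes to distribute the Job package and launch the Job. The Driver uses no resources on the Slave nodes in this mode, and the Job's standard output is echoed to the client console, because the Driver runs on the node from which you submitted the Job.

In cluster mode the client does not need to be on the same network segment as the Spark cluster (the Slave nodes), because the Driver that distributes the jar and launches the Job runs on one of the cluster's own nodes. In exchange, the Driver consumes CPU and memory on that node, and the Job's standard output is not echoed to the client console, because the Driver does not run on the node from which you submitted the Job.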
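On the command line, the two deploy modes differ in a single flag. The following sketch composes the example command used in this article for either mode; submit_cmd is a hypothetical helper that only prints the command and does not run it.

```shell
#!/bin/sh
# Sketch: compose the example spark-submit command for either deploy mode.
# submit_cmd is a hypothetical helper; it prints the command, nothing more.

submit_cmd() {
  mode=${1:-client}   # client is spark-submit's default deploy mode
  case "$mode" in
    client|cluster) ;;
    *) echo "unknown deploy mode: $mode" >&2; return 1 ;;
  esac
  echo "./spark-submit --class org.apache.spark.examples.JavaSparkPi" \
       "--master yarn --deploy-mode $mode" \
       "../examples/jars/spark-examples_2.11-2.1.1.jar 1"
}

submit_cmd client    # Driver runs on the submitting node, output on the console
submit_cmd cluster   # Driver runs inside the cluster, output in the cluster logs
```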

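Summary of Spark run modes:

A. Spark Standalone (Spark's own cluster manager, reached through a spark:// master URL; this is not Mesos, which would use a mesos:// master URL): the Yarn cluster does not need to be started, but the Spark cluster's own processes do, namely the Master process on the master node and the Worker process on every slave node. Start the cluster in this order:

zkServer.sh start
start-dfs.sh
start-master.sh
start-slaves.sh

# How to start Spark-Shell: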

[hadoop@master01 bin]$ ./spark-shell --master spark://master01:7077

# To shut down, stop the services in exactly the reverse of the startup order:

stop-slaves.sh
stop-master.sh
stop-dfs.sh
zkServer.sh stop
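Since the shutdown order is simply the startup order reversed, the stop sequence can be derived from the start sequence instead of being maintained by hand. This is a minimal sketch under that assumption; to_stop and reverse_lines are hypothetical helpers, and the commands are only printed, not executed.

```shell
#!/bin/sh
# Sketch: derive the shutdown sequence from the startup sequence.
# to_stop and reverse_lines are hypothetical helpers; nothing is executed.

start_steps='zkServer.sh start
start-dfs.sh
start-master.sh
start-slaves.sh'

# Turn each start command on stdin into its stop counterpart.
to_stop() {
  sed -e 's/^start-/stop-/' -e 's/ start$/ stop/'
}

# Print stdin with its lines in reverse order (portable stand-in for tac).
reverse_lines() {
  awk '{ line[NR] = $0 } END { for (i = NR; i >= 1; i--) print line[i] }'
}

stop_steps=$(printf '%s\n' "$start_steps" | to_stop | reverse_lines)
printf '%s\n' "$stop_steps"
```

Replacing start-master.sh and start-slaves.sh with start-yarn.sh in start_steps yields the corresponding Yarn shutdown order in the same way.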

B. Spark on Yarn: the Spark cluster's Master and Worker processes do not need to be started, but the Yarn cluster does:

zkServer.sh start
start-dfs.sh
start-yarn.sh

# How to start Spark-Shell:

[hadoop@master01 bin]$ ./spark-shell --master yarn

# To shut down, stop the services in exactly the reverse of the startup order:

stop-yarn.sh
stop-dfs.sh
zkServer.sh stop

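Notes:

The startup operations above are all run from master01. In this setup, both Standalone mode (A) and Yarn mode (B) need the ZK cluster and the HDFS cluster to be running.

When running in Yarn mode, the Master and Worker processes are not used and should be stopped. Once the Master process is stopped, http://master01:8080 can no longer be opened in a browser to watch Jobs, because resource scheduling is now handled by Yarn; use http://master01:8088 to watch Jobs instead.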

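In my experience, running Spark under its own Master and Worker processes, as in mode A above, is more mature than running it on Yarn and is also the setup I see most often in enterprise practice, so that is the mode I usually use.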
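To make the note about the two web UIs concrete, here is a small sketch that returns the address to open for each mode. ui_url is a hypothetical helper, and the host name and ports are the ones used in this article's cluster; other installations may configure different ports.

```shell
#!/bin/sh
# Sketch: pick the web UI to open for a given resource manager.
# ui_url is a hypothetical helper; host and ports are this article's values.

ui_url() {
  case "$1" in
    standalone) echo "http://master01:8080" ;;  # Spark Master web UI
    yarn)       echo "http://master01:8088" ;;  # Yarn ResourceManager web UI
    *) echo "unknown mode: $1" >&2; return 1 ;;
  esac
}

ui_url standalone
ui_url yarn
```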