HDFS: Distributed File System

HDFS Cluster Mode

| Node | IP | Role |
| --- | --- | --- |
| node1 | 192.168.55.128 | NameNode, DataNode |
| node2 | 192.168.55.129 | SecondaryNameNode, DataNode |
| node3 | 192.168.55.130 | DataNode |

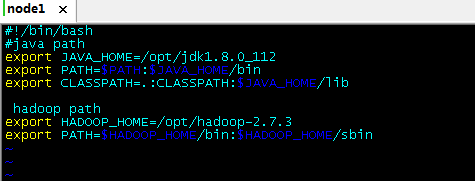

Hadoop Environment Variables

[root@node1 ~]# vi /etc/profile.d/custom.sh

#Hadoop path
export HADOOP_HOME=/opt/hadoop-2.7.3
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

[root@node1 ~]# source /etc/profile.d/custom.sh

Configure the same environment variables on node2 and node3.
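A quick way to confirm that the variables took effect on a node can be sketched as follows. The function name `check_hadoop_env` is hypothetical; it only inspects `HADOOP_HOME` and `PATH` as set in custom.sh and does not call Hadoop itself.

```shell
# Hypothetical helper: report whether HADOOP_HOME is set and whether its
# bin/ and sbin/ directories are on PATH. Only variables are inspected.
check_hadoop_env() {
    if [ -z "${HADOOP_HOME:-}" ]; then
        echo "HADOOP_HOME is not set"
        return 1
    fi
    for dir in "$HADOOP_HOME/bin" "$HADOOP_HOME/sbin"; do
        case ":$PATH:" in
            *":$dir:"*) echo "on PATH: $dir" ;;
            *) echo "missing from PATH: $dir"; return 1 ;;
        esac
    done
}
```

After `source /etc/profile.d/custom.sh`, calling `check_hadoop_env` on each node should report both directories as being on the PATH.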

Preparation

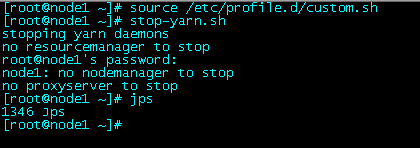

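Hadoop was previously deployed in single-node mode on node1, so all Hadoop services must be stopped and the data directory cleared before continuing. This is also a good moment to verify the Hadoop environment variables set above.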
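The stop step described above can be sketched as follows. `stop-dfs.sh` and `stop-yarn.sh` ship in Hadoop's sbin directory; the wrapper `run_if_available` is a hypothetical helper, and which services were actually started earlier is an assumption.

```shell
# Hypothetical helper: run each named command only if it is found on PATH,
# so the snippet is harmless on a machine without Hadoop installed.
run_if_available() {
    for cmd in "$@"; do
        if command -v "$cmd" >/dev/null 2>&1; then
            "$cmd"
        else
            echo "skipping $cmd (not on PATH)"
        fi
    done
}

# Assumption: YARN and HDFS daemons may both have been started earlier.
run_if_available stop-yarn.sh stop-dfs.sh
```

A "skipping" message here also signals that `$HADOOP_HOME/sbin` is not on the PATH yet. Running `hadoop version` afterwards is a simple check that `$HADOOP_HOME/bin` is picked up as well.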

Clear the Hadoop data directory

[root@node1 ~]# rm -rf /tmp/hadoop-root/

core-site.xml

[root@node1 ~]# cd /opt/hadoop-2.7.3/etc/hadoop/
[root@node1 hadoop]# vi core-site.xml

The contents of core-site.xml are as follows:

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://node1:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/var/data/hadoop</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>65536</value>
  </property>
</configuration>
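To double-check a value after editing, the file can be read back from the shell. On a node with Hadoop on the PATH, `hdfs getconf -confKey fs.defaultFS` prints the effective setting. The sketch below is a Hadoop-independent alternative; `get_hadoop_property` is a hypothetical helper and it assumes each `<name>` and `<value>` element sits on its own line, as in the file above.

```shell
# Hypothetical helper: print the <value> that follows a given <name> in a
# Hadoop-style XML file. Line-oriented sketch, not a real XML parser.
get_hadoop_property() {
    file=$1
    key=$2
    awk -v key="$key" '
        # Remember that the wanted <name> line was seen.
        index($0, "<name>" key "</name>") { found = 1; next }
        # The next <value> line belongs to that property.
        found && /<value>/ {
            sub(/.*<value>/, "")
            sub(/<\/value>.*/, "")
            print
            exit
        }
    ' "$file"
}
```

For example, `get_hadoop_property core-site.xml hadoop.tmp.dir` run in the configuration directory should echo the directory configured above.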

hdfs-site.xml

[root@node1 hadoop]# vi hdfs-site.xml

The contents of hdfs-site.xml are as follows:

<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>node2:50090</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.https-address</name>
    <value>node2:50091</value>
  </property>
</configuration>

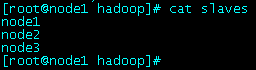

slaves

Edit the slaves file

[root@node1 hadoop]# vi slaves

Set the contents of the slaves file to the three DataNode hostnames:

node1
node2
node3
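The file can also be written without an editor. This is a minimal sketch, assuming the slaves file should list the three DataNode hosts from the role table (node1, node2, node3), one per line; `write_slaves_file` is a hypothetical helper.

```shell
# Hypothetical helper: write one worker hostname per line to the given file,
# replacing whatever the file contained before.
write_slaves_file() {
    target=$1
    shift
    printf '%s\n' "$@" > "$target"
}
```

On node1 this would be called as `write_slaves_file /opt/hadoop-2.7.3/etc/hadoop/slaves node1 node2 node3`.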

Distributing Files

Copy the Hadoop package to node2 and node3

[root@node1 ~]# scp -r /opt/hadoop-2.7.3/ node2:/opt

[root@node1 ~]# scp -r /opt/hadoop-2.7.3/ node3:/opt

Copy the environment variable file to node2 and node3, then load it on each node

[root@node2 ~]# source /etc/profile.d/custom.sh

[root@node3 ~]# source /etc/profile.d/custom.sh
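The copy command itself is not shown above. A minimal sketch, assuming passwordless SSH for root from node1 and the same file path on every node; `distribute_profile` and the `DRY_RUN` switch are hypothetical.

```shell
# Hypothetical helper: copy the profile script to each listed host.
# With DRY_RUN=1 (the default here) it only prints the scp commands.
distribute_profile() {
    src=/etc/profile.d/custom.sh
    for host in "$@"; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "scp $src $host:$src"
        else
            scp "$src" "$host:$src"
        fi
    done
}

# Preview the commands for the two remaining nodes.
distribute_profile node2 node3
```

Setting `DRY_RUN=0` before the call performs the actual copy. The `source` commands above then load the file on each node.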

Formatting the NameNode

[root@node1 ~]# hdfs namenode -format

18/11/22 22:09:59 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
18/11/22 22:09:59 INFO namenode.NameNode: createNameNode [-format]
Formatting using clusterid: CID-1435a0cf-33c1-4c5e-9473-b88769f60dcd
18/11/22 22:10:03 INFO namenode.FSNamesystem: No KeyProvider found.
18/11/22 22:10:03 INFO namenode.FSNamesystem: fsLock is fair:true
18/11/22 22:10:03 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
18/11/22 22:10:03 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
18/11/22 22:10:03 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
18/11/22 22:10:03 INFO blockmanagement.BlockManager: The block deletion will start around 2018 Nov 22 22:10:03
18/11/22 22:10:03 INFO util.GSet: Computing capacity for map BlocksMap
18/11/22 22:10:03 INFO util.GSet: VM type = 64-bit
18/11/22 22:10:04 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB
18/11/22 22:10:04 INFO util.GSet: capacity = 2^21 = 2097152 entries
18/11/22 22:10:04 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
18/11/22 22:10:04 INFO blockmanagement.BlockManager: defaultReplication = 3
18/11/22 22:10:04 INFO blockmanagement.BlockManager: maxReplication = 512
18/11/22 22:10:04 INFO blockmanagement.BlockManager: minReplication = 1
18/11/22 22:10:04 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
18/11/22 22:10:04 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
18/11/22 22:10:04 INFO blockmanagement.BlockManager: encryptDataTransfer = false
18/11/22 22:10:04 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
18/11/22 22:10:04 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)
18/11/22 22:10:04 INFO namenode.FSNamesystem: supergroup = supergroup
18/11/22 22:10:04 INFO namenode.FSNamesystem: isPermissionEnabled = true
18/11/22 22:10:04 INFO namenode.FSNamesystem: HA Enabled: false
18/11/22 22:10:04 INFO namenode.FSNamesystem: Append Enabled: true
18/11/22 22:10:07 INFO util.GSet: Computing capacity for map INodeMap
18/11/22 22:10:07 INFO util.GSet: VM type = 64-bit
18/11/22 22:10:07 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB
18/11/22 22:10:07 INFO util.GSet: capacity = 2^20 = 1048576 entries
18/11/22 22:10:07 INFO namenode.FSDirectory: ACLs enabled? false
18/11/22 22:10:07 INFO namenode.FSDirectory: XAttrs enabled? true
18/11/22 22:10:07 INFO namenode.FSDirectory: Maximum size of an xattr: 16384
18/11/22 22:10:07 INFO namenode.NameNode: Caching file names occuring more than 10 times
18/11/22 22:10:07 INFO util.GSet: Computing capacity for map cachedBlocks
18/11/22 22:10:07 INFO util.GSet: VM type = 64-bit
18/11/22 22:10:07 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB
18/11/22 22:10:07 INFO util.GSet: capacity = 2^18 = 262144 entries
18/11/22 22:10:07 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
18/11/22 22:10:07 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
18/11/22 22:10:07 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
18/11/22 22:10:07 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10
18/11/22 22:10:07 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10
18/11/22 22:10:07 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25
18/11/22 22:10:07 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
18/11/22 22:10:07 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
18/11/22 22:10:08 INFO util.GSet: Computing capacity for map NameNodeRetryCache
18/11/22 22:10:08 INFO util.GSet: VM type = 64-bit
18/11/22 22:10:08 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB
18/11/22 22:10:08 INFO util.GSet: capacity = 2^15 = 32768 entries
18/11/22 22:10:08 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1249797347-192.168.55.128-1542942608346
18/11/22 22:10:08 INFO common.Storage: Storage directory /var/data/hadoop/dfs/name has been successfully formatted.
18/11/22 22:10:09 INFO namenode.FSImageFormatProtobuf: Saving image file /var/data/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression
18/11/22 22:10:10 INFO namenode.FSImageFormatProtobuf: Image file /var/data/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 351 bytes saved in 0 seconds.
18/11/22 22:10:10 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
18/11/22 22:10:10 INFO util.ExitUtil: Exiting with status 0
18/11/22 22:10:10 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at node1/192.168.55.128
************************************************************/

[root@node1 ~]# ll /var/data/hadoop/dfs/name/current/
total 16
-rw-r--r-- 1 root root 351 Nov 22 22:10 fsimage_0000000000000000000
-rw-r--r-- 1 root root 62 Nov 22 22:10 fsimage_0000000000000000000.md5
-rw-r--r-- 1 root root 2 Nov 22 22:10 seen_txid
-rw-r--r-- 1 root root 207 Nov 22 22:10 VERSION
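Formatting is normally done once. Formatting again assigns a new cluster ID, and DataNodes that still hold data from the old ID will then refuse to join. A guard like the following can help avoid an accidental second format. It is only a sketch: `namenode_is_formatted` is a hypothetical helper that treats the presence of `current/VERSION` (seen in the listing above) as the sign of an existing format.

```shell
# Hypothetical helper: succeed if the directory already holds a formatted
# NameNode layout, judged only by the presence of current/VERSION.
namenode_is_formatted() {
    [ -f "$1/current/VERSION" ]
}

# Report the state of the name directory used in this walkthrough.
if namenode_is_formatted /var/data/hadoop/dfs/name; then
    echo "name directory already formatted"
else
    echo "name directory not formatted yet"
fi
```

In a setup script, `hdfs namenode -format` would go in the `else` branch only.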

Starting HDFS

[root@node1 ~]# start-dfs.sh
Starting namenodes on [node1]
node1: starting namenode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-namenode-node1.out
node3: starting datanode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-datanode-node3.out
node2: starting datanode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-datanode-node2.out
node1: starting datanode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-datanode-node1.out
Starting secondary namenodes [node2]
node2: starting secondarynamenode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-secondarynamenode-node2.out
[root@node1 ~]#

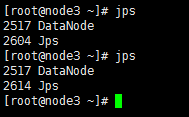

Check the Java processes on the three nodes:

node1

node2

node3
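The per-node check can be sketched with `jps`, which lists running Java processes as `<pid> <name>` lines. Based on the role table, node1 should show NameNode and DataNode, node2 SecondaryNameNode and DataNode, and node3 DataNode. The helper `check_daemons` is hypothetical; it reads `jps` output on standard input.

```shell
# Hypothetical helper: read jps output on stdin and check that every expected
# daemon name (given as arguments) appears as an exact process name.
check_daemons() {
    listing=$(cat)
    status=0
    for daemon in "$@"; do
        # Exact match on column 2, so "NameNode" does not match
        # "SecondaryNameNode".
        if echo "$listing" | awk -v d="$daemon" '$2 == d { ok = 1 } END { exit !ok }'; then
            echo "running: $daemon"
        else
            echo "MISSING: $daemon"
            status=1
        fi
    done
    return $status
}
```

On node2, for instance, `jps | check_daemons SecondaryNameNode DataNode` should report both daemons as running.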

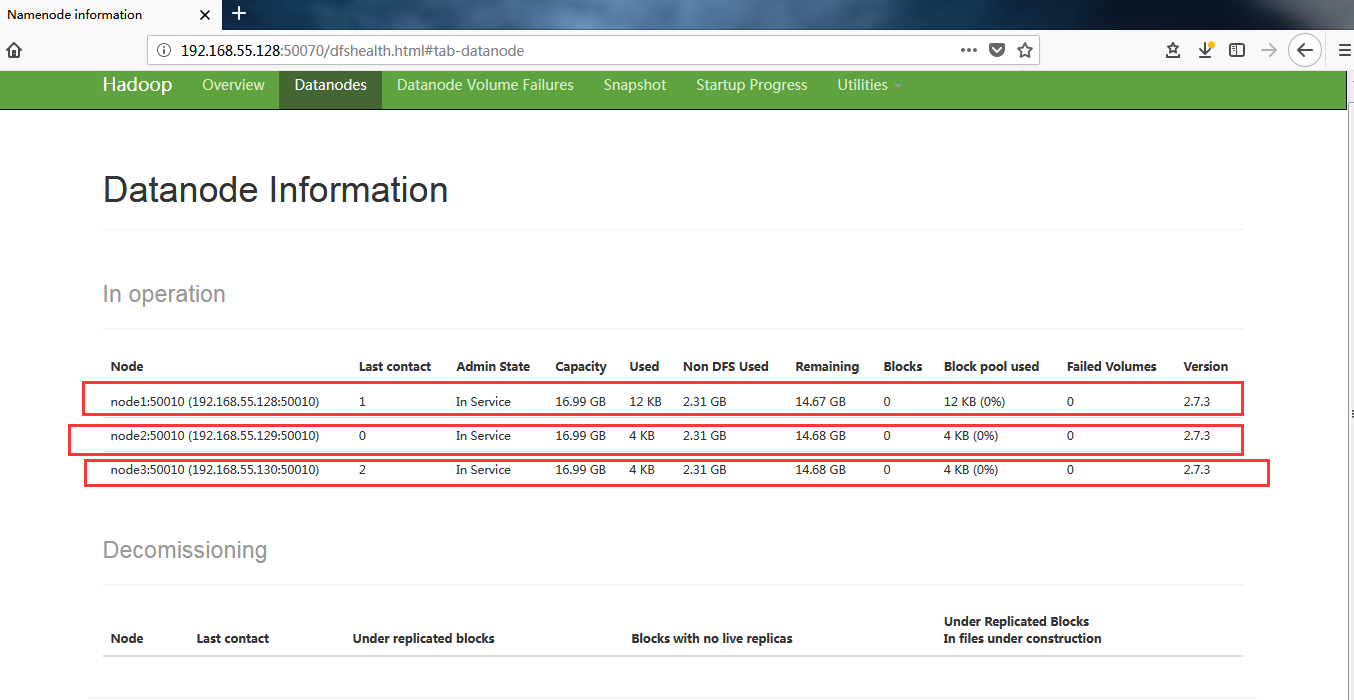

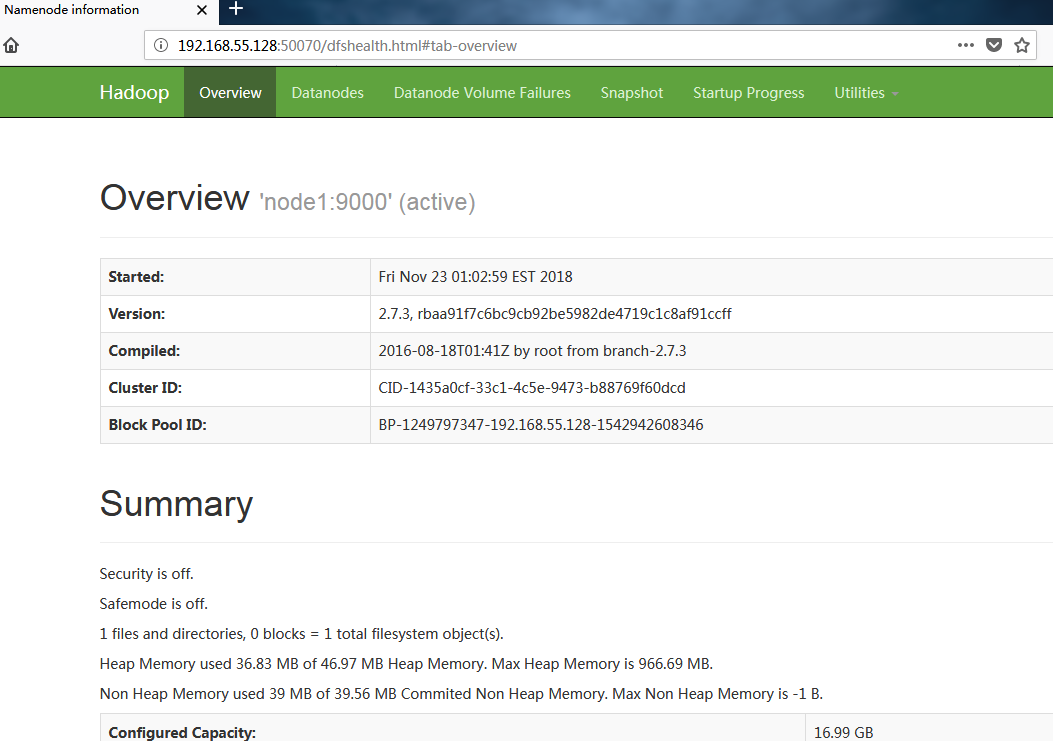

HDFS Web UI

The "Datanodes" tab shows information for the three DataNode nodes.
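The same information is available on the command line through `hdfs dfsadmin -report`. The sketch below extracts the live DataNode count from that report. It assumes the report contains a line of the form `Live datanodes (3):`, as Hadoop 2.x prints; `live_datanode_count` is a hypothetical helper.

```shell
# Hypothetical helper: read `hdfs dfsadmin -report` output on stdin and print
# the number from the "Live datanodes (N):" line, if present.
live_datanode_count() {
    sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p' | head -n 1
}
```

With all three DataNodes registered, `hdfs dfsadmin -report | live_datanode_count` should print 3 for this cluster.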