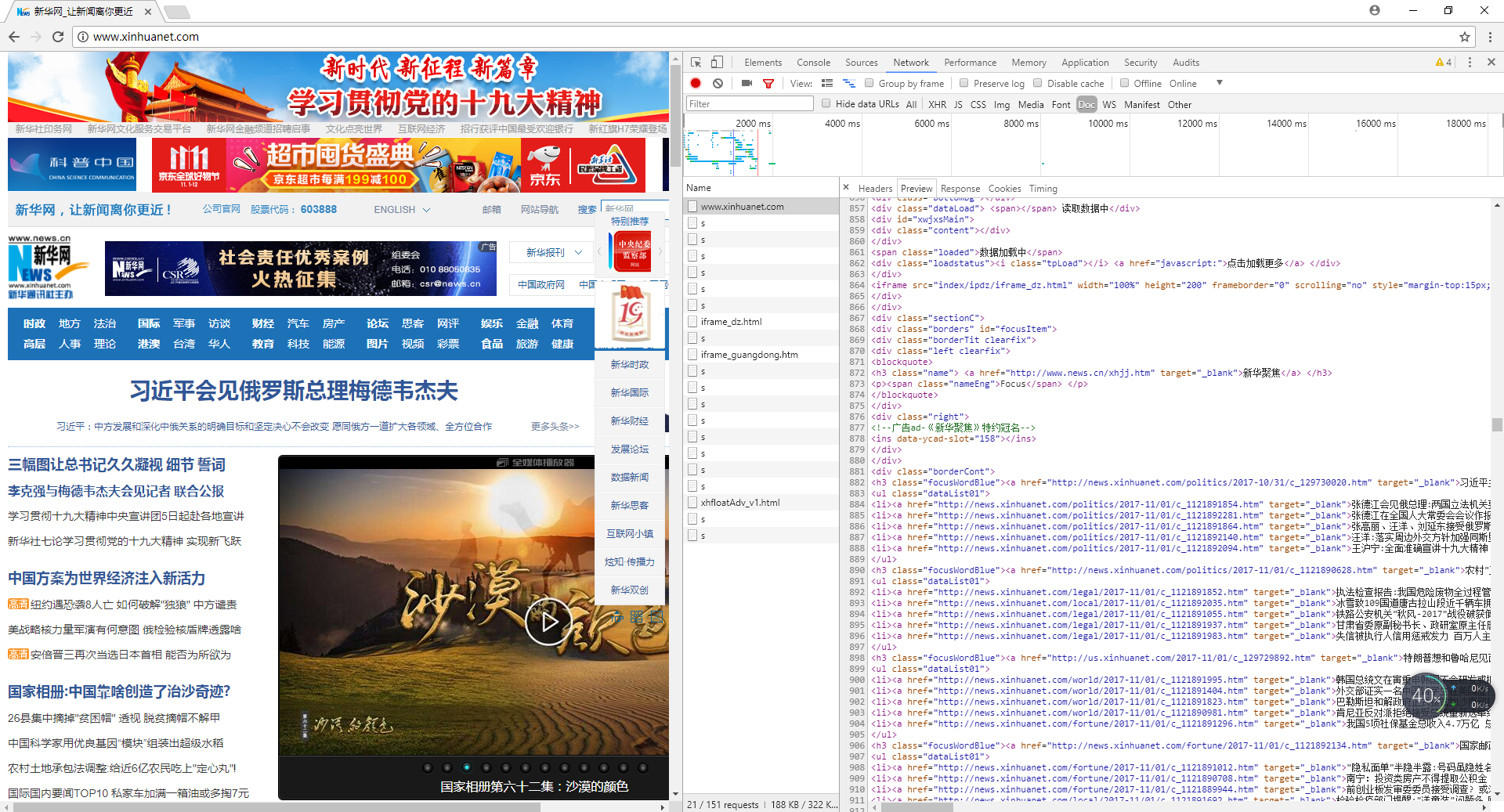

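Since we were free to pick any website we were interested in for data analysis, I chose to scrape Xinhua Net (http://www.xinhuanet.com/), analyze its data, and generate a word cloud from it.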

Packages required to run the whole program

```python
import requests
from bs4 import BeautifulSoup
import pandas
import sqlite3
import jieba
from wordcloud import WordCloud
import matplotlib.pyplot as plt
```

Scraping the page

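```python
url = "http://www.xinhuanet.com/"
res0 = requests.get(url)
res0.encoding = "utf-8"
soup = BeautifulSoup(res0.text, "html.parser")

# Take the text of the first <a> in every <li> (mostly headlines) and write
# one title per line, so adjacent titles do not run together in the file
with open("css.txt", "w", encoding="utf-8") as f:
    for news in soup.select("li"):
        links = news.select("a")
        if links:
            title = links[0].text.strip()
            print(title)
            f.write(title + "\n")
```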
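The `li` selector also picks up navigation menus, so the raw list can contain short menu labels and repeated headlines that would inflate the word counts later. Below is a minimal, optional clean-up sketch; the function name `clean_titles` and the `min_len` threshold are hypothetical and not part of the original script, and the sample strings are made up.

```python
def clean_titles(raw_titles, min_len=4):
    """Strip whitespace, drop short or empty entries, de-duplicate in order.

    min_len is a hypothetical threshold for filtering out menu labels such
    as "首页" (home page); tune it for the page you actually scrape.
    """
    cleaned = (t.strip() for t in raw_titles)
    kept = (t for t in cleaned if len(t) >= min_len)
    # dict.fromkeys keeps only the first occurrence of each title, in order
    return list(dict.fromkeys(kept))


if __name__ == "__main__":
    sample = ["  首页 ", "科技创新推动产业升级", "", "科技创新推动产业升级", "城市交通持续改善"]
    print(clean_titles(sample))
```

To use it, collect the titles into a list inside the loop, then write `"\n".join(clean_titles(titles))` to `css.txt` instead of writing each title as it is found.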

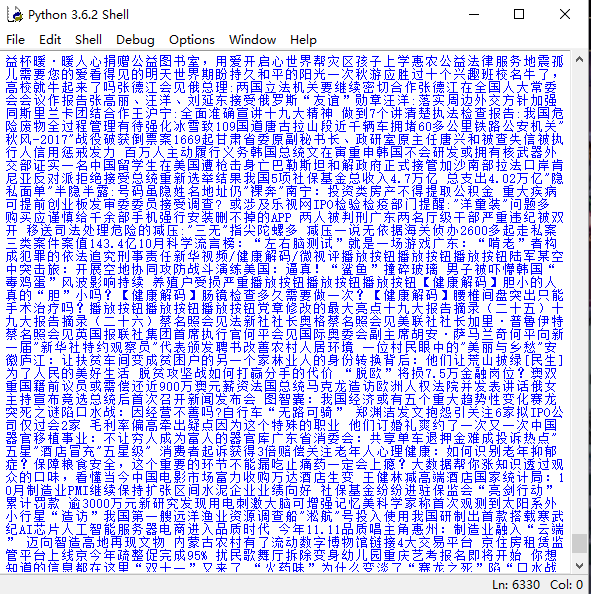

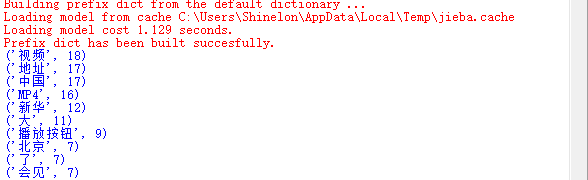

Counting word frequencies and saving the top words to a txt file

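```python
with open("css.txt", "r", encoding="utf-8") as f0:
    qz = f0.read()
print(qz)

# Segment the text into words with jieba
words = list(jieba.cut(qz))

# Half- and full-width punctuation, whitespace and the particle "的" to ignore
ul = {':', '：', '的', '"', '、', '”', '“', '。', '!', '！', '?', '？',
      ',', '，', ' ', '\u3000', '\n'}

# Count how often each remaining word occurs, most frequent first
dic = {}
for i in set(words) - ul:
    dic[i] = words.count(i)
c = sorted(dic.items(), key=lambda x: x[1], reverse=True)

# Write each of the top-10 words as many times as it occurred,
# so the word cloud later reflects the same frequencies
with open("diectory.txt", "w", encoding="utf-8") as f1:
    for word, count in c[:10]:
        print((word, count))
        f1.write((word + " ") * count)
```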
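The loop above calls `words.count(i)` once per distinct word, which rescans the whole word list each time. A sketch of the same top-N count using the standard library's `collections.Counter` follows; `top_words` is a hypothetical helper, and the sample tokens are made up to stand in for the output of `jieba.cut`.

```python
from collections import Counter


def top_words(tokens, stop_words, n=10):
    """Return the n most frequent tokens that are not in stop_words.

    Counter tallies every token in a single pass instead of calling
    list.count once per distinct word.
    """
    counts = Counter(t for t in tokens if t not in stop_words)
    return counts.most_common(n)


if __name__ == "__main__":
    # Hypothetical pre-segmented tokens, standing in for list(jieba.cut(qz))
    tokens = ["新闻", "的", "发展", "新闻", "，", "经济", "发展", "新闻"]
    print(top_words(tokens, {"的", "，"}, n=2))
```

`most_common(n)` also returns fewer than `n` pairs without error when there are fewer distinct words, whereas indexing `c[i]` for `i in range(10)` raises `IndexError` in that case.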

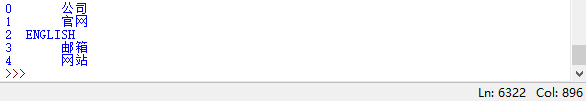

Storing the words in a database

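```python
# One-column DataFrame holding the segmented words
df = pandas.DataFrame(words, columns=["word"])
print(df.head())

# Write to the SQLite table newsdb3; replace it if the script is re-run
# (the default if_exists="fail" raises an error when the table exists)
with sqlite3.connect("newsdb3.sqlite") as db:
    df.to_sql("newsdb3", con=db, if_exists="replace")
```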
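Once the words are in SQLite, the frequency count can also be done in SQL. The sketch below assumes the table `newsdb3` has a text column named `word` (pandas names the column `0` unless `columns=` is passed, so adjust the query if needed); `word_counts_from_db` is a hypothetical helper, and an in-memory database filled with made-up tokens stands in for `newsdb3.sqlite`.

```python
import sqlite3


def word_counts_from_db(con, table="newsdb3", limit=10):
    """Return (word, count) pairs, most frequent first, counted in SQL.

    Assumes a text column named "word". The table name is interpolated
    into the query string, so only pass trusted names.
    """
    query = (
        f'SELECT word, COUNT(*) AS n FROM "{table}" '
        "GROUP BY word ORDER BY n DESC, word LIMIT ?"
    )
    return con.execute(query, (limit,)).fetchall()


if __name__ == "__main__":
    # In-memory stand-in for newsdb3.sqlite, mirroring to_sql's index column
    con = sqlite3.connect(":memory:")
    con.execute('CREATE TABLE "newsdb3" ("index" INTEGER, word TEXT)')
    con.executemany(
        'INSERT INTO "newsdb3" VALUES (?, ?)',
        enumerate(["新闻", "发展", "新闻", "经济"]),
    )
    print(word_counts_from_db(con, limit=2))
    con.close()
```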

Generating the word cloud

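```python
with open("diectory.txt", "r", encoding="utf-8") as f3:
    cy_file = f3.read()

# WordCloud's bundled font has no Chinese glyphs, so point font_path at a
# CJK font. This path (SimHei on Windows) is an assumption: change it to a
# Chinese font that exists on your system.
# collocations=False keeps two-word phrases out of the cloud.
cy = WordCloud(font_path="C:/Windows/Fonts/simhei.ttf",
               collocations=False).generate(cy_file)
plt.imshow(cy)
plt.axis("off")
plt.show()
```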
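`diectory.txt` stores each top word repeated once per occurrence, and `WordCloud.generate` then counts the words again. The sketch below shows that splitting the file contents on whitespace recovers the same frequencies; `frequencies_from_text` is a hypothetical helper and the sample string is made up.

```python
from collections import Counter


def frequencies_from_text(text):
    """Turn the space-separated contents of diectory.txt back into counts.

    The file repeats each top word once per occurrence, so splitting on
    whitespace and counting recovers the original frequencies.
    """
    return dict(Counter(text.split()))


if __name__ == "__main__":
    sample = "新闻 新闻 新闻 发展 发展 "
    print(frequencies_from_text(sample))
```

The resulting dict (or `dict(c[:10])` from the counting step) can be passed straight to `WordCloud.generate_from_frequencies`, which skips the text tokenization step. That also sidesteps `generate`'s default token pattern, which, as far as I know, ignores one-character words.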

Final result

Complete code

```python
import requests
from bs4 import BeautifulSoup
import pandas
import sqlite3
import jieba
from wordcloud import WordCloud
import matplotlib.pyplot as plt

# 1. Scrape the headlines from the Xinhua Net home page, one per line
url = "http://www.xinhuanet.com/"
res0 = requests.get(url)
res0.encoding = "utf-8"
soup = BeautifulSoup(res0.text, "html.parser")
with open("css.txt", "w", encoding="utf-8") as f:
    for news in soup.select("li"):
        links = news.select("a")
        if links:
            title = links[0].text.strip()
            print(title)
            f.write(title + "\n")

# 2. Read the titles back, segment them with jieba and count word frequencies
with open("css.txt", "r", encoding="utf-8") as f0:
    qz = f0.read()
print(qz)
words = list(jieba.cut(qz))
ul = {':', '：', '的', '"', '、', '”', '“', '。', '!', '！', '?', '？',
      ',', '，', ' ', '\u3000', '\n'}
dic = {}
for i in set(words) - ul:
    dic[i] = words.count(i)
c = sorted(dic.items(), key=lambda x: x[1], reverse=True)

# Write each top-10 word as many times as it occurred
with open("diectory.txt", "w", encoding="utf-8") as f1:
    for word, count in c[:10]:
        print((word, count))
        f1.write((word + " ") * count)

# 3. Store the segmented words in SQLite
df = pandas.DataFrame(words, columns=["word"])
print(df.head())
with sqlite3.connect("newsdb3.sqlite") as db:
    df.to_sql("newsdb3", con=db, if_exists="replace")

# 4. Draw the word cloud (font_path is an assumption: use a Chinese font on your system)
with open("diectory.txt", "r", encoding="utf-8") as f3:
    cy_file = f3.read()
cy = WordCloud(font_path="C:/Windows/Fonts/simhei.ttf",
               collocations=False).generate(cy_file)
plt.imshow(cy)
plt.axis("off")
plt.show()
```