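Anyone who has done deployment work knows that when you deploy to NVIDIA GPUs with TensorRT, the ONNX model's computation graph is hard to modify. Watching how nihui, the NCNN developer, handles this, you can see that she often converts the ONNX model into ncnn's .param and .bin files and then adjusts the computation graph in the .param file. That made me itch for a tool that could edit the ONNX graph directly, so I could fuse ops and then just write a TRT plugin myself. Well, today it's here.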

Installing onnx_graphsurgeon

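Recent TensorRT prebuilt packages ship a Python .whl package for it that you can install directly. Today I mainly want to show how to use the tool, and the official example makes it easy to follow.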
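Before going further, it can help to confirm that the packages are importable in the current environment. The snippet below is a minimal sketch using only the standard library; the helper name `is_installed` is made up for illustration and is not part of TensorRT or onnx_graphsurgeon.

```python
import importlib.util


def is_installed(module_name):
    """Return True if a top-level module can be found in this environment."""
    return importlib.util.find_spec(module_name) is not None


# The two packages the examples in this post import.
for name in ("onnx", "onnx_graphsurgeon"):
    status = "found" if is_installed(name) else "missing"
    print(f"{name}: {status}")
```

If either one is reported as missing, install the corresponding wheel into the same Python environment and run the check again.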

Generating an ONNX graph

    #
    # Copyright (c) 2021, NVIDIA CORPORATION. All rights reserved.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    # http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.
    #

    import onnx_graphsurgeon as gs
    import numpy as np
    import onnx


    # Register functions to make graph generation easier
    @gs.Graph.register()
    def min(self, *args):
        return self.layer(op="Min", inputs=args, outputs=["min_out"])[0]


    @gs.Graph.register()
    def max(self, *args):
        return self.layer(op="Max", inputs=args, outputs=["max_out"])[0]


    @gs.Graph.register()
    def identity(self, inp):
        return self.layer(op="Identity", inputs=[inp], outputs=["identity_out"])[0]


    # Generate the graph
    graph = gs.Graph()

    graph.inputs = [gs.Variable("input", shape=(4, 4), dtype=np.float32)]

    # Clip values to [0, 6]
    MIN_VAL = np.array(0, np.float32)
    MAX_VAL = np.array(6, np.float32)

    # Add identity nodes to make the graph structure a bit more interesting
    inp = graph.identity(graph.inputs[0])
    max_out = graph.max(graph.min(inp, MAX_VAL), MIN_VAL)
    graph.outputs = [graph.identity(max_out), ]

    # Graph outputs must include dtype information
    graph.outputs[0].to_variable(dtype=np.float32, shape=(4, 4))

    onnx.save(gs.export_onnx(graph), "model.onnx")

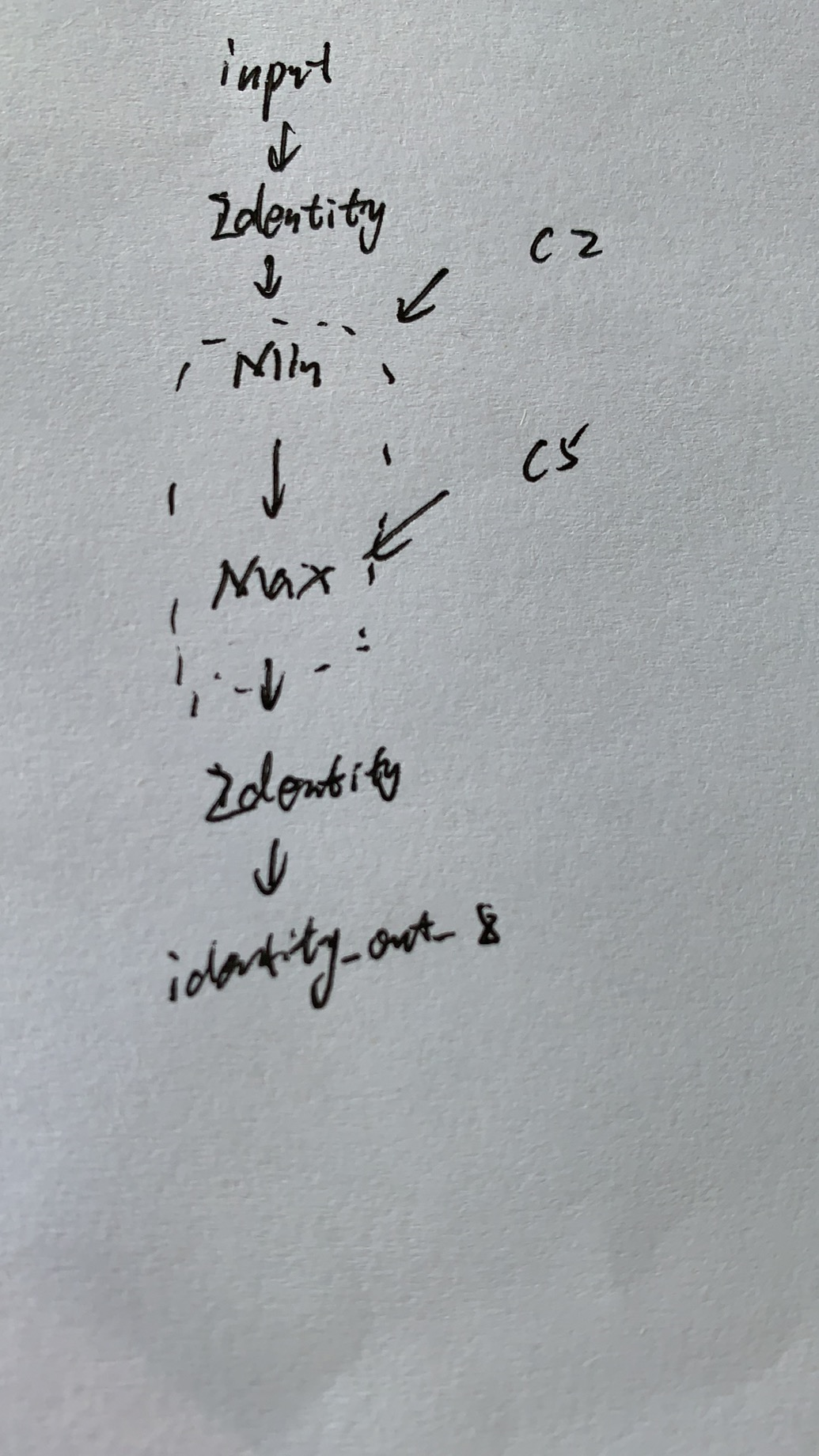

Opening model.onnx in Netron shows the resulting graph.

Isn't this just a Clip operation? And if you're asking what Clip is... well... take a look at this:

    import numpy as np
    x = np.array([1, 2, 3, 5, 6, 7, 8, 9])
    np.clip(x, 3, 8)

Out[88]: array([3, 3, 3, 5, 6, 7, 8, 8])

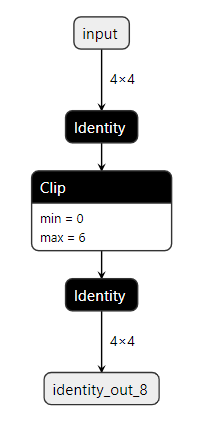

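The goal now is to use onnx_graphsurgeon to fuse the Min and Max ops into a single new op called Clip, so that at deployment time you only need to write one Clip plugin. Of course, this article is only a demonstration: TensorRT already supports the Clip op.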
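To see why a Min followed by a Max is the same thing as Clip, here is a small pure-Python sketch. The function name `clip_via_min_max` is made up for illustration; it mirrors the order used in the generated graph (Min against the upper bound first, then Max against the lower bound).

```python
def clip_via_min_max(values, lo, hi):
    """Clamp each value to [lo, hi] as Min(x, hi) followed by Max(., lo)."""
    return [max(min(v, hi), lo) for v in values]


x = [1, 2, 3, 5, 6, 7, 8, 9]
result = clip_via_min_max(x, 3, 8)
print(result)  # [3, 3, 3, 5, 6, 7, 8, 8]
```

This gives the same values as the `np.clip(x, 3, 8)` call above, which is why the two nodes can be collapsed into one.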

Making the modification

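The method is very simple: first cut every connection between the ops you want to merge and the rest of the graph, then put the new ONNX op in their place and save.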

Still not clear? Let me show you.

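In other words: disconnect Min from the Identity above it, Min from the constant c2, Max from the constant c5, and Max from the Identity below it, then put the new op in their place.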
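The disconnect-then-replace idea can be illustrated with a toy graph in plain Python. This is only a sketch: the `Tensor` and `Node` classes and the helper functions below are hypothetical stand-ins, not the onnx_graphsurgeon classes. In the toy, each link is removed from both sides by hand; as far as I understand, the real library keeps both sides in sync automatically when you clear a tensor's `inputs` or `outputs`.

```python
class Tensor:
    """Toy tensor: remembers which nodes produce it and which consume it."""

    def __init__(self, name):
        self.name = name
        self.inputs = []   # producer nodes
        self.outputs = []  # consumer nodes


class Node:
    """Toy node: creating it links it to its input and output tensors."""

    def __init__(self, op, inputs, outputs):
        self.op = op
        self.inputs = list(inputs)
        self.outputs = list(outputs)
        for t in self.inputs:
            t.outputs.append(self)
        for t in self.outputs:
            t.inputs.append(self)


def detach_consumers(tensor):
    # Cut the links from this tensor to every node that reads it.
    for node in tensor.outputs:
        node.inputs.remove(tensor)
    tensor.outputs.clear()


def detach_producers(tensor):
    # Cut the links from every node that writes this tensor.
    for node in tensor.inputs:
        node.outputs.remove(tensor)
    tensor.inputs.clear()


def replace_with_clip(inputs, outputs):
    for t in inputs:
        detach_consumers(t)
    for t in outputs:
        detach_producers(t)
    return Node("Clip", inputs, outputs)


# Build the Min -> Max part of the graph.
x, lo, hi = Tensor("identity_out"), Tensor("MIN_VAL"), Tensor("MAX_VAL")
min_out, max_out = Tensor("min_out"), Tensor("max_out")
min_node = Node("Min", [x, hi], [min_out])
max_node = Node("Max", [min_out, lo], [max_out])

clip_node = replace_with_clip([x, lo, hi], [max_out])

print([n.op for n in x.outputs])       # ['Clip']
print([n.op for n in max_out.inputs])  # ['Clip']
print(min_node.inputs, max_node.outputs)  # [] []
```

After the replacement, Min and Max are still joined to each other through `min_out`, but nothing else reaches them. That leftover piece is the dangling subgraph that a cleanup pass removes.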

Here's the code:

    import onnx_graphsurgeon as gs
    import numpy as np
    import onnx


    # Here we'll register a function to do all the subgraph-replacement heavy-lifting.
    # NOTE: Since registered functions are entirely reusable, it may be a good idea to
    # refactor them into a separate module so you can use them across all your models.
    # (It is written as a function so it can be reused whenever this kind of
    # replacement is needed again.)
    @gs.Graph.register()
    def replace_with_clip(self, inputs, outputs):
        # Disconnect output nodes of all input tensors
        for inp in inputs:
            inp.outputs.clear()

        # Disconnect input nodes of all output tensors
        for out in outputs:
            out.inputs.clear()

        # Insert the new node.
        return self.layer(op="Clip", inputs=inputs, outputs=outputs)


    # Now we'll do the actual replacement.
    # Import the ONNX model.
    graph = gs.import_onnx(onnx.load("model.onnx"))

    tmap = graph.tensors()
    # You can figure out the input and output tensors using Netron. In our case:
    # Inputs: [inp, MIN_VAL, MAX_VAL]
    # Outputs: [max_out]
    # These are the names of the subgraph's input tensors and output tensors
    # that need to be disconnected.
    inputs = [tmap["identity_out_0"], tmap["onnx_graphsurgeon_constant_5"], tmap["onnx_graphsurgeon_constant_2"]]
    outputs = [tmap["max_out_6"]]

    # Disconnect the subgraph and replace it with a new op named Clip.
    graph.replace_with_clip(inputs, outputs)

    # Remove the now-dangling subgraph.
    graph.cleanup().toposort()

    # That's it!
    onnx.save(gs.export_onnx(graph), "replaced.onnx")

Finally, take a look at the result.

And with that, the ONNX graph modification is complete.
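Besides Netron, the saved file can also be inspected from Python. The sketch below is an assumption-laden convenience, not part of the official example: the helper name `list_op_types` is made up, and it returns `None` when the `onnx` package or the file is not available, so it can be run safely in any environment.

```python
import importlib.util
import os


def list_op_types(path):
    """Return the op types in an ONNX file, or None if it cannot be loaded here."""
    if importlib.util.find_spec("onnx") is None or not os.path.exists(path):
        return None
    import onnx  # imported lazily so the check above can run without it

    model = onnx.load(path)
    return [node.op_type for node in model.graph.node]


ops = list_op_types("replaced.onnx")
print(ops)
```

If the replacement worked, the list should no longer contain Min or Max and should contain a Clip node instead.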