References:

- Install-guide: https://docs.openstack.org/install-guide/

- OpenStack High Availability Guide: https://docs.openstack.org/ha-guide/index.html

- Understanding Pacemaker: http://www.cnblogs.com/sammyliu/p/5025362.html

XI. Neutron Controller/Network Node Cluster

1. Create the neutron database

    # Create the database on any one controller node; the data is replicated to the other nodes automatically. controller01 is used as the example.
    [root@controller01 ~]# mysql -u root -pmysql_pass
    MariaDB [(none)]> CREATE DATABASE neutron;
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'neutron_dbpass';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'neutron_dbpass';
    MariaDB [(none)]> flush privileges;
    MariaDB [(none)]> exit;
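When this step is scripted, the same statements can be generated non-interactively. The following is only a minimal sketch and not part of the original procedure: the function name `neutron_db_sql` is hypothetical, and `IF NOT EXISTS` is an addition so that a second run does not fail on an existing database.

```shell
# Print the SQL for the neutron database step; the function name is hypothetical.
# $1 = password for the neutron database user (this guide uses neutron_dbpass).
neutron_db_sql() {
    cat <<EOF
CREATE DATABASE IF NOT EXISTS neutron;
GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY '$1';
GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY '$1';
FLUSH PRIVILEGES;
EOF
}

# Example (run on one controller node only):
#   neutron_db_sql neutron_dbpass | mysql -u root -pmysql_pass
```

Printing the SQL instead of executing it directly makes it easy to review the statements before piping them to `mysql`.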

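2. Create neutron-api

    # Run on any one controller node; controller01 is used as the example.
    # Calling the neutron service requires credentials; sourcing the environment script loads them.
    [root@controller01 ~]# . admin-openrc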

1) Create the neutron user

    # The service project was already created in the glance chapter.
    # The neutron user belongs to the "default" domain.
    [root@controller01 ~]# openstack user create --domain default --password=neutron_pass neutron

2) Grant a role to neutron

    # Grant the admin role to the neutron user in the service project.
    [root@controller01 ~]# openstack role add --project service --user neutron admin

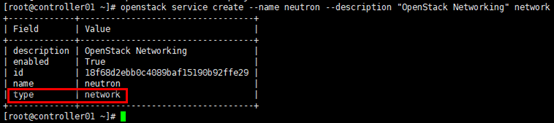

3) Create the neutron service entity

    # The neutron service entity has the type "network".
    [root@controller01 ~]# openstack service create --name neutron --description "OpenStack Networking" network

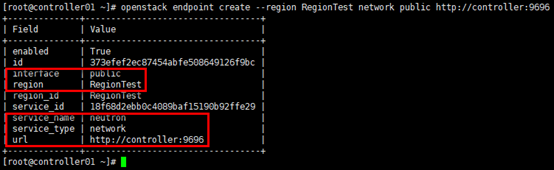

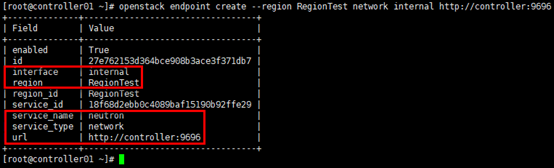

4) Create the neutron-api endpoints

    # Make sure --region matches the region generated when the admin user was initialized.
    # All API addresses use the VIP; if public/internal/admin use different VIPs, take care to tell them apart.
    # The neutron-api service type is network.
    # public api
    [root@controller01 ~]# openstack endpoint create --region RegionTest network public http://controller:9696

    # internal api
    [root@controller01 ~]# openstack endpoint create --region RegionTest network internal http://controller:9696

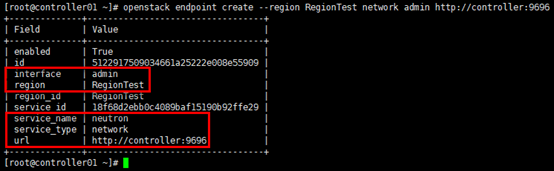

    # admin api
    [root@controller01 ~]# openstack endpoint create --region RegionTest network admin http://controller:9696
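The three endpoint commands differ only in the interface name, so they can be expressed as a loop. This is a sketch under assumptions: the function name and the `RUN`, `REGION` and `URL` variables are hypothetical helpers, and the defaults are simply the values used in this guide.

```shell
# Create the public, internal and admin endpoints for the network service.
# With RUN=echo the commands are only printed (dry run).
# REGION and URL default to the values used in this guide.
create_neutron_endpoints() {
    for iface in public internal admin; do
        ${RUN:-} openstack endpoint create \
            --region "${REGION:-RegionTest}" \
            network "$iface" "${URL:-http://controller:9696}"
    done
}

# Dry run:  RUN=echo create_neutron_endpoints
# Real run: create_neutron_endpoints   (after ". admin-openrc")
```

If public, internal and admin use different VIPs, a single `URL` no longer fits and the commands should be issued individually.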

3. Install neutron

    # Install the neutron services on all controller nodes; controller01 is used as the example.
    [root@controller01 ~]# yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge python-neutronclient ebtables ipset -y

4. Configure neutron.conf

    # Run on all controller nodes; controller01 is used as the example.
    # Note the "bind_host" parameter: change it to match each node.
    # Note the ownership of neutron.conf: root:neutron
    [root@controller01 ~]# cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak
    [root@controller01 ~]# egrep -v "^$|^#" /etc/neutron/neutron.conf
    [DEFAULT]
    bind_host = 172.30.200.31
    auth_strategy = keystone
    core_plugin = ml2
    service_plugins = router
    allow_overlapping_ips = True
    notify_nova_on_port_status_changes = true
    notify_nova_on_port_data_changes = true
    # L3 high availability can use either VRRP mode or DVR mode.
    # In VRRP mode, master and standby virtual routers are set up through VRRP on the network nodes (here co-located with the controller nodes). When the master fails, the virtual router is not migrated; instead, the VIP on which the router serves traffic floats to a standby router.
    # In DVR mode, L3 forwarding and NAT are both distributed to the compute nodes, so the compute nodes also take on network-node functions. DVR still cannot eliminate the centralized virtual router: to conserve public IPv4 addresses, SNAT remains on the network nodes.
    # This guide treats VRRP mode and DVR mode as mutually exclusive (see the note on router_distributed further down).
    # Neutron L3 Agent HA with the Virtual Router Redundancy Protocol (VRRP): http://www.cnblogs.com/sammyliu/p/4692081.html
    # Neutron Distributed Virtual Routing (DVR): http://www.cnblogs.com/sammyliu/p/4713562.html
    # "l3_ha = true" enables the L3 HA feature.
    l3_ha = true
    # Maximum number of L3 agents on which an HA router is created.
    max_l3_agents_per_router = 3
    # Minimum number of healthy L3 agents required to create an HA router.
    min_l3_agents_per_router = 2
    # Network used for VRRP advertisements.
    l3_ha_net_cidr = 169.254.192.0/18
    # "router_distributed" controls whether a router created by a regular user is a DVR router by default. It defaults to "false"; VRRP mode is used here, so the parameter can stay commented out.
    # Although this parameter can be enabled together with l3_ha from Mitaka onward, DVR mode also requires changes to l3_agent.ini and ml2_conf.ini on both the network nodes and the compute nodes.
    # router_distributed = true
    # DHCP high availability: one DHCP server is created on each of the 3 network nodes.
    dhcp_agents_per_network = 3
    # When the services reach RabbitMQ through the haproxy front end, connections time out and reconnect; this is visible in the logs of the services and of RabbitMQ.
    # transport_url = rabbit://openstack:rabbitmq_pass@controller:5673
    # RabbitMQ has its own clustering mechanism, and the official documentation recommends connecting to the RabbitMQ cluster directly. With this method the services occasionally report errors at startup, for reasons not yet identified; if you do not see this, connecting directly to the cluster rather than through haproxy is strongly recommended.
    transport_url=rabbit://openstack:rabbitmq_pass@controller01:5672,controller02:5672,controller03:5672
    [agent]
    [cors]
    [database]
    connection = mysql+pymysql://neutron:neutron_dbpass@controller/neutron
    [keystone_authtoken]
    auth_uri = http://controller:5000
    auth_url = http://controller:35357
    memcached_servers = controller01:11211,controller02:11211,controller03:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = neutron_pass
    [matchmaker_redis]
    [nova]
    auth_url = http://controller:35357
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionTest
    project_name = service
    username = nova
    password = nova_pass
    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp
    [oslo_messaging_amqp]
    [oslo_messaging_kafka]
    [oslo_messaging_notifications]
    [oslo_messaging_rabbit]
    [oslo_messaging_zmq]
    [oslo_middleware]
    [oslo_policy]
    [quotas]
    [ssl]
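Because `bind_host` differs on every node, copying neutron.conf between controllers requires one per-node change. A minimal sketch of making that change with awk follows; the helper name `ini_set` is hypothetical, it only replaces a key that already exists in the given section, and the address in the usage comment is a placeholder rather than a value from this guide.

```shell
# Set "key = value" inside [section] of an INI file; helper name is hypothetical.
# Only existing assignments are replaced; returns non-zero if the key is absent.
# The key is used as a regular expression, so keep it to plain option names.
ini_set() (
    file=$1 section=$2 key=$3 value=$4
    tmp=$(mktemp) || exit 1
    awk -v s="[$section]" -v k="$key" -v v="$value" '
        # Track whether the current line lies inside the wanted section.
        /^\[/ { in_s = ($0 == s) }
        # Replace an assignment of the key inside that section.
        in_s && $0 ~ "^" k "[ \t]*=" { print k " = " v; hit = 1; next }
        { print }
        END { exit hit ? 0 : 1 }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rc=$?
    rm -f "$tmp"
    exit $rc
)

# Example on another controller (address is a placeholder):
#   ini_set /etc/neutron/neutron.conf DEFAULT bind_host <address of this node>
```

The result is written back with `cat ... >` rather than `mv`, so the existing file keeps its root:neutron ownership.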

5. Configure ml2_conf.ini

    # Run on all controller nodes; controller01 is used as the example.
    # Ownership of ml2_conf.ini: root:neutron
    [root@controller01 ~]# cp /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/ml2_conf.ini.bak
    [root@controller01 ~]# egrep -v "^$|^#" /etc/neutron/plugins/ml2/ml2_conf.ini
    [DEFAULT]
    [l2pop]
    [ml2]
    type_drivers = flat,vlan,vxlan
    # List of ML2 mechanism drivers; l2population only takes effect for gre/vxlan tenant networks.
    mechanism_drivers = linuxbridge,l2population
    # Several tenant network types can be set at once. The first value is the default type when a regular tenant creates a network, and by default it is also the network type that carries the master router heartbeat.
    tenant_network_types = vlan,vxlan,flat
    extension_drivers = port_security
    [ml2_type_flat]
    # Name the flat network "external"; "*" allows any network name, and an empty value disables flat networks.
    flat_networks = external
    [ml2_type_geneve]
    [ml2_type_gre]
    [ml2_type_vlan]
    # Name the physical network used for vlan networks "vlan"; if no VLAN ID range is given, the IDs are unrestricted.
    network_vlan_ranges = vlan:3001:3500
    [ml2_type_vxlan]
    vni_ranges = 10001:20000
    [securitygroup]
    enable_ipset = true

    # At startup the service uses the settings in ml2_conf.ini, but reads them through the path /etc/neutron/plugin.ini.
    [root@controller01 ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
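A plain `ln -s` fails if /etc/neutron/plugin.ini already exists, for example when the step is repeated. The sketch below creates or refreshes the link and then checks where it points; the function name is hypothetical, and taking the configuration directory as an argument is an assumption made so the helper can be tried outside /etc.

```shell
# Create or refresh the plugin.ini symlink and confirm its target.
# $1 = neutron configuration directory (this guide uses /etc/neutron).
link_plugin_ini() (
    conf_dir=$1
    target="$conf_dir/plugins/ml2/ml2_conf.ini"
    ln -sfn "$target" "$conf_dir/plugin.ini" || exit 1
    # Succeed only if the link now resolves to the ML2 configuration file.
    [ "$(readlink "$conf_dir/plugin.ini")" = "$target" ]
)

# Example: link_plugin_ini /etc/neutron
```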

6. Configure linuxbridge_agent.ini

1) Configure linuxbridge_agent.ini

    # Run on all controller nodes; controller01 is used as the example.
    # Ownership of linuxbridge_agent.ini: root:neutron
    [root@controller01 ~]# cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak
    [root@controller01 ~]# egrep -v "^$|^#" /etc/neutron/plugins/ml2/linuxbridge_agent.ini
    [DEFAULT]
    [agent]
    [linux_bridge]
    # Physical network names are mapped to physical NICs: here the flat "external" network maps to the planned eth1 and the vlan tenant network maps to the planned eth3. Networks are created by network name, not by NIC name.
    # NIC names are local to each host; use the NIC names that the host actually has.
    # There is also a "bridge_mappings" parameter, which maps to bridges.
    physical_interface_mappings = external:eth1,vlan:eth3
    [network_log]
    [securitygroup]
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
    enable_security_group = true
    [vxlan]
    enable_vxlan = true
    # VTEP endpoint for the tunnel (vxlan) tenant network; this is the address of the planned eth2. Change it on each node.
    local_ip = 10.0.0.31
    l2_population = true
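Since `local_ip` must be the address of the tunnel interface on each node, it can be read from the interface instead of typed by hand. The following sketch parses the one-line output format of `ip -o -4 addr show`; the function name is hypothetical, and eth2 is the interface planned in this guide.

```shell
# Read "ip -o -4 addr show dev <iface>" output on stdin and print the
# first IPv4 address without its prefix length.
first_ipv4() {
    awk '$3 == "inet" { sub(/\/.*/, "", $4); print $4; exit }'
}

# Example: ip -o -4 addr show dev eth2 | first_ipv4
```

The printed address can then be written into the `[vxlan]` section as `local_ip` on that node.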

2) Configure kernel parameters

    # bridge: pass bridged traffic through iptables/ip6tables.
    # If "sysctl -p" fails with a "No such file or directory" error, the kernel module "br_netfilter" must be loaded.
    # "modinfo br_netfilter" shows information about the module.
    # "modprobe br_netfilter" loads the module.
    [root@controller01 ~]# echo "# bridge" >> /etc/sysctl.conf
    [root@controller01 ~]# echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf
    [root@controller01 ~]# echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf
    [root@controller01 ~]# sysctl -p
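Repeating the `echo ... >>` commands appends duplicate lines to /etc/sysctl.conf. A small idempotent variant is sketched below; `ensure_line` is a hypothetical helper, and the drop-in file names in the usage comment are assumptions, not paths taken from this guide.

```shell
# Append a line to a file only if that exact line is not already present.
# $1 = file, $2 = line
ensure_line() {
    grep -qxF -e "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Example (file names are assumptions):
#   modprobe br_netfilter
#   ensure_line /etc/modules-load.d/br_netfilter.conf "br_netfilter"
#   ensure_line /etc/sysctl.d/99-bridge.conf "net.bridge.bridge-nf-call-iptables = 1"
#   ensure_line /etc/sysctl.d/99-bridge.conf "net.bridge.bridge-nf-call-ip6tables = 1"
#   sysctl --system
```

Listing the module under /etc/modules-load.d lets systemd load `br_netfilter` at boot, so the bridge sysctl keys exist before they are applied; `sysctl --system` reads the drop-in directories as well as /etc/sysctl.conf.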

7. Configure l3_agent.ini (self-service networking)

    # Run on all controller nodes; controller01 is used as the example.
    # Ownership of l3_agent.ini: root:neutron
    [root@controller01 ~]# cp /etc/neutron/l3_agent.ini /etc/neutron/l3_agent.ini.bak
    [root@controller01 ~]# egrep -v "^$|^#" /etc/neutron/l3_agent.ini
    [DEFAULT]
    interface_driver = linuxbridge
    [agent]
    [ovs]

8. Configure dhcp_agent.ini

    # Run on all controller nodes; controller01 is used as the example.
    # dnsmasq provides the DHCP service.
    # Ownership of dhcp_agent.ini: root:neutron
    [root@controller01 ~]# cp /etc/neutron/dhcp_agent.ini /etc/neutron/dhcp_agent.ini.bak
    [root@controller01 ~]# egrep -v "^$|^#" /etc/neutron/dhcp_agent.ini
    [DEFAULT]
    interface_driver = linuxbridge
    dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
    enable_isolated_metadata = true
    [agent]
    [ovs]

9. Configure metadata_agent.ini

    # Run on all controller nodes; controller01 is used as the example.
    # metadata_proxy_shared_secret: must match the value in /etc/nova/nova.conf.
    # Ownership of metadata_agent.ini: root:neutron
    [root@controller01 ~]# cp /etc/neutron/metadata_agent.ini /etc/neutron/metadata_agent.ini.bak
    [root@controller01 ~]# egrep -v "^$|^#" /etc/neutron/metadata_agent.ini
    [DEFAULT]
    nova_metadata_host = controller
    metadata_proxy_shared_secret = neutron_metadata_secret
    [agent]
    [cache]

10. Configure nova.conf

    # Run on all controller nodes; controller01 is used as the example.
    # Only the "[neutron]" section of nova.conf is involved.
    # metadata_proxy_shared_secret: must match the value in /etc/neutron/metadata_agent.ini.
    [root@controller01 ~]# vim /etc/nova/nova.conf
    [neutron]
    url = http://controller:9696
    auth_url = http://controller:35357
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionTest
    project_name = service
    username = neutron
    password = neutron_pass
    service_metadata_proxy = true
    metadata_proxy_shared_secret = neutron_metadata_secret
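The metadata proxy only works when nova.conf and metadata_agent.ini carry the same `metadata_proxy_shared_secret`. A quick consistency check can be sketched as follows; both function names are hypothetical, and the parser assumes the simple `key = value` form shown in this guide, with no `=` inside the secret.

```shell
# Print the value of metadata_proxy_shared_secret from an INI-style file.
shared_secret() {
    awk -F '[ \t]*=[ \t]*' \
        '$1 == "metadata_proxy_shared_secret" { print $2; exit }' "$1"
}

# Succeed only if both files carry the same, non-empty secret.
secrets_match() (
    a=$(shared_secret "$1")
    b=$(shared_secret "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
)

# Example:
#   secrets_match /etc/nova/nova.conf /etc/neutron/metadata_agent.ini \
#       || echo "metadata_proxy_shared_secret differs"
```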

11. Synchronize the neutron database

    # Run on any one controller node.
    [root@controller01 ~]# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron
    # Verify
    [root@controller01 ~]# mysql -h controller01 -u neutron -pneutron_dbpass -e "use neutron;show tables;"

12. Start the services

    # Run on all controller nodes.
    # The nova configuration file was changed, so restart the nova service first.
    [root@controller01 ~]# systemctl restart openstack-nova-api.service
    # Enable at boot
    [root@controller01 ~]# systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-l3-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service
    # Start
    [root@controller01 ~]# systemctl restart neutron-server.service
    [root@controller01 ~]# systemctl restart neutron-linuxbridge-agent.service
    [root@controller01 ~]# systemctl restart neutron-l3-agent.service
    [root@controller01 ~]# systemctl restart neutron-dhcp-agent.service
    [root@controller01 ~]# systemctl restart neutron-metadata-agent.service
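After the restarts it is useful to confirm on each node that all five units actually came up. This is a minimal sketch, assuming the unit names used in this guide; the function name and the `SYSTEMCTL` override (present only so the loop can be exercised without systemd) are hypothetical.

```shell
NEUTRON_SERVICES="neutron-server neutron-linuxbridge-agent neutron-l3-agent neutron-dhcp-agent neutron-metadata-agent"

# Print each neutron unit that is not active; return non-zero if any is found.
# SYSTEMCTL may be overridden for testing and defaults to systemctl.
inactive_neutron_services() (
    rc=0
    for svc in $NEUTRON_SERVICES; do
        if ! ${SYSTEMCTL:-systemctl} is-active --quiet "$svc.service"; then
            echo "$svc"
            rc=1
        fi
    done
    exit $rc
)

# Example: inactive_neutron_services || echo "some neutron units are down"
```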

13. Verify

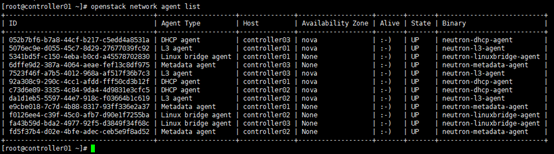

    [root@controller01 ~]# . admin-openrc
    # List the loaded extensions
    [root@controller01 ~]# openstack extension list --network
    # List the agents
    [root@controller01 ~]# openstack network agent list

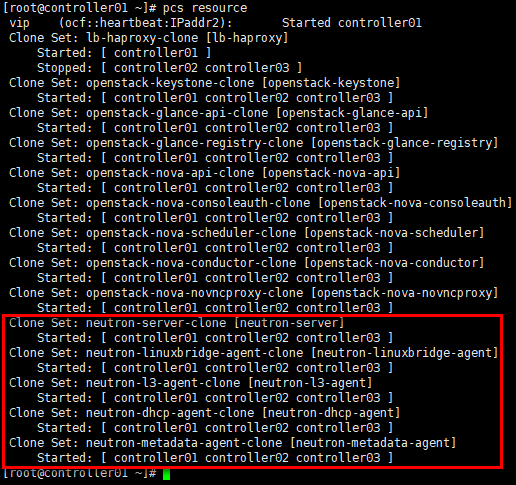

14. Set up the pcs resources

    # Run on any one controller node.
    # Add the resources neutron-server, neutron-linuxbridge-agent, neutron-l3-agent, neutron-dhcp-agent and neutron-metadata-agent.
    [root@controller01 ~]# pcs resource create neutron-server systemd:neutron-server --clone interleave=true
    [root@controller01 ~]# pcs resource create neutron-linuxbridge-agent systemd:neutron-linuxbridge-agent --clone interleave=true
    [root@controller01 ~]# pcs resource create neutron-l3-agent systemd:neutron-l3-agent --clone interleave=true
    [root@controller01 ~]# pcs resource create neutron-dhcp-agent systemd:neutron-dhcp-agent --clone interleave=true
    [root@controller01 ~]# pcs resource create neutron-metadata-agent systemd:neutron-metadata-agent --clone interleave=true
    # List the pcs resources
    [root@controller01 ~]# pcs resource
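The five `pcs resource create` commands follow one pattern: the resource name equals the systemd unit name. They can therefore be written as a loop, sketched here with a hypothetical function name and a hypothetical `RUN` variable for a dry run.

```shell
# Create one cloned pcs resource per neutron systemd unit.
# With RUN=echo the commands are only printed (dry run).
create_neutron_pcs_resources() {
    for svc in neutron-server neutron-linuxbridge-agent neutron-l3-agent \
               neutron-dhcp-agent neutron-metadata-agent; do
        ${RUN:-} pcs resource create "$svc" "systemd:$svc" --clone interleave=true
    done
}

# Dry run:  RUN=echo create_neutron_pcs_resources
# Real run: create_neutron_pcs_resources && pcs resource
```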