1. Data dictionary table

    CREATE TABLE `dic` (
      `id` int(32) NOT NULL AUTO_INCREMENT,
      `table_name` varchar(225) DEFAULT NULL,
      `field_name` varchar(225) DEFAULT NULL,
      `field_value` varchar(225) DEFAULT NULL,
      `field_describe` varchar(225) DEFAULT NULL,
      `describe` varchar(225) DEFAULT NULL,
      PRIMARY KEY (`id`)
    ) ENGINE=InnoDB AUTO_INCREMENT=3 DEFAULT CHARSET=utf8;

2. The DicMap.java class

    package com.day02.sation.map;

    import com.day02.sation.dao.IDicDao;
    import com.day02.sation.model.Dic;
    import org.springframework.context.ApplicationContext;
    import org.springframework.context.support.ClassPathXmlApplicationContext;

    import java.util.HashMap;
    import java.util.List;
    import java.util.Map;

    /**
     * Created by Administrator on 1/3.
     */
    public class DicMap {

        private static IDicDao dicDao;

        private static Map<String, String> map = new HashMap<>();

        static {
            // Obtain the application context
            ApplicationContext ctx = new ClassPathXmlApplicationContext("spring-config.xml");
            // Obtain the dicDao instance
            dicDao = ctx.getBean(IDicDao.class);
            // Initialize the dictionary
            addMapValue();
        }

        public static String getFieldDetail(String tableName, String fieldName, String fieldValue) {
            String key = tableName + "_" + fieldName + "_" + fieldValue;
            String value = map.get(key);
            if (value == null) { // Not cached: query the database again
                Dic dicQuery = new Dic();
                // Fill in the conditions used by the getDic mapper statement
                // (assumes Dic has the usual setters matching its getters)
                dicQuery.setTableName(tableName);
                dicQuery.setFieldName(fieldName);
                dicQuery.setFieldValue(fieldValue);
                Dic dic = dicDao.getDic(dicQuery);
                if (dic != null) { // The database has this value
                    String fieldDescribe = dic.getFieldDescribe();
                    map.put(key, fieldDescribe);
                    return fieldDescribe;
                }
                value = "暂无"; // fallback text, "not available"
            }
            return value;
        }

        /**
         * Initialize the dictionary data
         */
        private static void addMapValue() {
            List<Dic> list = dicDao.getList();
            for (Dic dic : list) {
                String tableName = dic.getTableName();
                String fieldName = dic.getFieldName();
                String fieldValue = dic.getFieldValue();
                String key = tableName + "_" + fieldName + "_" + fieldValue;
                String fieldDescribe = dic.getFieldDescribe();
                map.put(key, fieldDescribe);
            }
        }
    }
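The cache-then-fallback lookup in DicMap can also be tried without Spring or a database. The following is only a minimal sketch of that pattern, not the code from this project: the `DictionaryCache` class, its `Function`-based loader (standing in for `dicDao.getDic`), and the configurable fallback text are all hypothetical names introduced for illustration. It uses a `ConcurrentHashMap` on the assumption that a shared cache may be read by several request threads.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Hypothetical stand-in for DicMap: caches descriptions under a composite key. */
class DictionaryCache {

    private final Map<String, String> cache = new ConcurrentHashMap<>();

    // Hypothetical loader standing in for dicDao.getDic(...).
    // It receives the composite key and returns empty when no row matches.
    private final Function<String, Optional<String>> loader;

    // Text returned when neither the cache nor the loader knows the key.
    private final String fallback;

    DictionaryCache(Function<String, Optional<String>> loader, String fallback) {
        this.loader = loader;
        this.fallback = fallback;
    }

    /** Builds the same tableName_fieldName_fieldValue key that DicMap uses. */
    static String key(String tableName, String fieldName, String fieldValue) {
        return tableName + "_" + fieldName + "_" + fieldValue;
    }

    /** Preloads one entry, as addMapValue() does for every row at startup. */
    void put(String tableName, String fieldName, String fieldValue, String description) {
        cache.put(key(tableName, fieldName, fieldValue), description);
    }

    /** Returns the cached description, asks the loader on a miss, else the fallback. */
    String getFieldDetail(String tableName, String fieldName, String fieldValue) {
        String key = key(tableName, fieldName, fieldValue);
        String cached = cache.get(key);
        if (cached != null) {
            return cached;
        }
        Optional<String> loaded = loader.apply(key);
        // Only successful lookups are cached, so a row added later can still be found.
        loaded.ifPresent(description -> cache.put(key, description));
        return loaded.orElse(fallback);
    }
}
```

One thing to keep in mind with this key format: because `_` is also common inside table and field names, two different triples can produce the same key (for example `a_b` + `c` and `a` + `b_c`). If that is a risk in your schema, a separator that cannot appear in the names would be safer.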

3. DAO interface

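    /**
     * Get the dictionary list.
     * @return all dictionary entries
     */
    List<Dic> getList();

    /**
     * Get a dictionary entry by key.
     * @param dic query object carrying tableName, fieldName and fieldValue
     * @return the matching entry
     */
    Dic getDic(Dic dic);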

4. Mapper mapping file

    <!-- Query the dictionary list -->
    <select id="getList" resultType="com.day02.sation.model.Dic">
        SELECT d.id, d.table_name tableName, d.field_name fieldName, d.field_value fieldValue,
               d.field_describe fieldDescribe, d.`describe` FROM dic AS d
    </select>
    <!-- Get a dictionary entry by key -->
    <select id="getDic" parameterType="com.day02.sation.model.Dic" resultType="com.day02.sation.model.Dic">
        SELECT d.id, d.table_name tableName, d.field_name fieldName, d.field_value fieldValue,
               d.field_describe fieldDescribe, d.`describe` FROM dic AS d
        WHERE d.table_name=#{tableName} AND d.field_name=#{fieldName} AND d.field_value=#{fieldValue}
    </select>

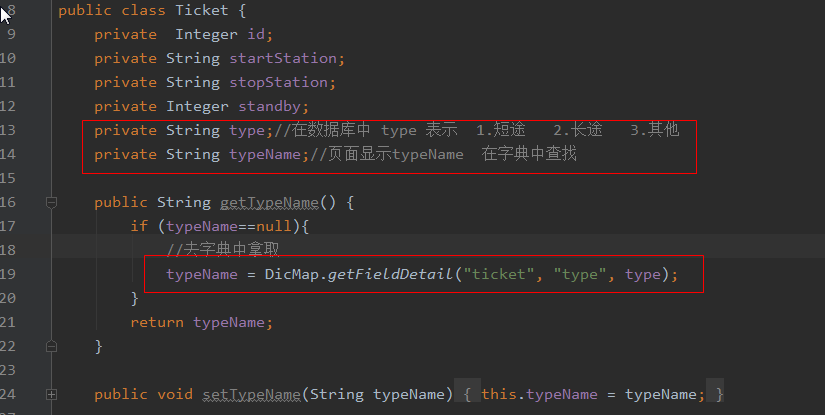

5. Using it in the model

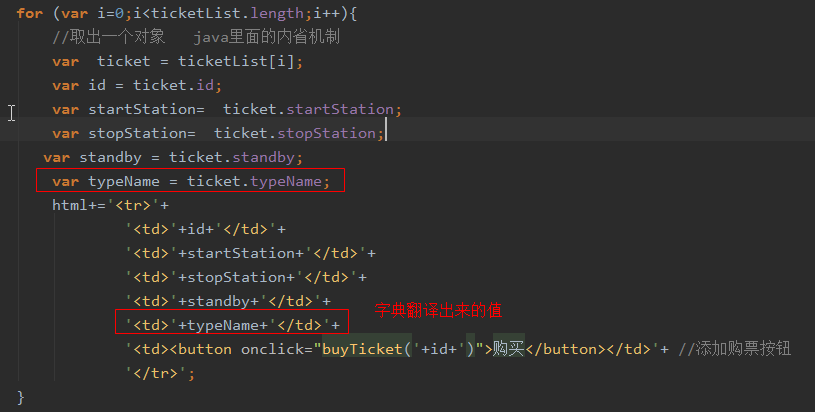
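As a text sketch of this idea, a model can expose a derived getter that translates a stored code into its dictionary description. Everything in the example is hypothetical and is not taken from the screenshot: the `Ticket` class, its `state` field, the `ticket`/`state` dictionary entries, and the `DicLookup` stub, which stands in for `DicMap.getFieldDetail` so the example runs without Spring.

```java
import java.util.HashMap;
import java.util.Map;

/** Stub standing in for DicMap so the sketch runs without Spring or a database. */
class DicLookup {

    private static final Map<String, String> MAP = new HashMap<>();

    static {
        // Hypothetical dictionary rows: table "ticket", field "state"
        MAP.put("ticket_state_1", "Unsold");
        MAP.put("ticket_state_2", "Sold");
    }

    static String getFieldDetail(String tableName, String fieldName, String fieldValue) {
        String key = tableName + "_" + fieldName + "_" + fieldValue;
        // Same fallback text as DicMap ("not available")
        return MAP.getOrDefault(key, "暂无");
    }
}

/** Hypothetical model class with a raw code and a derived description. */
class Ticket {

    private Integer state;

    public Integer getState() {
        return state;
    }

    public void setState(Integer state) {
        this.state = state;
    }

    /** Read-only derived property: looks up the description for the current state code. */
    public String getStateDetail() {
        return DicLookup.getFieldDetail("ticket", "state", String.valueOf(state));
    }
}
```

With a getter like this, a serializer that follows JavaBean getters would typically emit the description alongside the raw code, so the page can show the text without knowing the dictionary.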

6. Displaying it on the page

7. Inspecting the returned data with Firebug in the browser

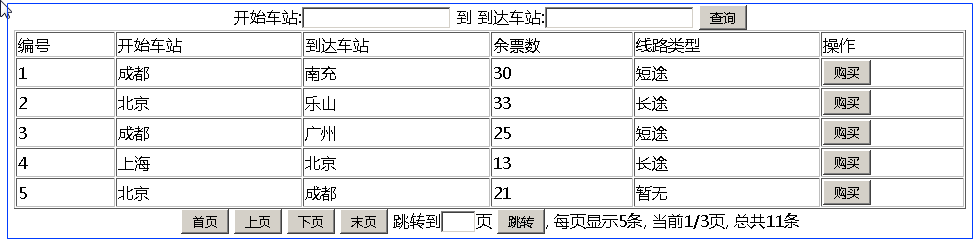

8. The rendered page

That completes the use of the dictionary!