1. Download the matching JDK version from this site:

http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

I downloaded jdk-8u181-linux-x64.tar.gz:

wget -c http://download.oracle.com/otn-pub/java/jdk/8u181-b13/96a7b8442fe848ef90c96a2fad6ed6d1/jdk-8u181-linux-x64.tar.gz

Then extract it:

tar -zxvf jdk-8u181-linux-x64.tar.gz

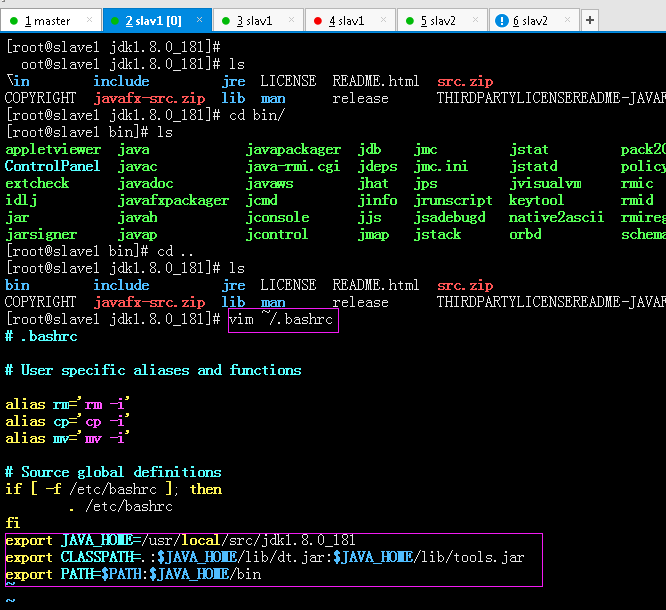

Edit the configuration file:

Run vim ~/.bashrc and append the following at the end:

export JAVA_HOME=/usr/local/src/jdk1.8.0_181

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

Alternatively, add the same lines to /etc/profile.

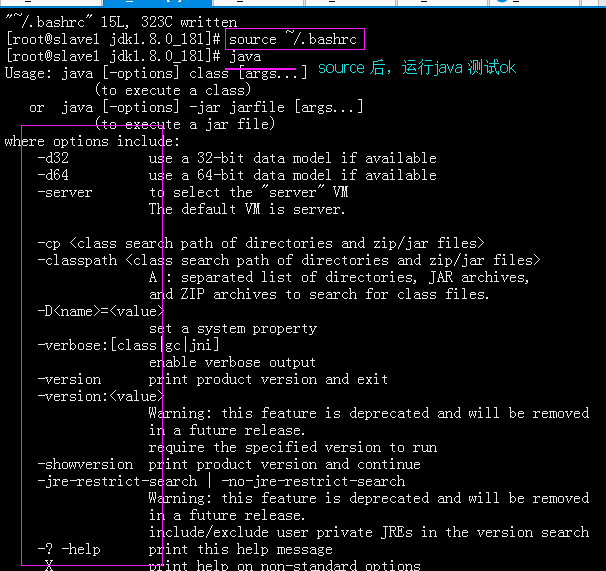

Then run source ~/.bashrc or source /etc/profile so the change takes effect in the current shell.
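Because these lines get appended by hand (or by a script), rerunning the step can leave duplicate exports in the file. A minimal sketch of an idempotent append follows. The function name add_java_env is hypothetical, and it assumes that an existing line starting with export JAVA_HOME= means the file is already configured.

```shell
#!/bin/sh
# add_java_env FILE JDK_DIR  (hypothetical helper, not part of the JDK)
# Appends the three export lines to FILE unless FILE already exports JAVA_HOME.
add_java_env() (
    file=$1
    jdk_dir=$2
    if [ -f "$file" ] && grep -q '^export JAVA_HOME=' "$file"; then
        # Already configured: leave the file untouched.
        :
    else
        printf 'export JAVA_HOME=%s\n' "$jdk_dir" >> "$file"
        # The quoted delimiter keeps $JAVA_HOME and $PATH literal in the file.
        cat >> "$file" << 'EOF'
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
export PATH=$PATH:$JAVA_HOME/bin
EOF
    fi
)
```

For example, add_java_env ~/.bashrc /usr/local/src/jdk1.8.0_181 can be run any number of times and still leaves a single set of exports.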

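After that you can verify the installation, for example with java -version.

The same steps can be automated with a script, auto_jdk.sh: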

#!/bin/bash

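cd /usr/local/src/ || exit 1

# Pick the JDK tarball (assumes exactly one is present here)
a=$(ls jdk*.tar.gz)

tar -xvf "$a"

# Name of the extracted directory, e.g. jdk1.8.0_181
b=$(ls -d jdk1*)

# Unquoted delimiter so $b expands now; \$ keeps the other variables literal
cat >> ~/.bashrc << eof

export JAVA_HOME=/usr/local/src/$b

export CLASSPATH=.:\$JAVA_HOME/lib/dt.jar:\$JAVA_HOME/lib/tools.jar

export PATH=\$PATH:\$JAVA_HOME/bin

eof

# Only affects this script's own shell; run source ~/.bashrc again in your login shell
source ~/.bashrc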
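The script finds the extracted directory by listing /usr/local/src, which can pick up leftovers from an earlier install. As an alternative sketch, the directory name can be read from the archive itself. The function name jdk_top_dir is hypothetical, and it assumes the archive's first entry is the top-level directory (not a path starting with ./).

```shell
#!/bin/sh
# jdk_top_dir TARBALL  (hypothetical helper)
# Prints the top-level directory name stored in the archive, without extracting it.
jdk_top_dir() {
    # tar -t lists entries; the first path component of the first entry is the directory.
    tar -tf "$1" | head -n 1 | cut -d/ -f1
}
```

In auto_jdk.sh this could replace the ls call, for example b=$(jdk_top_dir "$a").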

2. Set up passwordless SSH login:

First set the hostname (master on this machine; slave1 and slave2 on the others):

[root@localhost /]# vim /etc/sysconfig/network

# Created by anaconda

NETWORKING=yes

HOSTNAME=master

Append the cluster hosts to /etc/hosts (do this on all three machines):

[root@localhost /]# cat >> /etc/hosts << "eof"

> 192.168.10.7 master

> 192.168.10.8 slave1

> 192.168.10.9 slave2

> eof

[root@localhost /]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.10.7 master

192.168.10.8 slave1

192.168.10.9 slave2

[root@localhost /]#
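Running the cat >> /etc/hosts command a second time would append the same three entries again. A minimal sketch of a repeat-safe variant follows. The function name add_host_entry is hypothetical, and it treats a hostname as present if it appears as any alias field on a non-comment line.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to FILE unless NAME is already listed on a non-comment line.
add_host_entry() (
    file=$1
    ip=$2
    name=$3
    # Field 1 is the address; fields 2..NF are hostnames and aliases.
    if awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null
    then
        :   # already present, nothing to do
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
)
```

On each machine this would be called three times, for example add_host_entry /etc/hosts 192.168.10.7 master, and likewise for slave1 and slave2.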

Generate a key pair on master: ssh-keygen -t rsa -P ''

Then, in /root/.ssh:

touch authorized_keys

chmod 600 authorized_keys

cat id_rsa.pub > authorized_keys

Generate keys the same way on slave1 and slave2 (ssh-keygen -t rsa -P '').

Then copy each id_rsa.pub to master:

scp id_rsa.pub 192.168.10.7:/root/.ssh/id_rsa.pub1 (run on slave1)

scp id_rsa.pub 192.168.10.7:/root/.ssh/id_rsa.pub2 (run on slave2)

Then, on master, run:

cat id_rsa.pub1 >> authorized_keys

cat id_rsa.pub2 >> authorized_keys

scp authorized_keys root@slave1:/root/.ssh

scp authorized_keys root@slave2:/root/.ssh

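Finally, test it: for example, ssh slave1 and ssh slave2 from master should log in without asking for a password.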
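The cat >> authorized_keys steps add a key again every time they are repeated. The sketch below merges public keys without duplicates and sets the 600 permission used above. The function name merge_pubkeys is hypothetical, and it assumes one key per line in each .pub file.

```shell
#!/bin/sh
# merge_pubkeys AUTH_FILE PUBKEY_FILE...  (hypothetical helper)
# Adds each key line from the given .pub files to AUTH_FILE exactly once.
merge_pubkeys() (
    auth=$1
    shift
    touch "$auth"
    chmod 600 "$auth"
    for pub in "$@"; do
        # "|| [ -n "$key" ]" also handles a last line without a trailing newline.
        while IFS= read -r key || [ -n "$key" ]; do
            [ -z "$key" ] && continue
            # -F fixed string, -x whole line: append only if the key is not there yet.
            grep -qxF -e "$key" "$auth" || printf '%s\n' "$key" >> "$auth"
        done < "$pub"
    done
)
```

On master this could be run as merge_pubkeys authorized_keys id_rsa.pub id_rsa.pub1 id_rsa.pub2 before copying the file to the slaves. The ssh-copy-id tool shipped with OpenSSH is another way to reach the same result.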

3. Install Hadoop

This mirror lists the currently available versions: http://apache.fayea.com/hadoop/common/

wget -c http://apache.fayea.com/hadoop/common/hadoop-2.6.5/hadoop-2.6.5.tar.gz

Extract it: tar -xvf hadoop-2.6.5.tar.gz

Set JAVA_HOME in hadoop-env.sh and yarn-env.sh by adding this line at the top of each file: export JAVA_HOME=/usr/local/src/jdk1.8.0_181

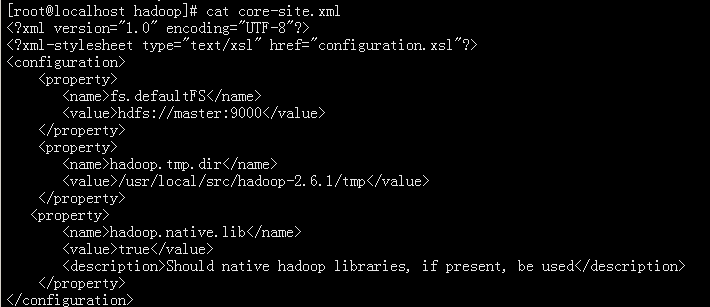

vim core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.10.7:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/src/hadoop-2.6.5/tmp</value>

</property>

<property>

<name>hadoop.native.lib</name>

<value>true</value>

<description>Should native hadoop libraries, if present, be used</description>

</property>

</configuration>
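After editing, it can help to read a property back from the file to catch typos in names or values. This is a minimal sketch with a hypothetical function name, hadoop_prop. It is not a real XML parser: it assumes each name and value element sits on a single line, with the name before its value, as in the files shown here.

```shell
#!/bin/sh
# hadoop_prop FILE NAME  (hypothetical helper)
# Prints the <value> that follows <name>NAME</name> in a Hadoop *-site.xml file.
hadoop_prop() {
    awk -v want="$2" '
        # Remember the most recent property name seen.
        /<name>/  { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
        # Print the first value that belongs to the wanted name, then stop.
        /<value>/ { if (n == want) {
                        v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                        print v; exit } }
    ' "$1"
}
```

For example, hadoop_prop core-site.xml fs.defaultFS should print the hdfs:// address configured above.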

vim hdfs-site.xml

cat hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>dfs.datanode.ipc.address</name>

<value>0.0.0.0:50020</value>

</property>

<property>

<name>dfs.datanode.http.address</name>

<value>0.0.0.0:50075</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

mv mapred-site.xml.template mapred-site.xml

vim mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

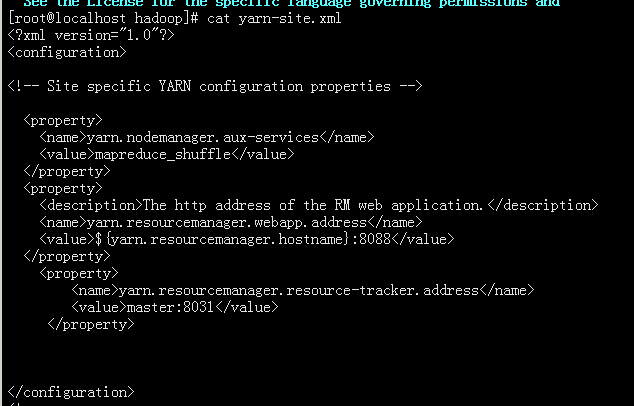

vim yarn-site.xml

[root@localhost hadoop]# cat yarn-site.xml

<?xml version="1.0"?>

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<description>The http address of the RM web application.</description>

<name>yarn.resourcemanager.webapp.address</name>

<value>${yarn.resourcemanager.hostname}:8088</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8031</value>

</property>

</configuration>

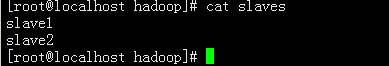

vim slaves

slave1

slave2
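The steps above configure Hadoop on one machine only, and the text does not say how the same directory reaches slave1 and slave2. One possible approach, sketched here as an assumption rather than the author's method, is to copy the configured directory to every host in the slaves file. The function name print_sync_cmds is hypothetical. It only prints the scp commands so they can be reviewed first, and it assumes root SSH access and the same install path on every node.

```shell
#!/bin/sh
# print_sync_cmds SLAVES_FILE HADOOP_DIR  (hypothetical helper, dry run only)
# Prints one "scp -r" command per host listed in SLAVES_FILE.
print_sync_cmds() {
    # Skip blank lines and comment lines in the slaves file.
    grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$1" | while IFS= read -r host; do
        # Copy into the parent directory so the same path exists on the worker.
        printf 'scp -r %s root@%s:%s\n' "$2" "$host" "$(dirname "$2")"
    done
}
```

For example, print_sync_cmds slaves /usr/local/src/hadoop-2.6.5 lists the commands. Piping that output to sh would run them.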

4. Disable the firewall on all three machines

systemctl stop firewalld

systemctl disable firewalld.service

iptables -F

systemctl status firewalld

Format the NameNode:

/usr/local/src/hadoop-2.6.5/bin/hdfs namenode -format

Start HDFS and YARN ($HADOOP_HOME below stands for the Hadoop install directory):

$HADOOP_HOME/sbin/start-dfs.sh

Once startup finishes, run jps to list the Java processes. If you see the following two processes:

5161 SecondaryNameNode

4989 NameNode

then the master node is basically OK.

Next run $HADOOP_HOME/sbin/start-yarn.sh and, when it finishes, run jps again:

2361 SecondaryNameNode

7320 ResourceManager

4989 NameNode
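Checking the jps list by eye gets tedious across three machines. The sketch below reads jps output and reports which expected daemons are missing. The function name missing_daemons is hypothetical, and it assumes the usual jps format of a PID followed by the main class name.

```shell
#!/bin/sh
# missing_daemons "NAME NAME ..."  (hypothetical helper)
# Reads jps output on stdin and prints every expected daemon that is not running.
missing_daemons() (
    jps_out=$(cat)
    for want in $1; do
        # Compare the second field exactly, so NameNode does not match SecondaryNameNode.
        printf '%s\n' "$jps_out" \
            | awk -v w="$want" '$2 == w { ok = 1 } END { exit !ok }' \
            || printf '%s\n' "$want"
    done
)
```

On master this could be run as jps | missing_daemons "NameNode SecondaryNameNode ResourceManager". On the slaves the expected names would be DataNode and NodeManager. Empty output means everything expected is running.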

View HDFS and MapReduce (YARN) through the web UIs:

http://master:50070/

http://master:8088/

Check the status

You can also get an HDFS status report with bin/hdfs dfsadmin -report.