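This experiment combines CloudStack with Open vSwitch (OVS).

Background

Open vSwitch is introduced because, with the previous approach, each Linux bridge carries one VLAN. If a zone has 20 VLANs, a separate bridge has to be created for every one of them, which is tedious to maintain.

Open vSwitch is a virtual switch, led by Nicira Networks, that runs on virtualization platforms such as KVM and Xen. On these platforms OVS provides layer-2 switching for dynamically changing endpoints, and gives good control over access policies, network isolation, and traffic monitoring in the virtual network.

It is a switch implemented in software, and is commonly described as part of software-defined networking.

Where OVS applies: both advanced and basic networking, and it can be used even with a single NIC.

The official Open vSwitch website is shown below.

Platforms supported by Open vSwitch

KVM, Xen, OpenStack, VirtualBox and others. CloudStack is not listed, but it is supported as well.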

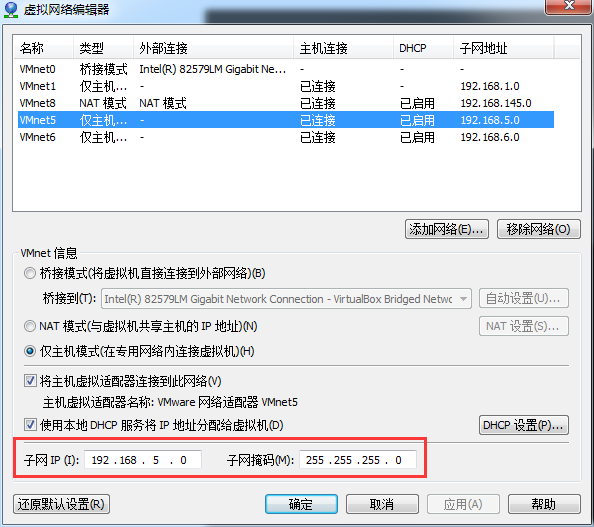

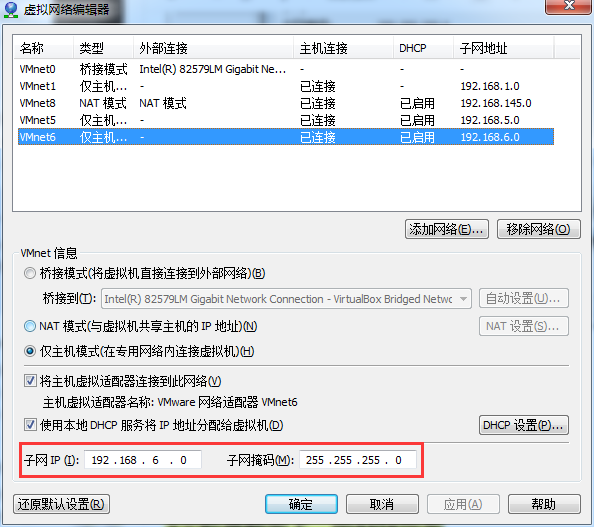

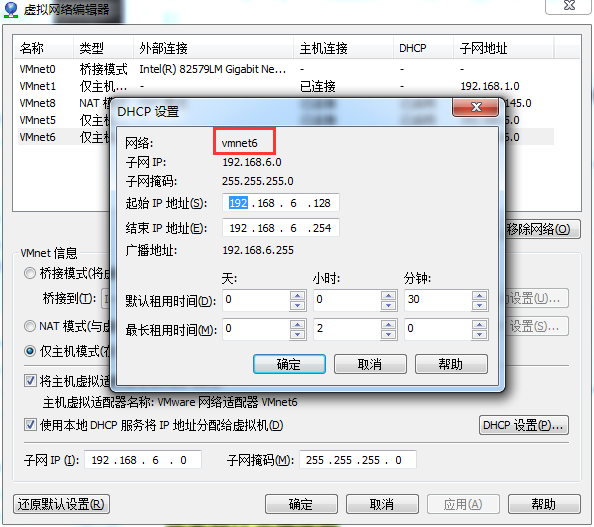

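IP address plan

Shut down the 3 hosts and add 2 NICs to each, so that every machine ends up with 3 NICs.

The 3 NICs on each host simulate the management network, the storage network, and the guest network respectively.

eth0: management network. It carries the least traffic and is used to manage the hosts.

eth1: storage network. It does not cross VLANs and connects each host to its storage.

eth2: guest network. It carries user requests, that is, access coming from the public network.

The master also gets a guest-network NIC so that it can act as the gateway for the agents' guest network when the zone is added later.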

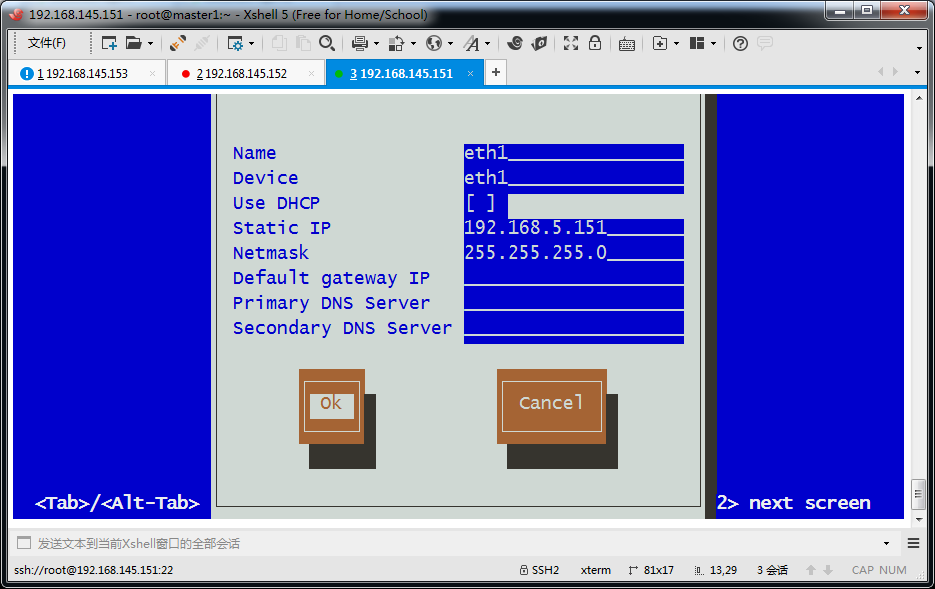

After the hardware is added, configure IP addresses on the new NICs.

The final eth1 and eth2 addresses of the 3 machines are shown below.

The original eth0 stays unchanged. Make sure the 3 machines can ping each other over the newly added NICs.

master

    [root@master1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1
    # Please read /usr/share/doc/initscripts-*/sysconfig.txt
    # for the documentation of these parameters.
    DEVICE=eth1
    BOOTPROTO=none
    NETMASK=255.255.255.0
    TYPE=Ethernet
    HWADDR=00:0c:29:8f:1e:a2
    IPADDR=192.168.5.151
    [root@master1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth2
    # Please read /usr/share/doc/initscripts-*/sysconfig.txt
    # for the documentation of these parameters.
    DEVICE=eth2
    BOOTPROTO=none
    NETMASK=255.255.255.0
    TYPE=Ethernet
    IPADDR=192.168.6.151
    [root@master1 ~]#

agent1

    [root@agent1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1
    # Please read /usr/share/doc/initscripts-*/sysconfig.txt
    # for the documentation of these parameters.
    DEVICE=eth1
    BOOTPROTO=none
    NETMASK=255.255.255.0
    TYPE=Ethernet
    HWADDR=00:0c:29:ab:d5:b3
    IPADDR=192.168.5.152
    [root@agent1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth2
    # Please read /usr/share/doc/initscripts-*/sysconfig.txt
    # for the documentation of these parameters.
    DEVICE=eth2
    BOOTPROTO=none
    NETMASK=255.255.255.0
    TYPE=Ethernet
    IPADDR=192.168.6.152
    [root@agent1 ~]#

agent2

    [root@agent2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1
    DEVICE=eth1
    BOOTPROTO=none
    NETMASK=255.255.255.0
    TYPE=Ethernet
    IPADDR=192.168.5.153
    [root@agent2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth2
    DEVICE=eth2
    BOOTPROTO=none
    NETMASK=255.255.255.0
    TYPE=Ethernet
    IPADDR=192.168.6.153
    [root@agent2 ~]#
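The per-NIC files above all follow one pattern, so they could be generated instead of typed by hand. The following is only a sketch: the helper name write_static_ifcfg is hypothetical (it is not part of initscripts), and ONBOOT=yes is an added assumption that the files above do not set.

```shell
#!/bin/sh
# Hypothetical helper: write a minimal static ifcfg file for one NIC.
# Usage: write_static_ifcfg <dir> <device> <ip> [netmask]
write_static_ifcfg() {
    dir=$1; dev=$2; ip=$3; mask=${4:-255.255.255.0}
    cat > "$dir/ifcfg-$dev" <<EOF
DEVICE=$dev
TYPE=Ethernet
ONBOOT=yes
BOOTPROTO=none
IPADDR=$ip
NETMASK=$mask
EOF
}

# Example for agent1, left commented out so that sourcing this file
# does not touch the real configuration:
# write_static_ifcfg /etc/sysconfig/network-scripts eth1 192.168.5.152
# write_static_ifcfg /etc/sysconfig/network-scripts eth2 192.168.6.152
```

After writing the files, the network service still has to be restarted and the addresses checked with ping from the other two machines.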

Clean up what the previous experiment left behind

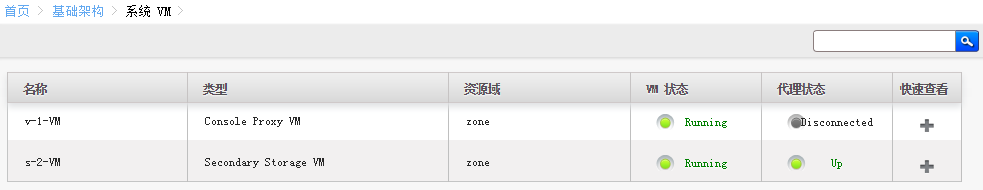

Shut down the system VMs

Log in to the web UI. Even after you click stop, the system VMs start again automatically; ignore this.

Disable the zone

This is needed because the previous data is about to be wiped.

On the agents, remove the bridge setting from eth0. Do this on both agents.

Delete this line: BRIDGE=cloudbr0

    [root@agent1 ~]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    cloud0          8000.fe00a9fe00e0       no              vnet0
    cloudbr0        8000.000c29abd5a9       no              eth0
                                                            vnet1
                                                            vnet2
    virbr0          8000.525400ea877d       yes             virbr0-nic
    [root@agent1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0
    DEVICE=eth0
    TYPE=Ethernet
    ONBOOT=yes
    BOOTPROTO=none
    IPADDR=192.168.145.152
    NETMASK=255.255.255.0
    GATEWAY=192.168.145.2
    DNS1=10.0.1.11
    NM_CONTROLLED=no
    BRIDGE=cloudbr0
    IPV6INIT=no
    USERCTL=no
    [root@agent1 ~]# vim /etc/sysconfig/network-scripts/ifcfg-eth0
    [root@agent1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0
    DEVICE=eth0
    TYPE=Ethernet
    ONBOOT=yes
    BOOTPROTO=none
    IPADDR=192.168.145.152
    NETMASK=255.255.255.0
    GATEWAY=192.168.145.2
    DNS1=10.0.1.11
    NM_CONTROLLED=no
    IPV6INIT=no
    USERCTL=no
    [root@agent1 ~]#
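The manual vim edit above can also be done non-interactively, which helps when the same change has to be repeated on both agents. A minimal sketch, assuming a hypothetical helper named remove_bridge_line that keeps a .bak copy next to the file:

```shell
#!/bin/sh
# Hypothetical helper: drop the BRIDGE=... line from an ifcfg file,
# keeping the original content in <file>.bak.
# Usage: remove_bridge_line <ifcfg-file>
remove_bridge_line() {
    file=$1
    cp -p "$file" "$file.bak" || return 1
    # Filter from the backup into the original path.
    sed '/^BRIDGE=/d' "$file.bak" > "$file"
}

# Example, left commented out:
# remove_bridge_line /etc/sysconfig/network-scripts/ifcfg-eth0
```

Only the line that starts with BRIDGE= is removed; every other setting, including the static IP on eth0, is left as it was.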

Delete the cloudbr0 file

OVS and the Linux bridge are different things and will conflict. Do the same on agent2.

    [root@agent1 ~]# cd /etc/sysconfig/network-scripts
    [root@agent1 network-scripts]# ls
    ifcfg-cloudbr0  ifdown-ipv6    ifup-bnep   ifup-routes
    ifcfg-eth0      ifdown-isdn    ifup-eth    ifup-sit
    ifcfg-eth1      ifdown-post    ifup-ippp   ifup-tunnel
    ifcfg-eth2      ifdown-ppp     ifup-ipv6   ifup-wireless
    ifcfg-lo        ifdown-routes  ifup-isdn   init.ipv6-global
    ifdown          ifdown-sit     ifup-plip   net.hotplug
    ifdown-bnep     ifdown-tunnel  ifup-plusb  network-functions
    ifdown-eth      ifup           ifup-post   network-functions-ipv6
    ifdown-ippp     ifup-aliases   ifup-ppp
    [root@agent1 network-scripts]# rm -f ifcfg-cloudbr0
    [root@agent1 network-scripts]#

Reboot the hosts

The system VMs are still running, so reboot the hosts to clear their state completely.

    [root@agent1 ~]# virsh list
     Id    Name                           State
    ----------------------------------------------------
     2     v-2-VM                         running
    [root@agent1 ~]#
    [root@agent2 ~]# virsh list
     Id    Name                           State
    ----------------------------------------------------
     2     s-1-VM                         running
    [root@agent2 ~]#

After the reboot, the vnet devices and cloudbr0 are gone (the same on both agents).

    [root@agent1 ~]# ip ad
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:d5:a9 brd ff:ff:ff:ff:ff:ff
        inet 192.168.145.152/24 brd 192.168.145.255 scope global eth0
        inet6 fe80::20c:29ff:feab:d5a9/64 scope link
           valid_lft forever preferred_lft forever
    3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:d5:b3 brd ff:ff:ff:ff:ff:ff
        inet 192.168.5.152/24 brd 192.168.5.255 scope global eth1
        inet6 fe80::20c:29ff:feab:d5b3/64 scope link
           valid_lft forever preferred_lft forever
    4: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:d5:bd brd ff:ff:ff:ff:ff:ff
        inet 192.168.6.152/24 brd 192.168.6.255 scope global eth2
        inet6 fe80::20c:29ff:feab:d5bd/64 scope link
           valid_lft forever preferred_lft forever
    5: virbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether 52:54:00:ea:87:7d brd ff:ff:ff:ff:ff:ff
        inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
    6: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 500
        link/ether 52:54:00:ea:87:7d brd ff:ff:ff:ff:ff:ff
    [root@agent1 ~]#

After the reboot, only the built-in libvirt bridge remains (the same on both agents).

    [root@agent1 ~]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    virbr0          8000.525400ea877d       yes             virbr0-nic
    [root@agent1 ~]#

The system VMs are gone as well (on both agents).

    [root@agent1 ~]# virsh list --all
     Id    Name                           State
    ----------------------------------------------------
    [root@agent1 ~]#

On the master, stop the cloudstack-management service

    [root@master1 ~]# /etc/init.d/cloudstack-management stop
    Stopping cloudstack-management:                            [FAILED]
    [root@master1 ~]# /etc/init.d/cloudstack-management stop
    Stopping cloudstack-management:                            [  OK  ]
    [root@master1 ~]# /etc/init.d/cloudstack-management status
    cloudstack-management is stopped
    [root@master1 ~]#

On the master, drop the databases

    [root@master1 ~]# mysql -uroot -p123456
    Welcome to the MySQL monitor.  Commands end with ; or \g.
    Your MySQL connection id is 13
    Server version: 5.1.73-log Source distribution
    Copyright (c) 2000, 2013, Oracle and/or its affiliates. All rights reserved.
    Oracle is a registered trademark of Oracle Corporation and/or its
    affiliates. Other names may be trademarks of their respective
    owners.
    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
    mysql> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | information_schema |
    | cloud              |
    | cloud_usage        |
    | mysql              |
    | test               |
    +--------------------+
    5 rows in set (0.00 sec)
    mysql> drop database cloud;
    Query OK, 274 rows affected (1.17 sec)
    mysql> drop database cloud_usage;
    Query OK, 25 rows affected (0.13 sec)
    mysql> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | information_schema |
    | mysql              |
    | test               |
    +--------------------+
    3 rows in set (0.00 sec)
    mysql> exit
    Bye
    [root@master1 ~]#
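The interactive session above can be reduced to two statements piped into the mysql client. This is a sketch only: the function name cloudstack_drop_sql is hypothetical, and IF EXISTS is added here so that a second run does not fail; the session above used plain drop database.

```shell
#!/bin/sh
# Hypothetical helper: print the SQL that removes the two CloudStack
# databases named in the session above (cloud and cloud_usage).
cloudstack_drop_sql() {
    for db in cloud cloud_usage; do
        printf 'DROP DATABASE IF EXISTS `%s`;\n' "$db"
    done
}

# Example, left commented out (mysql prompts for the root password):
# cloudstack_drop_sql | mysql -uroot -p
```

Stop cloudstack-management before running it, as in the step above, so the management server is not using the databases while they are dropped.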

Install the OVS packages on the agents

Install OVS on both agents.

Open vSwitch serves as a replacement for the Linux bridge and is installed only on the hypervisor hosts. The last two RPM packages in the listing are the OVS packages.

    [root@agent1 tools]# ls
    cloudstack-agent-4.8.0-1.el6.x86_64.rpm
    cloudstack-baremetal-agent-4.8.0-1.el6.x86_64.rpm
    cloudstack-cli-4.8.0-1.el6.x86_64.rpm
    cloudstack-common-4.8.0-1.el6.x86_64.rpm
    cloudstack-management-4.8.0-1.el6.x86_64.rpm
    cloudstack-usage-4.8.0-1.el6.x86_64.rpm
    kmod-openvswitch-2.3.1-1.el6.x86_64.rpm
    openvswitch-2.3.1-1.x86_64.rpm

The installation runs as follows (run it on both agents; it is not needed on the master).

    [root@agent1 tools]# yum install kmod-openvswitch-2.3.1-1.el6.x86_64.rpm openvswitch-2.3.1-1.x86_64.rpm -y
    Loaded plugins: fastestmirror, security
    Setting up Install Process
    Examining kmod-openvswitch-2.3.1-1.el6.x86_64.rpm: kmod-openvswitch-2.3.1-1.el6.x86_64
    Marking kmod-openvswitch-2.3.1-1.el6.x86_64.rpm to be installed
    Loading mirror speeds from cached hostfile
    epel/metalink                                  | 6.2 kB     00:00
     * epel: mirror.premi.st
    base                                           | 3.7 kB     00:00
    centos-gluster37                               | 2.9 kB     00:00
    epel                                           | 4.3 kB     00:00
    epel/primary_db                                | 5.9 MB     00:24
    extras                                         | 3.4 kB     00:00
    updates                                        | 3.4 kB     00:00
    Examining openvswitch-2.3.1-1.x86_64.rpm: openvswitch-2.3.1-1.x86_64
    Marking openvswitch-2.3.1-1.x86_64.rpm to be installed
    Resolving Dependencies
    --> Running transaction check
    ---> Package kmod-openvswitch.x86_64 0:2.3.1-1.el6 will be installed
    ---> Package openvswitch.x86_64 0:2.3.1-1 will be installed
    --> Finished Dependency Resolution
    Dependencies Resolved
    =================================================================================
     Package           Arch    Version      Repository                            Size
    =================================================================================
    Installing:
     kmod-openvswitch  x86_64  2.3.1-1.el6  /kmod-openvswitch-2.3.1-1.el6.x86_64  6.5 M
     openvswitch       x86_64  2.3.1-1      /openvswitch-2.3.1-1.x86_64           8.0 M
    Transaction Summary
    =================================================================================
    Install       2 Package(s)
    Total size: 14 M
    Installed size: 14 M
    Downloading Packages:
    Running rpm_check_debug
    Running Transaction Test
    Transaction Test Succeeded
    Running Transaction
      Installing : kmod-openvswitch-2.3.1-1.el6.x86_64                      1/2
      Installing : openvswitch-2.3.1-1.x86_64                               2/2
      Verifying  : openvswitch-2.3.1-1.x86_64                               1/2
      Verifying  : kmod-openvswitch-2.3.1-1.el6.x86_64                      2/2
    Installed:
      kmod-openvswitch.x86_64 0:2.3.1-1.el6      openvswitch.x86_64 0:2.3.1-1
    Complete!
    [root@agent1 tools]#

Configure cloudstack-agent to use OVS

Edit /etc/cloudstack/agent/agent.properties and add the following lines:

    network.bridge.type=openvswitch
    libvirt.vif.driver=com.cloud.hypervisor.kvm.resource.OvsVifDriver

This is the original configuration file:

    [root@agent1 tools]# cat /etc/cloudstack/agent/agent.properties
    #Storage
    #Mon Feb 13 22:57:04 CST 2017
    guest.network.device=cloudbr0
    workers=5
    private.network.device=cloudbr0
    port=8250
    resource=com.cloud.hypervisor.kvm.resource.LibvirtComputingResource
    pod=1
    zone=1
    hypervisor.type=kvm
    guid=a8be994b-26bd-39d6-a72f-693f06476873
    public.network.device=cloudbr0
    cluster=1
    local.storage.uuid=cd049ede-7106-45ba-acd4-1f229405f272
    domr.scripts.dir=scripts/network/domr/kvm
    LibvirtComputingResource.id=4
    host=192.168.145.151
    [root@agent1 tools]#

Append the 2 lines above to the end of the file (do the same on agent2):

    [root@agent1 tools]# cat /etc/cloudstack/agent/agent.properties
    #Storage
    #Mon Feb 13 22:57:04 CST 2017
    guest.network.device=cloudbr0
    workers=5
    private.network.device=cloudbr0
    port=8250
    resource=com.cloud.hypervisor.kvm.resource.LibvirtComputingResource
    pod=1
    zone=1
    hypervisor.type=kvm
    guid=a8be994b-26bd-39d6-a72f-693f06476873
    public.network.device=cloudbr0
    cluster=1
    local.storage.uuid=cd049ede-7106-45ba-acd4-1f229405f272
    domr.scripts.dir=scripts/network/domr/kvm
    LibvirtComputingResource.id=4
    host=192.168.145.151
    network.bridge.type=openvswitch
    libvirt.vif.driver=com.cloud.hypervisor.kvm.resource.OvsVifDriver
    [root@agent1 tools]#
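Appending by hand works once, but running the step twice would leave duplicate keys in the file. A minimal sketch of an idempotent alternative, assuming a hypothetical helper named set_property that replaces an existing key or appends a new one:

```shell
#!/bin/sh
# Hypothetical helper: set key=value in a Java-style properties file.
# An existing line with exactly this key is rewritten; otherwise the
# pair is appended at the end. Comment lines are left untouched.
# Usage: set_property <file> <key> <value>
set_property() {
    file=$1; key=$2; value=$3
    tmp=$(mktemp) || return 1
    awk -F= -v k="$key" -v v="$value" '
        $1 == k { print k "=" v; found = 1; next }
        { print }
        END { if (!found) print k "=" v }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Example, left commented out:
# props=/etc/cloudstack/agent/agent.properties
# set_property "$props" network.bridge.type openvswitch
# set_property "$props" libvirt.vif.driver com.cloud.hypervisor.kvm.resource.OvsVifDriver
```

Writing back with cat rather than mv keeps the ownership and permissions of the original file.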

Start the service so that both agents load the openvswitch kernel module

    [root@agent1 tools]# lsmod | grep openvswitch
    [root@agent1 tools]# /etc/init.d/openvswitch start
    /etc/openvswitch/conf.db does not exist ... (warning).
    Creating empty database /etc/openvswitch/conf.db           [  OK  ]
    Starting ovsdb-server                                      [  OK  ]
    Configuring Open vSwitch system IDs                        [  OK  ]
    Inserting openvswitch module                               [  OK  ]
    Starting ovs-vswitchd                                      [  OK  ]
    Enabling remote OVSDB managers                             [  OK  ]
    [root@agent1 tools]# chkconfig openvswitch on
    [root@agent1 tools]# lsmod | grep openvswitch
    openvswitch            88783  0
    libcrc32c               1246  1 openvswitch
    [root@agent1 tools]#

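Tune the glusterfs configuration on the agents

Edit /etc/glusterfs/glusterd.vol and add this line:

    option rpc-auth-allow-insecure on

By default glusterd only accepts requests that come from ports below 1024, whereas QEMU sends its requests from ports above 1024, so glusterd's security mechanism blocks QEMU's requests unless this option is set.

The volume that will be used needs a matching setting. For a volume named testvol, run the following and then restart the volume:

    gluster volume set testvol server.allow-insecure on

Note: if starting a VM fails with an error such as: SETVOLUME on remote-host failed: Authentication failed

you can stop the bricks in the cluster, unmount all mount points, and then run the command below. In it, brickname stands for the volume name and the quoted value is a placeholder for the allowed server addresses (for example 192.*):

    gluster volume set brickname auth.allow 'serverIp like 192.*'

The file before and after the change: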

    [root@agent1 tools]# cat /etc/glusterfs/glusterd.vol
    volume management
        type mgmt/glusterd
        option working-directory /var/lib/glusterd
        option transport-type socket,rdma
        option transport.socket.keepalive-time 10
        option transport.socket.keepalive-interval 2
        option transport.socket.read-fail-log off
        option ping-timeout 0
        option event-threads 1
    #   option base-port 49152
    end-volume
    [root@agent1 tools]# vim /etc/glusterfs/glusterd.vol
    [root@agent1 tools]# cat /etc/glusterfs/glusterd.vol
    volume management
        type mgmt/glusterd
        option working-directory /var/lib/glusterd
        option transport-type socket,rdma
        option transport.socket.keepalive-time 10
        option transport.socket.keepalive-interval 2
        option transport.socket.read-fail-log off
        option ping-timeout 0
        option event-threads 1
        option rpc-auth-allow-insecure on
    #   option base-port 49152
    end-volume
    [root@agent1 tools]#

Add the same option on agent2

    [root@agent2 tools]# cat /etc/glusterfs/glusterd.vol
    volume management
        type mgmt/glusterd
        option working-directory /var/lib/glusterd
        option transport-type socket,rdma
        option transport.socket.keepalive-time 10
        option transport.socket.keepalive-interval 2
        option transport.socket.read-fail-log off
        option ping-timeout 0
        option event-threads 1
        option rpc-auth-allow-insecure on
    #   option base-port 49152
    end-volume
    [root@agent2 tools]#
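Since the same line has to be added on every gluster node, the edit could be scripted. The sketch below assumes a hypothetical helper named allow_insecure_rpc. It inserts the option directly before end-volume, which is a slightly different position from the hand-edited file above, on the assumption that the order of options inside the volume block does not matter.

```shell
#!/bin/sh
# Hypothetical helper: add "option rpc-auth-allow-insecure on" to a
# glusterd.vol file, just before "end-volume", unless it is already there.
# Usage: allow_insecure_rpc <glusterd.vol>
allow_insecure_rpc() {
    file=$1
    opt='option rpc-auth-allow-insecure on'
    # Nothing to do if an uncommented copy of the option already exists.
    grep -q "^[[:space:]]*$opt" "$file" && return 0
    tmp=$(mktemp) || return 1
    awk -v opt="$opt" '
        /^[[:space:]]*end-volume/ { print "    " opt }
        { print }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Example, left commented out:
# allow_insecure_rpc /etc/glusterfs/glusterd.vol
```

glusterd still has to be restarted afterwards for the option to take effect.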

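Apply the security setting from the command line

This command only needs to be run on one gluster node; it takes effect for the whole cluster.

    [root@agent1 tools]# gluster volume set gv2 server.allow-insecure on
    volume set: success
    [root@agent1 tools]#

Restart the glusterd service on the gluster nodes

After the change, restart glusterd on both agents. Mind the service name: it is glusterd, not the glusterfsd shown first below.

If glusterd had never been started before this point, a restart is not strictly needed. To be on the safe side, restart it anyway.

Does restarting glusterd affect KVM? Hardly at all. A stop followed later by a separate start, however, does have an impact.

    [root@agent2 tools]# /etc/init.d/glusterfsd restart
    Stopping glusterfsd:                                       [FAILED]
    [root@agent2 tools]# /etc/init.d/glusterd restart
    Stopping glusterd:                                         [  OK  ]
    Starting glusterd:                                         [  OK  ]
    [root@agent2 tools]#

That completes the glusterfs part.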

Configure the NIC files on the agents for OVS

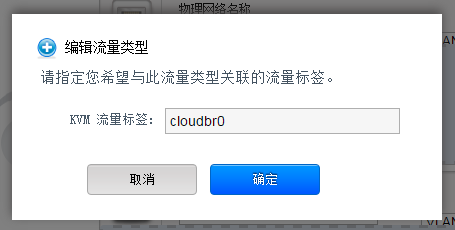

Modify the NIC parameters on the agents and create the OVS bridge cloudbr2. The bridge name is arbitrary; cloudbr2 is used here so that it matches eth2.

Do this on both agents.

    [root@agent2 tools]# cd /etc/sysconfig/network-scripts/
    [root@agent2 network-scripts]# vim ifcfg-cloudbr2
    [root@agent2 network-scripts]# vim ifcfg-eth2
    [root@agent2 network-scripts]# cat ifcfg-cloudbr2
    DEVICE=cloudbr2
    ONBOOT=yes
    DEVICETYPE=ovs
    TYPE=OVSBridge
    BOOTPROTO=static
    STP=no
    NM_CONTROLLED=no
    USERCTL=no

In ifcfg-eth2, remove the IP address and related settings:

    [root@agent2 network-scripts]# cat ifcfg-eth2
    # Please read /usr/share/doc/initscripts-*/sysconfig.txt
    # for the documentation of these parameters.
    DEVICE=eth2
    BOOTPROTO=none
    TYPE=OVSPort
    DEVICETYPE=ovs
    OVS_BRIDGE=cloudbr2
    ONBOOT=yes
    USERCTL=no
    NM_CONTROLLED=no
    [root@agent2 network-scripts]#
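The two files above form a pair: one declares the OVS bridge, the other turns the physical NIC into a port of that bridge. As a sketch, both can be written by one function so the names stay consistent. The helper name write_ovs_bridge_ifcfg is hypothetical; the key/value pairs are copied from the files shown above.

```shell
#!/bin/sh
# Hypothetical helper: write the ifcfg pair for an OVS bridge and the
# physical NIC that is attached to it as a port.
# Usage: write_ovs_bridge_ifcfg <dir> <bridge> <nic>
write_ovs_bridge_ifcfg() {
    dir=$1; bridge=$2; nic=$3
    cat > "$dir/ifcfg-$bridge" <<EOF
DEVICE=$bridge
ONBOOT=yes
DEVICETYPE=ovs
TYPE=OVSBridge
BOOTPROTO=static
STP=no
NM_CONTROLLED=no
USERCTL=no
EOF
    # The NIC carries no IP settings of its own once it is an OVS port.
    cat > "$dir/ifcfg-$nic" <<EOF
DEVICE=$nic
BOOTPROTO=none
TYPE=OVSPort
DEVICETYPE=ovs
OVS_BRIDGE=$bridge
ONBOOT=yes
USERCTL=no
NM_CONTROLLED=no
EOF
}

# Example, left commented out:
# write_ovs_bridge_ifcfg /etc/sysconfig/network-scripts cloudbr2 eth2
```

For a quick manual check outside the network scripts, roughly the same topology can be built with ovs-vsctl add-br cloudbr2 followed by ovs-vsctl add-port cloudbr2 eth2.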

After the change, restart the network service on both agents

    [root@agent2 network-scripts]# /etc/init.d/network restart
    Shutting down interface eth0:                              [  OK  ]
    Shutting down interface eth1:                              [  OK  ]
    Shutting down interface eth2:                              [  OK  ]
    Shutting down loopback interface:                          [  OK  ]
    Bringing up loopback interface:                            [  OK  ]
    Bringing up interface cloudbr2:                            [  OK  ]
    Bringing up interface eth0:  Determining if ip address 192.168.145.153 is already in use for device eth0...
                                                               [  OK  ]
    Bringing up interface eth1:  Determining if ip address 192.168.5.153 is already in use for device eth1...
                                                               [  OK  ]
    Bringing up interface eth2:                                [  OK  ]
    [root@agent2 network-scripts]#

Check the interfaces after the restart: cloudbr2 has been added.

    [root@agent2 network-scripts]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:95:fa brd ff:ff:ff:ff:ff:ff
        inet 192.168.145.153/24 brd 192.168.145.255 scope global eth0
        inet6 fe80::20c:29ff:feab:95fa/64 scope link
           valid_lft forever preferred_lft forever
    3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:95:04 brd ff:ff:ff:ff:ff:ff
        inet 192.168.5.153/24 brd 192.168.5.255 scope global eth1
        inet6 fe80::20c:29ff:feab:9504/64 scope link
           valid_lft forever preferred_lft forever
    4: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:95:0e brd ff:ff:ff:ff:ff:ff
        inet6 fe80::20c:29ff:feab:950e/64 scope link
           valid_lft forever preferred_lft forever
    5: virbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether 52:54:00:a1:8e:64 brd ff:ff:ff:ff:ff:ff
        inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
    6: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 500
        link/ether 52:54:00:a1:8e:64 brd ff:ff:ff:ff:ff:ff
    8: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN
        link/ether b6:34:b6:35:e6:ca brd ff:ff:ff:ff:ff:ff
    9: cloudbr2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether 00:0c:29:ab:95:0e brd ff:ff:ff:ff:ff:ff
        inet6 fe80::4893:c0ff:fe7a:b14e/64 scope link
           valid_lft forever preferred_lft forever
    [root@agent2 network-scripts]#

Check the OVS virtual switch on both agents after the restart

    [root@agent2 network-scripts]# ovs-vsctl show
    c17bcc03-f6d0-4368-9f41-004598ec7336
        Bridge "cloudbr2"
            Port "eth2"
                Interface "eth2"
            Port "cloudbr2"
                Interface "cloudbr2"
                    type: internal
        ovs_version: "2.3.1"
    [root@agent2 network-scripts]#

    [root@agent1 network-scripts]# ovs-vsctl show
    b8d2eae6-27c2-4f94-bb28-81635229141d
        Bridge "cloudbr2"
            Port "eth2"
                Interface "eth2"
            Port "cloudbr2"
                Interface "cloudbr2"
                    type: internal
        ovs_version: "2.3.1"
    [root@agent1 network-scripts]#

Configuration on the master

Create the databases and tables

Run the database initialization, which imports the data.

    [root@master1 ~]# cloudstack-setup-databases cloud:123456@localhost --deploy-as=root:123456
    Mysql user name:cloud                                                           [ OK ]
    Mysql user password:******                                                      [ OK ]
    Mysql server ip:localhost                                                       [ OK ]
    Mysql server port:3306                                                          [ OK ]
    Mysql root user name:root                                                       [ OK ]
    Mysql root user password:******                                                 [ OK ]
    Checking Cloud database files ...                                               [ OK ]
    Checking local machine hostname ...                                             [ OK ]
    Checking SELinux setup ...                                                      [ OK ]
    Detected local IP address as 192.168.145.151, will use as cluster management server node IP[ OK ]
    Preparing /etc/cloudstack/management/db.properties                              [ OK ]
    Applying /usr/share/cloudstack-management/setup/create-database.sql             [ OK ]
    Applying /usr/share/cloudstack-management/setup/create-schema.sql               [ OK ]
    Applying /usr/share/cloudstack-management/setup/create-database-premium.sql     [ OK ]
    Applying /usr/share/cloudstack-management/setup/create-schema-premium.sql       [ OK ]
    Applying /usr/share/cloudstack-management/setup/server-setup.sql                [ OK ]
    Applying /usr/share/cloudstack-management/setup/templates.sql                   [ OK ]
    Processing encryption ...                                                       [ OK ]
    Finalizing setup ...                                                            [ OK ]
    CloudStack has successfully initialized database, you can check your database configuration in /etc/cloudstack/management/db.properties
    [root@master1 ~]#

Initialize the master configuration

Once the database is set up, start the master with the command below; it performs some one-time initialization.

Do not start it this way afterwards. The initialization only needs to run once.

    [root@master1 ~]# /etc/init.d/cloudstack-management status
    cloudstack-management is stopped
    [root@master1 ~]# cloudstack-setup-management
    Starting to configure CloudStack Management Server:
    Configure Firewall ...        [OK]
    Configure CloudStack Management Server ...[OK]
    CloudStack Management Server setup is Done!
    [root@master1 ~]#

Import the system VM template on the master

Run the command below on the master.

It imports the template: the system template is copied to the target path and matching records are written to the database.

The previous experiment had already copied the template to that path, but the database has since been dropped and the records no longer exist, so the template must be imported again.

    /usr/share/cloudstack-common/scripts/storage/secondary/cloud-install-sys-tmplt -m /export/secondary -f /tools/systemvm64template-4.6.0-kvm.qcow2.bz2 -h kvm -F

This step places the system VM template on secondary storage. The run looks like this:

    [root@master1 ~]# /usr/share/cloudstack-common/scripts/storage/secondary/cloud-install-sys-tmplt \
    > -m /export/secondary \
    > -f /tools/systemvm64template-4.6.0-kvm.qcow2.bz2 \
    > -h kvm -F
    Uncompressing to /usr/share/cloudstack-common/scripts/storage/secondary/fa050b43-3f2e-4dd7-aecc-119ef1851039.qcow2.tmp (type bz2)...could take a long time
    Moving to /export/secondary/template/tmpl/1/3///fa050b43-3f2e-4dd7-aecc-119ef1851039.qcow2...could take a while
    Successfully installed system VM template /tools/systemvm64template-4.6.0-kvm.qcow2.bz2 to /export/secondary/template/tmpl/1/3/
    [root@master1 ~]#

Restart cloudstack-management

    [root@master1 ~]# /etc/init.d/cloudstack-management restart
    Stopping cloudstack-management:                            [FAILED]
    Starting cloudstack-management:                            [  OK  ]
    [root@master1 ~]# /etc/init.d/cloudstack-management restart
    Stopping cloudstack-management:                            [  OK  ]
    Starting cloudstack-management:                            [  OK  ]
    [root@master1 ~]#

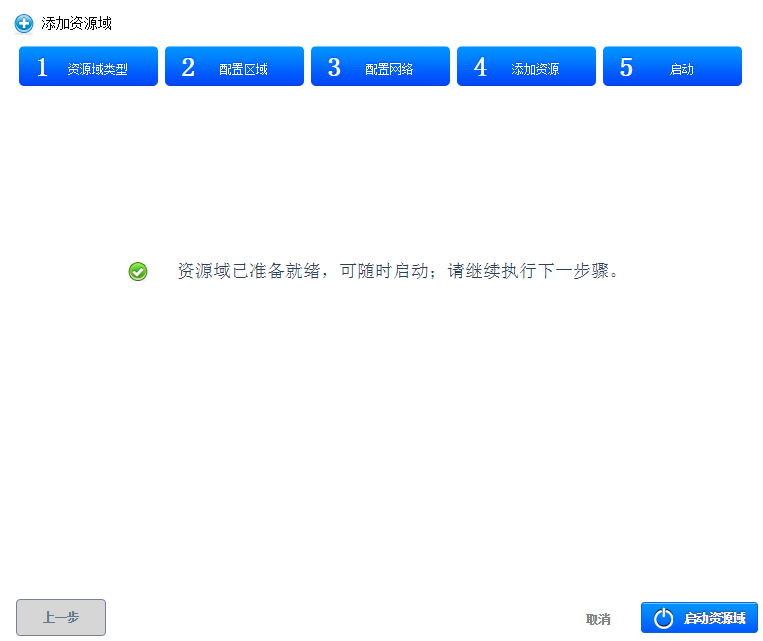

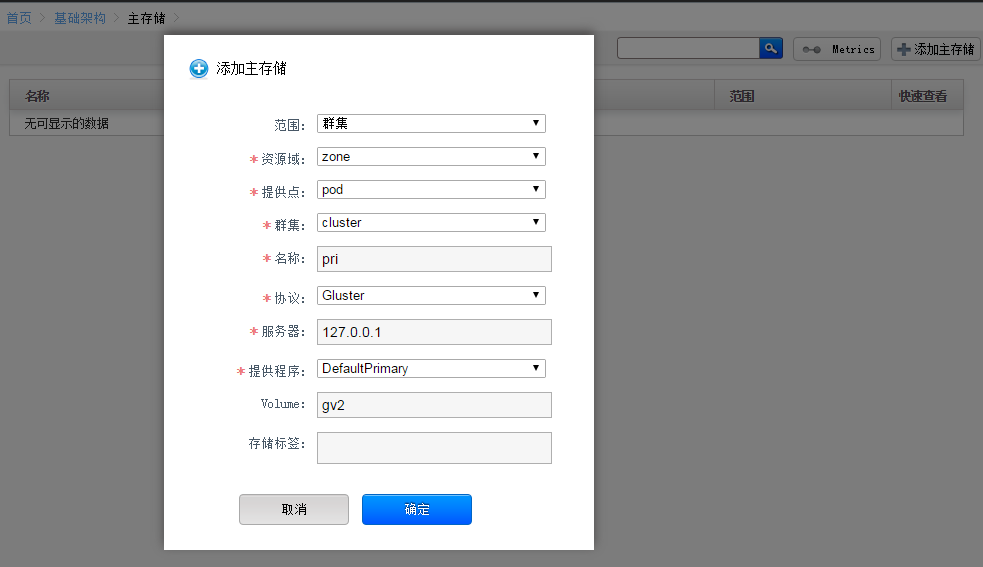

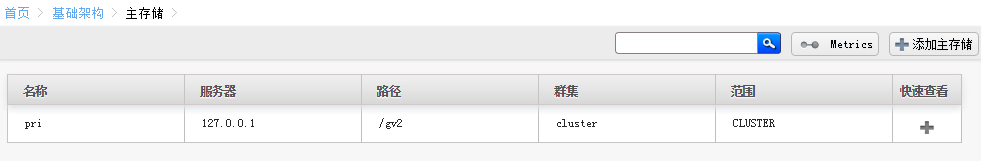

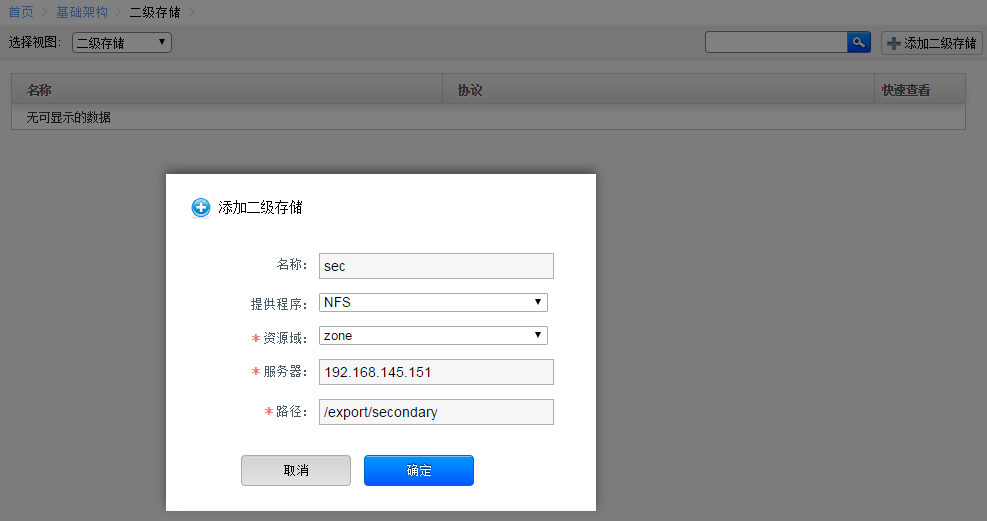

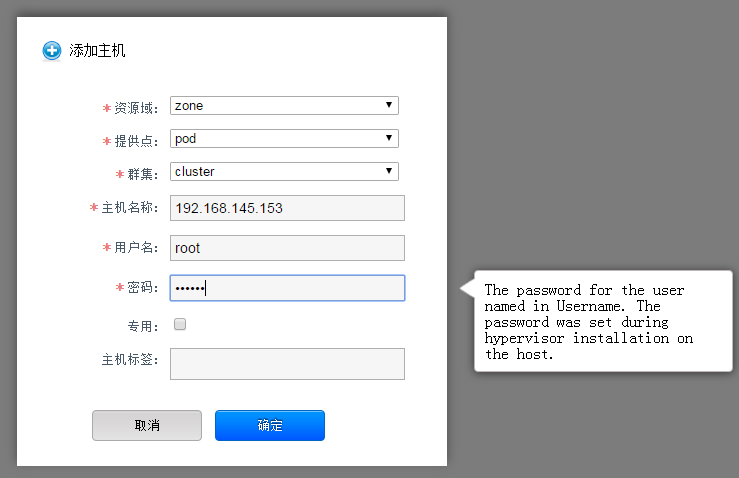

Add a zone

Before proceeding, stop iptables on all 3 machines.

    [root@master1 ~]# /etc/init.d/iptables stop
    iptables: Setting chains to policy ACCEPT: filter          [  OK  ]
    iptables: Flushing firewall rules:                         [  OK  ]
    iptables: Unloading modules:                               [  OK  ]
    [root@master1 ~]#

Also confirm beforehand that gluster is healthy.

    [root@agent1 ~]# gluster volume status
    Status of volume: gv2
    Gluster process                             TCP Port  RDMA Port  Online  Pid
    ------------------------------------------------------------------------------
    Brick agent1:/export/primary                49152     0          Y       27248
    Brick agent2:/export/primary                49152     0          Y       27372
    NFS Server on localhost                     2049      0          Y       27232
    Self-heal Daemon on localhost               N/A       N/A        Y       27241
    NFS Server on agent2                        2049      0          Y       27357
    Self-heal Daemon on agent2                  N/A       N/A        Y       27367
    Task Status of Volume gv2
    ------------------------------------------------------------------------------
    There are no active volume tasks
    [root@agent1 ~]#
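Reading the Online column by eye is fine for two bricks; with more bricks a small filter helps. The sketch below assumes the single-line-per-brick layout shown above (other gluster versions may wrap long brick names onto a second line, which this does not handle). The function name offline_bricks is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read "gluster volume status" output on stdin and
# print every brick whose Online column (second to last field) is not Y.
offline_bricks() {
    awk '$1 == "Brick" && $(NF-1) != "Y" { print $2 }'
}

# Example, left commented out; no output means all bricks are online:
# gluster volume status gv2 | offline_bricks
```

An empty result for gv2 corresponds to the healthy state shown in the listing above.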

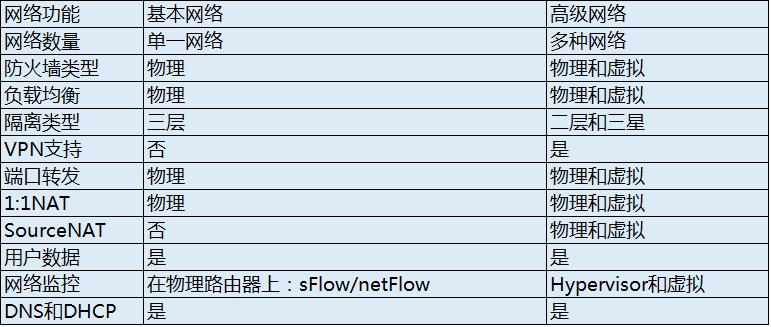

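A comparison of advanced and basic networking

10.0.1.11 is the local DNS server.

Leave everything else at the defaults.

eth0 is the management network and is bridged to cloudbr0; cloudbr0 will be created later.

root/root01

Secondary storage points at the NFS share exported by the master. In production, glusterfs could be used here as well.

Network information on agent1 after the host has been added

The bridge devices cloudbr0 and cloudbr2 have appeared.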

    [root@agent1 ~]# ip ad
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:d5:a9 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::20c:29ff:feab:d5a9/64 scope link
           valid_lft forever preferred_lft forever
    3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:d5:b3 brd ff:ff:ff:ff:ff:ff
        inet 192.168.5.152/24 brd 192.168.5.255 scope global eth1
        inet6 fe80::20c:29ff:feab:d5b3/64 scope link
           valid_lft forever preferred_lft forever
    4: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:d5:bd brd ff:ff:ff:ff:ff:ff
        inet6 fe80::20c:29ff:feab:d5bd/64 scope link
           valid_lft forever preferred_lft forever
    5: virbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether 52:54:00:ea:87:7d brd ff:ff:ff:ff:ff:ff
        inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
    6: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 500
        link/ether 52:54:00:ea:87:7d brd ff:ff:ff:ff:ff:ff
    8: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN
        link/ether 2e:c0:a6:d1:46:73 brd ff:ff:ff:ff:ff:ff
    10: cloudbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether 00:0c:29:ab:d5:a9 brd ff:ff:ff:ff:ff:ff
        inet 192.168.145.152/24 brd 192.168.145.255 scope global cloudbr0
        inet6 fe80::448a:8cff:fe77:e140/64 scope link
           valid_lft forever preferred_lft forever
    11: cloudbr2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether 00:0c:29:ab:d5:bd brd ff:ff:ff:ff:ff:ff
        inet6 fe80::30c6:80ff:fe79:a149/64 scope link
           valid_lft forever preferred_lft forever
    13: cloud0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether c6:9e:e5:49:12:4e brd ff:ff:ff:ff:ff:ff
        inet 169.254.0.1/16 scope global cloud0
        inet6 fe80::c49e:e5ff:fe49:124e/64 scope link
           valid_lft forever preferred_lft forever
    [root@agent1 ~]#

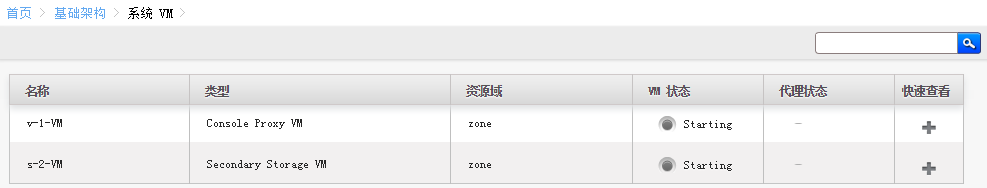

Enable the zone

    [root@agent2 ~]# virsh list
     Id    Name                           State
    ----------------------------------------------------
     2     s-2-VM                         running
     3     v-1-VM                         running
    [root@agent2 ~]#

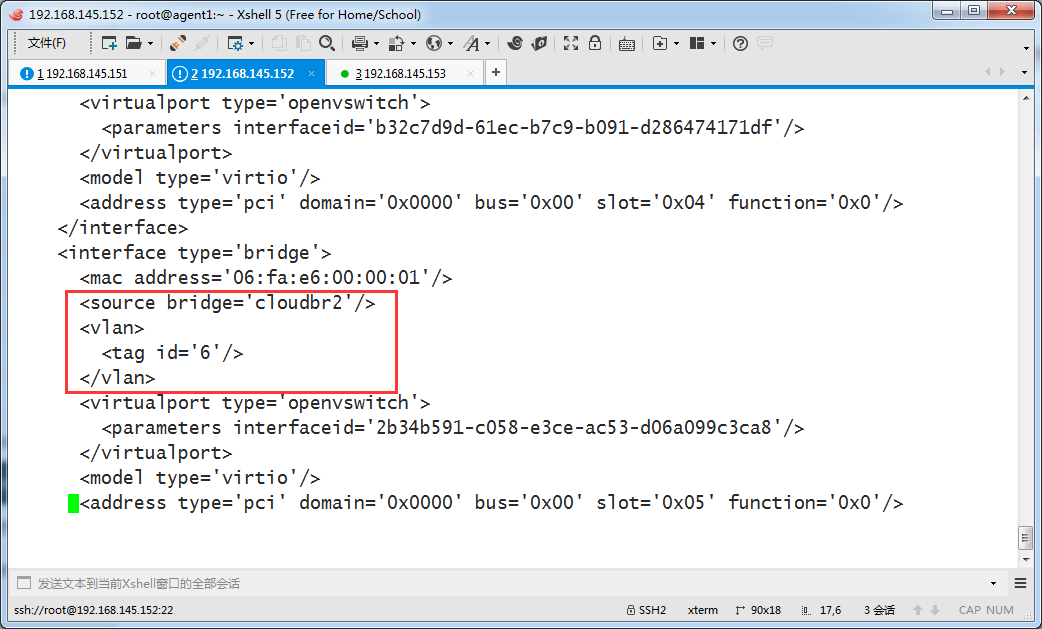

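Once the system VMs are running, OVS ends up with several bridges and ports.

The vnet devices on the management network are attached to cloudbr0.

The vnet devices on the guest network are all attached to cloudbr2.

Below is the bridge information on agent1. The earlier bridge-utils bridges are no longer used; OVS is used instead.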

    [root@agent1 ~]# brctl show
    bridge name     bridge id               STP enabled     interfaces
    virbr0          8000.525400ea877d       yes             virbr0-nic
    [root@agent1 ~]# ovs-vsctl show
    b8d2eae6-27c2-4f94-bb28-81635229141d
        Bridge "cloud0"
            Port "cloud0"
                Interface "cloud0"
                    type: internal
        Bridge "cloudbr0"
            Port "eth0"
                Interface "eth0"
            Port "cloudbr0"
                Interface "cloudbr0"
                    type: internal
        Bridge "cloudbr2"
            Port "eth2"
                Interface "eth2"
            Port "cloudbr2"
                Interface "cloudbr2"
                    type: internal
        ovs_version: "2.3.1"
    [root@agent1 ~]#

Below is the bridge information on agent2

    [root@agent2 ~]# ovs-vsctl show
    c17bcc03-f6d0-4368-9f41-004598ec7336
        Bridge "cloudbr2"
            Port "eth2"
                Interface "eth2"
            Port "cloudbr2"
                Interface "cloudbr2"
                    type: internal
            Port "vnet2"
                tag: 6
                Interface "vnet2"
            Port "vnet5"
                tag: 6
                Interface "vnet5"
        Bridge "cloud0"
            Port "vnet0"
                Interface "vnet0"
            Port "vnet3"
                Interface "vnet3"
            Port "cloud0"
                Interface "cloud0"
                    type: internal
        Bridge "cloudbr0"
            Port "eth0"
                Interface "eth0"
            Port "vnet1"
                Interface "vnet1"
            Port "vnet4"
                Interface "vnet4"
            Port "cloudbr0"
                Interface "cloudbr0"
                    type: internal
        ovs_version: "2.3.1"
    [root@agent2 ~]#
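The listing shows the point of using OVS here: a single bridge (cloudbr2) carries VLAN-tagged ports (tag: 6) instead of one bridge per VLAN. To see this at a glance, the nested output can be flattened into one line per port. A rough sketch, assuming the ovs-vsctl show layout above; the function name ovs_port_tags is hypothetical and a dash is printed for ports without a tag.

```shell
#!/bin/sh
# Hypothetical helper: read "ovs-vsctl show" output on stdin and print
# one line per port in the form: <bridge> <port> <vlan-tag or ->
ovs_port_tags() {
    awk '
        # Print the port collected so far, if any.
        function emit() {
            if (port != "") print bridge, port, (tag == "" ? "-" : tag)
        }
        { gsub(/"/, "") }                        # drop the quotes around names
        $1 == "Bridge" { emit(); bridge = $2; port = ""; tag = "" }
        $1 == "Port"   { emit(); port = $2; tag = "" }
        $1 == "tag:"   { tag = $2 }
        END { emit() }
    '
}

# Example, left commented out:
# ovs-vsctl show | ovs_port_tags
```

Applied to the agent2 output above, the vnet2 and vnet5 lines would carry tag 6 on cloudbr2, while the ports on cloud0 and cloudbr0 would show a dash.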

Both system VMs are currently running on agent2,

which is why it has more bridged devices.

    [root@agent2 ~]# ip ad
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:95:fa brd ff:ff:ff:ff:ff:ff
        inet6 fe80::20c:29ff:feab:95fa/64 scope link
           valid_lft forever preferred_lft forever
    3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:95:04 brd ff:ff:ff:ff:ff:ff
        inet 192.168.5.153/24 brd 192.168.5.255 scope global eth1
        inet6 fe80::20c:29ff:feab:9504/64 scope link
           valid_lft forever preferred_lft forever
    4: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:95:0e brd ff:ff:ff:ff:ff:ff
        inet6 fe80::20c:29ff:feab:950e/64 scope link
           valid_lft forever preferred_lft forever
    5: virbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether 52:54:00:a1:8e:64 brd ff:ff:ff:ff:ff:ff
        inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
    6: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 500
        link/ether 52:54:00:a1:8e:64 brd ff:ff:ff:ff:ff:ff
    8: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN
        link/ether b6:34:b6:35:e6:ca brd ff:ff:ff:ff:ff:ff
    10: cloudbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether 00:0c:29:ab:95:fa brd ff:ff:ff:ff:ff:ff
        inet 192.168.145.153/24 brd 192.168.145.255 scope global cloudbr0
        inet6 fe80::b454:44ff:fe91:8e45/64 scope link
           valid_lft forever preferred_lft forever
    11: cloudbr2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether 00:0c:29:ab:95:0e brd ff:ff:ff:ff:ff:ff
        inet6 fe80::c05d:eaff:fe39:2e45/64 scope link
           valid_lft forever preferred_lft forever
    13: cloud0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether 16:d7:4f:51:fc:4b brd ff:ff:ff:ff:ff:ff
        inet 169.254.0.1/16 scope global cloud0
        inet6 fe80::14d7:4fff:fe51:fc4b/64 scope link
           valid_lft forever preferred_lft forever
    17: vnet3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 500
        link/ether fe:00:a9:fe:03:4e brd ff:ff:ff:ff:ff:ff
        inet6 fe80::fc00:a9ff:fefe:34e/64 scope link
           valid_lft forever preferred_lft forever
    18: vnet4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 500
        link/ether fe:62:18:00:00:10 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::fc62:18ff:fe00:10/64 scope link
           valid_lft forever preferred_lft forever
    19: vnet5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 500
        link/ether fe:28:42:00:00:02 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::fc28:42ff:fe00:2/64 scope link
           valid_lft forever preferred_lft forever
    20: vnet0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 500
        link/ether fe:00:a9:fe:02:37 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::fc00:a9ff:fefe:237/64 scope link
           valid_lft forever preferred_lft forever
    21: vnet1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 500
        link/ether fe:65:ce:00:00:12 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::fc65:ceff:fe00:12/64 scope link
           valid_lft forever preferred_lft forever
    22: vnet2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 500
        link/ether fe:fa:e6:00:00:01 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::fcfa:e6ff:fe00:1/64 scope link
           valid_lft forever preferred_lft forever
    [root@agent2 ~]#

Below is the network device information on agent1 at this point

    [root@agent1 ~]# ip ad
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:d5:a9 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::20c:29ff:feab:d5a9/64 scope link
           valid_lft forever preferred_lft forever
    3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:d5:b3 brd ff:ff:ff:ff:ff:ff
        inet 192.168.5.152/24 brd 192.168.5.255 scope global eth1
        inet6 fe80::20c:29ff:feab:d5b3/64 scope link
           valid_lft forever preferred_lft forever
    4: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:ab:d5:bd brd ff:ff:ff:ff:ff:ff
        inet6 fe80::20c:29ff:feab:d5bd/64 scope link
           valid_lft forever preferred_lft forever
    5: virbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether 52:54:00:ea:87:7d brd ff:ff:ff:ff:ff:ff
        inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
    6: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 500
        link/ether 52:54:00:ea:87:7d brd ff:ff:ff:ff:ff:ff
    8: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN
        link/ether 2e:c0:a6:d1:46:73 brd ff:ff:ff:ff:ff:ff
    10: cloudbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether 00:0c:29:ab:d5:a9 brd ff:ff:ff:ff:ff:ff
        inet 192.168.145.152/24 brd 192.168.145.255 scope global cloudbr0
        inet6 fe80::448a:8cff:fe77:e140/64 scope link
           valid_lft forever preferred_lft forever
    11: cloudbr2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether 00:0c:29:ab:d5:bd brd ff:ff:ff:ff:ff:ff
        inet6 fe80::30c6:80ff:fe79:a149/64 scope link
           valid_lft forever preferred_lft forever
    13: cloud0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether c6:9e:e5:49:12:4e brd ff:ff:ff:ff:ff:ff
        inet 169.254.0.1/16 scope global cloud0
        inet6 fe80::c49e:e5ff:fe49:124e/64 scope link
           valid_lft forever preferred_lft forever
    [root@agent1 ~]#

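Migrate v-1-VM to agent1, and vnet0, vnet1, and vnet2 appear on agent1.

This is because a system VM has 3 NICs by default, each of which maps to one virtual device on the host.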

[root@agent1 ~]# ip ad

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:ab:d5:a9 brd ff:ff:ff:ff:ff:ff

inet6 fe80::20c:29ff:feab:d5a9/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:ab:d5:b3 brd ff:ff:ff:ff:ff:ff

inet 192.168.5.152/24 brd 192.168.5.255 scope global eth1

inet6 fe80::20c:29ff:feab:d5b3/64 scope link

valid_lft forever preferred_lft forever

4: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:ab:d5:bd brd ff:ff:ff:ff:ff:ff

inet6 fe80::20c:29ff:feab:d5bd/64 scope link

valid_lft forever preferred_lft forever

5: virbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN

link/ether 52:54:00:ea:87:7d brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

6: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 500

link/ether 52:54:00:ea:87:7d brd ff:ff:ff:ff:ff:ff

8: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN

link/ether 2e:c0:a6:d1:46:73 brd ff:ff:ff:ff:ff:ff

10: cloudbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN

link/ether 00:0c:29:ab:d5:a9 brd ff:ff:ff:ff:ff:ff

inet 192.168.145.152/24 brd 192.168.145.255 scope global cloudbr0

inet6 fe80::448a:8cff:fe77:e140/64 scope link

valid_lft forever preferred_lft forever

11: cloudbr2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN

link/ether 00:0c:29:ab:d5:bd brd ff:ff:ff:ff:ff:ff

inet6 fe80::30c6:80ff:fe79:a149/64 scope link

valid_lft forever preferred_lft forever

13: cloud0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN

link/ether c6:9e:e5:49:12:4e brd ff:ff:ff:ff:ff:ff

inet 169.254.0.1/16 scope global cloud0

inet6 fe80::c49e:e5ff:fe49:124e/64 scope link

valid_lft forever preferred_lft forever

18: vnet0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 500

link/ether fe:00:a9:fe:02:37 brd ff:ff:ff:ff:ff:ff

inet6 fe80::fc00:a9ff:fefe:237/64 scope link

valid_lft forever preferred_lft forever

19: vnet1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 500

link/ether fe:65:ce:00:00:12 brd ff:ff:ff:ff:ff:ff

inet6 fe80::fc65:ceff:fe00:12/64 scope link

valid_lft forever preferred_lft forever

20: vnet2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 500

link/ether fe:fa:e6:00:00:01 brd ff:ff:ff:ff:ff:ff

inet6 fe80::fcfa:e6ff:fe00:1/64 scope link

valid_lft forever preferred_lft forever

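You can confirm with a VNC client that the system VM has three NICs.

cloud0 is the built-in bridge, with a link-local 169.254.x.x address, to which one of the system VM's NICs is attached.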

[root@agent1 ~]# ovs-vsctl show

b8d2eae6-27c2-4f94-bb28-81635229141d

Bridge "cloud0"

Port "cloud0"

Interface "cloud0"

type: internal

Port "vnet0"

Interface "vnet0"

Bridge "cloudbr0"

Port "eth0"

Interface "eth0"

Port "vnet1"

Interface "vnet1"

Port "cloudbr0"

Interface "cloudbr0"

type: internal

Bridge "cloudbr2"

Port "vnet2"

tag: 6

Interface "vnet2"

Port "eth2"

Interface "eth2"

Port "cloudbr2"

Interface "cloudbr2"

type: internal

ovs_version: "2.3.1"

[root@agent1 ~]#

Checking the VLAN tag

The tag is also visible in the ovs-vsctl show output above (tag: 6 on port vnet2).

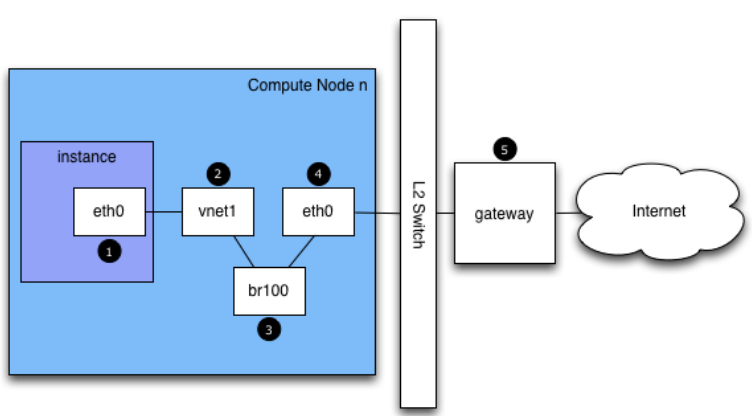
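To check tags across many hosts, the ovs-vsctl show output can be parsed rather than read by eye. The following is a minimal sketch, not part of the original lab: the function name `port_tags` is hypothetical, and the sample text is a shortened copy of the cloudbr2 section shown above with its usual indentation restored.

```python
def port_tags(show_output):
    """Map (bridge, port) to its VLAN tag, or None for untagged ports."""
    tags = {}
    bridge = port = None
    for raw in show_output.splitlines():
        line = raw.strip()
        if line.startswith("Bridge "):
            bridge = line.split(None, 1)[1].strip('"')
            port = None
        elif line.startswith("Port "):
            port = line.split(None, 1)[1].strip('"')
            tags[(bridge, port)] = None
        elif line.startswith("tag:") and port is not None:
            tags[(bridge, port)] = int(line.split(":", 1)[1])
    return tags


# Shortened sample taken from the agent1 output in this article.
SAMPLE = """\
Bridge "cloudbr2"
    Port "vnet2"
        tag: 6
        Interface "vnet2"
    Port "eth2"
        Interface "eth2"
"""

if __name__ == "__main__":
    for (bridge, port), tag in port_tags(SAMPLE).items():
        print(bridge, port, tag)
```

The parser relies only on the `Bridge`, `Port`, and `tag:` keywords, so it also works on the flattened output pasted in this article.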

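Packet flow

1 The VM instance generates a packet and sends it to the virtual network interface (VNIC) inside the instance, shown in the figure as eth0 in the instance.

2 The packet is handed to the matching VNIC on the physical node, shown in the figure as the vnet interface.

3 From the vnet NIC, the packet reaches the bridge (virtual switch) br100.

4 The switch processes the packet and sends it out through a physical interface on the node, shown in the figure as eth0 on the physical node.

5 Once the packet leaves eth0, it follows the physical node's routing table and default gateway. From that point on it is effectively out of your control.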
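The same hops can be traced on agent1 using the bridge layout from the ovs-vsctl show output earlier, where the bridges are cloud0, cloudbr0, and cloudbr2 rather than br100. This is only an illustrative sketch: `BRIDGES` is transcribed by hand from that output, `egress_path` is a hypothetical helper, and treating any port whose name starts with "eth" as the physical uplink is an assumption that holds for this lab only.

```python
# Bridge -> ports, transcribed from the agent1 ovs-vsctl show output.
BRIDGES = {
    "cloud0": ["cloud0", "vnet0"],
    "cloudbr0": ["eth0", "vnet1", "cloudbr0"],
    "cloudbr2": ["vnet2", "eth2", "cloudbr2"],
}


def egress_path(vnet, bridges=BRIDGES):
    """Return [vnet, bridge, uplink] for a guest-facing vnet device.

    The uplink is omitted when the bridge has no physical port,
    as is the case for the link-local cloud0 bridge.
    """
    for bridge, ports in bridges.items():
        if vnet in ports:
            # Assumption: physical NICs are the ports named eth*.
            uplinks = [p for p in ports if p.startswith("eth")]
            return [vnet, bridge] + uplinks[:1]
    raise KeyError(f"{vnet} is not attached to any known bridge")


if __name__ == "__main__":
    for dev in ("vnet0", "vnet1", "vnet2"):
        print(" -> ".join(egress_path(dev)))
```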

ovs-vsctl usage

List all ports attached to a bridge

[root@agent1 ~]# ovs-vsctl list-ports cloudbr2

eth2

vnet2

[root@agent1 ~]#

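CloudStack HA

System reliability and availability

HA for the management server

The CloudStack management server can be deployed in a multi-node configuration so that it is not vulnerable to the failure of a single server.

The management server itself (unlike the MySQL database) is stateless and can be placed behind a load balancer.

Stopping all management services does not affect normal operation of the hosts. All guest VMs keep running.

While the management servers are offline, however, no new VMs can be created, and the end-user and admin UI, the API, dynamic load distribution, and HA all stop working.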

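HA-enabled virtual machines

Users can enable high availability for specific VMs. By default, all virtual router VMs and elastic load balancing VMs have HA enabled automatically.

When CloudStack detects that an HA-enabled VM has crashed, it restarts that VM automatically within the same availability zone.

HA is never performed across zones.

CloudStack restarts VMs conservatively, to make sure two instances of the same VM never run at the same time.

The management server tries to start the VM on another host in the same cluster.

HA works only with shared primary storage. It is not supported when local storage is used as primary storage.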
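The rules above can be summarized as a small decision function. This is a simplified model for illustration only and is not CloudStack's actual implementation: the `Host` and `VM` classes, their fields, and `pick_restart_host` are all hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    cluster: str
    up: bool = True


@dataclass
class VM:
    name: str
    host: str             # name of the host the VM was running on
    ha_enabled: bool
    shared_storage: bool  # True when the VM lives on shared primary storage


def pick_restart_host(vm, hosts):
    """Return the name of a host to restart the VM on, or None."""
    # HA needs both the HA flag and shared primary storage.
    if not (vm.ha_enabled and vm.shared_storage):
        return None
    failed = next(h for h in hosts if h.name == vm.host)
    # Only another live host in the same cluster is a candidate.
    for h in hosts:
        if h.up and h.name != failed.name and h.cluster == failed.cluster:
            return h.name
    return None


if __name__ == "__main__":
    hosts = [Host("agent1", "c1", up=False), Host("agent2", "c1")]
    print(pick_restart_host(VM("v-1-VM", "agent1", True, True), hosts))
```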

Next, simulate enabling HA

Here a system VM stands in for a regular VM (system VMs have HA enabled by default)

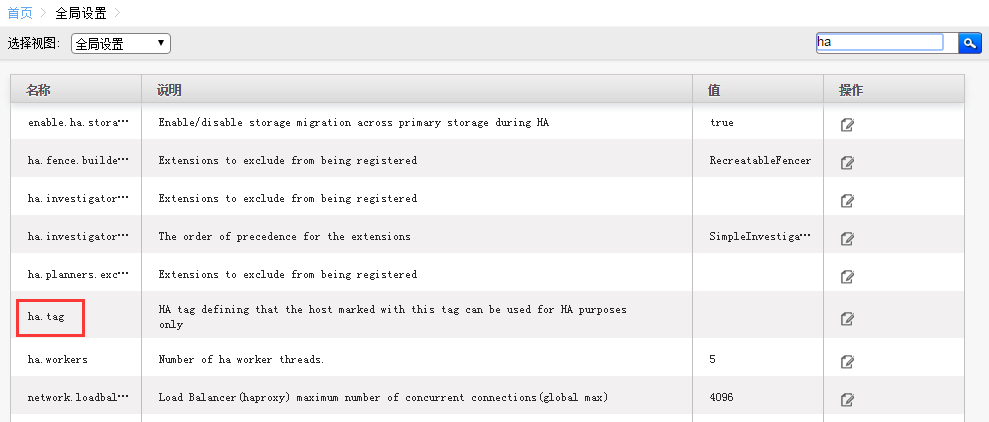

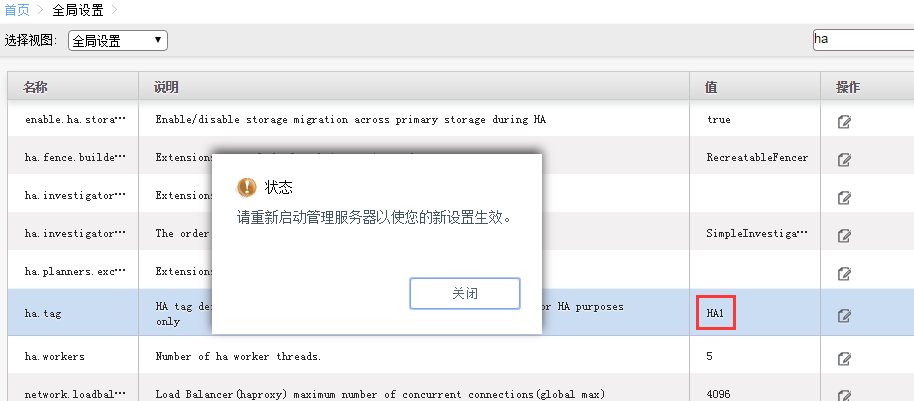

Enter an HA tag here; any value will do

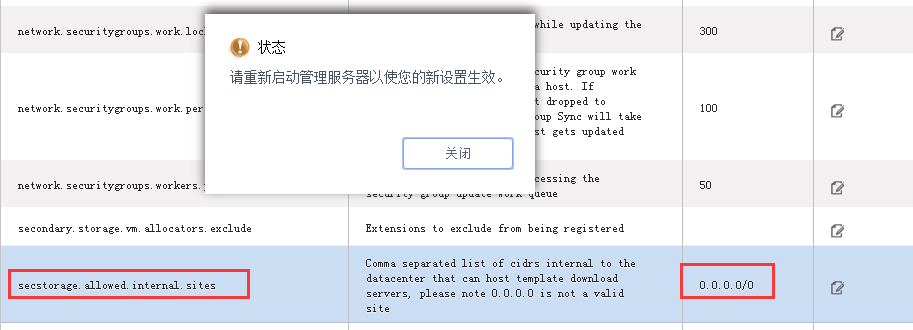

After changing a global setting, restart the management service on master

[root@master1 ~]# /etc/init.d/cloudstack-management restart

Stopping cloudstack-management: [FAILED]

Starting cloudstack-management: [ OK ]

[root@master1 ~]# /etc/init.d/cloudstack-management restart

Stopping cloudstack-management: [ OK ]

Starting cloudstack-management: [ OK ]

[root@master1 ~]#

Modify the VM's tag

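About the API

The management UI has no bulk VM creation feature, but you can build one yourself on top of the API: generate an API key and secret key, then call the API to create instances.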
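As a rough illustration of that approach, the sketch below builds signed `deployVirtualMachine` request URLs for a batch of VMs. It follows the signing steps in the CloudStack developer documentation as I understand them (URL-encode the values, sort by parameter name, lower-case the string, HMAC-SHA1 with the secret key, then Base64). The endpoint, keys, IDs, and the `vm-NN` naming scheme are placeholders, and the helper names are hypothetical. Nothing is sent over the network.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote


def sign(params, secret_key):
    """Return the Base64 HMAC-SHA1 signature for a parameter dict."""
    # Sort by lower-cased name, URL-encode values, lower-case everything.
    pairs = sorted(params.items(), key=lambda kv: kv[0].lower())
    canonical = "&".join(
        f"{k.lower()}={quote(str(v), safe='').lower()}" for k, v in pairs
    )
    digest = hmac.new(secret_key.encode(), canonical.encode(), hashlib.sha1).digest()
    return base64.b64encode(digest).decode()


def build_url(endpoint, params, api_key, secret_key):
    """Return a full signed request URL (not sent anywhere)."""
    full = dict(params, apikey=api_key, response="json")
    query = "&".join(f"{k}={quote(str(v), safe='')}" for k, v in full.items())
    signature = quote(sign(full, secret_key), safe="")
    return f"{endpoint}?{query}&signature={signature}"


def batch_deploy_urls(endpoint, api_key, secret_key, count, **ids):
    """One deployVirtualMachine URL per VM; names vm-01, vm-02, ... are made up."""
    return [
        build_url(
            endpoint,
            dict(command="deployVirtualMachine", name=f"vm-{i:02d}", **ids),
            api_key,
            secret_key,
        )
        for i in range(1, count + 1)
    ]


if __name__ == "__main__":
    urls = batch_deploy_urls(
        "http://master1:8080/client/api",  # placeholder endpoint
        "API_KEY", "SECRET_KEY", 3,        # placeholder credentials
        serviceofferingid="SO-ID", templateid="TPL-ID", zoneid="ZONE-ID",
    )
    print("\n".join(urls))
```

Each URL can then be fetched with any HTTP client. Since `deployVirtualMachine` is asynchronous, a real script would also need to poll the returned job ID, which this sketch leaves out.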

Supplementary notes, part 1

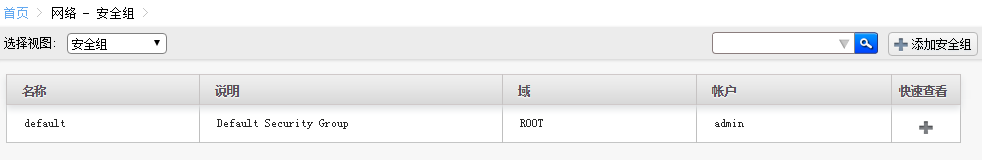

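On choosing security groups when adding a zone

In production, you can first create a security group that opens all ports and all protocols, then select it when creating instances.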

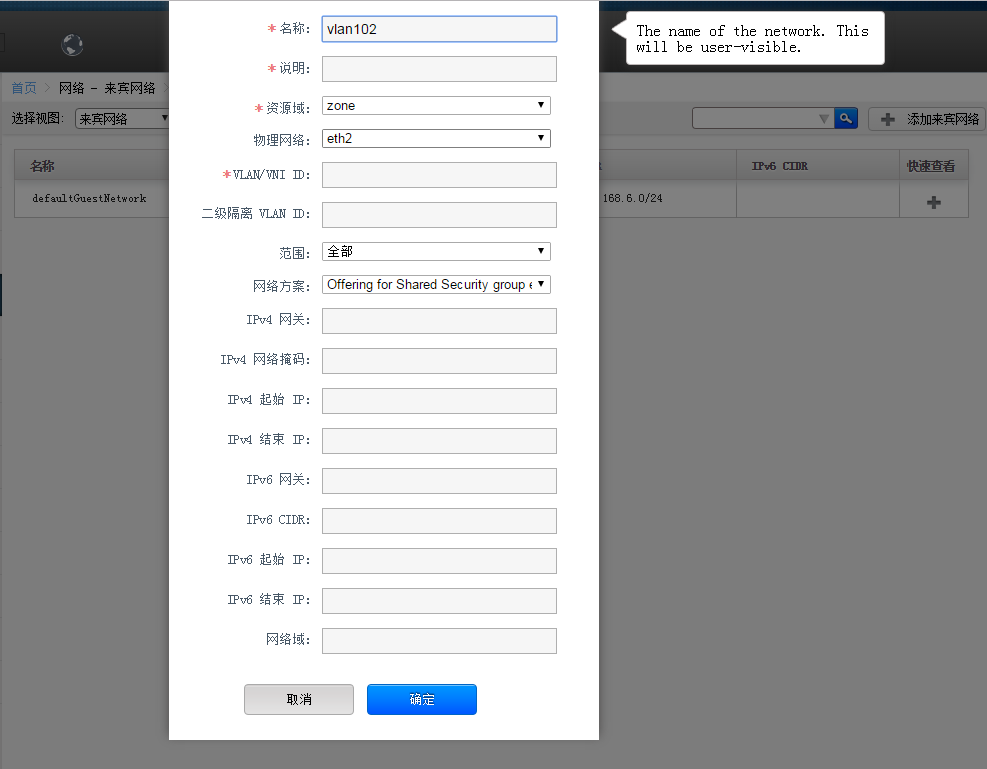

VLANs can be added from the management UI

Additional notes from my own tests

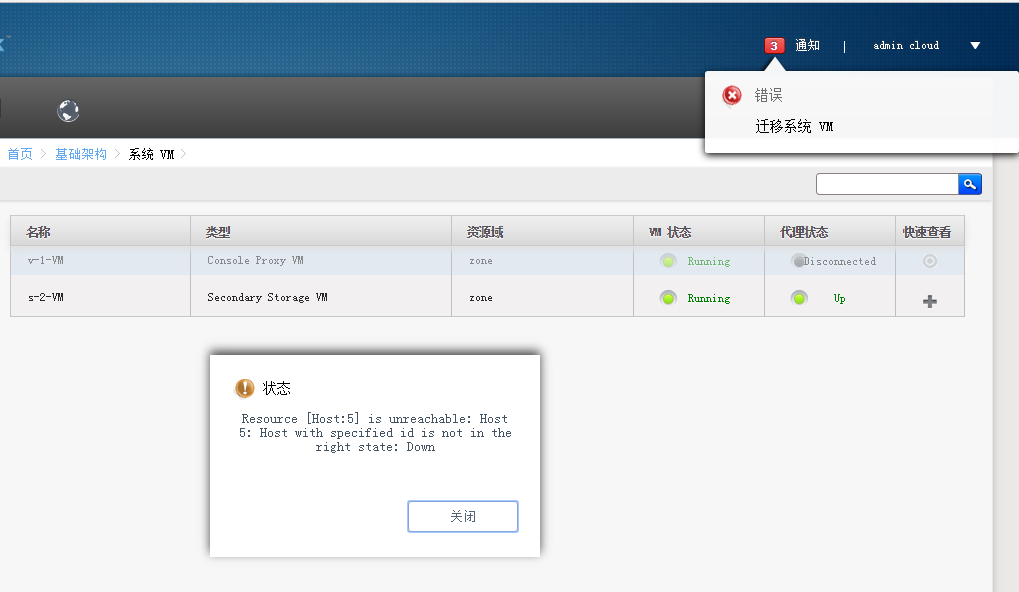

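Clicking Migrate on the VM does not work either

Workaround:

Remove the agent1 host

Then, in the system VM list, click Start on this VM. It starts automatically on agent2.

(While the new system VM is starting on host 2, the network drops for about a minute. My laptop could not reach the host, and connectivity came back after a minute. This may be specific to my VM setup.)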

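Supplementary notes, part 2

1. Even with more than 2,000 KVM VMs, MySQL is not under pressure.

So there is no need to go deep into MySQL tuning; a primary/standby setup is enough.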

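2. Server configuration for enterprise production environments

Recommended machine configuration for an enterprise building its own private cloud: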

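CPU: 2 sockets x 10 cores (Intel Xeon E5-2650 v3)

Memory: 256G (16G*16; no requirement on DIMM size, buy whatever gives the best price/performance)

NIC: 10G*2 (modules must match the 10-gigabit switches already in the data center)

Disks: 600G*2 SAS (system disks; no specific size requirement, buy to company standard), plus 4T*6 SATA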

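Over-specifying the machines is pointless. Heat is also a concern: the power supplies run hot and the fans never stop spinning.

Gigabit NICs come standard on the board. The 10G*2 listed here are purchased as add-ons.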

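3. Master configuration in production

Deploy two masters

Configure the database as master/slave

Secondary storage can be a dedicated NFS server (with DRBD), or GlusterFS exported as NFS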

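4. Deployment architecture

The deployment architecture should take hardware, network, and storage into account together, so that the private cloud as a whole is stable and secure.

The control plane needs two machines for high availability. The compute nodes consist of multiple machines (at least two) forming one or more clusters, which keeps workloads continuous, stable, and secure.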

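5. Control node architecture

The control plane consists of two machines in a primary/standby pair. They run the CloudStack management server, MySQL, and a distributed file system used as secondary storage, each set up as one primary and one standby.

The CloudStack management server can be deployed as one or more front-end servers connected to a single MySQL database. If needed, a pair of hardware load balancers can distribute the web requests, and a backup management node can use a MySQL replica at a remote site to improve disaster recovery.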

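6. Overall private cloud architecture

The management server cluster (front-end load balancer nodes, management nodes, and MySQL database nodes) connects to the management network through two load balancer nodes.

The secondary storage servers connect to the management network.

Each rack provides one pod, containing storage and compute node servers.

Every storage and compute node server needs redundant NICs connected to different switches.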

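7. About the mounted GlusterFS

In most cases we use distributed replicated volumes, which already behave much like RAID 10.

The disks underneath only need to be RAID 5.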
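To get a feel for how much usable space this layout leaves, here is a back-of-the-envelope sketch. It assumes the six 4T SATA disks from item 2 form one RAID 5 array per node and that each node contributes one brick; the node count of 4 and replica count of 2 are made-up example values, and the function names are hypothetical.

```python
def raid5_usable_tb(disks, disk_tb):
    """RAID 5 spends one disk's worth of capacity on parity."""
    if disks < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    return (disks - 1) * disk_tb


def gluster_usable_tb(nodes, brick_tb, replica=2):
    """Distributed replicated volume: raw brick capacity divided by replica count."""
    if nodes % replica:
        raise ValueError("node count must be a multiple of the replica count")
    return nodes * brick_tb / replica


if __name__ == "__main__":
    brick = raid5_usable_tb(6, 4)       # 6 x 4T SATA in RAID 5 per node
    total = gluster_usable_tb(4, brick)  # 4 nodes, replica 2 (example values)
    print(f"per-node brick: {brick}T, volume: {total}T")
```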

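8. Advanced networking

Every zone uses either basic or advanced networking.

A zone keeps the same network type, basic or advanced, for its entire lifetime. Once the network type has been chosen and configured for a zone in CloudStack, it cannot be changed.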

9. VM migration

Migration is best done within a single cluster, between hosts that share the same storage.